A new benchmark for large language models (LLMs) shows that even the latest models aren’t the best chess players.

Simply called LLM Chess Puzzles by its creator, software engineer Vladimir Prelovac, the GitHub project tests LLMs by giving them 1,000 chess puzzles to complete. In contrast to a normal game of chess, puzzles are essentially logic problems where the state of the chess board is set up in a specific way. The goal is to find the best move or sequence of moves, typically one that forces checkmate as quickly as possible or wins decisive material.

While a game of chess tests decision making against another player, a chess puzzle is a test of logical reasoning and understanding of the mechanics of chess, and that makes for a more challenging AI benchmark.

“While providers of large language models share their own performance benchmarks, these results can be misleading due to overfitting,” Prelovac told The Register. “This means the model might be tailored to perform well on specific tests but doesn’t always reflect real-world effectiveness.”

The benchmark’s GitHub repository shows performance data for many of today’s most popular LLMs from OpenAI (including GPT-4o), Anthropic, and Mistral. Most achieved dismal Elo ratings, the number chess uses to represent skill level, landing in the 100 to 500 range, which is firmly the domain of players with very little experience. These included Claude 3 variants, GPT-3.5 Turbo, and Mistral models.

Gemini 1.5 Pro failed entirely because it couldn’t format its responses to just say the move, regardless of how prompts were worded.

However, one AI family stood above the rest. The GPT-4 and GPT-4 Turbo Preview models scored 1,047 and 1,144 Elo respectively, which is above average. Especially proficient was GPT-4o with an Elo of 1,790, a respectable amateur rating, but still below the expert level, which starts at 2,000.

Calculating Elo wasn’t exactly straightforward for Prelovac, who told The Register that these LLMs were prone to making illegal or unallowed moves, like moving a rook diagonally or capturing its own piece. Even GPT-4o made an illegal move 12.7 percent of the time, and most of the other LLMs made more illegal moves than legal ones.
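The article doesn't say exactly how the benchmark turns puzzle results into a rating. As a rough illustration only, the standard Elo update can treat each puzzle as an opponent with its own rating, with an illegal move scored as a plain failure. The function names, the K-factor of 32, and the ratings used below are assumptions for this sketch, not values taken from the project.

```python
def expected_score(player: float, opponent: float) -> float:
    """Standard Elo expected score of `player` against `opponent`."""
    return 1.0 / (1.0 + 10 ** ((opponent - player) / 400))


def update_rating(player: float, puzzle: float, solved: bool, k: float = 32.0) -> float:
    """Return the player's new rating after one puzzle attempt.

    Assumption: an illegal move is passed in as solved=False,
    so it costs exactly as much as a legal but wrong move.
    """
    score = 1.0 if solved else 0.0
    return player + k * (score - expected_score(player, puzzle))


# A 1500-rated solver facing a 1500-rated puzzle gains or loses 16 points.
print(update_rating(1500, 1500, solved=True))
print(update_rating(1500, 1500, solved=False))
```

Under a scheme like this, every illegal move drags the rating down, which is consistent with Prelovac's point that the scores would be higher if illegal moves weren't counted.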

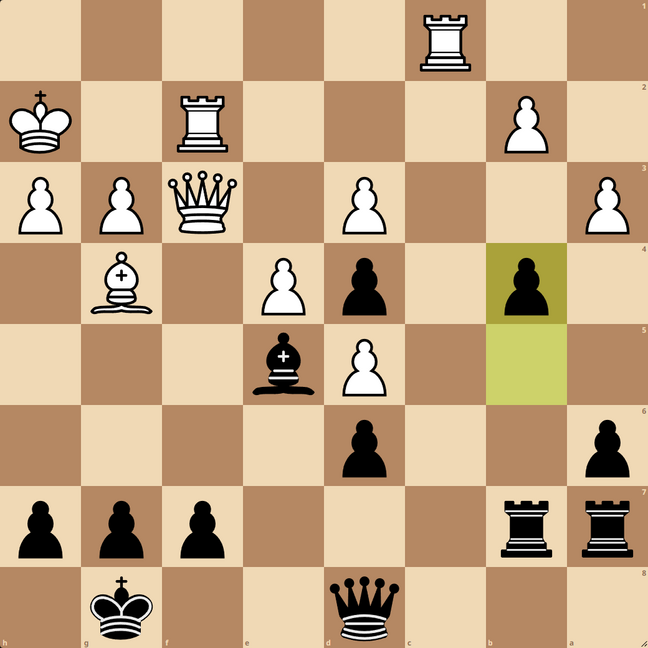

In 501 of the 1,000 puzzles, GPT-4o was able to find the best move. For instance, in this puzzle white’s best move is rook to c8, right next to black’s queen. However, the queen can’t just take the rook for free as the rook is in the line of sight of white’s light square bishop. But black can’t move the queen out of the way because then its king would be checkmated, so black must concede the loss of its queen.

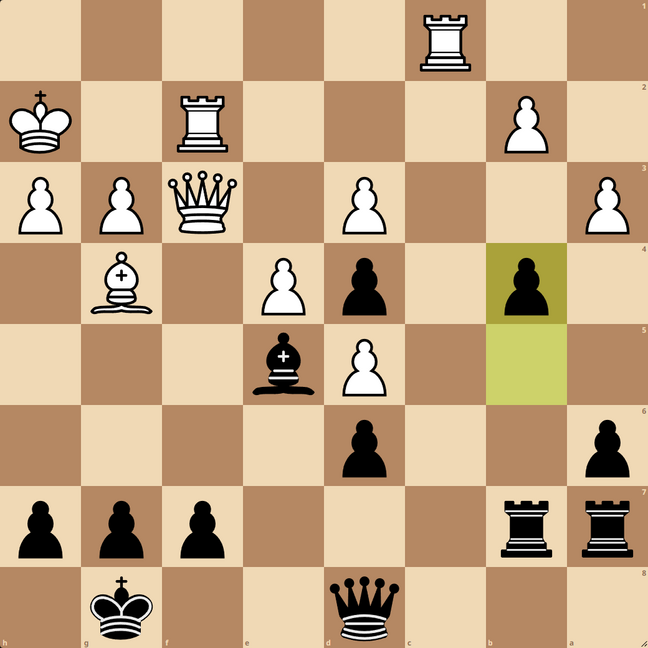

On the other hand, GPT-4o can also attempt some truly insane moves, such as in this puzzle. Here, black has a guaranteed checkmate within two moves, the first of which is moving the dark square bishop to h2 to put the king in check. But instead of that move, GPT-4o said it wanted to play its rook on e1 to e3, except there’s no rook on e1 at all.

Even if it had correctly figured out its rook was actually on e2 and captured on e3, it would have turned a guaranteed checkmate into a disadvantage against white.

LLMs struggle at chess due to lack of actual intelligence

That GPT-4o can play at a decently high level yet fail some puzzles by playing an illegal move is paradoxical. It’s not hard to learn the rules of chess, and even beginners aren’t that likely to make an illegal move. In fact, if illegal moves weren’t counted, Prelovac says GPT-4o would have an Elo of more than 2,000, the threshold for expert level.

“It’s somewhat disappointing but expected that these models show no real generalization of intelligence or reasoning,” he said. “While they can perform specific tasks well, they don’t yet demonstrate a broad, adaptable understanding or problem-solving ability like human intelligence.”

At their core, LLMs are still big bundles of statistical models just trying to write down what makes sense.

To illustrate this, Prelovac also tested LLMs in Connect Four, a game generally considered to be way easier than chess. However, even GPT-4o couldn’t understand when it needed to block its opponent from connecting four pieces. Prelovac described its performance as on par with a four-year-old.

“The only conclusion is that this failure is due to the lack of historical records of played games in the training data,” he said. “This implies that it cannot be argued that these models are able to ‘reason’ in any sense of the word, but merely output a variation of what they have seen during training.”
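For a sense of how little computation the blocking decision needs, here is a minimal sketch that lists the columns where an opponent would win on their next move. The board representation (a 6x7 grid of strings, bottom row last) and the function names are assumptions for illustration; this is not the test harness Prelovac used.

```python
ROWS, COLS = 6, 7
EMPTY = "."


def drop(board, col, piece):
    """Place `piece` in `col`; return the row it lands on, or None if the column is full."""
    for row in range(ROWS - 1, -1, -1):  # row ROWS - 1 is the bottom
        if board[row][col] == EMPTY:
            board[row][col] = piece
            return row
    return None


def wins(board, row, col, piece):
    """Check whether the piece at (row, col) is part of a line of four."""
    for dr, dc in ((0, 1), (1, 0), (1, 1), (1, -1)):
        count = 1
        for sign in (1, -1):  # walk both ways along the line
            r, c = row + sign * dr, col + sign * dc
            while 0 <= r < ROWS and 0 <= c < COLS and board[r][c] == piece:
                count += 1
                r, c = r + sign * dr, c + sign * dc
        if count >= 4:
            return True
    return False


def must_block(board, opponent):
    """Return the columns where `opponent` would win immediately."""
    threats = []
    for col in range(COLS):
        row = drop(board, col, opponent)
        if row is None:
            continue
        if wins(board, row, col, opponent):
            threats.append(col)
        board[row][col] = EMPTY  # undo the trial move
    return threats


board = [[EMPTY] * COLS for _ in range(ROWS)]
board[5][0] = board[5][1] = board[5][2] = "X"  # three in a row on the bottom
print(must_block(board, "X"))  # [3]
```

The check is a one-move lookahead over at most seven columns, which is the kind of explicit rule-following the models reportedly failed to do.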

As for why GPT-4o registered a remarkable improvement in chess but still made illegal moves, Prelovac speculated that perhaps its multi-modal training had something to do with it. It’s possible part of OpenAI’s training data included visuals of chess being played, which could help the AI visualize the board more easily than it can with pure text.

Technically, when GPT-4o writes out the move it wants to play, it formats it correctly in standard chess move notation, but the model doesn’t understand that a well-formed move isn’t necessarily the best move, or even a legal one. Splitting individual chess moves across multiple tokens can especially compromise an LLM’s reasoning.

“Even chess moves are nothing but a series of tokens, like ‘e’ and ‘4’, and have no grounding in reality,” Prelovac said. “They are products of statistical analysis of the training data, upon which the next token is predicted.”

The difference between pawn to e4 and pawn to e5 is clear to humans, as the two moves would send a game of chess down completely different paths and can’t be swapped for each other. However, an LLM might just choose the next number based on statistics, which is why it might try to move a rook that doesn’t exist on e1 instead of a real one that’s on e2.
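The gap between a move that looks right and a move that is possible can be shown with a small sketch. It assumes moves are written in coordinate form (origin square followed by destination square, such as `e2e3`) and uses a made-up fragment of a position: only the black rook on e2 comes from the puzzle described above, while the white piece on e3 and all names in the code are hypothetical.

```python
import re

# Hypothetical fragment of a position. Lowercase = black, uppercase = white.
# The rook on e2 matches the article's example; the pawn on e3 is a stand-in.
position = {"e2": "r", "e3": "P"}

MOVE_PATTERN = re.compile(r"[a-h][1-8][a-h][1-8]")


def is_well_formed(move: str) -> bool:
    """True if the string has the shape of a coordinate move."""
    return MOVE_PATTERN.fullmatch(move) is not None


def moves_own_piece(move: str, board: dict[str, str], side: str) -> bool:
    """True if the origin square holds a piece belonging to `side`."""
    piece = board.get(move[:2])
    if piece is None:
        return False  # nothing stands on the origin square
    return piece.islower() if side == "black" else piece.isupper()


for move in ("e1e3", "e2e3"):
    print(move, is_well_formed(move), moves_own_piece(move, position, "black"))
```

Both strings pass the format check, but only `e2e3` starts from a square that holds a black piece. This covers just the first condition of legality; a complete check would also need the movement rules for each piece, pins, and checks.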

For the time being, no LLM is going to be able to play chess the way a chess engine like Stockfish can. However, more training data could potentially make LLMs more proficient, especially for opening moves and games where so few pieces are on the board that checkmate can be seen from just a few moves away. For the middle part of a game, a truly massive amount of data might be necessary to prevent an LLM from making illegal moves. ®

- Source: https://go.theregister.com/feed/www.theregister.com/2024/06/04/chess_puzzle_benchmark_llm/
