Analysis Arm this week announced new top-end CPU and GPU designs, ready-made for the system-on-chips in laptops, smartphones, and similar personal electronics. These cores are expected to power next-gen Android phones, at least, by late 2024.

The announcements touched a range of topics, some obvious from the marketing, some not. Here are the main takeaways in our view.

The new cores themselves

Arm announced the 64-bit Armv9.2 Cortex-X925 CPU core that succeeds last year’s Cortex-X4. The X925 can be clocked up to 3.8GHz, can target 3nm process nodes, and according to Arm executes instructions roughly 15 percent faster or more than the X4 on a level playing field.

We’re told the CPU has various architectural improvements, such as double the L1 instruction and data cache bandwidth, a doubled instruction window size, better prefetching and branch prediction – key drivers of performance – and wider microarchitecture (eg, four rather than three load pipelines, double the integer multiplication execution, and increased SIMD/FP issue queues). All the stuff CPU designers get excited about. The key thing for users is that Arm believes devices powered by the X925 will in real-world use get a 36 percent increase in peak single-core performance over last year’s hardware, dropping down to about a 30 percent average uplift in performance across a mix of workloads.

The X925 is intended to be the main beefy application core or cores in a big.LITTLE CPU cluster of up to 14 cores total in future devices. How that cluster is configured is up to the system-on-chip designer that licenses this tech from Arm. The other CPU cores in the cluster can be the new mid-range Cortex-A725 and the smaller, efficient A520. The X925 can have up to a 3MB private L2 cache, while the A725 can go to 1MB L2. The cluster management system has been tuned to provide energy savings, too, we’re told.

Then there’s the new Immortalis-G925 GPU that chip designers can license and add to their processors; a 14-core G925 cluster is supposed to deliver roughly 30 percent or more performance than its 12-core G720 predecessor. It’s said that the GPU and its drivers have been optimized to boost machine-learning tasks in games and graphical apps, particularly those built using Unity.

The G925, according to Arm, has some interesting hardware-level acceleration for reducing the amount of work the CPU-based rendering threads need to do; this includes some new in-GPU object sorting, avoiding the need to draw on screen stuff that’s hidden anyway, and similarly better hidden surface removal. That should improve performance and reduce energy usage, which is good for battery-powered things. There are also optimizations to the hardware ray tracing, support for up to 24 GPU cores in a cluster, and improvements to the tiler and job dispatch to make use of that increase in GPU cores.

All in all, it’s more CPU and GPU cores from Arm with the usual promises of increased performance and efficiency, meaning the next batch of Android phones – among other things – will run faster and not eat the battery quite as much as they otherwise would. We’ll await the independent reviews and benchmarking of real hardware.

Physical implementations

Normally, system-on-chip designers license cores and other bits and pieces from Arm to integrate into their processors. Then, after carrying out rounds of testing, verification, and optimizing, these chip designers pass the final layout to a factory to manufacture and put into devices.

Last year Arm started offering pre-baked designs – physical implementations – of its cores that have already gone through optimization and validation with selected fabs; these designs were offered as Neoverse Compute Subsystems for datacenter-grade processors. This was offered as a way for server chip designers to get a jump-start in creating high-performance components.

Now Arm has taken that shake-and-bake approach to personal or client devices, and will offer complete physical implementations of the above new Cortex CPU and Immortalis GPU core designs under the banner of Compute Subsystems for Client. These designs were made with the help of TSMC and Samsung, specifically targeting those fabs’ 3nm process nodes. Again, the idea is that chip designers license these physical implementations to include on their processor dies and, by using TSMC or Samsung, get a head start in creating competitive, high-end PC and mobile processors.

This is also necessary, in Arm’s mind, because scaling below 7nm starts opening up engineering challenges that can’t be simply solved by system-on-chip designers alone. The RAM in the core caches, and the minute wires carrying signals from one part of the die to another, don’t scale as easily down to 3nm as they did to 7nm, or so we’re told. Unless you get the scaling just right, at the microarchitecture level, the resulting chip may not perform as well as expected.

That’s led Arm to provide these optimized physical blueprints for its cores at 3nm, with the help of the fabs themselves, to help processor designers avoid what Arm staff have called a pain point in reaching 3nm. It’s a step closer to Arm designing whole chips for its customers, though we get the feeling the biz still isn’t ready or willing to enter that kind of space.

We understand Arm licensees are not required to use the compute subsystem; they can license and integrate the cores as they’ve normally done, but they’ll have to do all the tuning and optimization themselves, and find a way to overcome the 3nm scaling issues without hampering core performance. Nor are licensees required to use Arm’s GPU if they pick the CPU cores; we’re told there is no take-it-all-or-leave-it lock-in situation or similar here.

As we said, this is interesting but not totally revolutionary: Arm already offers this kind of pre-baked design IP for Neoverse. It’s just extending that approach to client-level chips now.

Who needs separate AI accelerators?

Here’s where things start to get a bit messy, and where Arm has to position itself carefully. Arm licenses its CPU and GPU designs to system-on-chip designers, which themselves can include in their processors their own custom hardware acceleration units for AI code. These units typically speed up the execution of matrix multiplication and other operations crucial to running neural networks, handily taking that work away from the CPU and GPU cores, and are often referred to as NPUs or neural processing units.

Arm’s licensees, from Qualcomm to Google, love putting their own AI acceleration in their processors as this helps those designers differentiate their products from one another. And Arm doesn’t want to step too much on people’s toes and publicly state that it’s not a fan of that custom acceleration. Arm staff stressed repeatedly to us that it is not anti-NPU.

But.

Arm told us that on Android at least, 70 percent of AI inference done by apps usually runs on a device’s CPU cores, not on the NPU if present nor on the GPU. Most application code just throws neural network and other ML operations at the CPU cores. There are a bunch of reasons why that happens; we presume one is that app makers don’t want to make any assumptions about the hardware present in the device.

If it’s possible to use a framework that auto-detects available acceleration and uses it, great, but generally: Inference is staying on the CPU. Of course, first-party apps, such as Google’s own mobile software, are expected to make use of known built-in acceleration, such as Google’s Tensor-branded NPUs in its Pixel range of phones.

And here’s the main thing: Arm staff we spoke to want to see 80 to 90 percent of AI inference running on the CPU cores. That would, for one thing, stop third-party apps from missing out on acceleration that first-party apps enjoy. Crucially, this approach also simplifies the environment for developers: It’s OK to run AI work on the CPU cores because modern Arm CPU cores, such as the new Armv9.2 Cortexes above, include acceleration for AI operations at the CPU ISA level.

Specifically, we’re talking about Armv9’s Scalable Matrix Extension (SME2) as well as its Scalable Vector Extension (SVE2) instructions.

Arm really wants chip designers to migrate to using Armv9, which brings in more neural network acceleration on the CPU side. And that’s kinda why Arm has this beef with Qualcomm, which is sticking to Armv8 (with NEON) and custom NPUs for its latest Nuvia-derived Snapdragon system-on-chips. You’ve got the likes of Apple on one side using Armv9 and SME2 in its latest M4 chips, and Qualcomm and others on the other side persisting with NPUs. Arm would be happier without this fragmentation going forward.

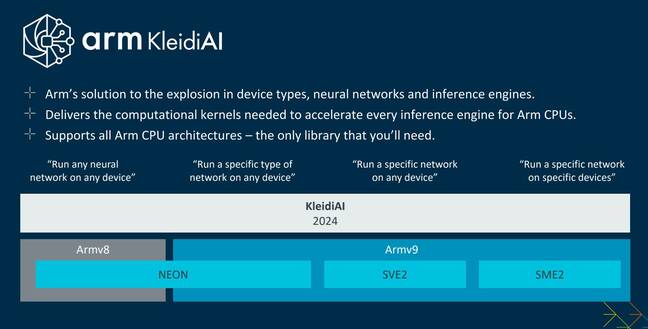

And so that brings us to KleidiAI, a handy open source library Arm has made available, is still developing, and is said to be upstreaming to projects like the LLM inference driver Llama.cpp, that provides a standard interface to all of the potential CPU-level acceleration available on the modern Arm architecture. It’s best illustrated with this briefing slide:

The idea, eventually, is that app developers won’t have to use any new frameworks or APIs nor make any assumptions. They just keep using the engines they are already using; those engines will hopefully incorporate KleidiAI so that the right CPU-level acceleration is chosen automatically at runtime depending on the device being used, and AI operations are handled efficiently by the CPU cores without having to offload that work to a GPU or NPU.

Handing that work to SME2 or SVE2 is preferable to using NEON, we’re led to believe.
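
To make that runtime selection concrete, here is a minimal Python sketch of picking a CPU code path from the features a chip advertises. It is not KleidiAI's API: the function names and the `PREFERENCE` table are hypothetical, and the flag strings (`sme2`, `sve2`, `asimd`) are assumed to match the `Features` line that Linux on arm64 prints in `/proc/cpuinfo`. The preference order is the one described above.

```python
# Hypothetical sketch: this is NOT KleidiAI's API, only an illustration
# of choosing a CPU code path from advertised features at runtime.

# Preference order taken from the article: SME2, then SVE2, then NEON.
# Assumption: flag names follow the "Features" line in /proc/cpuinfo on
# arm64 Linux, where "asimd" is the kernel's name for NEON.
PREFERENCE = [
    ("sme2", "SME2"),
    ("sve2", "SVE2"),
    ("asimd", "NEON"),
]


def parse_features(cpuinfo_text: str) -> set[str]:
    """Collect the feature flags from every 'Features' line."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "features":
            flags.update(value.split())
    return flags


def pick_backend(flags: set[str]) -> str:
    """Return the most preferred backend the CPU advertises."""
    for flag, backend in PREFERENCE:
        if flag in flags:
            return backend
    return "scalar"  # portable fallback when no vector extension is found


def detect_backend(path: str = "/proc/cpuinfo") -> str:
    """Read the feature list from the running system, if it is readable."""
    try:
        with open(path, encoding="utf-8") as handle:
            return pick_backend(parse_features(handle.read()))
    except OSError:
        return "scalar"
```

An inference engine built this way would call something like `detect_backend()` once at startup and route its matrix kernels accordingly, so the app code above it never needs to know which extension is present.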

Arm says it isn’t against NPUs, and that it can see the benefit of offloading certain tasks to custom units. But our impression is that Arm is so done with the hype over AI accelerators and the notion that AI inference can only be properly performed by custom units.

For 90 percent of apps, Arm would rather you use its CPU cores and extensions like SME2 to run your neural networks. And that means more chip designers licensing more modern CPU cores from Arm, natch. ®

- Source: https://go.theregister.com/feed/www.theregister.com/2024/05/30/arm_cortex_x925_ai_cores/
