Introduction

Edge detection is something we do naturally, but it isn’t as easy when it comes to defining rules for computers. While various methods have been devised, the reigning method was developed by John F. Canny in 1986, and is aptly named the Canny method.

It’s fast, fairly robust, and works about as well as a technique of its type can. By the end of the guide, you’ll know how to perform real-time edge detection on videos.

Canny Edge Detection

What is the Canny method? It consists of four distinct operations:

- Gaussian smoothing

- Computing gradients

- Non-Max Suppression

- Hysteresis Thresholding

Gaussian smoothing is used as the first step to “iron out” the input image, and soften the noise, making the final output much cleaner.

Image gradients have been in use in earlier applications for edge detection. Most notably, Sobel and Scharr filters rely on image gradients. The Sobel filter boils down to two kernels (Gx and Gy), where Gx detects horizontal changes, while Gy detects vertical changes:

$$

G_x = \begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix}
\qquad
G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ +1 & +2 & +1 \end{bmatrix}

$$

When you slide them over an image, they’ll each “pick up” (emphasize) the lines in their respective orientation. Scharr kernels work in the same way, with different values:

$$

G_x = \begin{bmatrix} +3 & 0 & -3 \\ +10 & 0 & -10 \\ +3 & 0 & -3 \end{bmatrix}
\qquad
G_y = \begin{bmatrix} +3 & +10 & +3 \\ 0 & 0 & 0 \\ -3 & -10 & -3 \end{bmatrix}

$$
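To make the "sliding" idea concrete, here is a minimal sketch that applies the two Sobel kernels to a tiny synthetic image with plain NumPy. The `correlate2d_valid` helper and the test image are made up for illustration and are not part of OpenCV; the helper slides the kernel without flipping it (cross-correlation). In practice you would more likely reach for `cv2.Sobel()` or `cv2.Scharr()`.

```python
import numpy as np

# Sobel kernels, with the same values as the Gx and Gy matrices in the text
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = np.array([[-1, -2, -1],
                    [ 0,  0,  0],
                    [ 1,  2,  1]], dtype=np.float64)

def correlate2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the element-wise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float64)
    for y in range(out_h):
        for x in range(out_w):
            out[y, x] = np.sum(image[y:y + kh, x:x + kw] * kernel)
    return out

# Hypothetical 5x6 test image: dark left half, bright right half
image = np.zeros((5, 6), dtype=np.float64)
image[:, 3:] = 255.0

gx = correlate2d_valid(image, SOBEL_X)  # reacts to left-to-right intensity changes
gy = correlate2d_valid(image, SOBEL_Y)  # reacts to top-to-bottom intensity changes

print(gx)
print(gy)
```

Only `gx` responds here, because the intensity changes from left to right and every row of the image is identical, so `gy` stays at zero.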

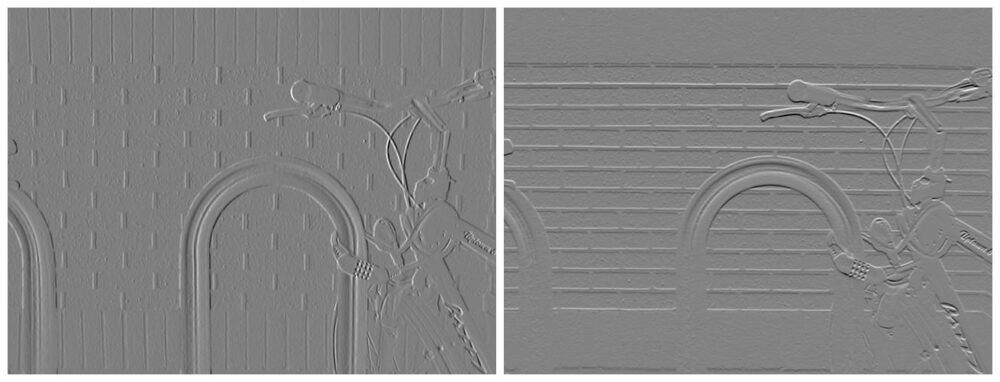

These filters, once convolved over the image, will produce feature maps:

Image credit: Davidwkennedy

For these feature maps, you can compute the gradient magnitude and gradient orientation – i.e. how intense the change is (how likely it is that something is an edge) and in which direction the change is pointing. Since Gy denotes the vertical change (Y-gradient), and Gx denotes the horizontal change (X-gradient) – you can calculate the magnitude by simply applying the Pythagorean theorem, to get the hypotenuse of the right triangle formed by the horizontal and vertical components:

$$

G = \sqrt{{G_x}^{2} + {G_y}^{2}}

$$
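As a quick numeric check, the sketch below computes the magnitude for a few made-up gradient values with NumPy. The orientation line assumes the common convention of taking the angle of the (Gx, Gy) vector with `arctan2`; the text above doesn't spell out a formula for it.

```python
import numpy as np

# Hypothetical gradient values for three pixels
gx = np.array([3.0, 0.0, -4.0])
gy = np.array([4.0, 2.0, 0.0])

# Magnitude: sqrt(gx**2 + gy**2), element by element
magnitude = np.hypot(gx, gy)

# Orientation (assumed convention): angle of the gradient vector, in degrees
orientation = np.degrees(np.arctan2(gy, gx))

print(magnitude)    # [5. 2. 4.]
print(orientation)
```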

Using the magnitude and orientation, you can produce an image with its edges highlighted:

Image credit: Davidwkennedy

However – you can see how much noise was also caught from the texture of the bricks! Image gradients are very sensitive to noise. This is why Sobel and Scharr filters were used as a component of Canny’s method, but not as the whole approach. Gaussian smoothing helps here as well.

Non-Max Suppression

A noticeable issue with the Sobel filter is that edges aren’t really clear. It’s not like someone took a pencil and drew a line to create lineart of the image. The edges usually aren’t so clear cut in images, as light diffuses gradually. However, we can find the common line in the edges, and suppress the rest of the pixels around it, yielding a clean, thin separation line instead. This is known as Non-Max Suppression! The non-max pixels (ones smaller than the one we’re comparing them to in a small local field, such as a 3×3 kernel) get suppressed. The concept is applicable to more tasks than this, but let’s bind it to this context for now.
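The idea can be sketched in a few lines of NumPy. This is a simplified illustration, not OpenCV's implementation: the `non_max_suppression` function is hypothetical, it rounds the gradient direction to one of four axes, and it assumes angles come from `arctan2(gy, gx)` with the y axis pointing down. Each pixel is kept only if it is at least as strong as its two neighbours along the gradient direction.

```python
import numpy as np

def non_max_suppression(magnitude, angle_deg):
    """Zero out pixels that are weaker than a neighbour along the gradient direction."""
    h, w = magnitude.shape
    out = np.zeros_like(magnitude)
    # A gradient and its opposite lie on the same axis, so fold angles into [0, 180)
    angle = np.mod(angle_deg, 180.0)
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            a = angle[y, x]
            if a < 22.5 or a >= 157.5:   # roughly horizontal gradient: compare left/right
                n1, n2 = magnitude[y, x - 1], magnitude[y, x + 1]
            elif a < 67.5:               # roughly 45 degrees (down-right / up-left)
                n1, n2 = magnitude[y - 1, x - 1], magnitude[y + 1, x + 1]
            elif a < 112.5:              # roughly vertical gradient: compare up/down
                n1, n2 = magnitude[y - 1, x], magnitude[y + 1, x]
            else:                        # roughly 135 degrees (down-left / up-right)
                n1, n2 = magnitude[y - 1, x + 1], magnitude[y + 1, x - 1]
            if magnitude[y, x] >= n1 and magnitude[y, x] >= n2:
                out[y, x] = magnitude[y, x]
    return out

# Hypothetical blurry vertical edge: the response ramps up and back down across 3 pixels
magnitude = np.tile(np.array([0.0, 1.0, 3.0, 1.0, 0.0]), (5, 1))
angle_deg = np.zeros_like(magnitude)   # gradient points along the x axis everywhere

thin = non_max_suppression(magnitude, angle_deg)
print(thin)
```

In the output, only the centre column keeps its value of 3, and the weaker pixels on either side of it are zeroed. The border rows are left at zero because the sketch skips pixels that don't have a full set of neighbours.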

Hysteresis Thresholding

Many non-edges can and likely will be evaluated as edges, due to lighting conditions, the materials in the image, etc. Because of the various reasons these miscalculations occur – it’s hard to make an automated evaluation of what an edge certainly is and isn’t. You can threshold gradients, and only include the stronger ones, assuming that “real” edges are more intense than “fake” edges.

Thresholding works in much the same way as usual – if the gradient is below a lower threshold, remove it (zero it out), and if it’s above a given top threshold, keep it. Everything in-between the lower bound and upper bound is in the “gray zone”. If a pixel in-between the thresholds is connected to a definitive edge (one above the upper threshold) – it’s also considered an edge. If it’s not connected, it’s likely an artifact of a miscalculated edge.

That’s hysteresis thresholding! In effect, it helps clean up the final output and remove false edges, depending on what you classify as a false edge. To find good threshold values, you’ll generally experiment with different lower and upper bounds for the thresholds, or employ an automated method such as Otsu’s method or the Triangle method.
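A minimal sketch of the rule described above, assuming 8-connectivity and a simple breadth-first search. The `hysteresis_threshold` function and the sample values are hypothetical and are not how OpenCV implements this step internally.

```python
from collections import deque

import numpy as np

def hysteresis_threshold(magnitude, low, high):
    """Return a boolean edge map: strong pixels, plus weak pixels connected to them."""
    strong = magnitude > high                  # definitive edges
    weak = (magnitude >= low) & ~strong        # the "gray zone"
    edges = strong.copy()
    h, w = magnitude.shape
    queue = deque(zip(*np.nonzero(strong)))    # start the search from every strong pixel
    while queue:
        y, x = queue.popleft()
        # Promote any weak pixel among the 8 neighbours and keep following it
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and weak[ny, nx] and not edges[ny, nx]:
                    edges[ny, nx] = True
                    queue.append((ny, nx))
    return edges

# Hypothetical gradient magnitudes: one strong pixel (90), a weak chain attached
# to it (40, 40), and an isolated weak pixel (40) further to the right
magnitude = np.array([[0,  0,  0,  0, 0,  0, 0],
                      [0, 90, 40, 40, 0, 40, 0],
                      [0,  0,  0,  0, 0,  0, 0]])

edges = hysteresis_threshold(magnitude, low=30, high=80)
print(edges.astype(int))
```

The weak chain touching the strong pixel survives, while the isolated weak pixel is dropped.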

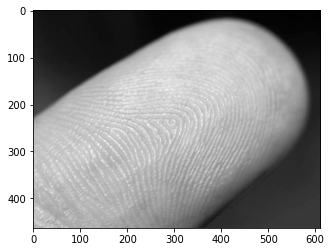

Let’s load an image in and convert it to grayscale (Canny, just like Sobel/Scharr, requires grayscale images):

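import cv2
import matplotlib.pyplot as plt

# Load the image directly as a single-channel grayscale array
img = cv2.imread('finger.jpg', cv2.IMREAD_GRAYSCALE)
# Soften the noise with a small 3x3 Gaussian kernel
img_blur = cv2.GaussianBlur(img, (3,3), 0)

plt.imshow(img_blur, cmap='gray')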

The closeup image of a finger will serve as a good testing ground for edge detection – it’s not easy to discern a fingerprint from the image, but we can approximate one.

Edge Detection on Images with cv2.Canny()

Canny’s algorithm can be applied using OpenCV’s Canny() method:

cv2.Canny(input_img, lower_bound, upper_bound)


Finding the right balance between the lower bound and upper bound can be tricky. If both are low – nearly every gradient passes, and you’ll get a lot of noise. If the lower bound is low and the upper bound is high – only a few pixels count as definitive edges, but each one can drag a long chain of weak, noisy pixels along with it. If both are high and close to each other – you’ll have few edges. The right spot has just enough gap between the bounds, and has them on the right scale. Experiment!

The Canny algorithm calls for Gaussian smoothing as its first step (OpenCV’s documentation describes a 5×5 Gaussian filter), but you shouldn’t count on cv2.Canny() to remove noise for you. Blurring the image yourself gives you control over the kernel size, and any noise that seeps through shows up directly as false edges, so we’ve blurred the image before feeding it into the algorithm:

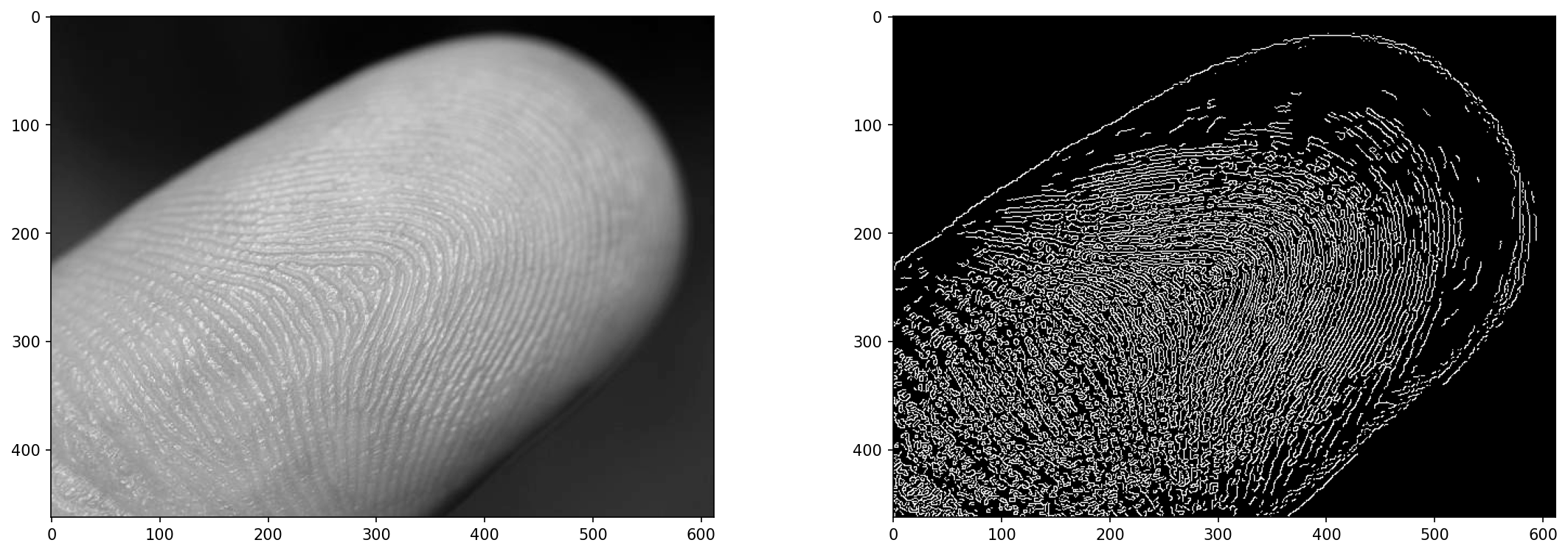

edge = cv2.Canny(img_blur, 20, 30)

# Original image on the left, detected edges on the right
fig, ax = plt.subplots(1, 2, figsize=(18, 6), dpi=150)
ax[0].imshow(img, cmap='gray')
ax[1].imshow(edge, cmap='gray')

This results in:

The values of 20 and 30 here aren’t arbitrary – I’ve tested the method on various parameters, and chose a set that seemed to produce a decent result. Can we try to automate this?

Automated Thresholding for cv2.Canny()?

Can you find an optimal set of threshold values? Yes, but it doesn’t always work. You can make your own calculation for some good value, and then adjust the range with a sigma around that threshold:

lower_bound = (1-sigma)*threshold

upper_bound = (1+sigma)*threshold

When sigma is, say, 0.33 – the bounds will be 0.67*threshold and 1.33*threshold, allowing a ~1/3 range around it. Though, finding the threshold is what’s more difficult. OpenCV provides us with Otsu’s method (works great for bi-modal images) and the Triangle method. Let’s try both of them out, as well as taking a simple median of the pixel values as the third option:

import numpy as np

# cv2.threshold() returns the computed threshold first, then the thresholded image
otsu_thresh, _ = cv2.threshold(img_blur, 0, 255, cv2.THRESH_OTSU)
triangle_thresh, _ = cv2.threshold(img_blur, 0, 255, cv2.THRESH_TRIANGLE)
# Simple baseline: the median pixel intensity
manual_thresh = np.median(img_blur)

def get_range(threshold, sigma=0.33):
    # Lower and upper bounds, sigma below and above the base threshold
    return (1-sigma) * threshold, (1+sigma) * threshold

otsu_thresh = get_range(otsu_thresh)
triangle_thresh = get_range(triangle_thresh)
manual_thresh = get_range(manual_thresh)

print(f"Otsu's Threshold: {otsu_thresh} \nTriangle Threshold: {triangle_thresh} \nManual Threshold: {manual_thresh}")

This results in:

Otsu's Threshold: (70.35, 139.65)

Triangle Threshold: (17.419999999999998, 34.58)

Manual Threshold: (105.18999999999998, 208.81)

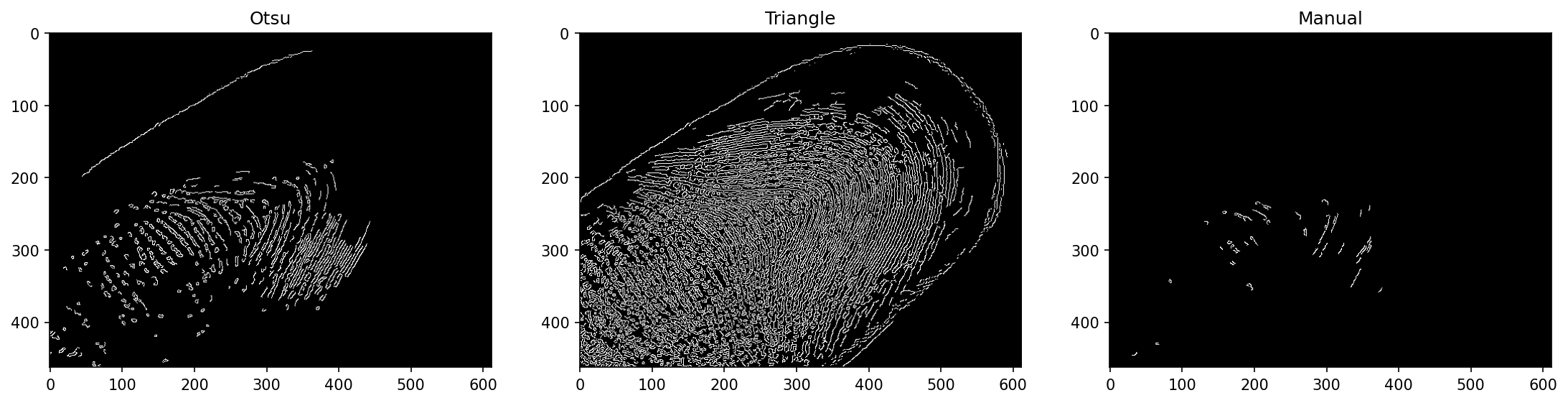

These are pretty different! From the values we’ve seen before, we can anticipate the Triangle method working the best here. The manual threshold isn’t very informed, since it just takes the median pixel value, and ends up with a high base threshold that is then stretched into a wide range for this image. Otsu’s method suffers less from this, but suffers nonetheless.

If we run the Canny() method with these threshold ranges:

edge_otsu = cv2.Canny(img_blur, *otsu_thresh)
edge_triangle = cv2.Canny(img_blur, *triangle_thresh)
edge_manual = cv2.Canny(img_blur, *manual_thresh)

# Otsu, Triangle and median-based results, left to right
fig, ax = plt.subplots(1, 3, figsize=(18, 6), dpi=150)
ax[0].imshow(edge_otsu, cmap='gray')
ax[1].imshow(edge_triangle, cmap='gray')
ax[2].imshow(edge_manual, cmap='gray')

Note: The function expects multiple arguments, and our thresholds are a single tuple. We can destructure the tuple into multiple arguments by prefixing it with *. This works on lists and other ordered sequences as well, and is a great way of supplying multiple arguments after obtaining them by programmatic means.
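Here is a tiny, OpenCV-free illustration of the same unpacking. The `describe` function is a made-up stand-in for any function that takes several positional arguments.

```python
def describe(img_name, lower, upper):
    """Hypothetical helper that just reports the arguments it received."""
    return f"{img_name}: lower={lower}, upper={upper}"

bounds = (20, 30)  # a tuple produced elsewhere, e.g. by get_range()

# The star spreads the tuple over the remaining positional parameters
message = describe("finger.jpg", *bounds)
print(message)  # finger.jpg: lower=20, upper=30
```

The call is equivalent to `describe("finger.jpg", 20, 30)`.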

This results in:

The Triangle method worked pretty well here! This is no guarantee that it’ll work well in other cases as well.

Real-Time Edge Detection on Videos with cv2.Canny()

Finally, let’s apply Canny edge detection to a video in real-time! We’ll display the video being processed (each frame as it’s done) using cv2.imshow() which displays a window with the frame we’d like to display. Though, we’ll also save the video into an MP4 file that can later be inspected and shared.

To load a video using OpenCV, we use the VideoCapture class. If we pass in 0 – it’ll capture from the default webcam, so you can run the code on your webcam as well! If you pass in a filename, it’ll load the file:

def edge_detection_video(filename):
    cap = cv2.VideoCapture(filename)

    # Write single-channel frames at the same resolution as the source video
    fourcc = cv2.VideoWriter_fourcc(*'MP4V')
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    out = cv2.VideoWriter('output.mp4', fourcc, 30.0, (width, height), isColor=False)

    while cap.isOpened():
        (ret, frame) = cap.read()
        if ret:
            # Blur, convert to grayscale, then detect edges
            frame = cv2.GaussianBlur(frame, (3, 3), 0)
            frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            edge = cv2.Canny(frame, 50, 100)
            out.write(edge)
            cv2.imshow('Edge detection', edge)
        else:
            # No more frames to read
            break

        # Press 'q' to stop early
        if cv2.waitKey(10) & 0xFF == ord('q'):
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()

edge_detection_video('secret_video.mp4')

The VideoWriter accepts several parameters – the output filename, the FourCC (a four-character code identifying the codec used to encode the video), the framerate and the resolution as a tuple. To avoid guessing or resizing the video – we’ve used the width and height of the original video, obtained through the VideoCapture instance, which holds data about the video itself, such as the width, height, total number of frames, etc. We also pass isColor=False, since the edge maps we write are single-channel.

While the capture is opened, we try to read the next frame with cap.read(), which returns a result code and the next frame. The result code is True or False, denoting the presence of the next frame or a lack thereof. We only process a frame when there is one; otherwise, we break out of the loop. Each valid frame goes through a Gaussian blur, is converted to grayscale, passed to cv2.Canny(), written to disk with the VideoWriter, and displayed with cv2.imshow() for a live view.

Finally, we release the capture and video writer, as they’re both working with files on the disk, and destroy all existing windows.

When you run the function with a secret_video.mp4 input – you’ll see a window pop up, and once it’s finished, a file named output.mp4 in your working directory.

Conclusion

In this guide, we’ve taken a look at how Canny edge detection works, and its constituent parts – Gaussian smoothing, Sobel filters and image gradients, Non-Max Suppression and Hysteresis Thresholding. Finally, we’ve explored methods for automated threshold range search for Canny edge detection with cv2.Canny(), and employed the technique on a video, providing real-time edge detection and saving the results in a video file.
