If you have searched for an item to buy on amazon.com, you have used Amazon Search services. At Amazon Search, we’re responsible for the search and discovery experience for our customers worldwide. In the background, we index our worldwide catalog of products, deploy highly scalable AWS fleets, and use advanced machine learning (ML) to match relevant and interesting products to every customer’s query.

Our scientists regularly train thousands of ML models to improve the quality of search results. Supporting large-scale experimentation presents its own challenges, especially when it comes to improving the productivity of the scientists training these ML models.

In this post, we share how we built a management system around Amazon SageMaker training jobs, allowing our scientists to fire-and-forget thousands of experiments and be notified when needed. They can now focus on high-value tasks and resolving algorithmic errors, saving 60% of their time.

The challenge

At Amazon Search, our scientists solve information retrieval problems by experimenting and running numerous ML model training jobs on SageMaker. To keep up with our team’s innovation, our models’ complexity and number of training jobs have increased over time. SageMaker training jobs allow us to reduce the time and cost to train and tune those models at scale, without the need to manage infrastructure.

As with anything in large-scale ML projects, training jobs can fail for a variety of reasons. This post focuses on failures caused by capacity shortages and by algorithm errors.

We designed an architecture with a job management system to tolerate and reduce the probability of a job failing due to capacity unavailability or algorithm errors. It allows scientists to fire-and-forget thousands of training jobs, automatically retry them on transient failure, and get notified of success or failure if needed.

Solution overview

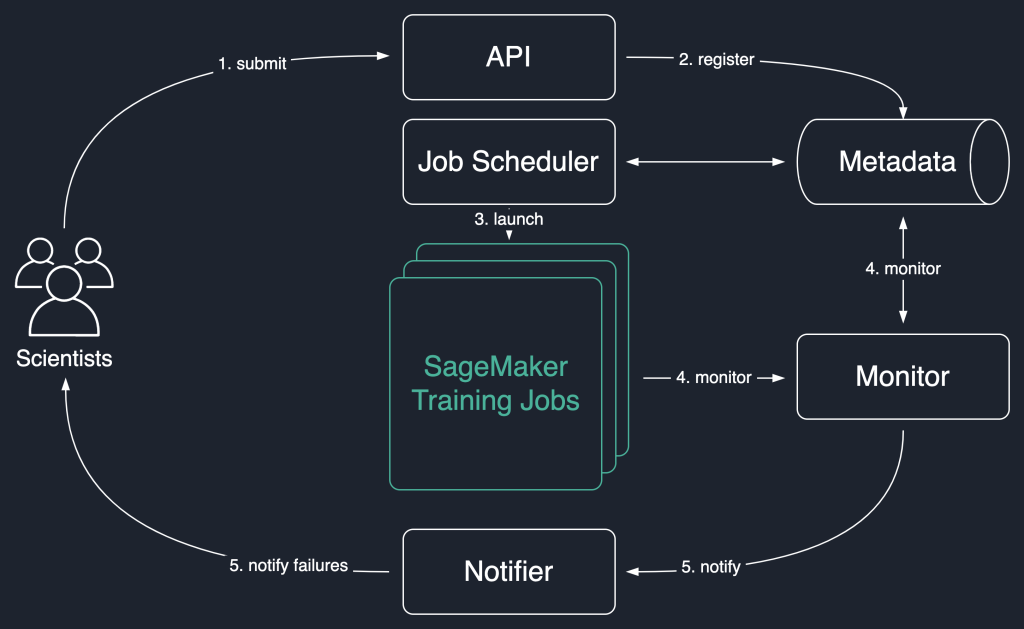

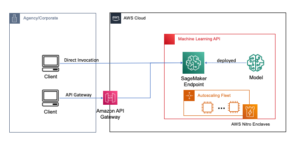

In the following solution diagram, we use SageMaker training jobs as the basic unit of our solution. That is, a job represents the end-to-end training of an ML model.

The high-level workflow of this solution is as follows:

- Scientists invoke an API to submit a new job to the system.

- The job is registered with the New status in a metadata store.

- A job scheduler asynchronously retrieves New jobs from the metadata store, parses their input, and tries to launch a SageMaker training job for each one. Their status changes to Launched or Failed depending on success.

- A monitor checks the jobs' progress at regular intervals and reports their Completed, Failed, or InProgress state in the metadata store.

- A notifier is triggered to report Completed and Failed jobs to the scientists.
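
The job lifecycle in this workflow can be viewed as a small state machine. The following Python sketch encodes the transitions implied by the steps above. The transition table, and the choice to model a retry as Failed going back to New, are our assumptions for illustration, not the team's actual implementation.

```python
from enum import Enum


class JobStatus(str, Enum):
    # Status names taken from the workflow described in this post.
    NEW = "New"
    LAUNCHED = "Launched"
    IN_PROGRESS = "InProgress"
    COMPLETED = "Completed"
    FAILED = "Failed"


# Hypothetical transition table inferred from the workflow.
# Failed -> New models a resubmission (retry) of the job.
ALLOWED_TRANSITIONS = {
    JobStatus.NEW: {JobStatus.LAUNCHED, JobStatus.FAILED},
    JobStatus.LAUNCHED: {JobStatus.IN_PROGRESS, JobStatus.COMPLETED, JobStatus.FAILED},
    JobStatus.IN_PROGRESS: {JobStatus.IN_PROGRESS, JobStatus.COMPLETED, JobStatus.FAILED},
    JobStatus.COMPLETED: set(),
    JobStatus.FAILED: {JobStatus.NEW},
}

# Statuses the notifier reports to scientists.
NOTIFY_ON = {JobStatus.COMPLETED, JobStatus.FAILED}


def transition(current: JobStatus, new: JobStatus) -> JobStatus:
    """Return the new status, or raise ValueError if the move is not allowed."""
    if new not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current.value} -> {new.value}")
    return new


def should_notify(status: JobStatus) -> bool:
    """True if the notifier should report this status."""
    return status in NOTIFY_ON
```

Checking every status update against a table like this can catch bugs early. For example, it would reject an attempt to relaunch a job that has already completed.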

Persisting the job history in the metadata store also allows our team to conduct trend analysis and monitor project progress.

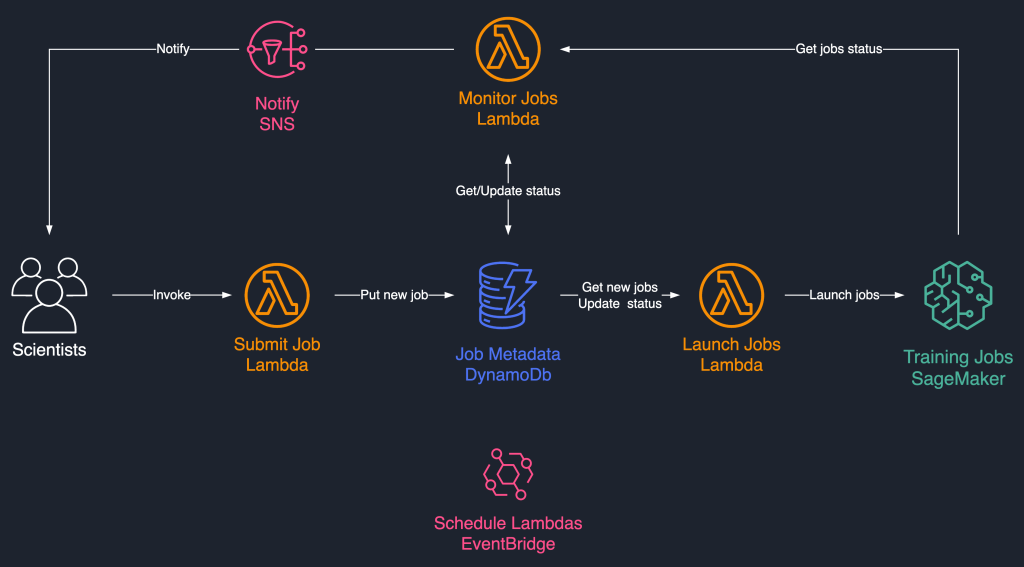

This job scheduling solution uses loosely coupled serverless components based on AWS Lambda, Amazon DynamoDB, Amazon Simple Notification Service (Amazon SNS), and Amazon EventBridge. This ensures horizontal scalability, allowing our scientists to launch thousands of jobs with minimal operational effort. The following diagram illustrates the serverless architecture.

In the following sections, we go into more detail about each service and its components.

DynamoDB as the metadata store for job runs

The ease of use and scalability of DynamoDB made it a natural choice for persisting the job metadata in a DynamoDB table. This solution stores several attributes of the jobs submitted by scientists, which helps with progress tracking and workflow orchestration. The most important attributes are as follows:

- JobId – A unique job ID. This can be auto-generated or provided by the scientist.

- JobStatus – The status of the job.

- JobArgs – Other arguments required for creating a training job, such as the input path in Amazon S3, the training image URI, and more. For a complete list of parameters required to create a training job, refer to CreateTrainingJob.
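
As an illustration, a Submit Job step could build and store a record like the following. This minimal sketch assumes it receives a boto3 DynamoDB Table resource, or any object with a compatible put_item method. The RetryCount and SubmittedAt attributes and the job- ID prefix are hypothetical additions and are not attributes described in this post.

```python
import uuid
from datetime import datetime, timezone
from typing import Any, Optional


def build_job_item(job_args: dict[str, Any], job_id: Optional[str] = None) -> dict[str, Any]:
    """Build the metadata record for a newly submitted job."""
    return {
        # Auto-generate an ID if the scientist did not provide one.
        "JobId": job_id or f"job-{uuid.uuid4().hex[:12]}",
        "JobStatus": "New",
        # Remaining CreateTrainingJob parameters (input path, image URI, ...).
        "JobArgs": job_args,
        # Hypothetical bookkeeping attributes, not taken from the post.
        "RetryCount": 0,
        "SubmittedAt": datetime.now(timezone.utc).isoformat(),
    }


def submit_job(table: Any, job_args: dict[str, Any], job_id: Optional[str] = None) -> str:
    """Register a job with status New and return its JobId."""
    item = build_job_item(job_args, job_id)
    # The condition prevents silently overwriting an existing job with the same ID.
    table.put_item(Item=item, ConditionExpression="attribute_not_exists(JobId)")
    return item["JobId"]
```

The boto3 DynamoDB resource rejects Python float values. Any numeric entries in JobArgs would therefore need to be stored as Decimal values or strings.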

Lambda for the core logic

We use three container-based Lambda functions to orchestrate the job workflow:

- Submit Job – This function is invoked by scientists when they need to launch new jobs. It acts as an API for simplicity. You can also front it with Amazon API Gateway, if needed. This function registers the jobs in the DynamoDB table.

- Launch Jobs – This function periodically retrieves New jobs from the DynamoDB table and launches them using the SageMaker CreateTrainingJob command. It retries on transient failures, such as ResourceLimitExceeded and CapacityError, to build resiliency into the system. It then updates the job status to Launched or Failed depending on success.

- Monitor Jobs – This function periodically tracks job progress using the DescribeTrainingJob command and updates the DynamoDB table accordingly. It polls Failed jobs from the metadata store and assesses whether they should be resubmitted or marked as terminally failed. It also publishes notification messages to the scientists when their jobs reach a terminal state.
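
The retry behavior of Launch Jobs can be sketched as follows. This is not the team's code. It assumes that a boto3 SageMaker client is passed in as sagemaker, that JobArgs holds the remaining CreateTrainingJob parameters, and that update_status is a hypothetical caller-supplied function that writes the new status to DynamoDB. The sketch treats the two error codes named above as transient and backs off exponentially between attempts. Any other error fails the job immediately.

```python
import time
from typing import Any, Callable, Iterable

# Error codes treated as transient (the two named in the post).
# Anything else, such as a validation error in JobArgs, fails fast.
TRANSIENT_CODES = {"ResourceLimitExceeded", "CapacityError"}


def error_code(exc: Exception) -> str:
    """Return the AWS error code carried by a botocore ClientError, else ''."""
    response = getattr(exc, "response", None)
    if not isinstance(response, dict):
        return ""
    return response.get("Error", {}).get("Code", "")


def launch_new_jobs(
    sagemaker: Any,
    jobs: Iterable[dict[str, Any]],
    update_status: Callable[[str, str], None],
    max_attempts: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> dict[str, str]:
    """Try to launch each New job and record Launched or Failed for it."""
    results = {}
    for job in jobs:
        # Hypothetical naming scheme: one unique SageMaker job name per attempt.
        name = f"{job['JobId']}-attempt-{job.get('RetryCount', 0)}"
        status = "Failed"
        for attempt in range(max_attempts):
            try:
                sagemaker.create_training_job(TrainingJobName=name, **job["JobArgs"])
                status = "Launched"
                break
            except Exception as exc:  # a botocore ClientError in practice
                if error_code(exc) not in TRANSIENT_CODES:
                    break  # non-transient error: give up on this job now
                if attempt + 1 < max_attempts:
                    sleep(2 ** attempt)  # exponential backoff: 1s, 2s, ...
        update_status(job["JobId"], status)
        results[job["JobId"]] = status
    return results
```

The -attempt-N suffix is a hypothetical convention. SageMaker training job names must be unique within an AWS account and Region, so a resubmitted job needs a new name, and the full name must stay within SageMaker's 63-character limit. Inside a Lambda function, the total backoff time also counts against the function timeout.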

EventBridge for scheduling

We use EventBridge to run the Launch Jobs and Monitor Jobs Lambda functions on a schedule. For more information, refer to Tutorial: Schedule AWS Lambda functions using EventBridge.
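
For reference, you can also create such a schedule programmatically. The following sketch assumes that boto3 EventBridge (events) and Lambda clients are passed in. The five-minute default rate, the statement ID, and the target ID are arbitrary placeholders. The sketch creates a rate-based rule, grants EventBridge permission to invoke the function, and registers the function as the rule's target.

```python
from typing import Any


def schedule_lambda(
    events: Any,
    lambda_client: Any,
    rule_name: str,
    function_arn: str,
    rate_minutes: int = 5,
) -> str:
    """Create a rate-based EventBridge rule that invokes a Lambda function."""
    # EventBridge requires the singular unit for a value of 1: rate(1 minute).
    unit = "minute" if rate_minutes == 1 else "minutes"
    rule = events.put_rule(
        Name=rule_name,
        ScheduleExpression=f"rate({rate_minutes} {unit})",
        State="ENABLED",
    )
    # Allow EventBridge to invoke the function, scoped to this rule only.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule["RuleArn"],
    )
    # Register the function as the rule's target (placeholder target ID).
    events.put_targets(Rule=rule_name, Targets=[{"Id": "lambda-target", "Arn": function_arn}])
    return rule["RuleArn"]
```

Running this a second time fails at add_permission because the statement ID already exists. Treat it as one-time setup, or define the rule with infrastructure as code such as AWS CloudFormation.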

Alternatively, you can use Amazon DynamoDB Streams for the triggers. For more information, see DynamoDB Streams and AWS Lambda triggers.
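
With DynamoDB Streams, the Lambda function receives batches of change records instead of running on a timer. The following minimal sketch filters those records for newly submitted jobs. It assumes the stream uses a view type that includes new images (NEW_IMAGE or NEW_AND_OLD_IMAGES). The function name new_job_ids is hypothetical.

```python
from typing import Any


def new_job_ids(event: dict[str, Any]) -> list[str]:
    """Extract the JobIds of freshly submitted jobs from a DynamoDB Streams event."""
    ids = []
    for record in event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue  # ignore MODIFY/REMOVE records, such as status updates
        image = record.get("dynamodb", {}).get("NewImage", {})
        # Stream images use DynamoDB's typed attribute-value format, e.g. {"S": "..."}.
        if image.get("JobStatus", {}).get("S") == "New":
            ids.append(image["JobId"]["S"])
    return ids
```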

Notifications with Amazon SNS

Our scientists are notified by email through Amazon SNS when their jobs reach a terminal state: Failed (after a maximum number of retries), Completed, or Stopped.
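
The retry and notification logic of Monitor Jobs could look roughly like the following sketch. It assumes that boto3 SageMaker and SNS clients are passed in and that jobs follow a hypothetical per-attempt naming scheme (JobId plus -attempt-N). It resubmits any failure until a hypothetical max_retries limit is reached. A stricter policy might inspect FailureReason to distinguish capacity errors from algorithm errors. The returned dictionary holds the attribute updates that the caller would write back to DynamoDB.

```python
from typing import Any

# SageMaker statuses after which a job will not change again.
TERMINAL = {"Completed", "Failed", "Stopped"}


def check_job(sagemaker: Any, sns: Any, topic_arn: str, job: dict[str, Any],
              max_retries: int = 3) -> dict[str, Any]:
    """Poll one launched job and return the attribute updates to store."""
    retries = job.get("RetryCount", 0)
    # Hypothetical naming scheme: each attempt uses a distinct, unique name.
    name = f"{job['JobId']}-attempt-{retries}"
    desc = sagemaker.describe_training_job(TrainingJobName=name)
    status = desc["TrainingJobStatus"]  # InProgress|Completed|Failed|Stopping|Stopped

    if status == "Failed" and retries < max_retries:
        # Resubmit: mark the job New so that Launch Jobs picks it up again.
        return {"JobStatus": "New", "RetryCount": retries + 1}

    if status in TERMINAL:
        message = f"Job {job['JobId']} reached terminal status {status}."
        if status == "Failed":
            message += f" Last failure reason: {desc.get('FailureReason', 'unknown')}"
        sns.publish(TopicArn=topic_arn, Subject=f"Training job {status}", Message=message)
    return {"JobStatus": status, "RetryCount": retries}
```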

Conclusion

In this post, we shared how Amazon Search adds resiliency to ML model training workloads by scheduling them, and retrying them on capacity shortages or algorithm errors. We used Lambda functions in conjunction with a DynamoDB table as a central metadata store to orchestrate the entire workflow.

Such a scheduling system allows scientists to submit their jobs and forget about them. This saves time and allows them to focus on writing better models.

To learn more, visit Awesome SageMaker, where you can find all the relevant and up-to-date resources for working with SageMaker in a single place.

About the Authors

Luochao Wang is a Software Engineer at Amazon Search. He focuses on scalable distributed systems and automation tooling on the cloud to accelerate the pace of scientific innovation for Machine Learning applications.

Ishan Bhatt is a Software Engineer on the Amazon Prime Video team. He primarily works in the MLOps space and has been building MLOps products with Amazon SageMaker for the past 4 years.

Abhinandan Patni is a Senior Software Engineer at Amazon Search. He focuses on building systems and tooling for scalable distributed deep learning training and real-time inference.

Eiman Elnahrawy is a Principal Software Engineer at Amazon Search, leading the efforts on machine learning acceleration, scaling, and automation. Her expertise spans multiple areas, including machine learning, distributed systems, and personalization.

Sofian Hamiti is an AI/ML specialist Solutions Architect at AWS. He helps customers across industries accelerate their AI/ML journey by helping them build and operationalize end-to-end machine learning solutions.

Romi Datta is a Senior Manager of Product Management on the Amazon SageMaker team, responsible for training, processing, and feature store. He has been at AWS for over 4 years, holding several product management leadership roles in SageMaker, S3, and IoT. Before AWS, he worked in various product management, engineering, and operational leadership roles at IBM, Texas Instruments, and Nvidia. He holds an M.S. and a Ph.D. in Electrical and Computer Engineering from the University of Texas at Austin, and an MBA from the University of Chicago Booth School of Business.

RJ is an engineer on the Search M5 team, leading the efforts to build large-scale deep learning systems for training and inference. Outside of work, he explores different cuisines and plays racquet sports.
