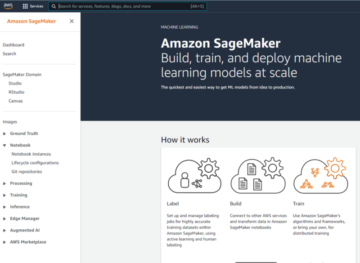

In December 2020, AWS announced the general availability of Amazon SageMaker JumpStart, a capability of Amazon SageMaker that helps you quickly and easily get started with machine learning (ML). JumpStart provides one-click fine-tuning and deployment of a wide variety of pre-trained models across popular ML tasks, as well as a selection of end-to-end solutions that solve common business problems. These features remove the heavy lifting from each step of the ML process, making it easier to develop high-quality models and reducing time to deployment.

This post is the third in a series on using JumpStart for specific ML tasks. In the first post, we showed how you can run image classification use cases on JumpStart. In the second post, we showed how you can run text classification use cases on JumpStart. In this post, we provide a step-by-step walkthrough on how to fine-tune and deploy an image segmentation model, using pre-trained models from MXNet. We explore two ways of obtaining the same result: via JumpStart’s graphical interface on Amazon SageMaker Studio, and programmatically through JumpStart APIs.

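If you want to jump straight into the JumpStart API code we explain in this post, you can refer to the following sample Jupyter notebooks:

- Introduction to JumpStart – Instance Segmentation

- Introduction to JumpStart – Semantic Segmentation

JumpStart overview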

JumpStart helps you get started with ML models for a variety of tasks without writing a single line of code. At the time of writing, JumpStart enables you to do the following:

- Deploy pre-trained models for common ML tasks – JumpStart enables you to address common ML tasks with no development effort by providing easy deployment of models pre-trained on large, publicly available datasets. The ML research community has put a large amount of effort into making a majority of recently developed models publicly available for use. JumpStart hosts a collection of over 300 models, spanning the 15 most popular ML tasks such as object detection, text classification, and text generation, making it easy for beginners to use them. These models are drawn from popular model hubs such as TensorFlow, PyTorch, Hugging Face, and MXNet.

- Fine-tune pre-trained models – JumpStart allows you to fine-tune pre-trained models with no need to write your own training algorithm. In ML, the ability to transfer the knowledge learned in one domain to another domain is called transfer learning. You can use transfer learning to produce accurate models on your smaller datasets, with much lower training costs than the ones involved in training the original model. JumpStart also includes popular training algorithms based on LightGBM, CatBoost, XGBoost, and Scikit-learn, which you can train from scratch for tabular regression and classification.

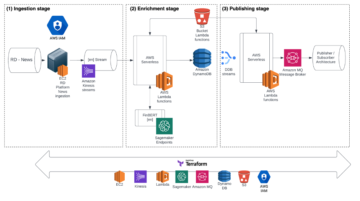

- Use pre-built solutions – JumpStart provides a set of 17 solutions for common ML use cases, such as demand forecasting and industrial and financial applications, which you can deploy with just a few clicks. Solutions are end-to-end ML applications that string together various AWS services to solve a particular business use case. They use AWS CloudFormation templates and reference architectures for quick deployment, which means they’re fully customizable.

- Refer to notebook examples for SageMaker algorithms – SageMaker provides a suite of built-in algorithms to help data scientists and ML practitioners get started with training and deploying ML models quickly. JumpStart provides sample notebooks that you can use to start working with these algorithms quickly.

- Review training videos and blogs – JumpStart also provides numerous blog posts and videos that teach you how to use different functionalities within SageMaker.

JumpStart accepts custom VPC settings and AWS Key Management Service (AWS KMS) encryption keys, so you can use the available models and solutions securely within your enterprise environment. You can pass your security settings to JumpStart within Studio or through the SageMaker Python SDK.

Semantic segmentation

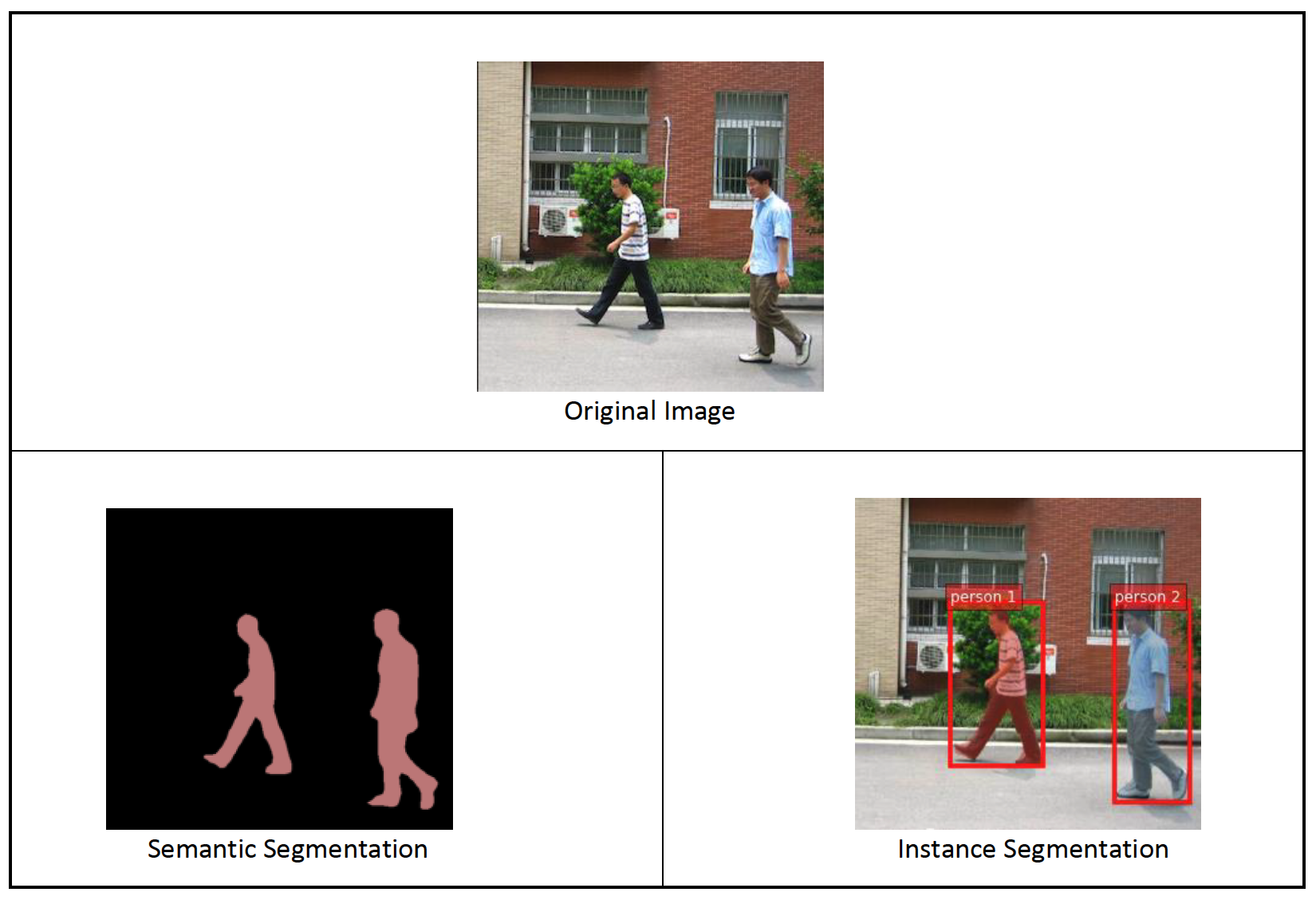

Semantic segmentation delineates each class of objects appearing in an input image. It tags (classifies) each pixel of the input image with a class label from a predefined set of classes. Multiple objects of the same class are mapped to the same mask.

The model available for fine-tuning builds a fully convolutional network (FCN) “head” on top of the base network. The fine-tuning step fine-tunes the FCNHead while keeping the parameters of the rest of the model frozen, and returns the fine-tuned model. The objective is to minimize per-pixel softmax cross entropy loss to train the FCN. The model returned by fine-tuning can be further deployed for inference.
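To make the training objective concrete, the following is a minimal sketch of a per-pixel softmax cross-entropy loss in plain Python. It is not the JumpStart training script: the nested-list input format and the choice to average the loss over all pixels are our assumptions for illustration.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw class scores for one pixel into probabilities."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - shift) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def per_pixel_cross_entropy(
    logits: Sequence[Sequence[Sequence[float]]],
    labels: Sequence[Sequence[int]],
) -> float:
    """Mean softmax cross-entropy over every pixel.

    logits: height x width x num_classes scores (assumed layout).
    labels: height x width ground-truth class indices from the mask.
    """
    losses = []
    for logit_row, label_row in zip(logits, labels):
        for pixel_logits, label in zip(logit_row, label_row):
            probabilities = softmax(pixel_logits)
            losses.append(-math.log(probabilities[label]))
    return sum(losses) / len(losses)


# A 1 x 2 image with two classes: the first pixel is predicted confidently
# and correctly, the second pixel is undecided between the classes.
example_logits = [[[4.0, 0.0], [0.0, 0.0]]]
example_labels = [[0, 1]]
example_loss = per_pixel_cross_entropy(example_logits, example_labels)
```

The undecided pixel contributes ln 2 to the sum, while the confident, correct pixel contributes a value close to zero, so lowering this loss pushes every pixel toward its mask label.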

The input directory should look like the following code if the training data contains two images. The names of the .png files can be anything.

The mask files should have class label information for each pixel.
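Before uploading a dataset, it can help to check that every image has a matching mask. The following sketch assumes a layout with an images folder and a masks folder whose .png file names match one-to-one. These folder names and the helper function are our assumptions, so confirm the exact format on the model page before relying on them.

```python
import tempfile
from pathlib import Path
from typing import List


def find_layout_problems(input_dir: str) -> List[str]:
    """Return human-readable problems found in the dataset directory."""
    root = Path(input_dir)
    # Assumed folder names; adjust them to the format on the model page.
    images_dir, masks_dir = root / "images", root / "masks"

    problems = [
        f"missing directory: {folder.name}"
        for folder in (images_dir, masks_dir)
        if not folder.is_dir()
    ]
    if problems:
        return problems

    image_names = {path.name for path in images_dir.glob("*.png")}
    mask_names = {path.name for path in masks_dir.glob("*.png")}
    problems += [f"image without mask: {name}" for name in sorted(image_names - mask_names)]
    problems += [f"mask without image: {name}" for name in sorted(mask_names - image_names)]
    return problems


# Demo on a throwaway directory: two images but only one mask.
with tempfile.TemporaryDirectory() as tmp:
    for folder, names in (("images", ["abc.png", "def.png"]), ("masks", ["abc.png"])):
        (Path(tmp) / folder).mkdir()
        for name in names:
            (Path(tmp) / folder / name).touch()
    demo_problems = find_layout_problems(tmp)
```

An empty list means the two folders are consistent under these assumptions. The check looks only at file names, not at the pixel values inside the masks.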

Instance segmentation

Instance segmentation detects and delineates each distinct object of interest appearing in an image. It tags every pixel with an instance label. Whereas semantic segmentation assigns the same tag to pixels of multiple objects of the same class, instance segmentation further labels pixels corresponding to each occurrence of an object on the image with a separate tag.

Currently, JumpStart offers inference-only models for instance segmentation and doesn’t support fine-tuning.

The following images illustrate the difference between the inference in semantic segmentation and instance segmentation. The original image shows two people. Semantic segmentation treats multiple people in the image as one entity: Person. However, instance segmentation identifies individual people within the Person category.

Solution overview
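The difference in labeling can also be shown on a toy mask. The sketch below takes a small semantic mask in which every Person pixel carries the same class label and gives each connected blob its own instance ID. This is only an illustration of the two output formats. It is not how an instance segmentation model works, and it would merge people who touch each other.

```python
from collections import deque
from typing import List, Tuple


def split_into_instances(
    semantic_mask: List[List[int]], target_class: int
) -> Tuple[List[List[int]], int]:
    """Give each 4-connected blob of target_class its own ID (0 = other)."""
    height, width = len(semantic_mask), len(semantic_mask[0])
    instance_mask = [[0] * width for _ in range(height)]
    instance_count = 0

    for row in range(height):
        for col in range(width):
            if semantic_mask[row][col] != target_class or instance_mask[row][col]:
                continue
            # Found an unvisited pixel of the class: flood-fill its blob.
            instance_count += 1
            instance_mask[row][col] = instance_count
            queue = deque([(row, col)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and semantic_mask[ny][nx] == target_class and not instance_mask[ny][nx]:
                        instance_mask[ny][nx] = instance_count
                        queue.append((ny, nx))
    return instance_mask, instance_count


PERSON = 1  # hypothetical class index for this toy example
semantic = [
    [1, 1, 0, 0, 1],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
instances, people_found = split_into_instances(semantic, PERSON)
```

In the semantic mask both people share the label 1, whereas in the derived instance mask the left blob is labeled 1 and the right blob is labeled 2.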

The following sections provide a step-by-step demo to perform semantic segmentation with JumpStart, both via the Studio UI and via JumpStart APIs.

We walk through the following steps:

- Access JumpStart through the Studio UI:

  - Run inference on the pre-trained model.

  - Fine-tune the pre-trained model.

- Use JumpStart programmatically with the SageMaker Python SDK:

  - Run inference on the pre-trained model.

  - Fine-tune the pre-trained model.

We also discuss additional advanced features of JumpStart.

Access JumpStart through the Studio UI

In this section, we demonstrate how to train and deploy JumpStart models through the Studio UI.

Run inference on the pre-trained model

The following video shows you how to find a pre-trained semantic segmentation model on JumpStart and deploy it. The model page contains valuable information about the model, how to use it, expected data format, and some fine-tuning details. You can deploy any of the pre-trained models available in JumpStart. For inference, we pick the ml.g4dn.xlarge instance type. It provides the GPU acceleration needed for low inference latency, but at a lower price point. After you configure the SageMaker hosting instance, choose Deploy. It may take 5–10 minutes until your persistent endpoint is up and running.

After a few minutes, your endpoint is operational and ready to respond to inference requests.

Similarly, you can deploy a pre-trained instance segmentation model by following the same steps in the preceding video while searching for instance segmentation instead of semantic segmentation in the JumpStart search bar.

Fine-tune the pre-trained model

The following video shows how to find and fine-tune a semantic segmentation model in JumpStart. In the video, we fine-tune the model using the PennFudanPed dataset, provided by default in JumpStart, which you can download under the Apache 2.0 License.

Fine-tuning on your own dataset involves formatting the data correctly (as explained on the model page), uploading it to Amazon Simple Storage Service (Amazon S3), and specifying its location in the data source configuration. We use the same hyperparameter values set by default (number of epochs, learning rate, and batch size). We also use a GPU-backed ml.p3.2xlarge as our SageMaker training instance.

You can monitor your training job running directly on the Studio console, and are notified upon its completion. After training is complete, you can deploy the fine-tuned model from the same page that holds the training job details. The deployment workflow is the same as deploying a pre-trained model.

Use JumpStart programmatically with the SageMaker SDK

In the preceding sections, we showed how you can use the JumpStart UI to deploy a pre-trained model and fine-tune it interactively, in a matter of a few clicks. However, you can also use JumpStart’s models and easy fine-tuning programmatically by using APIs that are integrated into the SageMaker SDK. We now go over a quick example of how you can replicate the preceding process. All the steps in this demo are available in the accompanying notebooks Introduction to JumpStart – Instance Segmentation and Introduction to JumpStart – Semantic Segmentation.

Run inference on the pre-trained model

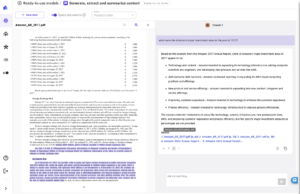

In this section, we choose an appropriate pre-trained model in JumpStart, deploy this model to a SageMaker endpoint, and run inference on the deployed endpoint.

SageMaker is a platform based on Docker containers. JumpStart uses the available framework-specific SageMaker Deep Learning Containers (DLCs). We fetch any additional packages, as well as scripts to handle training and inference for the selected task. Finally, the pre-trained model artifacts are fetched separately with model_uris, which provides flexibility to the platform. You can use any number of models pre-trained for the same task with a single training or inference script. See the following code:
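The retrieval step described above might look like the following minimal sketch. It assumes the SageMaker Python SDK is installed and AWS credentials are configured. The "*" version wildcard and the wrapper function name are our own illustrative choices, and the SDK import sits inside the function so that the module loads even where the SDK is missing.

```python
from typing import Dict

MODEL_ID = "mxnet-semseg-fcn-resnet50-ade"  # semantic segmentation model named in this post
MODEL_VERSION = "*"  # assumption: "*" requests the latest available version


def retrieve_inference_artifacts(
    model_id: str = MODEL_ID,
    model_version: str = MODEL_VERSION,
    instance_type: str = "ml.g4dn.xlarge",
) -> Dict[str, str]:
    """Look up the container image, inference script, and model artifact URIs."""
    # Lazy import: needs the SageMaker Python SDK only when actually called.
    from sagemaker import image_uris, model_uris, script_uris

    image_uri = image_uris.retrieve(
        region=None,      # None falls back to the default Region of the session
        framework=None,   # inferred from model_id for JumpStart models
        image_scope="inference",
        model_id=model_id,
        model_version=model_version,
        instance_type=instance_type,
    )
    source_uri = script_uris.retrieve(
        model_id=model_id, model_version=model_version, script_scope="inference"
    )
    model_uri = model_uris.retrieve(
        model_id=model_id, model_version=model_version, model_scope="inference"
    )
    return {"image_uri": image_uri, "source_uri": source_uri, "model_uri": model_uri}
```

Calling retrieve_inference_artifacts() from a notebook with a configured AWS session returns the three URIs that the deployment step needs. Because the script and the model artifacts are looked up separately, the same inference script can serve different model IDs for the same task.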

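For semantic segmentation, we set model_id to mxnet-semseg-fcn-resnet50-ade. For instance segmentation, set model_id to an instance segmentation identifier instead, such as mxnet-is-mask-rcnn-fpn-resnet101-v1d-coco. The is in the identifier corresponds to instance segmentation.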

Next, we feed the resources into a SageMaker Model instance and deploy an endpoint:

After a few minutes, our model is deployed and we can get predictions from it in real time!
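A deployment along these lines could be sketched as follows. The function expects the three URIs retrieved earlier plus an IAM role ARN. The endpoint naming helper is hypothetical, and the inference.py entry point name is an assumption about the JumpStart inference script rather than something stated in this post.

```python
import time
from typing import Any, Optional


def make_endpoint_name(model_id: str, suffix: Optional[str] = None) -> str:
    """Hypothetical naming scheme: a fixed prefix, the model ID, and a suffix."""
    suffix = suffix if suffix is not None else str(int(time.time()))
    return f"jumpstart-example-{model_id}-{suffix}"


def deploy_pretrained_model(
    image_uri: str,
    source_uri: str,
    model_uri: str,
    role_arn: str,
    endpoint_name: str,
    instance_type: str = "ml.g4dn.xlarge",
) -> Any:
    """Create a SageMaker Model from the retrieved URIs and deploy an endpoint."""
    # Lazy imports: the SageMaker Python SDK is only required when deploying.
    from sagemaker.model import Model
    from sagemaker.predictor import Predictor

    model = Model(
        image_uri=image_uri,
        source_dir=source_uri,
        model_data=model_uri,
        entry_point="inference.py",  # assumed script name inside source_dir
        role=role_arn,
        predictor_cls=Predictor,     # makes deploy() return a Predictor
        name=endpoint_name,
    )
    return model.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        endpoint_name=endpoint_name,
    )
```

The endpoint keeps running, and accruing charges, until you delete it, so remember to clean it up when you finish experimenting.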

The following code snippet gives you a glimpse of what semantic segmentation looks like. The predicted mask for each pixel is visualized. To get inferences from a deployed model, an input image needs to be supplied in binary format. The response of the endpoint is a predicted label for each pixel in the image. We use the query_endpoint and parse_response helper functions, which are defined in the accompanying notebook:

Fine-tune the pre-trained model
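The two helpers are defined in the notebook. The following is only a sketch of what they might look like. The content type, the Accept header, and the predictions and labels keys of the JSON response are assumptions on our part, so check them against the notebook before use.

```python
import json
from typing import Any, List, Tuple, Union


def query_endpoint(predictor: Any, image_bytes: bytes) -> bytes:
    """Send the raw bytes of an image to the endpoint and return the raw body.

    predictor is expected to be a sagemaker.predictor.Predictor.
    """
    return predictor.predict(
        image_bytes,
        {
            "ContentType": "application/x-image",       # assumed input format
            "Accept": "application/json;verbose",       # assumed response format
        },
    )


def parse_response(
    query_response: Union[str, bytes],
) -> Tuple[List[List[int]], List[str]]:
    """Extract the per-pixel class indices and the class names.

    Assumes a JSON body with a "predictions" grid (height x width) and a
    "labels" list that maps each class index to a readable name.
    """
    payload = json.loads(query_response)
    return payload["predictions"], payload["labels"]


def class_name_at(predictions: List[List[int]], labels: List[str], row: int, col: int) -> str:
    """Look up the readable class name predicted for a single pixel."""
    return labels[predictions[row][col]]
```

With an image read through open(path, "rb").read(), the parsed predictions grid can be passed to a plotting library to draw the mask over the input image.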

To fine-tune a selected model, we need to get that model’s URI, as well as that of the training script and the container image used for training. Thankfully, these three inputs depend solely on the model name, version (for a list of the available models, see JumpStart Available Model Table), and the type of instance you want to train on. This is demonstrated in the following code snippet:

We retrieve the model_id corresponding to the same model we used previously. You can now fine-tune this JumpStart model on your own custom dataset using the SageMaker SDK. We use a dataset that is publicly hosted on Amazon S3, conveniently focused on semantic segmentation. The dataset should be structured for fine-tuning as explained in the previous section. See the following example code:

For our selected model, we obtain the same default hyperparameters as the ones we saw in the previous section, using sagemaker.hyperparameters.retrieve_default(). We then instantiate a SageMaker estimator and call the .fit method to start fine-tuning our model, passing it the Amazon S3 URI for our training data. The entry_point script provided is named transfer_learning.py (the same for other tasks and models), and the input data channel passed to .fit must be named training.

While the algorithm trains, you can monitor its progress either in the SageMaker notebook where you’re running the code itself, or on Amazon CloudWatch. When training is complete, the fine-tuned model artifacts are uploaded to the Amazon S3 output location specified in the training configuration. You can now deploy the model in the same manner as the pre-trained model.

Advanced features
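Put together, the fine-tuning flow could be sketched as follows. The S3 locations and the role ARN are parameters that you supply, the "*" version wildcard is an assumption, and the small merge helper is our own addition for overriding individual default values.

```python
from typing import Any, Dict, Optional


def merge_hyperparameters(
    defaults: Dict[str, Any], overrides: Optional[Dict[str, Any]] = None
) -> Dict[str, str]:
    """Apply overrides to the defaults and convert every value to a string."""
    merged = dict(defaults)
    merged.update(overrides or {})
    return {name: str(value) for name, value in merged.items()}


def fine_tune(
    training_data_s3_uri: str,
    output_s3_uri: str,
    role_arn: str,
    model_id: str = "mxnet-semseg-fcn-resnet50-ade",
    model_version: str = "*",  # assumption: latest available version
    instance_type: str = "ml.p3.2xlarge",
    overrides: Optional[Dict[str, Any]] = None,
) -> Any:
    """Retrieve the training resources and start a fine-tuning job."""
    # Lazy imports: the SageMaker Python SDK is only required when training.
    from sagemaker import hyperparameters, image_uris, model_uris, script_uris
    from sagemaker.estimator import Estimator

    ident = {"model_id": model_id, "model_version": model_version}
    estimator = Estimator(
        role=role_arn,
        image_uri=image_uris.retrieve(
            region=None, framework=None, image_scope="training",
            instance_type=instance_type, **ident,
        ),
        source_dir=script_uris.retrieve(script_scope="training", **ident),
        model_uri=model_uris.retrieve(model_scope="training", **ident),
        entry_point="transfer_learning.py",
        instance_count=1,
        instance_type=instance_type,
        hyperparameters=merge_hyperparameters(
            hyperparameters.retrieve_default(**ident), overrides
        ),
        output_path=output_s3_uri,
    )
    # The input channel must be named "training".
    estimator.fit({"training": training_data_s3_uri}, logs=True)
    return estimator
```

The returned estimator can then be deployed with its own deploy method, or its model artifacts in output_s3_uri can be used in the same deployment flow as the pre-trained model.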

In addition to fine-tuning and deploying pre-trained models, JumpStart offers many advanced features.

The first is automatic model tuning. This allows you to automatically tune your ML models to find the hyperparameter values with the highest accuracy within the range provided through the SageMaker API.

The second is incremental training. This allows you to train a model you have already fine-tuned using an expanded dataset that contains an underlying pattern not accounted for in previous fine-tuning runs, which resulted in poor model performance. Incremental training saves both time and resources because you don’t need to retrain the model from scratch.

Conclusion

In this post, we showed how to fine-tune and deploy a pre-trained semantic segmentation model, and how to adapt it for instance segmentation using JumpStart. You can accomplish this without needing to write code. Try out the solution on your own and send us your comments.

To learn more about JumpStart and how you can use open-source pre-trained models for a variety of other ML tasks, check out the following AWS re:Invent 2020 video.

About the authors

Dr. Vivek Madan is an applied scientist with the Amazon SageMaker JumpStart team. He got his PhD from the University of Illinois at Urbana-Champaign and was a postdoctoral researcher at Georgia Tech. He is an active researcher in machine learning and algorithm design and has published papers at the EMNLP, ICLR, COLT, FOCS, and SODA conferences.

Santosh Kulkarni is an enterprise solutions architect at Amazon Web Services who works with sports customers in Australia. He is passionate about building large-scale distributed applications to solve business problems using his knowledge in AI/ML, big data, and software development.

Leonardo Bachega is a senior scientist and manager in the Amazon SageMaker JumpStart team. He’s passionate about building AI services for computer vision.
