To safely deploy powerful, general-purpose artificial intelligence in the future, we need to ensure that machine learning models act in accordance with human intentions. This challenge has become known as the alignment problem.

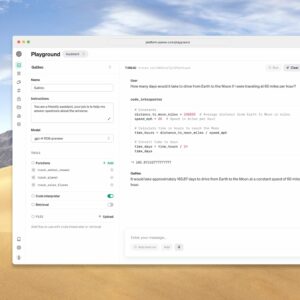

A scalable solution to the alignment problem needs to work on tasks where model outputs are difficult or time-consuming for humans to evaluate. To test scalable alignment techniques, we trained a model to summarize entire books, as shown in the following samples.[1] Our model works by first summarizing small sections of a book, then summarizing those summaries into a higher-level summary, and so on.

Our best model is fine-tuned from GPT-3 and generates sensible summaries of entire books, sometimes even matching the average quality of human-written summaries: from humans who have read the book, it achieves a 6/7 rating (similar to the average human-written summary) 5% of the time and a 5/7 rating 15% of the time. Our model also achieves state-of-the-art results on the BookSum dataset for book-length summarization. A zero-shot question-answering model can use our model’s summaries to obtain competitive results on the NarrativeQA dataset for book-length question answering.[2]

Our approach: Combining reinforcement learning from human feedback and recursive task decomposition

Consider the task of summarizing a piece of text. Large pretrained models aren’t very good at summarization. In the past we found that training a model with reinforcement learning from human feedback helped align model summaries with human preferences on short posts and articles. But judging summaries of entire books directly takes a lot of effort, since a human would need to read the entire book, which takes many hours.

To address this problem, we additionally make use of recursive task decomposition: we procedurally break up a difficult task into easier ones. In this case we break up summarizing a long piece of text into summarizing several shorter pieces. Compared to an end-to-end training procedure, recursive task decomposition has the following advantages:

- Decomposition allows humans to evaluate model summaries more quickly by using summaries of smaller parts of the book rather than reading the source text.

- It is easier to trace the summary-writing process. For example, you can trace where in the original text certain events from the summary happen. See for yourself on our summary explorer!

- Our method can be used to summarize books of unbounded length, unrestricted by the context length of the transformer models we use.
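The decomposition described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function names, the word-based chunking, and especially `first_words`, a trivial stand-in that keeps the first few words of its input in place of a trained summarization model. It is not our model or training procedure; it only shows how summaries of small sections can be summarized again until a single top-level summary remains.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_recursively(text: str, summarize: Callable[[str], str], max_words: int) -> str:
    """Summarize text of any length with a summarizer that only sees max_words at a time."""
    chunks = chunk_words(text, max_words)
    if len(chunks) <= 1:
        # Base case: the text fits in one chunk, so summarize it directly.
        return summarize(text)
    # Summarize each chunk, then treat the joined summaries as a new, shorter text.
    joined = " ".join(summarize(chunk) for chunk in chunks)
    if len(joined.split()) >= len(text.split()):
        # Guard against summarizers that do not compress (the recursion would never end).
        raise ValueError("summarizer must shorten its input")
    return summarize_recursively(joined, summarize, max_words)


def first_words(text: str, n: int = 5) -> str:
    """Hypothetical stand-in for a learned summarizer: keep the first n words."""
    return " ".join(text.split()[:n])


if __name__ == "__main__":
    book = " ".join(f"w{i}" for i in range(100))  # a toy 100-word "book"
    print(summarize_recursively(book, first_words, max_words=20))
```

Because each call to the summarizer only ever sees `max_words` words, the length of the input text is not bounded by the summarizer's context size; only the depth of the recursion grows with the length of the book.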

Why we are working on this

This work is part of our ongoing research into aligning advanced AI systems, which is key to our mission. As we train our models to do increasingly complex tasks, making informed evaluations of the models’ outputs will become increasingly difficult for humans. This makes it harder to detect subtle problems in model outputs that could lead to negative consequences when these models are deployed. Therefore we want our ability to evaluate our models to increase as their capabilities increase.

Our current approach to this problem is to empower humans to evaluate machine learning model outputs using assistance from other models. In this case, to evaluate book summaries we empower humans with individual chapter summaries written by our model, which saves them time when evaluating these summaries relative to reading the source text. Our progress on book summarization is the first large-scale empirical work on scaling alignment techniques.

Going forward, we are researching better ways to assist humans in evaluating model behavior, with the goal of finding techniques that scale to aligning artificial general intelligence.

We’re always looking for more talented people to join us; so if this work interests you, please apply to join our team!
