Amazon SageMaker Autopilot has added a new training mode that supports model ensembling powered by AutoGluon. Ensemble training mode in Autopilot trains several base models and combines their predictions using model stacking. For datasets less than 100 MB, ensemble training mode builds machine learning (ML) models with high accuracy quickly—up to eight times faster than hyperparameter optimization (HPO) training mode with 250 trials, and up to 5.8 times faster than HPO training mode with 100 trials. It supports a wide range of algorithms, including LightGBM, CatBoost, XGBoost, Random Forest, Extra Trees, linear models, and neural networks based on PyTorch and FastAI.

How AutoGluon builds ensemble models

AutoGluon-Tabular (AGT) is a popular open-source AutoML framework that trains highly accurate ML models on tabular datasets. Many AutoML frameworks focus primarily on model and hyperparameter selection. AGT instead succeeds by ensembling multiple models and stacking them in multiple layers. AGT's default behavior can be summarized as follows. Given a dataset, AGT trains various base models on it, ranging from off-the-shelf boosted trees to customized neural networks. The predictions from the base models are used as features to build a stacking model, which learns the appropriate weight for each base model. Using these learned weights, the stacking model combines the base models' predictions and returns the combined predictions as the final set of predictions.
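To make the weighted-stacking idea concrete, here is a minimal pure-Python sketch that learns one weight per base model from held-out predictions. It uses greedy ensemble selection with replacement (Caruana et al.), which is one common way to fit such weights. Treat it as an illustration under that assumption, not as AGT's exact implementation. The function names are hypothetical.

```python
from typing import List, Sequence


def mean_squared_error(pred: Sequence[float], target: Sequence[float]) -> float:
    """Average squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def greedy_ensemble_weights(
    base_preds: List[List[float]], target: List[float], rounds: int = 20
) -> List[float]:
    """Learn one weight per base model by greedy ensemble selection.

    In each round, the base model whose addition most lowers the validation
    error is added (a model may be picked repeatedly). A model's weight is the
    fraction of rounds in which it was picked, so the weights sum to 1.
    """
    counts = [0] * len(base_preds)
    running_sum = [0.0] * len(target)  # summed predictions of picked models
    for step in range(1, rounds + 1):
        best_idx, best_err = 0, float("inf")
        for idx, pred in enumerate(base_preds):
            # Average of the models picked so far plus this candidate.
            candidate = [(s + p) / step for s, p in zip(running_sum, pred)]
            err = mean_squared_error(candidate, target)
            if err < best_err:
                best_idx, best_err = idx, err
        counts[best_idx] += 1
        running_sum = [s + p for s, p in zip(running_sum, base_preds[best_idx])]
    return [c / rounds for c in counts]


def combine(base_preds: List[List[float]], weights: List[float]) -> List[float]:
    """Weighted average of the base-model predictions, one value per row."""
    return [
        sum(w * pred[row] for w, pred in zip(weights, base_preds))
        for row in range(len(base_preds[0]))
    ]


# Two base models that err in opposite directions.
preds = [[1.0, 1.0], [0.0, 0.0]]
y = [0.5, 0.5]
weights = greedy_ensemble_weights(preds, y, rounds=2)
print(weights, combine(preds, weights))  # [0.5, 0.5] [0.5, 0.5]
```

In this example each base model gets weight 0.5, and the blend hits the target exactly, which neither model does alone. In practice, fit the weights on held-out predictions rather than on the rows the base models were trained on.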

How Autopilot’s ensemble training mode works

Different datasets have characteristics that suit different algorithms. Given a dataset with unknown characteristics, it's difficult to know in advance which algorithms will work best on it. With this in mind, data scientists using AGT often create multiple custom configurations, each with a subset of algorithms and parameters. They run these configurations on a given dataset to find the best configuration in terms of performance and inference latency.
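The following sketch shows what such a family of custom configurations might look like. It enumerates algorithm subsets and stacking depths. The model keys (`GBM`, `CAT`, `XGB`, `RF`, `XT`, `LR`, `NN_TORCH`, `FASTAI`) follow AutoGluon's naming for the `hyperparameters` argument of `TabularPredictor.fit`. The `num_stack_levels` and `num_bag_folds` fields mirror fit arguments of the same names. The `make_configs` helper itself is hypothetical and does not call AutoGluon.

```python
from itertools import combinations
from typing import Any, Dict, List

# AutoGluon-Tabular model keys for the algorithm families named in this post:
# LightGBM, CatBoost, XGBoost, Random Forest, Extra Trees, linear, PyTorch NN, FastAI NN.
ALGORITHMS = ["GBM", "CAT", "XGB", "RF", "XT", "LR", "NN_TORCH", "FASTAI"]


def make_configs(
    algorithms: List[str], subset_size: int, stack_levels: List[int]
) -> List[Dict[str, Any]]:
    """One candidate configuration per (algorithm subset, stacking depth) pair.

    Each dict is shaped like keyword arguments to AutoGluon's
    TabularPredictor.fit: `hyperparameters` maps each model key to default
    settings ({}), and `num_stack_levels` sets the number of stacking layers.
    """
    configs = []
    for subset in combinations(algorithms, subset_size):
        for levels in stack_levels:
            configs.append(
                {
                    "hyperparameters": {key: {} for key in subset},
                    "num_stack_levels": levels,
                    # Stacking in AutoGluon requires bagging (num_bag_folds >= 2);
                    # 5 folds is an arbitrary illustrative choice.
                    "num_bag_folds": 5 if levels > 0 else 0,
                }
            )
    return configs


configs = make_configs(ALGORITHMS, subset_size=7, stack_levels=[0, 1])
print(len(configs))  # 8 subsets of 7 algorithms x 2 stacking depths = 16
```

Each dict could then be unpacked into a call such as `TabularPredictor(label="Survived").fit(train_data, **config)`. The runs could then be compared on validation score and prediction time.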

Autopilot is a low-code ML product that automatically builds the best ML models for your data. In the new ensemble training mode, Autopilot selects an optimal set of AGT configurations and runs multiple trials to return the best model. These trials run in parallel to evaluate whether AGT's performance can be further improved, in terms of either objective metrics or inference latency.
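The parallel-trials pattern can be illustrated with Python's `concurrent.futures`. In this sketch, several configurations are evaluated concurrently and the trial with the best objective value wins. Using lower inference latency as the tie-breaker is our own assumption. `run_trial` is a hypothetical stand-in that echoes made-up scores. In Autopilot, the trials run as managed jobs, not local threads.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, NamedTuple


class TrialResult(NamedTuple):
    name: str
    objective: float  # higher is better, e.g. F1
    latency_ms: float  # inference latency; lower is better


def run_trial(name: str, config: Dict[str, float]) -> TrialResult:
    """Hypothetical stand-in for training and evaluating one configuration.

    It echoes precomputed numbers from the config so that the example is
    deterministic; a real trial would train models and score a validation set.
    """
    return TrialResult(name, config["objective"], config["latency_ms"])


def best_trial(configs: Dict[str, Dict[str, float]], max_workers: int = 4) -> TrialResult:
    """Run all trials concurrently, then rank by objective, then by latency."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run_trial, name, cfg) for name, cfg in configs.items()]
        results: List[TrialResult] = [f.result() for f in futures]
    return max(results, key=lambda r: (r.objective, -r.latency_ms))


trials = {
    "trial-1": {"objective": 0.84, "latency_ms": 40.0},
    "trial-2": {"objective": 0.84, "latency_ms": 25.0},
    "trial-3": {"objective": 0.81, "latency_ms": 10.0},
}
print(best_trial(trials).name)  # trial-2: ties trial-1 on the objective but is faster
```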

Results observed using OpenML benchmarks

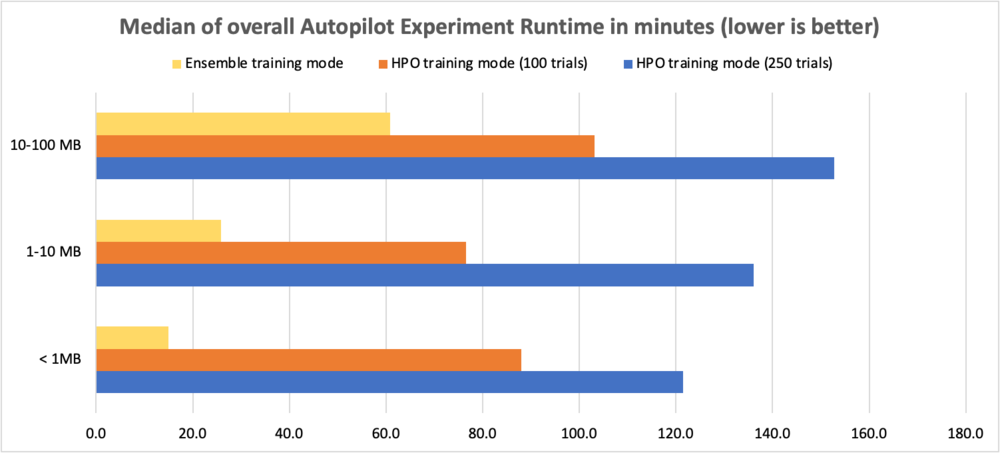

To evaluate the performance improvements, we used OpenML benchmark datasets ranging in size from 0.5–100 MB. We ran 10 AGT trials with different combinations of algorithms and hyperparameter configurations. The tests compared ensemble training mode to HPO mode with 250 trials and to HPO mode with 100 trials. The following table compares the overall Autopilot experiment runtime (in minutes) across these training modes for various dataset sizes.

| Dataset size | HPO mode (250 trials) | HPO mode (100 trials) | Ensemble mode (10 trials) | Runtime improvement vs. HPO 250 | Runtime improvement vs. HPO 100 |
|---|---|---|---|---|---|
| < 1 MB | 121.5 min | 88.0 min | 15.0 min | 8.1x | 5.9x |
| 1-10 MB | 136.1 min | 76.5 min | 25.8 min | 5.3x | 3.0x |
| 10-100 MB | 152.7 min | 103.1 min | 60.9 min | 2.5x | 1.7x |
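Each runtime-improvement figure is the ratio of HPO runtime to ensemble runtime. This short sketch recomputes them from the runtimes in the table, rounding to one decimal place as the table does.

```python
# Runtimes in minutes from the table: (HPO 250 trials, HPO 100 trials, ensemble).
RUNTIMES = {
    "< 1 MB": (121.5, 88.0, 15.0),
    "1-10 MB": (136.1, 76.5, 25.8),
    "10-100 MB": (152.7, 103.1, 60.9),
}


def speedup(baseline_minutes: float, new_minutes: float) -> float:
    """How many times faster the new run is than the baseline, to one decimal."""
    return round(baseline_minutes / new_minutes, 1)


for size, (hpo250, hpo100, ensemble) in RUNTIMES.items():
    print(f"{size}: {speedup(hpo250, ensemble)}x vs HPO 250, "
          f"{speedup(hpo100, ensemble)}x vs HPO 100")
```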

To compare performance, we use accuracy for multiclass classification problems, the F1 score for binary classification problems, and R2 for regression problems. The gains in objective metrics are shown in the following tables. We observed that ensemble training mode generally matched or outperformed HPO training mode (with both 100 and 250 trials).

Note that ensemble mode matched or improved on HPO mode with 250 trials, irrespective of dataset size and problem type.
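For reference, the three objective metrics can be written out in a few lines of plain Python. This sketch assumes binary labels encoded as 0/1, with 1 as the positive class for F1. Production code would normally use a library such as scikit-learn instead.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the label (used for multiclass)."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Harmonic mean of precision and recall for the positive class (binary)."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # precision or recall is zero (or undefined), so F1 is 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """1 - SS_res / SS_tot: the share of target variance explained (regression)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))  # 0.75
print(f1_score([1, 0, 1, 1], [1, 0, 0, 1]))  # precision 1.0, recall 2/3, F1 about 0.8
print(r2_score([1.0, 2.0, 3.0], [2.0, 2.0, 2.0]))  # 0.0: no better than predicting the mean
```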

The following table compares accuracy for multi-class classification problems (higher is better).

| Dataset size | HPO mode (250 trials) | HPO mode (100 trials) | Ensemble mode (10 trials) | Percentage improvement over HPO 250 |
|---|---|---|---|---|
| < 1 MB | 0.759 | 0.761 | 0.771 | 1.46% |
| 1-5 MB | 0.941 | 0.935 | 0.957 | 1.64% |
| 5-10 MB | 0.639 | 0.633 | 0.671 | 4.92% |
| 10-50 MB | 0.998 | 0.999 | 0.999 | 0.11% |
| 51-100 MB | 0.853 | 0.852 | 0.875 | 2.56% |

The following table compares F1 scores for binary classification problems (higher is better).

| Dataset size | HPO mode (250 trials) | HPO mode (100 trials) | Ensemble mode (10 trials) | Percentage improvement over HPO 250 |
|---|---|---|---|---|
| < 1 MB | 0.801 | 0.807 | 0.826 | 3.14% |
| 1-5 MB | 0.59 | 0.587 | 0.629 | 6.60% |
| 5-10 MB | 0.886 | 0.889 | 0.898 | 1.32% |
| 10-50 MB | 0.731 | 0.736 | 0.754 | 3.12% |
| 51-100 MB | 0.503 | 0.493 | 0.541 | 7.58% |

The following table compares R2 for regression problems (higher is better).

| Dataset size | HPO mode (250 trials) | HPO mode (100 trials) | Ensemble mode (10 trials) | Percentage improvement over HPO 250 |
|---|---|---|---|---|
| < 1 MB | 0.717 | 0.718 | 0.716 | 0% |
| 1-5 MB | 0.803 | 0.803 | 0.817 | 2% |
| 5-10 MB | 0.590 | 0.586 | 0.614 | 4% |
| 10-50 MB | 0.686 | 0.688 | 0.684 | 0% |
| 51-100 MB | 0.623 | 0.626 | 0.631 | 1% |
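The last column of each table is the relative change of ensemble mode versus HPO with 250 trials: (ensemble − HPO 250) / HPO 250 × 100. The minimal sketch below shows that formula. Recomputing from the three-decimal scores shown can differ slightly from the published percentages. For example, it gives about 3.12% rather than 3.14% for the smallest binary classification datasets. The regression column appears to be rounded to whole percentages.

```python
def relative_improvement_pct(baseline: float, candidate: float) -> float:
    """Percentage change of `candidate` over `baseline`; positive means better
    for higher-is-better metrics such as accuracy, F1, and R2."""
    return (candidate - baseline) / baseline * 100


# Binary classification, < 1 MB row: HPO 250 F1 = 0.801, ensemble F1 = 0.826.
print(f"{relative_improvement_pct(0.801, 0.826):.2f}%")
# Regression, 5-10 MB row: HPO 250 R2 = 0.590, ensemble R2 = 0.614.
print(f"{relative_improvement_pct(0.590, 0.614):.2f}%")
```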

In the next sections, we show how to use the new ensemble training mode in Autopilot to analyze datasets and easily build high-quality ML models.

Dataset overview

We use the Titanic dataset to predict whether a given passenger survived. This is a binary classification problem. We focus on creating an Autopilot experiment using the new ensemble training mode. We then compare its F1 score and overall runtime with those of an Autopilot experiment using HPO training mode (100 trials).

| Column name | Description |
|---|---|
| PassengerId | ID number |
| Survived | Survival |
| Pclass | Ticket class |
| Name | Passenger name |
| Sex | Sex |
| Age | Age in years |
| SibSp | Number of siblings or spouses aboard the Titanic |
| Parch | Number of parents or children aboard the Titanic |
| Ticket | Ticket number |
| Fare | Passenger fare |
| Cabin | Cabin number |
| Embarked | Port of embarkation |

The dataset has 890 rows and 12 columns. It contains demographic information about the passengers (age, sex, ticket class, and so on) and the Survived (yes/no) target column.

Prerequisites

Complete the following prerequisite steps:

- Make sure you have an AWS account with secure access to log in to the account via the AWS Management Console. You also need AWS Identity and Access Management (IAM) permissions to use Amazon SageMaker and Amazon Simple Storage Service (Amazon S3) resources.

- Download the Titanic dataset and upload it to an S3 bucket in your account.

- Onboard to a SageMaker domain and access Amazon SageMaker Studio to use Autopilot. For instructions, refer to Onboard to Amazon SageMaker Domain. If you're using an existing Studio, upgrade to the latest version of Studio to use the new ensemble training mode.

Create an Autopilot experiment with ensemble training mode

When the dataset is ready, you can initialize an Autopilot experiment in Studio. For full instructions, refer to Create an Amazon SageMaker Autopilot experiment. In the Experiment and data details section, create an Autopilot experiment by providing an experiment name and the data input, and by specifying the target data to predict. Optionally, you can specify the data split ratio and whether to create the Amazon S3 output location automatically.

For our use case, we provide an experiment name and the input Amazon S3 location, and choose Survived as the target. We keep auto split enabled and override the default output Amazon S3 location.

Next, we specify the training method in the Training method section. You can either let Autopilot select the training mode automatically based on the dataset size (Auto), or select ensembling or HPO manually. The details of each option are as follows:

- Auto – Autopilot automatically chooses either ensembling or HPO mode based on your dataset size. If your dataset is larger than 100 MB, Autopilot chooses HPO; otherwise, it chooses ensembling.

- Ensembling – Autopilot uses AutoGluon's ensembling technique to train several base models. It then combines their predictions into an optimal predictive model using model stacking.

- Hyperparameter optimization – Autopilot finds the best version of a model by tuning hyperparameters with Bayesian optimization and running training jobs on your dataset. HPO selects the algorithms most relevant to your dataset and picks the best range of hyperparameters to tune the models.
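The Auto rule described above amounts to a single size check. The function name in this sketch is hypothetical. Treating 100 MB as 100 × 1024 × 1024 bytes is an assumption, because the post doesn't say which definition Autopilot uses or how it handles a dataset of exactly 100 MB. The returned strings are the `Mode` values that `AutoMLJobConfig` accepts in the `CreateAutoMLJob` API.

```python
# Assumption: "100 MB" is taken as 100 MiB; the exact byte threshold is not documented here.
SIZE_THRESHOLD_BYTES = 100 * 1024 * 1024


def choose_training_mode(dataset_size_bytes: int) -> str:
    """Mimic the Auto option: HPO for datasets larger than 100 MB, else ensembling."""
    if dataset_size_bytes > SIZE_THRESHOLD_BYTES:
        return "HYPERPARAMETER_TUNING"
    return "ENSEMBLING"


print(choose_training_mode(60 * 1024))      # a small CSV of about 60 KB: ENSEMBLING
print(choose_training_mode(2 * 1024 ** 3))  # a 2 GiB dataset: HYPERPARAMETER_TUNING
```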

For our use case, we select Ensembling as our training mode.

After this, we proceed to the Deployment and advanced settings section. Here, we deselect the Auto deploy option. Under Advanced settings, you can specify the type of ML problem that you want to solve. If nothing is provided, Autopilot automatically determines the problem type based on the data you provide. Because ours is a binary classification problem, we choose Binary classification as our problem type and F1 as our objective metric.

Finally, we review our selections and choose Create experiment.
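The same experiment can also be set up programmatically. The sketch below only builds the request body for the `CreateAutoMLJob` API. Passing it to boto3's `create_auto_ml_job` would start the job. The job name, bucket paths, and role ARN are placeholders. The field names reflect our reading of the API reference, so verify them against the current version before use.

```python
from typing import Any, Dict


def build_ensemble_job_request(
    job_name: str, input_s3_uri: str, output_s3_uri: str, role_arn: str
) -> Dict[str, Any]:
    """CreateAutoMLJob request body mirroring the Studio choices in this walkthrough."""
    return {
        "AutoMLJobName": job_name,
        "InputDataConfig": [
            {
                "DataSource": {
                    "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}
                },
                "TargetAttributeName": "Survived",  # column to predict
            }
        ],
        # Custom output location instead of the automatically created default.
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ProblemType": "BinaryClassification",
        "AutoMLJobObjective": {"MetricName": "F1"},
        # Ensemble training mode; "AUTO" would let Autopilot pick by dataset size.
        "AutoMLJobConfig": {"Mode": "ENSEMBLING"},
        "RoleArn": role_arn,
    }


request = build_ensemble_job_request(
    job_name="titanic-ens",
    input_s3_uri="s3://example-bucket/titanic/train.csv",  # placeholder
    output_s3_uri="s3://example-bucket/titanic/output/",  # placeholder
    role_arn="arn:aws:iam::111122223333:role/ExampleSageMakerRole",  # placeholder
)
# With AWS credentials configured:
# import boto3
# boto3.client("sagemaker").create_auto_ml_job(**request)
```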

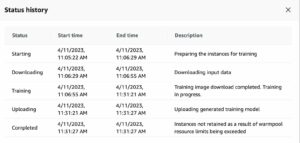

At this point, it's safe to leave Studio and return later to check on the result, which you can find in the Experiments menu.
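You can also check on the job from code instead of the Experiments menu by polling its status. This sketch takes the describe function as a parameter so that it can be exercised without AWS. With boto3, you would pass `boto3.client("sagemaker").describe_auto_ml_job`, whose response includes an `AutoMLJobStatus` field. The helper name and the 60-second poll interval are assumptions.

```python
import time
from typing import Any, Callable, Dict

# Job states after which the status no longer changes.
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_automl_job(
    describe: Callable[..., Dict[str, Any]],
    job_name: str,
    poll_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Call describe(AutoMLJobName=job_name) until the job reaches a final state."""
    while True:
        response = describe(AutoMLJobName=job_name)
        if response["AutoMLJobStatus"] in TERMINAL_STATUSES:
            return response
        sleep(poll_seconds)


# Offline demo with a fake describe function: two in-progress polls, then done.
statuses = iter(["InProgress", "InProgress", "Completed"])


def fake_describe(AutoMLJobName: str) -> Dict[str, Any]:
    return {"AutoMLJobName": AutoMLJobName, "AutoMLJobStatus": next(statuses)}


final = wait_for_automl_job(fake_describe, "titanic-ens", sleep=lambda s: None)
print(final["AutoMLJobStatus"])  # Completed
```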

The following screenshot shows the final results of our titanic-ens ensemble training mode Autopilot job.

You can see the multiple trials that Autopilot attempted in ensemble training mode. Each trial returns the best model from its pool of individual model runs and stacking ensemble model runs.

To explain this a little further, suppose Trial 1 considered all eight supported algorithms and used stacking level 2. Internally, it creates an individual model for each algorithm, plus weighted ensemble models at stack Level 0, Level 1, and Level 2. The output of Trial 1, however, is only the best model from this pool.

Similarly, suppose Trial 2 picked only tree-based boosting algorithms. In this case, Trial 2 internally creates three individual models, one for each of the three boosting algorithms (LightGBM, CatBoost, and XGBoost), as well as the weighted ensemble models. It then returns the best model from its run.

The final model returned by a trial may or may not be a weighted ensemble model, but most trials will likely return their best weighted ensemble model. Finally, based on the selected objective metric, Autopilot identifies the best model among all 10 trials.
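The two-level selection described above can be sketched as follows. Each trial keeps the best model from its own pool of individual models and weighted ensembles. The experiment then keeps the best of those trial winners. The model names are styled after AutoGluon's, but the pools and scores are invented for illustration. The sketch assumes a higher-is-better objective such as F1.

```python
from typing import Dict, Tuple

# Hypothetical pools: model name -> validation F1, per trial.
TRIAL_POOLS: Dict[str, Dict[str, float]] = {
    "trial-1": {"LightGBM": 0.80, "CatBoost": 0.81, "WeightedEnsemble_L2": 0.83},
    "trial-2": {"LightGBM": 0.79, "XGBoost": 0.82, "WeightedEnsemble_L2": 0.81},
}


def best_in_pool(pool: Dict[str, float]) -> Tuple[str, float]:
    """A trial's output: its single highest-scoring model."""
    name = max(pool, key=pool.get)
    return name, pool[name]


def best_overall(pools: Dict[str, Dict[str, float]]) -> Tuple[str, str, float]:
    """The experiment's output: the best trial winner across all trials."""
    winners = [(trial, *best_in_pool(pool)) for trial, pool in pools.items()]
    return max(winners, key=lambda w: w[2])


print(best_overall(TRIAL_POOLS))  # ('trial-1', 'WeightedEnsemble_L2', 0.83)
```

In this made-up example, trial-2's winner is a single XGBoost model rather than an ensemble. This shows that a trial's output need not be a weighted ensemble.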

In our titanic-ens experiment, the best model was the one with the highest F1 score (our objective metric). Several other useful metrics, including accuracy, balanced accuracy, precision, and recall, are also shown. In our environment, the end-to-end runtime for this Autopilot experiment was 10 minutes.
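This sketch defines precision, recall, and balanced accuracy for 0/1 labels, with 1 as the positive class. The toy input is hypothetical. It shows why balanced accuracy is reported alongside accuracy: a model that predicts the majority class for every passenger can score well on plain accuracy while learning nothing about the minority class.

```python
from typing import Dict, Sequence


def binary_report(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Precision, recall, and balanced accuracy for 0/1 labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # true positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0  # true negative rate
    return {
        "precision": precision,
        "recall": recall,
        # Mean of per-class recall, so both classes count equally.
        "balanced_accuracy": (recall + specificity) / 2,
    }


# 8 hypothetical passengers, 2 of whom survived; the model predicts "did not survive" for all.
# Plain accuracy would be 6/8 = 0.75, but balanced accuracy is only 0.5.
print(binary_report([1, 1, 0, 0, 0, 0, 0, 0], [0] * 8))
```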

Create an Autopilot experiment with HPO training mode

Now let's repeat all of the preceding steps to create a second Autopilot experiment with the HPO training method (100 trials by default). Apart from the training method selection, which is now Hyperparameter optimization, everything else stays the same. In HPO mode, you can specify the number of trials by setting Max candidates under Advanced settings for Runtime, but we recommend leaving this at the default. If you don't provide a value for Max candidates, Autopilot runs 100 HPO trials. In our environment, the end-to-end runtime for this Autopilot experiment was 2 hours.
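In API terms, this HPO experiment differs from the ensemble one only in its `AutoMLJobConfig`. This sketch assumes that `CompletionCriteria.MaxCandidates` is the API counterpart of the Max candidates setting. Leaving it out keeps the service default, which the text above gives as 100 trials. The helper name is hypothetical.

```python
from typing import Any, Dict, Optional


def hpo_job_config(max_candidates: Optional[int] = None) -> Dict[str, Any]:
    """AutoMLJobConfig for HPO mode; omit max_candidates to keep the default."""
    config: Dict[str, Any] = {"Mode": "HYPERPARAMETER_TUNING"}
    if max_candidates is not None:
        if max_candidates < 1:
            raise ValueError("max_candidates must be a positive integer")
        config["CompletionCriteria"] = {"MaxCandidates": max_candidates}
    return config


print(hpo_job_config())     # recommended: default number of trials
print(hpo_job_config(250))  # explicitly request 250 candidates
```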

Runtime and performance metric comparison

We see that for our dataset (under 1 MB), not only did ensemble training mode run 12 times faster than HPO training mode (120 minutes to 10 minutes), but it also produced improved F1 scores and other performance metrics.

| Training mode | F1 score | Accuracy | Balanced accuracy | AUC | Precision | Recall | Log loss | Runtime |
|---|---|---|---|---|---|---|---|---|
| Ensemble mode – WeightedEnsemble | 0.844 | 0.878 | 0.865 | 0.89 | 0.912 | 0.785 | 0.394 | 10 min |
| HPO mode – XGBoost | 0.784 | 0.843 | 0.824 | 0.867 | 0.831 | 0.743 | 0.428 | 120 min |
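As a quick consistency check on this table: F1 is the harmonic mean of precision and recall, so it can be recomputed from those two columns. The result agrees with the reported F1 to within the rounding of the three-decimal inputs. The runtime ratio gives the 12x speedup quoted above.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """F1 = 2PR / (P + R), the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


ensemble_f1 = f1_from_precision_recall(0.912, 0.785)  # table reports 0.844
hpo_f1 = f1_from_precision_recall(0.831, 0.743)  # table reports 0.784
print(round(ensemble_f1, 3), round(hpo_f1, 3))

# Runtime ratio: 120 minutes (HPO) vs. 10 minutes (ensemble).
print(120 / 10)  # 12.0
```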

Inference

Now that we have a winning model, we can either deploy it to an endpoint for real-time inference or use batch transform to make predictions on the unlabeled dataset we downloaded earlier.
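For the batch option, one approach is to build a `CreateTransformJob` request against a SageMaker model created from the best candidate. The sketch below only assembles the request. The job name, model name, S3 paths, and instance type are placeholders. It assumes the unlabeled data is a CSV file with one record per line.

```python
from typing import Any, Dict


def build_transform_request(
    job_name: str,
    model_name: str,
    input_s3_uri: str,
    output_s3_uri: str,
    instance_type: str = "ml.m5.xlarge",
    instance_count: int = 1,
) -> Dict[str, Any]:
    """Request body for SageMaker's CreateTransformJob API (batch inference)."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,  # a SageMaker model built from the best candidate
        "TransformInput": {
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}
            },
            "ContentType": "text/csv",
            "SplitType": "Line",  # split the input file into records at line breaks
        },
        "TransformOutput": {"S3OutputPath": output_s3_uri},
        "TransformResources": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
        },
    }


request = build_transform_request(
    job_name="titanic-ens-batch",  # placeholder
    model_name="titanic-ens-best-model",  # placeholder
    input_s3_uri="s3://example-bucket/titanic/unlabeled/",  # placeholder
    output_s3_uri="s3://example-bucket/titanic/predictions/",  # placeholder
)
# With AWS credentials configured:
# import boto3
# boto3.client("sagemaker").create_transform_job(**request)
```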

Conclusion

With the new ensemble training mode, you can run Autopilot experiments on datasets smaller than 100 MB faster, without sacrificing performance. To get started, create a SageMaker Autopilot experiment on the Studio console and select Ensembling as your training mode, or let Autopilot infer the training mode automatically based on the dataset size. Refer to the CreateAutoMLJob API reference guide for the API updates, and upgrade to the latest version of Studio to use the new ensemble training mode. For more information on this feature, see Model support, metrics, and validation with Amazon SageMaker Autopilot. To learn more about Autopilot, visit the product page.

About the Authors

Janisha Anand is a Senior Product Manager in the SageMaker Low/No Code ML team, which includes SageMaker Autopilot. She enjoys coffee, staying active, and spending time with her family.

Saket Sathe is a Senior Applied Scientist in the SageMaker Autopilot team. He is passionate about building the next generation of machine learning algorithms and systems. Aside from work, he loves to read, cook, slurp ramen, and play badminton.

Abhishek Singh is a Software Engineer on the Autopilot team at AWS. He has 8+ years of experience as a software developer and is passionate about building scalable software solutions that solve customer problems. In his free time, Abhishek likes to stay active by going on hikes or joining pickup soccer games.

Vadim Omelchenko is a Sr. AI/ML Solutions Architect who is passionate about helping AWS customers innovate in the cloud. His prior IT experience was predominantly on premises.
