When a customer has a production-ready intelligent document processing (IDP) workload, we often receive requests for a Well-Architected review. To build an enterprise solution, developer resources, cost, time, and user experience have to be balanced to achieve the desired business outcome. The AWS Well-Architected Framework provides a systematic way for organizations to learn operational and architectural best practices for designing and operating reliable, secure, efficient, cost-effective, and sustainable workloads in the cloud.

The IDP Well-Architected Custom Lens follows the AWS Well-Architected Framework, reviewing the solution against six pillars at the granularity of a specific AI or machine learning (ML) use case, and providing guidance to tackle common challenges. The IDP Well-Architected Custom Lens in the Well-Architected Tool contains questions regarding each of the pillars. By answering these questions, you can identify potential risks and resolve them by following your improvement plan.

This post focuses on the Performance Efficiency pillar of the IDP workload. We dive deep into designing and implementing the solution to optimize for throughput, latency, and overall performance. We start with discussing some common indicators that you should conduct a Well-Architected review, and introduce the fundamental approaches with design principles. Then we go through each focus area from a technical perspective.

To follow along with this post, you should be familiar with the previous posts in this series (Part 1 and Part 2) and the guidelines in Guidance for Intelligent Document Processing on AWS. These resources introduce common AWS services for IDP workloads and suggested workflows. With this knowledge, you're now ready to learn more about productionizing your workload.

Common indicators

The following are common indicators that you should conduct a Well-Architected Framework review for the Performance Efficiency pillar:

- High latency – When the latency of optical character recognition (OCR), entity recognition, or the end-to-end workflow is higher than your previous benchmark, this may be an indicator that the architecture design doesn't cover load testing or error handling.

- Frequent throttling – You may experience throttling by AWS services like Amazon Textract due to request limits. This means that the architecture needs to be adjusted by reviewing the architecture workflow, synchronous and asynchronous implementation, transactions per second (TPS) calculation, and more.

- Debugging difficulties – When there’s a document process failure, you may not have an effective way to identify where the error is located in the workflow, which service it’s related to, and why the failure occurred. This means the system lacks visibility into logs and failures. Consider revisiting the logging design of the telemetry data and adding infrastructure as code (IaC), such as document processing pipelines, to the solution.

| Indicators | Description | Architectural Gap |
| --- | --- | --- |
| High Latency | OCR, entity recognition, or end-to-end workflow latency exceeds previous benchmark | Load testing, error handling |
| Frequent Throttling | Throttling by AWS services like Amazon Textract due to request limits | Synchronous vs. asynchronous implementation, TPS calculation |
| Hard to Debug | No visibility into location, cause, and reason for document processing failures | Logging design, document processing pipelines |

Design principles

In this post, we discuss three design principles: delegating complex AI tasks, IaC architectures, and serverless architectures. When you encounter a trade-off between two implementations, you can revisit the design principles with the business priorities of your organization so that you can make decisions effectively.

- Delegating complex AI tasks – You can enable faster AI adoption in your organization by offloading the ML model development lifecycle to managed services and taking advantage of the model development and infrastructure provided by AWS. Rather than requiring your data science and IT teams to build and maintain AI models, you can use pre-trained AI services that can automate tasks for you. This allows your teams to focus on higher-value work that differentiates your business, while the cloud provider handles the complexity of training, deploying, and scaling the AI models.

- IaC architectures – An IDP solution uses multiple AI services to perform the end-to-end workflow in sequence. You can architect the solution with workflow pipelines using AWS Step Functions to enhance fault tolerance, parallel processing, visibility, and scalability. These advantages can enable you to optimize the usage and cost of underlying AI services.

- Serverless architectures – IDP is often an event-driven solution, initiated by user uploads or scheduled jobs. The solution can be horizontally scaled out by increasing the call rates for the AI services, AWS Lambda, and other services involved. A serverless approach provides scalability without over-provisioning resources, preventing unnecessary expenses. The monitoring behind the serverless design assists in detecting performance issues.

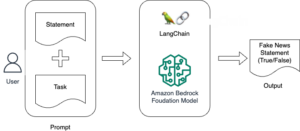

Figure 1. The benefits of applying the design principles.

With these three design principles in mind, organizations can establish an effective foundation for AI/ML adoption on cloud platforms. By delegating complexity, implementing resilient infrastructure, and designing for scale, organizations can optimize their AI/ML solutions.
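To make the IaC principle more concrete, the following is a minimal sketch of a Step Functions workflow definition expressed as a Python dictionary in Amazon States Language. It fans out over a list of documents with a bounded concurrency and retries a failed extraction task with backoff. The state names, the `$.documents` input path, the retry values, and the Lambda ARN passed in are illustrative assumptions, not part of the original solution.

```python
import json
from typing import Any, Dict


def build_definition(extract_lambda_arn: str, max_concurrency: int = 5) -> Dict[str, Any]:
    """Return an illustrative Amazon States Language definition.

    All state names and numeric settings are placeholders for this sketch.
    """
    return {
        "Comment": "Illustrative IDP pipeline sketch",
        "StartAt": "ProcessDocuments",
        "States": {
            "ProcessDocuments": {
                # Map state: run the inner workflow once per input document.
                "Type": "Map",
                "ItemsPath": "$.documents",
                "MaxConcurrency": max_concurrency,
                "Iterator": {
                    "StartAt": "ExtractText",
                    "States": {
                        "ExtractText": {
                            "Type": "Task",
                            "Resource": extract_lambda_arn,
                            # Retry failed tasks with exponential backoff.
                            "Retry": [{
                                "ErrorEquals": ["States.TaskFailed"],
                                "IntervalSeconds": 2,
                                "MaxAttempts": 3,
                                "BackoffRate": 2.0,
                            }],
                            # After retries are exhausted, record the failure
                            # instead of failing the whole batch.
                            "Catch": [{
                                "ErrorEquals": ["States.ALL"],
                                "Next": "RecordFailure",
                            }],
                            "End": True,
                        },
                        "RecordFailure": {"Type": "Pass", "End": True},
                    },
                },
                "End": True,
            }
        },
    }


if __name__ == "__main__":
    # Placeholder ARN for illustration only.
    arn = "arn:aws:lambda:us-east-1:123456789012:function:extract-text"
    print(json.dumps(build_definition(arn), indent=2))
```

Keeping the definition in code means the retry and concurrency settings can be reviewed and versioned alongside the rest of the infrastructure.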

In the following sections, we discuss how to address common challenges in each technical focus area.

Focus areas

When reviewing performance efficiency, we review the solution from five focus areas: architecture design, data management, error handling, system monitoring, and model monitoring. With these focus areas, you can conduct an architecture review from different aspects to enhance the effectiveness, observability, and scalability of the three components of an AI/ML project: data, model, and business goal.

Architecture design

By going through the questions in this focus area, you will review the existing workflow to see if it follows best practices. The suggested workflow provides a common pattern that organizations can follow and prevents trial-and-error costs.

Based on the proposed architecture, the workflow follows the six stages of data capture, classification, extraction, enrichment, review and validation, and consumption. In the common indicators we discussed earlier, two out of three come from architecture design problems. This is because when you start a project with an improvised approach, you may run into project constraints when trying to align your infrastructure to your solution. With the architecture design review, the improvised design can be decoupled into stages, and each of them can be reevaluated and reordered.

You can save time, money, and labor by implementing classification in your workflow, so that documents go to downstream applications and APIs based on document type. This enhances the observability of the document process and makes the solution straightforward to maintain when adding new document types.
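As a rough illustration of classification-based routing, the following sketch dispatches a document to a type-specific handler and falls back to human review for unknown types or low-confidence predictions. The document types, handler names, and the 0.8 confidence cutoff are hypothetical and chosen only for this example.

```python
from typing import Callable, Dict


# Hypothetical downstream handlers. In a real workload these might enqueue
# a message or start a type-specific extraction workflow.
def process_invoice(doc_id: str) -> str:
    return f"invoice-pipeline:{doc_id}"


def process_bank_statement(doc_id: str) -> str:
    return f"statement-pipeline:{doc_id}"


def send_to_human_review(doc_id: str) -> str:
    return f"human-review:{doc_id}"


# Routing table keyed by the label produced by the classification stage.
ROUTES: Dict[str, Callable[[str], str]] = {
    "invoice": process_invoice,
    "bank_statement": process_bank_statement,
}


def route_document(doc_id: str, doc_type: str, confidence: float,
                   min_confidence: float = 0.8) -> str:
    """Send a classified document to the handler for its type.

    Unknown types and low-confidence predictions go to human review.
    """
    handler = ROUTES.get(doc_type)
    if handler is None or confidence < min_confidence:
        return send_to_human_review(doc_id)
    return handler(doc_id)
```

With this shape, supporting a new document type only requires adding one entry to the routing table.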

Data management

Performance of an IDP solution includes latency, throughput, and the end-to-end user experience. How to manage the document and its extracted information in the solution is the key to data consistency, security, and privacy. Additionally, the solution must handle high data volumes with low latency and high throughput.

When going through the questions of this focus area, you will review the document workflow. This includes data ingestion, data preprocessing, converting documents to document types accepted by Amazon Textract, handling incoming document streams, routing documents by type, and implementing access control and retention policies.

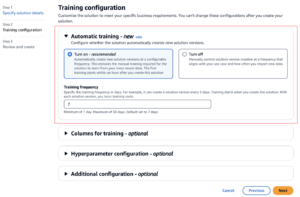

For example, by storing a document at each processing phase, you can roll back to the previous step if needed. Managing the data lifecycle helps ensure reliability and compliance for the workload. By using the Amazon Textract Service Quotas Calculator (see Figure 2), asynchronous features on Amazon Textract, Lambda, Step Functions, Amazon Simple Queue Service (Amazon SQS), and Amazon Simple Notification Service (Amazon SNS), organizations can automate and scale document processing tasks to meet specific workload needs.

Figure 2. Amazon Textract Service Quota Calculator.
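The arithmetic behind a TPS estimate can be sketched in a few lines. This is a back-of-the-envelope calculation under simplifying assumptions (evenly spread load, a fixed number of API calls per document); it does not reproduce the logic of the Amazon Textract Service Quotas Calculator, and the quota value you pass in must come from your own account limits.

```python
def required_tps(documents_per_hour: int, api_calls_per_document: float) -> float:
    """Average transactions per second needed for the given hourly volume."""
    return documents_per_hour * api_calls_per_document / 3600.0


def within_quota(documents_per_hour: int, api_calls_per_document: float,
                 quota_tps: float) -> bool:
    """True if the average required TPS fits under the supplied quota."""
    return required_tps(documents_per_hour, api_calls_per_document) <= quota_tps


def hours_to_process(total_documents: int, api_calls_per_document: float,
                     quota_tps: float) -> float:
    """Lower bound on hours needed to drain a backlog at the quota rate."""
    total_calls = total_documents * api_calls_per_document
    return total_calls / quota_tps / 3600.0


if __name__ == "__main__":
    # Example inputs are invented for illustration.
    print(required_tps(36000, 2))
    print(within_quota(36000, 2, quota_tps=10))
    print(hours_to_process(36000, 2, quota_tps=10))
```

If the required TPS exceeds the quota, the options discussed in this section apply: queue the work with Amazon SQS, move to asynchronous operations, or spread the load over a longer window.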

Error handling

Robust error handling is critical for tracking the document process status, and it gives the operations team time to react to any abnormal behaviors, such as unexpected document volumes, new document types, or other unplanned issues from third-party services. From the organization's perspective, proper error handling can enhance system uptime and performance.

You can break down error handling into two key aspects:

- AWS service configuration – You can implement retry logic with exponential backoff to handle transient errors like throttling. When you start processing by calling an asynchronous Start* operation, such as StartDocumentTextDetection, you can specify that the completion status of the request is published to an SNS topic in the NotificationChannel configuration. This helps you avoid throttling limits on API calls due to polling the Get* APIs. You can also set up Amazon CloudWatch alarms and triggers to alert you when unusual error spikes occur.

- Error report enhancement – This includes detailed messages with an appropriate level of detail by error type and descriptions of error handling responses. With the proper error handling setup, systems can be more resilient by implementing common patterns like automatically retrying intermittent errors, using circuit breakers to handle cascading failures, and monitoring services to gain insight into errors. This allows the solution to enforce retry limits and prevents never-ending retry loops.
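The two ideas above, bounded retries with exponential backoff and completion notifications through SNS, can be sketched as follows. `ThrottledError`, `call_with_backoff`, and `build_start_request` are illustrative names introduced here, and the delay values are assumptions. The request dictionary mirrors the `DocumentLocation` and `NotificationChannel` parameters of StartDocumentTextDetection; mapping real SDK throttling errors onto `ThrottledError` is left to the caller.

```python
import random
import time
from typing import Callable, Dict, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Stand-in for a transient error such as a throttling response."""


def call_with_backoff(operation: Callable[[], T], max_attempts: int = 5,
                      base_delay: float = 0.5, max_delay: float = 20.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Run operation, retrying on ThrottledError with jittered backoff.

    The retry budget is bounded, so a persistent failure is re-raised
    instead of looping forever.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return operation()
        except ThrottledError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # retry budget exhausted
            # Capped exponential delay with full jitter.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))


def build_start_request(bucket: str, key: str, sns_topic_arn: str,
                        role_arn: str) -> Dict[str, Dict]:
    """Build keyword arguments for an asynchronous text detection job.

    With boto3 this could be passed as
    boto3.client("textract").start_document_text_detection(**request).
    """
    return {
        "DocumentLocation": {"S3Object": {"Bucket": bucket, "Name": key}},
        # Completion status is published to this topic, so the caller
        # does not need to poll the Get* API.
        "NotificationChannel": {"SNSTopicArn": sns_topic_arn, "RoleArn": role_arn},
    }
```

The `sleep` parameter is injectable so the retry behavior can be exercised in tests without real waiting.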

Model monitoring

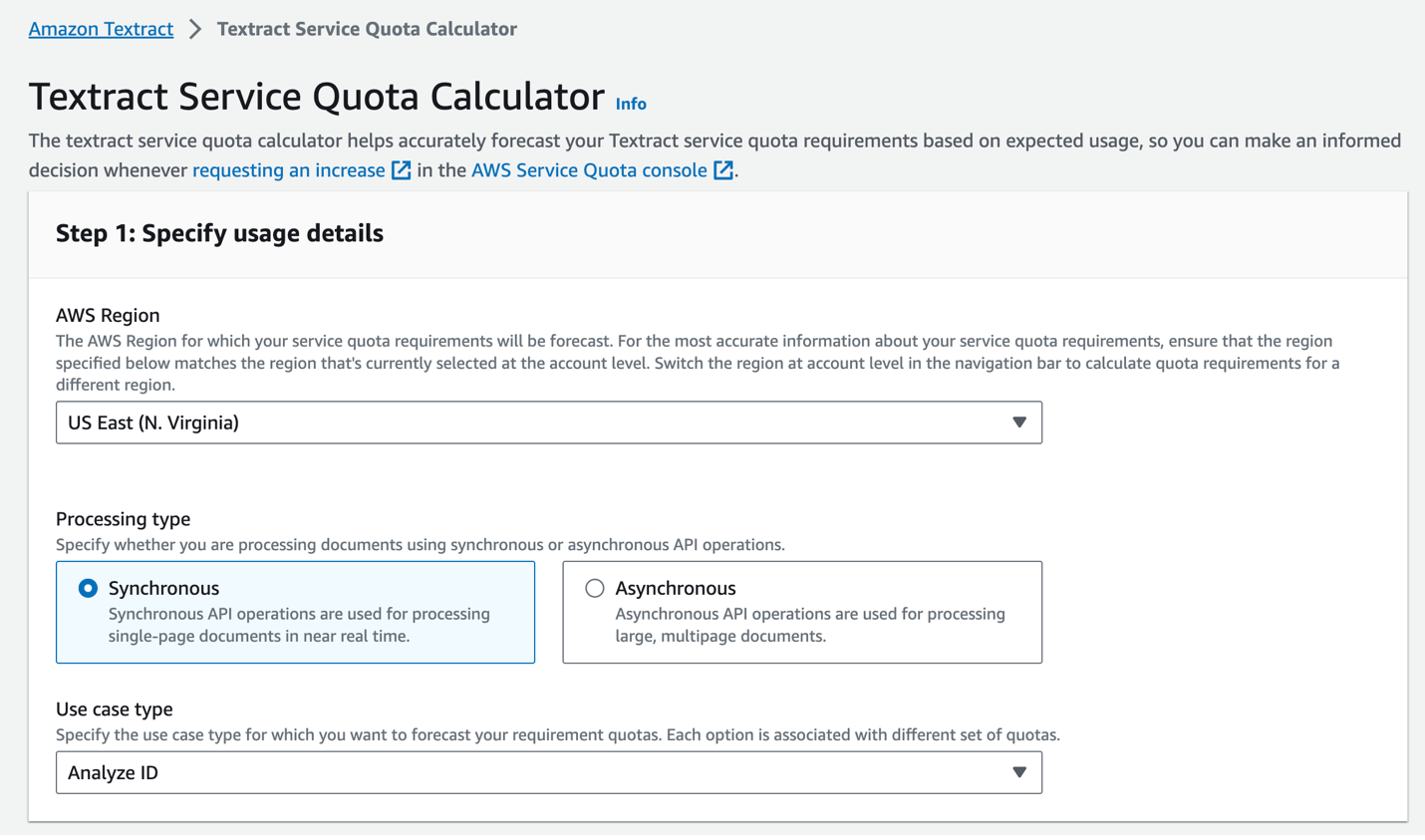

The performance of ML models is monitored for degradation over time. As data and system conditions change, the model performance and efficiency metrics are tracked to ensure retraining is performed when needed.

The ML model in an IDP workflow can be an OCR model, entity recognition model, or classification model. The model can come from an AWS AI service, an open source model on Amazon SageMaker, Amazon Bedrock, or other third-party services. You must understand the limitations and use cases of each service in order to identify ways to improve the model with human feedback and enhance service performance over time.

A common approach is using service logs to understand different levels of accuracy. These logs can help the data science team identify and understand any need for model retraining. Your organization can choose the retraining mechanism: it can be quarterly, monthly, or based on science metrics, such as when accuracy drops below a given threshold.
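A metric-based retraining trigger can be sketched as a rolling accuracy check. The class below is a hypothetical helper, not part of any AWS service: it assumes each prediction is eventually labeled correct or incorrect (for example, through human review), and the threshold, window size, and minimum sample count are placeholders to tune for your workload.

```python
from collections import deque
from typing import Deque, Optional


class AccuracyMonitor:
    """Track accuracy over the most recent predictions."""

    def __init__(self, threshold: float, window: int = 100,
                 min_samples: int = 20) -> None:
        self.threshold = threshold
        self.min_samples = min_samples
        # Only the latest `window` outcomes are kept.
        self._outcomes: Deque[bool] = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        """Store whether a reviewed prediction was correct."""
        self._outcomes.append(correct)

    def accuracy(self) -> Optional[float]:
        """Rolling accuracy, or None if nothing has been recorded yet."""
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def needs_retraining(self) -> bool:
        """True once enough samples exist and accuracy is below threshold."""
        if len(self._outcomes) < self.min_samples:
            return False
        return self.accuracy() < self.threshold
```

Requiring a minimum number of samples avoids triggering retraining on the first few documents after a deployment.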

The goal of monitoring is not just detecting issues, but closing the loop to continuously refine models and keep the IDP solution performing as the external environment evolves.

System monitoring

After you deploy the IDP solution in production, it's important to monitor key metrics and automation performance to identify areas for improvement. The metrics should include business metrics and technical metrics. This allows the company to evaluate the system's performance, identify issues, and make improvements to models, rules, and workflows over time to increase the automation rate and understand the operational impact.

On the business side, metrics like extraction accuracy for important fields, overall automation rate indicating the percentage of documents processed without human intervention, and average processing time per document are paramount. These business metrics help quantify the end-user experience and operational efficiency gains.

Technical metrics, including error and exception rates occurring throughout the workflow, are essential to track from an engineering perspective. Technical metrics can also be monitored at each level from end to end to provide a comprehensive view of a complex workload. You can break the metrics down into different levels, such as solution level, end-to-end workflow level, document type level, document level, entity recognition level, and OCR level.
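As a small illustration of how the business and technical metrics above could be derived from per-document records, consider the following sketch. The `DocumentResult` fields and stage names are assumptions made for this example; a real workload would populate them from its own logs or workflow outputs.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class DocumentResult:
    doc_type: str
    processing_seconds: float
    human_reviewed: bool
    # Stage where processing failed (for example "ocr"), or None on success.
    failed_stage: Optional[str] = None


def summarize(results: List[DocumentResult]) -> Dict[str, Any]:
    """Compute automation rate, average processing time, and error rates."""
    total = len(results)
    if total == 0:
        return {"automation_rate": None, "avg_processing_seconds": None,
                "error_rate": None, "failures_by_stage": {}}
    succeeded = [r for r in results if r.failed_stage is None]
    # Automated means processed successfully without human intervention.
    automated = [r for r in succeeded if not r.human_reviewed]
    failures = Counter(r.failed_stage for r in results if r.failed_stage)
    return {
        "automation_rate": len(automated) / total,
        "avg_processing_seconds": sum(r.processing_seconds for r in results) / total,
        "error_rate": (total - len(succeeded)) / total,
        "failures_by_stage": dict(failures),
    }
```

Grouping the same records by `doc_type` before calling `summarize` gives the document-type-level view described above.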

Now that you have reviewed all the questions in this pillar, you can assess the other pillars and develop an improvement plan for your IDP workload.

Conclusion

In this post, we discussed common indicators that you may need to perform a Well-Architected Framework review for the Performance Efficiency pillar for your IDP workload. We then walked through design principles to provide a high-level overview and discuss the solution goal. By following these suggestions in reference to the IDP Well-Architected Custom Lens and by reviewing the questions by focus area, you should now have a project improvement plan.

About the authors

Mia Chang is an ML Specialist Solutions Architect at Amazon Web Services. She works with customers in EMEA and shares best practices for running AI/ML workloads in the cloud, drawing on her background in applied mathematics, computer science, and AI/ML. She focuses on NLP-specific workloads and shares her experience as a conference speaker and book author. In her free time, she enjoys hiking, board games, and brewing coffee.

Brijesh Pati is an Enterprise Solutions Architect at AWS. His primary focus is helping enterprise customers adopt cloud technologies for their workloads. He has a background in application development and enterprise architecture and has worked with customers from various industries such as sports, finance, energy, and professional services. His interests include serverless architectures and AI/ML.

Rui Cardoso is a Partner Solutions Architect at Amazon Web Services (AWS). He focuses on AI/ML and IoT. He works with AWS Partners and supports them in developing solutions on AWS. When not working, he enjoys cycling, hiking, and learning new things.

Tim Condello is a Senior Artificial Intelligence (AI) and Machine Learning (ML) Specialist Solutions Architect at Amazon Web Services (AWS). His focus is natural language processing and computer vision. Tim enjoys taking customer ideas and turning them into scalable solutions.

Sherry Ding is a Senior Artificial Intelligence (AI) and Machine Learning (ML) Specialist Solutions Architect at Amazon Web Services (AWS). She holds a PhD in computer science in the area of machine learning. She mainly works with public sector customers on various AI/ML-related business challenges, helping them accelerate their machine learning journey on the AWS Cloud. When not helping customers, she enjoys outdoor activities.

Suyin Wang is an AI/ML Specialist Solutions Architect at AWS. She has an interdisciplinary education background in machine learning, financial information services, and economics, along with years of experience building data science and machine learning applications that solved real-world business problems. She enjoys helping customers identify the right business questions and build the right AI/ML solutions. In her spare time, she loves singing and cooking.

Source: https://aws.amazon.com/blogs/machine-learning/build-well-architected-idp-solutions-with-a-custom-lens-part-4-performance-efficiency/
