Introduction

On March 15, intriguing seminar announcements sent rumblings through the field of combinatorics, the mathematical study of counting. Three collaborators planned to give coordinated talks the following day. Julian Sahasrabudhe would address mathematicians in Cambridge, England, while Simon Griffiths would share the news in Rio de Janeiro and Marcelo Campos in São Paulo. All three talks sported identical titles and cryptic, two-sentence abstracts referencing “recent progress on an old problem of Erdős.” While Paul Erdős, a Hungarian mathematician who died in 1996, posed hundreds of problems during his career, combinatorists quickly divined the specific issue that the trio was planning to talk about. Rumors swirled about something called the Ramsey number, one of the most difficult quantities to calculate in all of mathematics.

Ramsey numbers have spawned a whole discipline called Ramsey theory, which looks for inescapable patterns in a huge range of systems.

For example, say you try to spread all the integers among a number of buckets, and you want to avoid placing sequences of evenly spaced numbers in any of the buckets. Ramsey theory shows that you will fail (unless you have infinitely many buckets). The theory can be applied to most anything you can count. Its central lesson is that “you cannot create a completely chaotic system,” said Benny Sudakov, a mathematician at the Swiss Federal Institute of Technology Zurich.

The Ramsey number quantifies how big a paradigmatic example has to be before particular patterns inevitably arise. But despite its centrality, no one has been able to calculate the Ramsey number for all but the simplest instances. The best that they have been able to do is find limits (or bounds) on what it might be. Even then, the upper bound first established by Erdős and a collaborator nearly a century ago had barely budged.

Then, in the March 15 seminars, and in a paper posted later that evening, the researchers announced that they had improved the upper bound on the Ramsey number by an exponential amount.

Introduction

“I was floored,” said Yuval Wigderson, a mathematician at Tel Aviv University, on hearing about the new result. “I was literally shaking for half an hour to an hour.”

The Party Lines

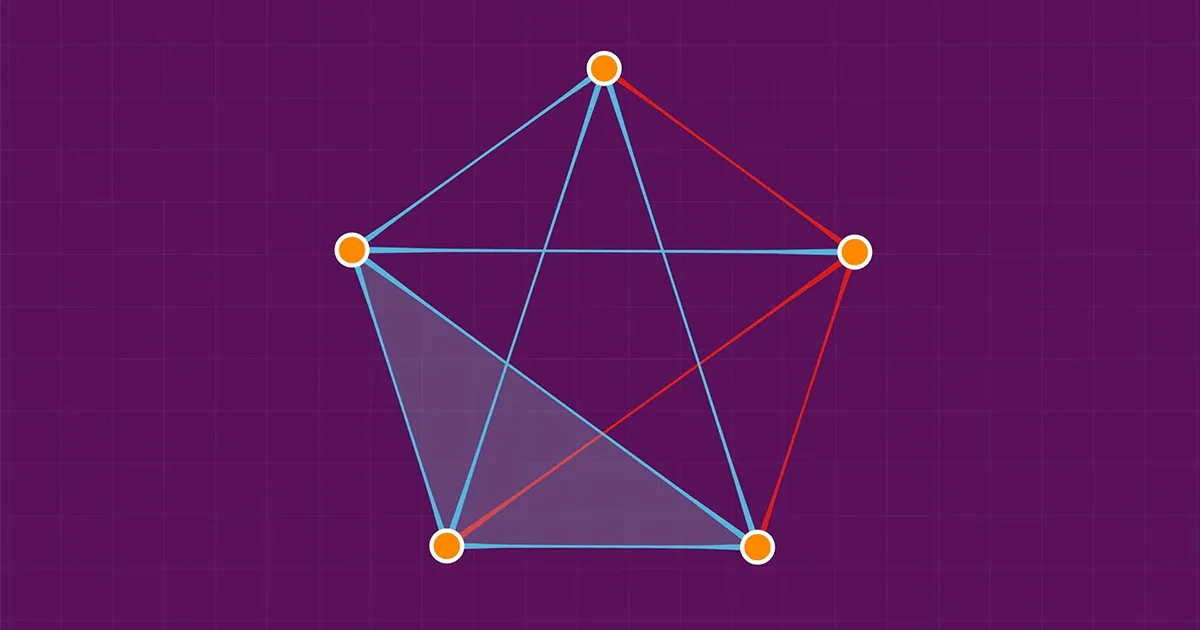

Ramsey theory most commonly asks questions either about the integers or about graphs. A graph, in this context, refers to collections of points called nodes, connected by lines called edges, which can have properties like length or — as in the case of the Ramsey numbers — color.

A complete graph is both complicated and simple — every node is connected to every other node. The Ramsey number describes how many nodes a complete graph must contain to be forced to have a particular structure. Say the edges of a complete graph are assigned one of two colors: red or blue. And say you try to color the edges in a way that avoids connecting a group of nodes with edges of the same color. In 1930, Frank Ramsey proved that if a graph is big enough, it becomes impossible to avoid creating what mathematicians call a monochromatic clique — a group of nodes whose common edges are either all red, or all blue.

How big, exactly, must a graph be before a monochromatic clique is forced to emerge? The answer depends on the size of the clique. Ramsey showed that there exists a number, now called the Ramsey number, representing the minimum number of nodes for which a monochromatic clique of a given size must exist, no matter how the edges are colored.

But the size of the Ramsey number is hard to pin down. In 1935, five years after Ramsey showed it exists, Erdős and George Szekeres provided a new, tighter upper bound on how big the Ramsey number is for a clique of a given size. But since then, mathematicians have barely been able to improve on Erdős and Szekeres’ calculation.

To get a better intuition for what this means, consider a classic example, in which nodes represent guests at a party. Color the edge between any two guests red if they’ve met before, and blue if they haven’t. You can pick any clique size you like — invite enough people to the party, and you can’t avoid either inviting a group of people who all know one another (a clique in multiple senses of the word) or inviting a group of people who have never met before.

“The simplest thing you can have in a graph is a monochromatic clique,” said Maria Chudnovsky, a mathematician at Princeton University. “It’s kind of amazing that in every huge graph you can find a big one of those. It’s completely not clear.”

The first few Ramsey numbers are relatively simple to calculate. Let’s say you want to know the size of the smallest graph that must unavoidably hold a clique of size two, or R(2) to mathematicians. Since a complete graph with two nodes is just two nodes connected by an edge, and that edge has to be either red or blue, R(2) is 2. More generally, R(k), or the Ramsey number of k, is the minimum number of nodes beyond which a graph can’t avoid containing a clique of size k.

It isn’t so hard to show that the Ramsey number for a clique of size 3, or R(3), is 6 (see graphic). But it wasn’t until 1955 that R(4) was pinned down at 18. R(5) remains unknown — it’s at least 43 and no bigger than 48. Though these numbers are small, sifting through all of the possible colorings is out of the question, said David Conlon of the California Institute of Technology. Consider the number of colorings on a complete graph with 43 nodes. “You have 903 edges; each of those can be colored in two ways,” he explained. “So you get 2903, which is just astronomically large.”

As the size of the clique increases, the task of nailing down the Ramsey number only gets more difficult. Erdős quipped that all-out war with mathematically demanding aliens would be easier than trying to figure out R(6), which is somewhere between 102 and 165. The range of uncertainty grows quickly: According to estimates compiled by Stanisław Radziszowski, R(10) could be as small as 798 and as big as 23,556. But mathematicians’ goals reach far beyond the Ramsey number of 10. They want a formula that will give a good estimate of R(k), even — or especially — when k is extremely large.

“I don’t know of a person in combinatorics who has not thought about this problem at least a little bit,” Wigderson said. “This problem is, I think, really special.”

Introduction

Order in the Court

Frank Ramsey was an eclectic, brilliant figure who died when he was 26 years old. Just weeks before he died, the Proceedings of the London Mathematical Society published the paper in which he introduced Ramsey numbers. That work wasn’t even about graphs, but about a problem in mathematical logic. Ramsey proved that a statement that satisfies certain conditions has to be true at least some of the time. One of those conditions was that there be a large “universe” of scenarios to test the statement in. As a steppingstone to this result, Ramsey showed that the Ramsey number is finite.

Five years later, Erdős and Szekeres showed that the Ramsey number of k is less than 4k. And 12 years after that, Erdős showed that it is bigger than about $latex sqrt{2}^k$. To do that, he calculated the chances that a graph with randomly colored edges contains a monochromatic clique. This “probabilistic” technique became massively influential in graph theory. It also trapped R(k) between two exponentially growing goalposts: $latex sqrt{2}^k$ and $latex 4^k$.

As the decades slid by, numerous mathematicians attempted to narrow the gap between the possible values of the Ramsey number. Some succeeded: In 1975, Joel Spencer doubled the lower limit. And a series of papers by Conlon, Andrew Thomason and Ashwin Sah pushed down the upper limit by a factor of about $latex 4^{log(k)^2}$ by 2020. But compared to the sizes of the bounds on the Ramsey number, these adjustments were small. By contrast, any reduction to the 4 in Erdős and Szekeres’ formula R(k) < 4k would be an exponential improvement, growing quickly as k gets bigger.

Introduction

“It seems like just a cute little question,” said Rob Morris, a mathematician at IMPA, Brazil’s Institute for Pure and Applied Mathematics, in Rio de Janeiro, who co-authored the new result with Campos, Griffiths and Sahasrabudhe. “It’s a little subtle to appreciate. But people really care about it.” This is possibly an understatement. “Had they proved it in 1936, people would have said, OK, so what’s the big deal?” said Béla Bollobás, who was Morris and Sahasrabudhe’s doctoral adviser at the University of Memphis. “Since then, it has been proved that it’s a very big problem, because over the years, several thousand papers have been written on various variants of the Ramsey problem.” As Liana Yepremyan, a mathematician at Emory University, said, “The Ramsey numbers create that bridge between combinatorics and probability and geometry.”

Game Theory

In August 2018, Sahasrabudhe was a postdoctoral fellow under Morris at IMPA. The two were hoping to start a new project with Griffiths, who teaches at the nearby Pontifical Catholic University, when a paper by Conlon caught their attention. The paper outlined a possible strategy for getting an exponential improvement on the Ramsey number. Griffiths, Morris and Sahasrabudhe began playing with the idea.

“It was really exciting in the beginning,” Sahasrabudhe recalled. It only took them about a month, he said, to lay out a sketch of their argument.

Their plan was to build on ideas used in Erdős and Szekeres’ original proof that $latex R(k) < 4^k$. To prove that the Ramsey number is at most $latex 4^k$, imagine playing a game on a complete graph with $latex 4^k$ nodes. The game has two players. First, your opponent colors each edge either red or blue, hoping to color the edges in a way that avoids creating a monochromatic clique of k nodes.

Once your opponent is done coloring, it’s your job to search for a monochromatic clique. If you find one, you win.

To win this game, you can follow a simple strategy. It helps to think (metaphorically) about sorting your nodes into two buckets. The nodes in one bucket will form a blue clique, and the nodes in the other will form a red clique. Some nodes will be deleted, never to be heard from again. At the beginning, both buckets are empty, and every node is a candidate to go into either one.

Introduction

Start with any node that strikes your fancy. Then look at the connecting edges. If half or more of the edges are red, then delete all the blue edges and the nodes they are connected to. Then put your original choice into the “red” bucket. All of this node’s red edges are still alive and well, clinging onto the rest of the graph from inside the bucket. If more than half of the edges are blue, you analogously delete the red edges and nodes, and put it in the blue bucket.

Repeat until you have no nodes left. (Since the graph is complete, every remaining node at any point is connected to both buckets until it is placed into one of them.)

When you’re done, look inside the buckets. Because a node went into the red bucket only after its blue neighbors were eliminated, all the nodes in the red bucket are connected by red edges — they form a red clique. Likewise, the blue bucket forms a blue clique. If your original graph has at least $latex 4^k$ nodes, it’s possible to prove that one of these buckets must contain at least k nodes, guaranteeing a monochromatic clique in the original graph.

This argument is clever and elegant, but it has you building two cliques — one blue and one red — even though you only really need one. It would be more efficient to always go red, Conlon explained. Under this strategy, you’d pick a node at each step, erase its blue edges, and throw it into the red bucket. The red bucket would then quickly fill up, and you’d amass a red clique of k nodes in half the time.

But your strategy has to work for any red-blue coloring, and it’s hard to know whether you can always find a node with lots of red edges. So following Conlon’s suggestion runs the risk of running into a node that has almost no red edges attached to it. That would force you to delete a huge portion of the graph at once, leaving you scrambling to build your clique before you run out of nodes. To carry out Conlon’s suggestion, Griffiths, Morris and Sahasrabudhe needed to prove that this risk was avoidable.

Introduction

An Open-Book Exam

In updating their gameplay, Griffiths, Morris and Sahasrabudhe followed a slightly more circuitous route. Rather than build a monochromatic clique directly by tossing nodes in their red and blue buckets, they first focused on building a structure called a red book.

A book, in graph theory, is made up of two parts: There’s a monochromatic clique, called the “spine,” and a second, distinct cluster of nodes called the “pages.” In a red book, all of the edges connecting nodes within the spine are red, as are the edges linking the spine to the pages. The edges connecting nodes within the pages, however, can be any combination of colors. Conlon had noted in his 2018 paper that if you can find a red clique inside the pages of a book, then you could combine it with the spine to get a larger red clique. This lets you decompose a search for a large red clique into two easier searches. First, look for a red book. Then look for a clique in the pages of the book.

Griffiths, Morris and Sahasrabudhe wanted to adjust the game-winning algorithm so that it built a red book instead of a red clique. Though they settled on this plan just weeks into their project, it would take years to get it to work. They still needed to stave off the threat of losing all their red edges.

“We were stuck for a very long time,” said Campos, who joined the project in 2021.

This January, the four mathematicians agreed to switch to another version of the problem. That version sounds more complicated, but it turned out to be easier.

All along, the team had been focused on the Ramsey number R(k), also known as the “diagonal” Ramsey number. A graph of size R(k) must contain k nodes, all connected by edges of the same color, but it doesn’t matter if that color is red or blue. On the other hand, the “off-diagonal” Ramsey number R(k, l) measures how big a graph must be before it contains either a red clique with k nodes, or a blue clique with l nodes. Instead of continuing to hack away at the diagonal problem, the group decided to try the off-diagonal version. This proved revelatory.

“For a long time, it felt like every door you pushed on was either bolted shut, or at least pretty difficult to get through,” Griffiths said. “And after that change, you just felt like every door was open. Somehow, everything just seemed to work.” In the off-diagonal version, they found a way to delete a bunch of blue edges at once following a particular protocol, which increased the density of red edges, and led to an improved bound on the off-diagonal Ramsey number. This method, called a “density increment” argument, had previously been used to solve other important problems in combinatorics, but it had not been used on the Ramsey number problem.

The four mathematicians then used the new bound on the off-diagonal Ramsey number to clear the way for the diagonal result. By the beginning of February, they had finally lowered the limit on the Ramsey number by an exponential factor, an achievement mathematicians had sought for nearly a century. And they did it by modernizing the same line of argument that Erdős and Szekeres had put forth in 1935.

Introduction

Epsilon, Epsilon

After the talks on March 16, attendees began to confirm the rumors. Photos from Sahasrabudhe’s talk circulated through phone calls and private messages — even in a vague but suggestive post on the mathematician Gil Kalai’s blog. Campos, Griffiths, Sahasrabudhe and Morris claimed to have shown that $latex R(k) < 3.993^k$. That night, the four authors posted their paper online, allowing mathematicians to see the new proof for themselves.

“I think many of us did not expect to see such an improvement in our lifetime, essentially,” said Matija Bucić, a mathematician at Princeton University and the Institute for Advanced Study. “I think it’s an absolutely amazing result.”

Many experts are hopeful that, with the exponential barrier felled, more progress will quickly follow. The authors of the new paper intentionally did not push their method to its limits, to avoid clouding their argument with unnecessary details. “I’m very interested in how far the method can actually go, because I have no idea,” Campos said.

“It’s an utterly ingenious, absolutely wonderful proof, and a genuine breakthrough. So now I expect the floodgates to be opened,” Bollobás said. “I am convinced that in three years’ time, the debate will be about possible improvements. Can we improve 3.993 to 3.9? Maybe to 3.4? And what about 3?”

The new proof comes in at 56 pages, and it will take weeks for every detail to be fully verified by the combinatorics community. But colleagues are optimistic. “This group of authors, they’re very serious people. And they’re people who are really, really good at very technical things,” Wigderson said.

When it comes to his collaborators, Griffiths agrees. “It’s a privilege to work with brilliant people, isn’t it? And I think that’s what I feel very grateful for,” he said. “If they’d left it to me, I might have taken another five years to get the details right.”

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoAiStream. Web3 Data Intelligence. Knowledge Amplified. Access Here.

- Minting the Future w Adryenn Ashley. Access Here.

- Source: https://www.quantamagazine.org/after-nearly-a-century-a-new-limit-for-patterns-in-graphs-20230502/

- :has

- :is

- :not

- ][p

- $UP

- 1

- 10

- 12

- 1930

- 1996

- 2018

- 2020

- 2021

- 23

- 26%

- 9

- a

- Able

- About

- about IT

- absolutely

- abstracts

- AC

- According

- achievement

- actually

- address

- advanced

- After

- again

- ago

- algorithm

- aliens

- All

- Allowing

- along

- also

- always

- am

- amazing

- among

- amount

- an

- and

- announced

- Announcements

- Another

- answer

- any

- applied

- appreciate

- ARE

- argument

- AS

- assigned

- At

- attempted

- attendees

- attention

- AUGUST

- authors

- avoid

- away

- barrier

- BE

- became

- because

- becomes

- been

- before

- began

- Beginning

- BEST

- Better

- between

- Beyond

- Big

- bigger

- Bit

- Blog

- Blue

- book

- both

- Bound

- breakthrough

- BRIDGE

- brilliant

- build

- Building

- built

- Bunch

- but

- by

- calculate

- calculated

- california

- call

- called

- Calls

- cambridge

- CAN

- candidate

- cannot

- care

- Career

- carry

- case

- caught

- central

- Centrality

- Century

- certain

- chances

- change

- choice

- claimed

- classic

- clear

- Cluster

- colleagues

- collections

- color

- combination

- combine

- comes

- Common

- commonly

- community

- compared

- complete

- completely

- complicated

- conditions

- Confirm

- connected

- Connecting

- Consider

- contain

- contains

- context

- continuing

- contrast

- coordinated

- could

- create

- Creating

- David

- day

- deal

- debate

- decades

- decided

- demanding

- depends

- Despite

- detail

- details

- DID

- died

- difficult

- directly

- distinct

- do

- Doesn’t

- done

- Dont

- Door

- down

- during

- each

- easier

- eclectic

- Edge

- efficient

- either

- eliminated

- emerge

- England

- enough

- especially

- essentially

- established

- estimate

- Even

- evening

- Every

- everything

- exactly

- example

- exciting

- exist

- exists

- expect

- experts

- explained

- exponential

- exponentially

- extremely

- FAIL

- far

- February

- Federal

- feel

- fellow

- few

- field

- Figure

- fill

- Finally

- Find

- First

- focused

- follow

- followed

- following

- For

- Force

- form

- forms

- formula

- forth

- Forward

- found

- four

- from

- fully

- game

- gameplay

- gap

- generally

- genuine

- George

- get

- getting

- GitHub

- Give

- given

- Go

- Goals

- good

- graph

- Graphic

- graphs

- grateful

- Group

- Growing

- Grows

- guests

- hack

- had

- Half

- hand

- Hard

- Have

- he

- heard

- hearing

- helps

- his

- hold

- hopeful

- hoping

- hour

- How

- However

- http

- HTTPS

- huge

- Hungarian

- i

- idea

- ideas

- identical

- if

- imagine

- important

- impossible

- improve

- improved

- improvement

- improvements

- in

- increased

- Increases

- inevitably

- Influential

- instead

- Institute

- intentionally

- interested

- into

- introduced

- invite

- inviting

- issue

- IT

- ITS

- January

- Job

- joined

- just

- Kind

- Know

- known

- large

- larger

- later

- Leap

- least

- leaving

- Led

- Length

- lesson

- Lets

- lifetime

- like

- LIMIT

- limits

- Line

- lines

- linking

- little

- logic

- London

- Long

- long time

- Look

- LOOKS

- losing

- lower

- made

- many

- March

- massively

- math

- mathematical

- mathematically

- mathematics

- Matter

- means

- measures

- messages

- method

- might

- minimum

- Month

- more

- more efficient

- most

- multiple

- must

- nearly

- Need

- needed

- neighbors

- New

- news

- night

- no

- node

- nodes

- noted

- now

- number

- numbers

- numerous

- of

- off

- Old

- on

- once

- ONE

- only

- open

- opened

- Optimistic

- or

- original

- Other

- our

- out

- outlined

- over

- Paper

- papers

- particular

- parts

- party

- patterns

- Paul

- People

- person

- phone

- phone calls

- Photos

- pick

- placing

- plan

- planned

- planning

- plato

- Plato Data Intelligence

- PlatoData

- players

- playing

- Point

- points

- portion

- possible

- possibly

- posted

- pretty

- previously

- private

- Problem

- problems

- Progress

- project

- proof

- properties

- protocol

- Prove

- proved

- provided

- published

- Push

- pushed

- put

- Quantamagazine

- question

- Questions

- quickly

- range

- rather

- reach

- really

- Red

- refers

- relatively

- remaining

- remains

- represent

- representing

- researchers

- REST

- result

- rio de janeiro

- Risk

- Route

- Rumors

- Run

- running

- Said

- same

- say

- scenarios

- Search

- Second

- see

- seemed

- seems

- seminar

- Series

- serious

- Settled

- several

- Share

- Short

- show

- shown

- Shows

- Simple

- since

- Size

- sizes

- small

- So

- SOLVE

- some

- something

- somewhere

- special

- specific

- spread

- start

- Statement

- Step

- Still

- Strategy

- Strikes

- structure

- Study

- such

- Swiss

- Switch

- system

- Systems

- Take

- Talk

- Talks

- Task

- team

- Technical

- Technology

- Tel

- Tel Aviv

- test

- than

- that

- The

- The Graph

- their

- Them

- themselves

- then

- There.

- These

- they

- thing

- things

- think

- this

- those

- thought

- threat

- three

- Through

- tighter

- time

- titles

- to

- took

- true

- Turned

- two

- Uncertainty

- under

- university

- unknown

- updating

- us

- used

- Values

- various

- verified

- version

- very

- want

- wanted

- war

- was

- Way..

- ways

- we

- webp

- Weeks

- WELL

- were

- What

- when

- whether

- which

- while

- WHO

- whole

- will

- win

- with

- within

- wonderful

- Word

- WordPress

- Work

- would

- written

- years

- You

- Your

- zephyrnet

- Zurich