Amazon Comprehend is a natural-language processing (NLP) service that provides pre-trained and custom APIs to derive insights from textual data. Amazon Comprehend customers can train custom named entity recognition (NER) models to extract entities of interest, such as location, person name, and date, that are unique to their business.

To train a custom model, you first prepare training data by manually annotating entities in documents. This can be done with the Comprehend Semi-Structured Documents Annotation Tool, which creates an Amazon SageMaker Ground Truth job with a custom template, allowing annotators to draw bounding boxes around the entities directly on the PDF documents. However, for companies with existing tabular entity data in ERP systems like SAP, manual annotation can be repetitive and time-consuming.

To reduce the effort of preparing training data, we built a pre-labeling tool using AWS Step Functions that automatically pre-annotates documents by using existing tabular entity data. This significantly decreases the manual work needed to train accurate custom entity recognition models in Amazon Comprehend.

In this post, we walk you through the steps of setting up the pre-labeling tool and show examples of how it automatically annotates documents from a public dataset of sample bank statements in PDF format. The full code is available on the GitHub repo.

Solution overview

In this section, we discuss the inputs and outputs of the pre-labeling tool and provide an overview of the solution architecture.

Inputs and outputs

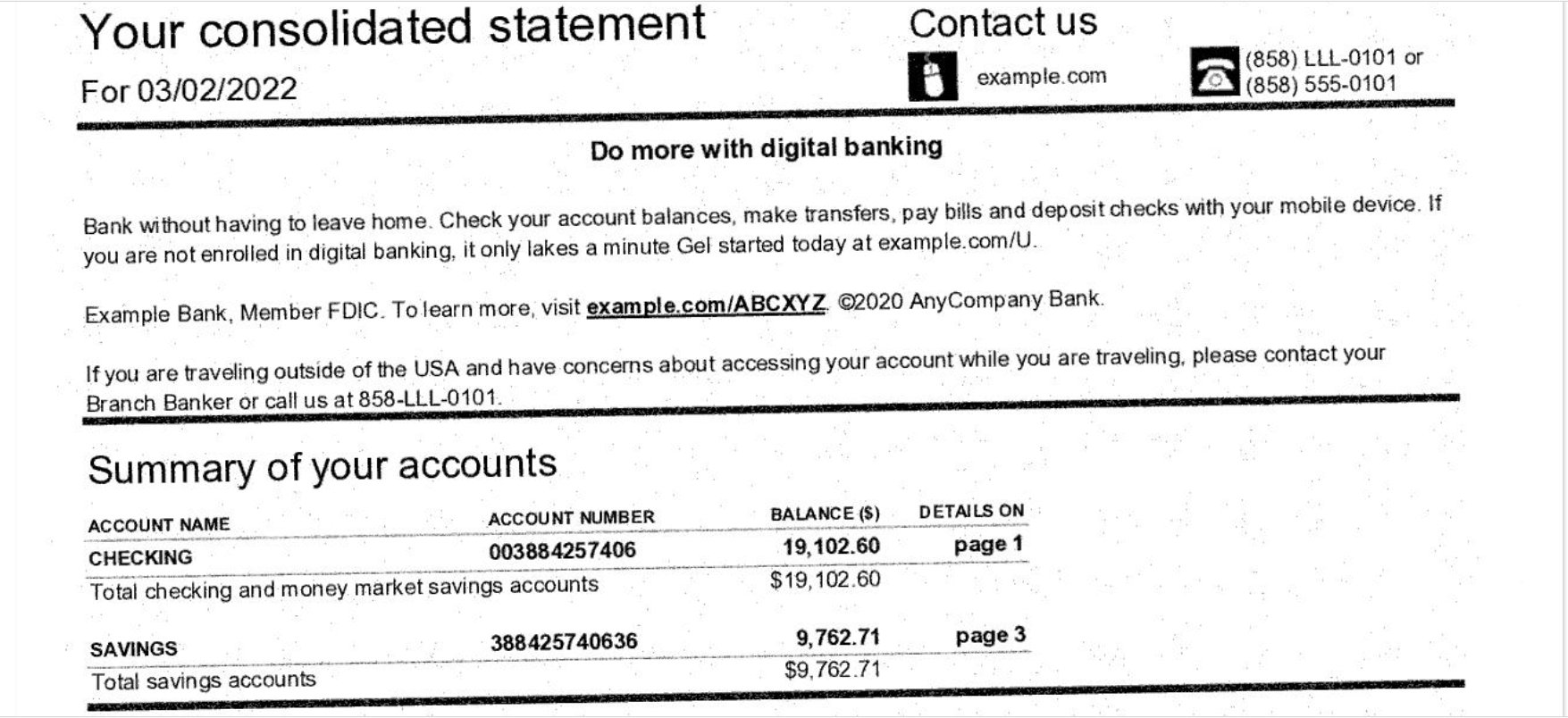

As input, the pre-labeling tool takes PDF documents that contain text to be annotated. For the demo, we use simulated bank statements like the following example.

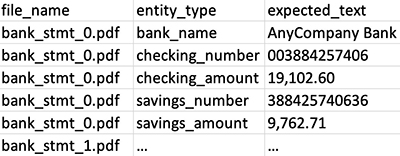

The tool also takes a manifest file that maps PDF documents to the entities that we want to extract from these documents. Each entity consists of two things: the expected_text to extract from the document (for example, AnyCompany Bank) and the corresponding entity_type (for example, bank_name). Later in this post, we show how to construct this manifest file from a CSV document like the following example.

The pre-labeling tool uses the manifest file to automatically annotate the documents with their corresponding entities. We can then use these annotations directly to train an Amazon Comprehend model.

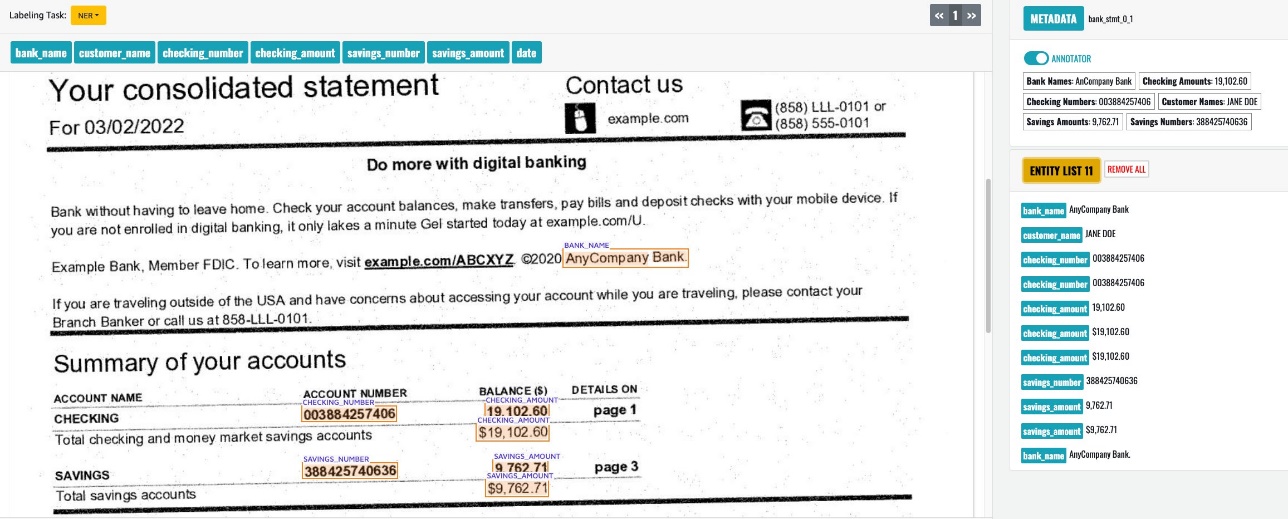

Alternatively, you can create a SageMaker Ground Truth labeling job for human review and editing, as shown in the following screenshot.

When the review is complete, you can use the annotated data to train an Amazon Comprehend custom entity recognizer model.

Architecture

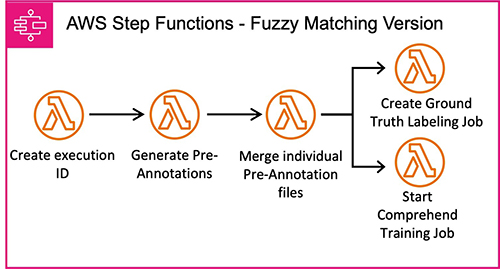

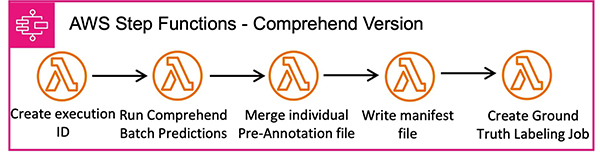

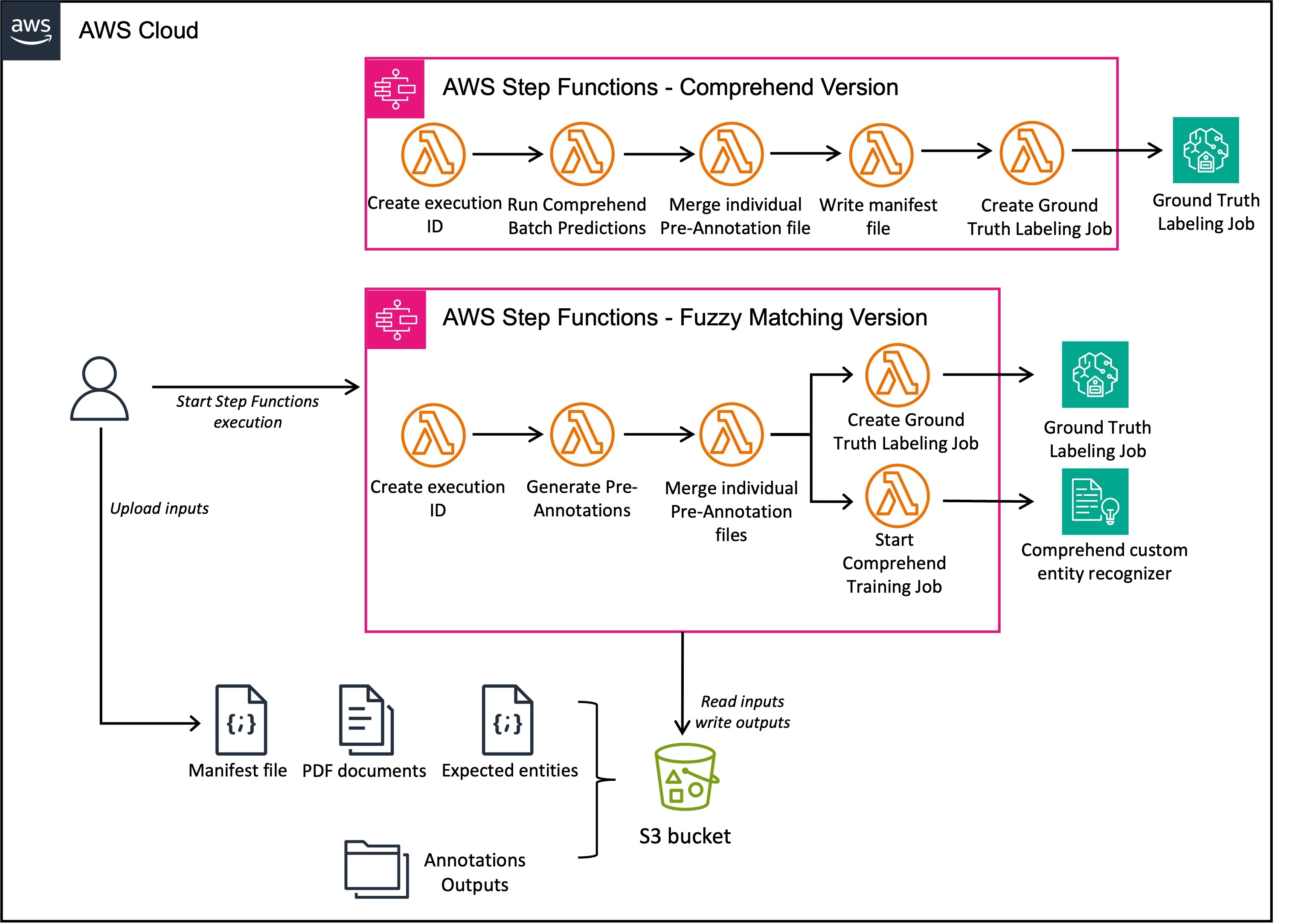

The pre-labeling tool consists of multiple AWS Lambda functions orchestrated by a Step Functions state machine. It has two versions that use different techniques to generate pre-annotations.

The first technique is fuzzy matching. This requires a pre-manifest file with expected entities. The tool uses the fuzzy matching algorithm to generate pre-annotations by comparing text similarity.

Fuzzy matching looks for strings in the document that are similar (but not necessarily identical) to the expected entities listed in the pre-manifest file. It first calculates text similarity scores between the expected text and words in the document, then keeps every pair that scores above a threshold. As a result, even when there are no exact matches, fuzzy matching can find variants such as abbreviations and misspellings, so the tool can pre-label documents without requiring the entities to appear verbatim. For example, if 'AnyCompany Bank' is listed as an expected entity, fuzzy matching will annotate occurrences of 'Any Companys Bank'. This provides more flexibility than strict string matching and enables the pre-labeling tool to automatically label more entities.
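To make the idea of threshold-based matching concrete, here is a minimal sketch that uses Python's standard difflib module. It is not the tool's actual implementation: the function names, the sliding-window strategy, and the 0.9 threshold are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a case-insensitive similarity ratio between 0 and 1."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def fuzzy_find(expected_text: str, words: list, threshold: float = 0.9) -> list:
    """Slide a window over the document words and keep spans scoring at or above the threshold.

    Returns (start_index, end_index_exclusive, matched_text, score) tuples,
    best score first. The window may be one word shorter or longer than the
    expected text so that split or merged words can still match.
    """
    n = len(expected_text.split())
    matches = []
    for size in sorted({max(1, n - 1), n, n + 1}):
        for start in range(len(words) - size + 1):
            candidate = " ".join(words[start:start + size])
            score = similarity(expected_text, candidate)
            if score >= threshold:
                matches.append((start, start + size, candidate, score))
    return sorted(matches, key=lambda m: m[3], reverse=True)


if __name__ == "__main__":
    # Hypothetical document text containing a misspelled variant of the entity.
    document_words = "Statement from Any Companys Bank for June".split()
    for start, end, text, score in fuzzy_find("AnyCompany Bank", document_words):
        print(f"words[{start}:{end}] = {text!r} (score {score:.2f})")
```

Lowering the threshold makes the matcher more tolerant but also produces more partial matches (here, 'Companys Bank' alone would start to qualify), which is one reason a human review step remains useful.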

The following diagram illustrates the architecture of this Step Functions state machine.

The second technique requires a pre-trained Amazon Comprehend entity recognizer model. The tool generates pre-annotations using the Amazon Comprehend model, following the workflow shown in the following diagram.

The following diagram illustrates the full architecture.

In the following sections, we walk through the steps to implement the solution.

Deploy the pre-labeling tool

Clone the repository to your local machine:

This repository has been built on top of the Comprehend Semi-Structured Documents Annotation Tool and extends its functionalities by enabling you to start a SageMaker Ground Truth labeling job with pre-annotations already displayed on the SageMaker Ground Truth UI.

The pre-labeling tool includes both the Comprehend Semi-Structured Documents Annotation Tool resources as well as some resources specific to the pre-labeling tool. You can deploy the solution with AWS Serverless Application Model (AWS SAM), an open source framework that you can use to define serverless application infrastructure code.

If you have previously deployed the Comprehend Semi-Structured Documents Annotation Tool, refer to the FAQ section in Pre_labeling_tool/README.md for instructions on how to deploy only the resources specific to the pre-labeling tool.

If you haven’t deployed the tool before and are starting fresh, do the following to deploy the whole solution.

Change the current directory to the annotation tool folder:

Build and deploy the solution:

Create the pre-manifest file

Before you can use the pre-labeling tool, you need to prepare your data. The main inputs are PDF documents and a pre-manifest file. The pre-manifest file contains the location of each PDF document under 'pdf' and the location of a JSON file with expected entities to label under 'expected_entities'.

The notebook generate_premanifest_file.ipynb shows how to create this file. In the demo, the pre-manifest file looks like the following:

Each JSON file listed in the pre-manifest file (under expected_entities) contains a list of dictionaries, one for each expected entity. The dictionaries have the following keys:

- ‘expected_texts’ – A list of possible text strings matching the entity.

- ‘entity_type’ – The corresponding entity type.

- ‘ignore_list’ (optional) – A list of words that should be ignored in the match. Use this parameter to prevent fuzzy matching from matching specific combinations of words that you know are wrong. This can be useful if you want to ignore some numbers or email addresses when looking at names.
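The structure described above can be sketched in Python as follows. This is an illustration only, not the code from generate_premanifest_file.ipynb: the helper names, the sample record, the S3 URIs, and the choice to write one JSON object per line in the pre-manifest are all assumptions. Only the keys pdf, expected_entities, expected_texts, entity_type, and ignore_list come from the description above.

```python
import json
from typing import Optional


def build_expected_entities(record: dict, ignore: Optional[dict] = None) -> list:
    """Turn one tabular record (entity_type -> text) into a list of expected-entity dicts."""
    ignore = ignore or {}
    entities = []
    for entity_type, text in record.items():
        entity = {"expected_texts": [text], "entity_type": entity_type}
        # ignore_list is optional, so add it only when something is configured.
        if entity_type in ignore:
            entity["ignore_list"] = ignore[entity_type]
        entities.append(entity)
    return entities


def build_premanifest_line(pdf_uri: str, expected_entities_uri: str) -> str:
    """Serialize one pre-manifest entry that points to a PDF and its expected-entities file."""
    return json.dumps({"pdf": pdf_uri, "expected_entities": expected_entities_uri})


if __name__ == "__main__":
    # Hypothetical row, as it might come from a CSV export of an ERP system.
    record = {"bank_name": "AnyCompany Bank", "customer_name": "Jane Doe"}
    entities = build_expected_entities(
        record, ignore={"customer_name": ["jane.doe@example.com"]}
    )
    print(json.dumps(entities, indent=2))
    # Placeholder S3 locations; replace them with your own bucket and keys.
    print(build_premanifest_line(
        "s3://amzn-s3-demo-bucket/pdfs/statement_1.pdf",
        "s3://amzn-s3-demo-bucket/expected_entities/statement_1.json",
    ))
```

Check the notebook in the repository for the exact file layout that the tool expects before adapting this sketch.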

For example, the expected_entities of the PDF shown previously looks like the following:

Run the pre-labeling tool

With the pre-manifest file that you created in the previous step, start running the pre-labeling tool. For more details, refer to the notebook start_step_functions.ipynb.

To start the pre-labeling tool, provide an event with the following keys:

- Premanifest – Maps each PDF document to its expected_entities file. This should contain the Amazon Simple Storage Service (Amazon S3) bucket (under bucket) and the key (under key) of the file.

- Prefix – Used to create the execution_id, which names the S3 folder for output storage and the SageMaker Ground Truth labeling job name.

- entity_types – Displayed in the UI for annotators to label. These should include all entity types in the expected entities files.

- work_team_name (optional) – Used for creating the SageMaker Ground Truth labeling job. It corresponds to the private workforce to use. If it’s not provided, only a manifest file will be created instead of a SageMaker Ground Truth labeling job. You can use the manifest file to create a SageMaker Ground Truth labeling job later on. Note that as of this writing, you can’t provide an external workforce when creating the labeling job from the notebook. However, you can clone the created job and assign it to an external workforce on the SageMaker Ground Truth console.

- comprehend_parameters (optional) – Parameters to directly train an Amazon Comprehend custom entity recognizer model. If omitted, this step will be skipped.
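As a rough sketch of how such an event could be assembled and submitted, consider the following. It is not the code from start_step_functions.ipynb. The helper names are hypothetical, the lowercase spelling of the premanifest and prefix keys is an assumption, and the bucket, key, and state machine ARN are placeholders. The only AWS call used is the Step Functions start_execution operation, which accepts the state machine ARN and the input as a JSON string.

```python
import json


def build_event(bucket, key, prefix, entity_types,
                work_team_name=None, comprehend_parameters=None) -> dict:
    """Assemble the input event, leaving out optional keys that were not provided."""
    event = {
        # Key casing is assumed; verify it against the notebook in the repository.
        "premanifest": {"bucket": bucket, "key": key},
        "prefix": prefix,
        "entity_types": entity_types,
    }
    if work_team_name is not None:
        event["work_team_name"] = work_team_name
    if comprehend_parameters is not None:
        event["comprehend_parameters"] = comprehend_parameters
    return event


def start_prelabeling(sfn_client, state_machine_arn: str, event: dict) -> str:
    """Start one run of the state machine and return the execution ARN."""
    response = sfn_client.start_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(event),
    )
    return response["executionArn"]


# Usage (requires boto3 and AWS credentials; all values are placeholders):
#   import boto3
#   event = build_event(
#       bucket="amzn-s3-demo-bucket",
#       key="prelabeling/premanifest.json",
#       prefix="bank-statements",
#       entity_types=["bank_name", "customer_name"],
#   )
#   arn = start_prelabeling(
#       boto3.client("stepfunctions"), "<your-state-machine-arn>", event
#   )
```

Passing the client as an argument keeps the helper easy to test with a stub and leaves the choice of Region and credentials to the caller.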

To start the state machine, run the following Python code:

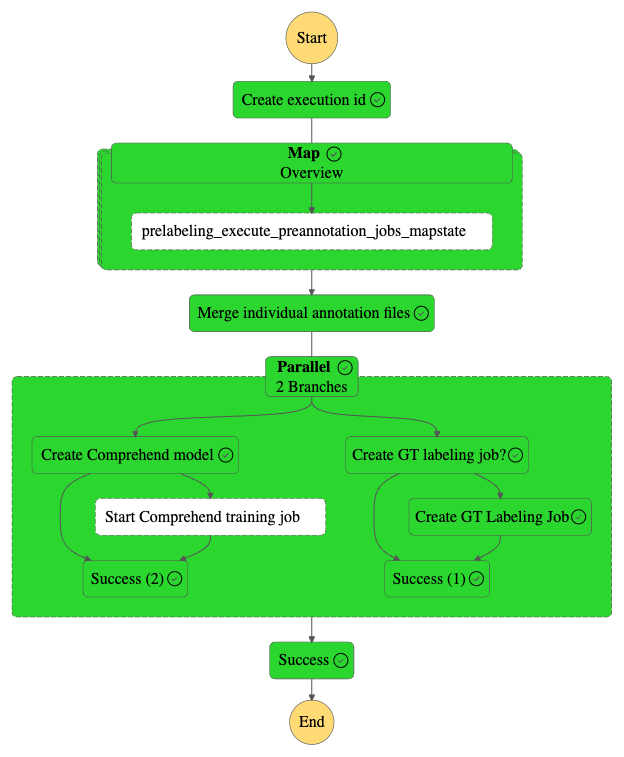

This will start a run of the state machine. You can monitor the progress of the state machine on the Step Functions console. The following diagram illustrates the state machine workflow.

When the state machine is complete, do the following:

- Inspect the following outputs saved in the prelabeling/ folder of the comprehend-semi-structured-docs S3 bucket:

  - Individual annotation files for each page of the documents (one per page per document) in temp_individual_manifests/

  - A manifest for the SageMaker Ground Truth labeling job in consolidated_manifest/consolidated_manifest.manifest

  - A manifest that can be used to train a custom Amazon Comprehend model in consolidated_manifest/consolidated_manifest_comprehend.manifest

- On the SageMaker console, open the SageMaker Ground Truth labeling job that was created to review the annotations

- Inspect and test the custom Amazon Comprehend model that was trained

As mentioned previously, the tool can only create SageMaker Ground Truth labeling jobs for private workforces. To outsource the human labeling effort, you can clone the labeling job on the SageMaker Ground Truth console and attach any workforce to the new job.

Clean up

To avoid incurring additional charges, delete the resources that you created and delete the stack that you deployed with the following command:

Conclusion

The pre-labeling tool provides a powerful way for companies to use existing tabular data to accelerate the process of training custom entity recognition models in Amazon Comprehend. By automatically pre-annotating PDF documents, it significantly reduces the manual effort required in the labeling process.

The tool has two versions: fuzzy matching and Amazon Comprehend-based, giving flexibility on how to generate the initial annotations. After documents are pre-labeled, you can quickly review them in a SageMaker Ground Truth labeling job or even skip the review and directly train an Amazon Comprehend custom model.

The pre-labeling tool enables you to quickly unlock the value of your historical entity data and use it in creating custom models tailored to your specific domain. By speeding up what is typically the most labor-intensive part of the process, it makes custom entity recognition with Amazon Comprehend more accessible than ever.

For more information about how to label PDF documents using a SageMaker Ground Truth labeling job, see Custom document annotation for extracting named entities in documents using Amazon Comprehend and Use Amazon SageMaker Ground Truth to Label Data.

About the authors

Oskar Schnaack is an Applied Scientist at the Generative AI Innovation Center. He is passionate about diving into the science behind machine learning to make it accessible for customers. Outside of work, Oskar enjoys cycling and keeping up with trends in information theory.

Romain Besombes is a Deep Learning Architect at the Generative AI Innovation Center. He is passionate about building innovative architectures to address customers’ business problems with machine learning.

- Source: https://aws.amazon.com/blogs/machine-learning/automate-pdf-pre-labeling-for-amazon-comprehend/
