I was at an “all things data and analytics benchmarking” event last week. It was fabulous: most major banks were represented, along with the hottest of hot hedge funds, a chunk of exchanges, and some not-the-usual vendors.

I found it refreshing, like most events where tangible analytics gets discussed. My early career was focused on quant work, data science and analytics, but now I’m in the, ahem, “Data” profession, which is made up of a confusing array of (mostly) commercial products underpinning “data warehouse,” “data lake,” “data mesh,” “data fabric” and “data swamp” infrastructures, amounting to trillions of dollars in expenditure and sustaining some very large organizations. I personally find the language, jargon and ecosystem of this industry abstracted from reality, but it helps sustain commercial providers with beautifully named products and categories. Follow the money, as they say: a “complex” data platform carries a much higher price tag than a modelling tool that any MSc student can use.

The data industry was a backdrop to this very pragmatic event, but it got discussed, enjoyably and explicitly, on a panel about so-called “data lineage.” Data lineage is predominantly a sequential, linear process that captures data transformation from ingest to utilization. It helps underpin so-called data governance, which drives a heck of a lot of costly warehouse tooling. In the cloud era, cloud data warehouses are all the rage, one in particular.

But here’s the thing. Data transformation is actually not linear, particularly when it is useful. It’s complex, cyclical, morphing like Doctor Who and the TARDIS, travelling through time and across galaxies. Pseudo-philosophy and popular physics aside, in finance the same data, when tweaked, transformed and analyzed, can serve many different use cases, across time and place. Furthermore, regulators ask us to document change and to be transparent about what we did, when, why, and what changed. You might say, “that’s data governance they’re asking for.” Kind of. But regulators are actually asking for reporting on actionable decisions, which require models and engagement and result in actions that have impact. It involves people, decisions and tangible use cases, not just data.

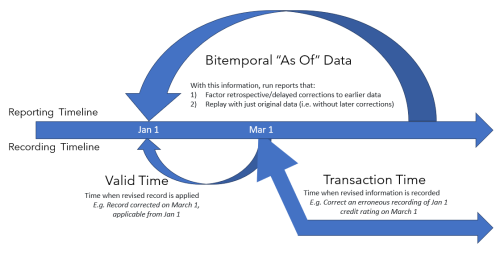

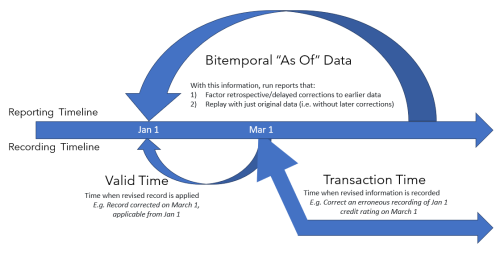

The panel introduced and discussed at length the concept of bitemporality, a practical data management tactic that serves financial use cases and regulatory processes nicely. One panelist from a (very) regulated tier 1 bank praised bitemporality effusively. His architecture used it to move through time and replay data changes. Let’s say you want to recreate an old financial report or derivatives trade as it looked at the time of creation, and then as it should have looked given later corrections, additions or payoffs, for example in a compliance report. In his case, bitemporality lets a single data source inform multiple (validated) views of the data: as it stood when it happened, and as it looks later, with “wisdom.” It’s simple to implement and not costly, and here’s what you need to know about it.

- A data model should store and facilitate analysis of data across two dimensions of time. This is a bitemporal data model: it caters for data at inception and at any later point in time when its state is revised, so the data can be represented “as-of” a given time.

- This model stores more than one timestamp for each property, object, and value.

- Data points can be joined and connected by time, using an “as-of” join.
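The three points above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the panelist's architecture: the `Fact` record, its field names (`valid_from`, `recorded_at`), the `as_of` helper and the trade data are all hypothetical. Each value carries two timestamps, one for when it became true and one for when the system learned of it, and a query picks a point on both time axes.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Fact:
    key: str               # what the value describes, e.g. a trade ID (hypothetical)
    value: float
    valid_from: datetime   # when the value became true in the real world
    recorded_at: datetime  # when the system learned about it


def as_of(facts, key, valid_at, known_at) -> Optional[Fact]:
    """Value for `key` effective at `valid_at`, using only what was recorded by `known_at`."""
    candidates = [
        f for f in facts
        if f.key == key and f.valid_from <= valid_at and f.recorded_at <= known_at
    ]
    if not candidates:
        return None
    # The latest effective date wins; for the same effective date,
    # the most recently recorded version (a correction) wins.
    return max(candidates, key=lambda f: (f.valid_from, f.recorded_at))


facts = [
    # Price booked on trade date.
    Fact("TRADE-1", 100.0, datetime(2024, 1, 2), datetime(2024, 1, 2)),
    # Correction to the same trade-date price, recorded a week later.
    Fact("TRADE-1", 101.5, datetime(2024, 1, 2), datetime(2024, 1, 10)),
]

# The report as it looked on 5 January, before the correction was known.
original = as_of(facts, "TRADE-1", datetime(2024, 1, 5), datetime(2024, 1, 5))
# The same report date, viewed with hindsight at the end of the month.
corrected = as_of(facts, "TRADE-1", datetime(2024, 1, 5), datetime(2024, 1, 31))
print(original.value, corrected.value)
```

Nothing is overwritten or copied: the correction is appended as a new row, and both views come from the same list.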

In a traditional data-warehouse-type architecture, such lineage can mean costly data copies, plus slow, inefficient and complex retrieval. This is one way cloud data warehouse providers make money: managing multiple copies of data, a blunt approach.

A simple alternative is to use basic data patterns with a supporting storage or in-memory process. That can and should be cheap and Python-centered. Simply use timestamps (with your data) and as-of joins (in code) to make the process straightforward, with an ability to dive deep into individual records as needed.
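As a rough sketch of what an as-of join does, here is a standard-library version using `bisect`. The `asof_join` function, the integer millisecond timestamps and the trade and quote data are made up for illustration; in practice a library routine such as pandas' `merge_asof` would usually do this job.

```python
from bisect import bisect_right


def asof_join(left, right):
    """For each (time, value) row in `left`, attach the most recent
    `right` value recorded at or before that time (None if there is none)."""
    right = sorted(right, key=lambda row: row[0])
    right_times = [t for t, _ in right]
    joined = []
    for t, left_value in left:
        # Index of the first right-hand row strictly after t.
        i = bisect_right(right_times, t)
        right_value = right[i - 1][1] if i > 0 else None
        joined.append((t, left_value, right_value))
    return joined


# Hypothetical data: timestamps are milliseconds since the market open.
trades = [(1000, "buy 100"), (2500, "sell 50"), (400, "buy 10")]
quotes = [(500, 99.8), (2000, 100.1), (3000, 100.4)]

for row in asof_join(trades, quotes):
    print(row)
```

Each trade is matched with the quote that was prevailing when it happened, never a later one, which is the property that makes the join safe for point-in-time analysis.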

To save costs on your data warehouse, then, engineer with some simple Python, paying attention to in-memory performance. There’s less need to engineer inside an expensive data warehouse process.

Where do you use bitemporality in finance? Well, compliance is an obvious case. Take, for example, spoofing. Spoofing is at heart a trade intent pattern, albeit a fraudulent one, where particular types of orders are placed but not followed through. The reason for deep-diving spoofs is primarily compliance, but the practice of deep-diving trades, whether successful, unsuccessful, fraudulent or just simply great, benefits the front office too. It in turn informs back-testing and strategy development, which can also include notions of time. Strategies, once they go into production trading, risk or portfolio management systems, only know what’s in front of them, but the back-test can try to incorporate known assumptions to mitigate risks. Examples include comparing actual short-term transaction costs with estimated ones, comparing real and anticipated short-term pairs correlations, medium-term pay-offs for, say, derivatives and fixed income instruments, dividends in equities, stock and sector correlations in portfolio management, and the longer-term “macro” market and risk regimes beloved by economists. Time, and bitemporality, matters. The use cases impacted make the technique much more valuable than a simple data engineering manoeuvre.
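To make the back-testing point concrete, here is a small sketch that compares transaction costs as estimated at decision time with the costs known later. The `snapshot` helper, the trade IDs, the day numbers and the cost figures are all invented for illustration.

```python
def snapshot(revisions, known_at):
    """Latest cost per trade ID, using only revisions recorded at or before `known_at`."""
    view = {}
    # Apply revisions in recording order so later ones overwrite earlier ones.
    for trade_id, recorded_at, cost in sorted(revisions, key=lambda r: r[1]):
        if recorded_at <= known_at:
            view[trade_id] = cost
    return view


# (trade ID, day recorded, cost in basis points), all hypothetical.
revisions = [
    ("T1", 1, 2.0),  # estimated cost, known on day 1
    ("T2", 1, 3.0),
    ("T1", 3, 2.6),  # realized cost, booked on day 3
    ("T2", 4, 2.9),
]

at_decision = snapshot(revisions, known_at=1)     # what the strategy could see
with_hindsight = snapshot(revisions, known_at=5)  # what the back-test can see

# Positive means the trade cost more than estimated.
slippage = {k: round(with_hindsight[k] - at_decision[k], 2) for k in at_decision}
print(slippage)
```

The same revision log serves both the live view and the back-test view; only the `known_at` argument changes.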

Beyond capital markets, consider payments. For example, a payment device reports its transactions centrally. What is known at the point of the transaction then gets updated with further information, for example about the customer. Fraud detection is an obvious use case for this, and it needs to be timely. Smart payment data gets processed at a point in time, but is then adjusted to improve data quality and inform downstream events. Using a bitemporal data model on the master and time-series data helps manage these point-in-time activities.

In conclusion, what can potentially be a costly, “highly governed,” linear warehouse-lineage transformation can be simplified with some common-sense analytics and an empathy for real-world use cases. Bitemporality is well worth a look.

- Source: https://www.finextra.com/blogposting/25203/bitemporality-helping-lower-costs-for-financial-services-use-cases?utm_medium=rssfinextra&utm_source=finextrablogs
