Note: This article is the first in a series called “Dissecting AI applications”, which introduces a mental model for AI systems. The model serves as a tool for the discussion, planning, and definition of AI products by cross-disciplinary AI and product teams, as well as for alignment with the business department. It aims to bring together the perspectives of product managers, UX designers, data scientists, engineers, and other team members. In this article, I introduce the mental model, while future articles will demonstrate how to apply it to specific AI products and features.

Often, companies assume that all they need to include AI in their offering is to hire AI experts and let them work their technical magic. This approach leads them straight into the integration fallacy: even if these experts and engineers produce exceptional models and algorithms, their outputs often get stuck at the level of playgrounds, sandboxes, and demos, and never really become full-fledged parts of a product. Over the years, I have seen a great deal of frustration from data scientists and engineers whose technically outstanding AI implementations did not find their way into user-facing products. Rather, they had the honorable status of bleeding-edge experiments that gave internal stakeholders the impression of riding the AI wave. Now, with the ubiquitous proliferation of AI since the release of ChatGPT in 2022, companies can no longer afford to use AI as a “lighthouse” feature to show off their technological acumen.

Why is it so difficult to integrate AI? There are a couple of reasons:

- Often, teams focus on a single aspect of an AI system. This has even led to the emergence of separate camps, such as data-centric, model-centric, and human-centric AI. While each of them offers exciting perspectives for research, a real-life product needs to combine the data, the model, and the human-machine interaction into a coherent system.

- AI development is a highly collaborative enterprise. In traditional software development, you work with a relatively clear dichotomy consisting of the backend and the frontend components. In AI, you will not only need to add more diverse roles and skills to your team but also ensure closer cooperation between the different parties. The different components of your AI system will interact with each other in intimate ways. For example, if you are working on a virtual assistant, your UX designers will have to understand prompt engineering to create a natural user flow. Your data annotators need to be aware of your brand and the “character traits” of your virtual assistant to create training data that are consistent and aligned with your positioning, and your product manager needs to grasp and scrutinize the architecture of the data pipeline to ensure it meets the governance concerns of your users.

- When building AI, companies often underestimate the importance of design. While AI starts in the backend, good design is indispensable to make it shine in production. AI design pushes the boundaries of traditional UX. A lot of the functionality you offer is not per se visible in the interface, but “hidden” in the model, and you need to educate and guide your users to maximize these benefits. Besides, modern foundation models are wild things that can produce toxic, wrong, and harmful outputs, so you will need to set up additional guardrails to reduce these risks. All of this might require new skills on your team such as prompt engineering and conversational design. Sometimes, it also means doing counterintuitive stuff, like understating value to manage users’ expectations and adding friction to give them more control and transparency.

- The AI hype creates pressure. Many companies put the cart before the horse by jumping into implementations that are not validated by customer and market needs. Occasionally throwing in the AI buzzword can help you market and position yourself as a progressive and innovative business, but in the long term, you will need to back your buzz and experimentation with real opportunities. This can be achieved with tight coordination between business and technology, which is based on an explicit mapping of market-side opportunities to technological potentials.

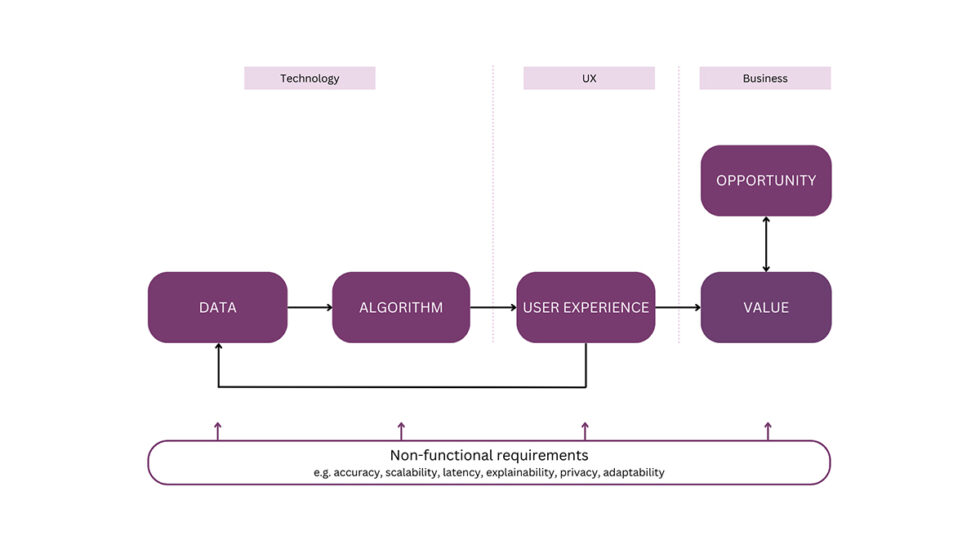

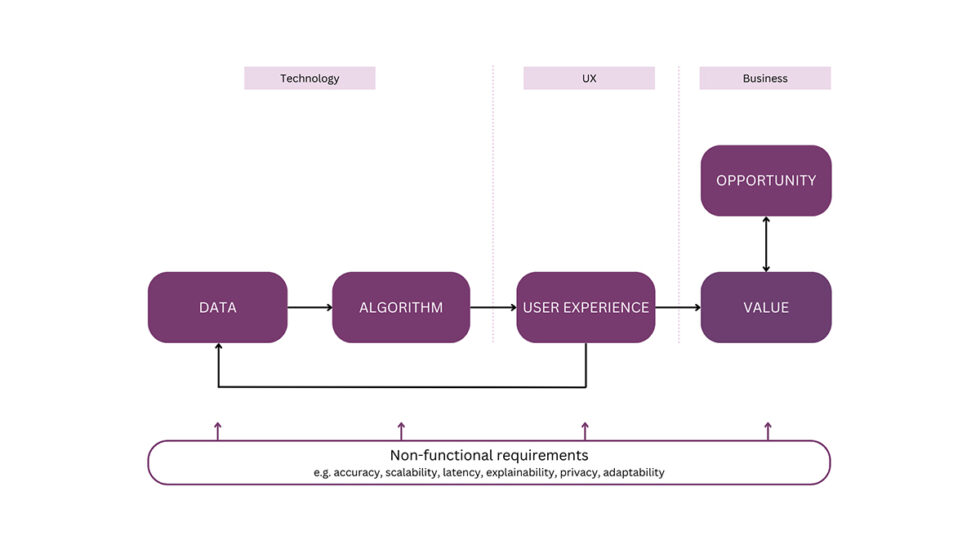

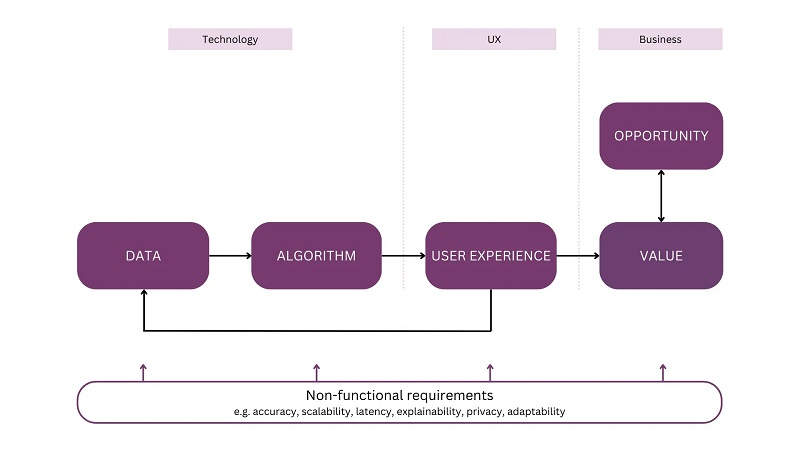

In this article, we will construct a mental model for AI systems that integrates these different aspects (cf. figure 1). It encourages builders to think holistically, create a clear understanding of their target product, and update it with new insights and inputs along the way. The model can be used as a tool to ease collaboration, align the diverse perspectives inside and outside the AI team, and build successful products based on a shared vision. It can be applied not only to new, AI-driven products but also to AI features that are incorporated into existing products.

The following sections will briefly describe each of the components, focussing on parts that are specific to AI products. We will start with the business perspective — the market-side opportunity and the value — and then dive into UX and technology. To illustrate the model, we will use the running example of a copilot for the generation of marketing content.

1. Opportunity

With all the cool stuff you can now do with AI, you might be impatient to get your hands dirty and start building. However, to build something your users need and love, you should back your development with a market opportunity. In the ideal world, opportunities reach us from customers who tell us what they need or want.[1] These can be unmet needs, pain points, or desires. You can look for this information in existing customer feedback, such as in product reviews and notes from your sales and success teams. Also, don’t forget yourself as a potential user of your product — if you are targeting a problem that you have experienced yourself, this information advantage is an additional edge. Beyond this, you can also conduct proactive customer research using tools like surveys and interviews.

For example, I don’t need to look far to see the pains of content marketing, both for startups and for larger companies. I have experienced it myself: as competition grows, developing thought leadership with individual, regular, and (!) high-quality content becomes more and more important for differentiation. Meanwhile, with a small and busy team, there will always be things on the table that seem more important than writing the blog post of the week. I also often meet people in my network who struggle to set up a consistent content marketing routine. These “local”, potentially biased observations can be validated by surveys that go beyond one’s network and confirm a broader market for a solution.

The real world is slightly fuzzier, and customers will not always come to you to present new, well-formulated opportunities. Rather, if you stretch out your antennae, opportunities will reach you from many directions, such as:

- Market positioning: AI is trendy — for established businesses, it can be used to reinforce the image of a business as innovative, high-tech, future-proof, etc. For example, it can elevate an existing marketing agency to an AI-powered service and differentiate it from competitors. However, don’t do AI for the sake of AI. The positioning trick is to be applied with caution and in combination with other opportunities — otherwise, you risk losing credibility.

- Competitors: When your competitors make a move, it is likely that they have already done the underlying research and validation. Look at them after some time — was their development successful? Use this information to optimize your own solution, adopt the successful parts, and iron out the mistakes. For example, let’s say you are observing a competitor that is offering a service for fully automated generation of marketing content. Users click a “big red button”, and the AI marches ahead to write and publish the content. After some research, you learn that users hesitate to use this product because they want to retain more control over the process and contribute their own expertise and personality to the writing. After all, writing is also about self-expression and individual creativity. This is the time for you to move ahead with a versatile tool that offers rich functionality and configuration for shaping your content. It boosts the efficiency of users while allowing them to “inject” themselves into the process whenever they wish.

- Regulations: Megatrends such as technological disruption and globalization force regulators to tighten their requirements. Regulations create pressure and are a bullet-proof source of opportunity. For example, imagine a regulation comes into place that strictly requires everyone to label AI-generated content as such. Those companies that already use tools for AI content generation will retreat into internal discussions on whether they want this. Many of them will refrain because they want to maintain an image of genuine thought leadership, as opposed to producing visibly AI-generated boilerplate. Let’s say you were smart and opted for an augmented solution that gives users enough control so they can remain the official “authors” of the texts. As the new restriction is introduced, you are immune and can dash forward to capitalize on the regulation, while your competitors with fully automated solutions will need time to recover from the setback.

- Enabling technologies: Emerging technologies and significant leaps in existing technologies, such as the wave of generative AI in 2022–23, can open up new ways of doing things, or catapult existing applications to a new level. Let’s say you have been running a traditional marketing agency for the last decade. Now, you can start introducing AI hacks and solutions into your business to increase the efficiency of your employees, serve more clients with the existing resources, and increase your profit. You are building on your existing expertise, reputation, and (hopefully good-willed) customer base, so introducing AI enhancements can be much smoother and less risky than it would be for a newcomer.

Finally, in the modern product world, opportunities are often less explicit and formal and can be directly validated in experiments, which speeds up your development. Thus, in product-led growth, team members can come up with their own hypotheses without a strict data-driven argument. These hypotheses can be formulated in a piecemeal fashion, like modifying a prompt or changing the local layout of some UX elements, which makes them easy to implement, deploy, and test. By removing the pressure to provide a priori data for each new suggestion, this approach leverages the intuitions and imaginations of all team members while enforcing a direct validation of the suggestions. Let’s say that your content generation runs smoothly, but you hear more and more complaints about a general lack of AI transparency and explainability. You decide to implement an additional transparency level and show your users the specific documents that were used to generate a piece of content. Your team puts the feature to the test with a cohort of users and finds that they are happy to use it for tracing back to the original information sources. Thus, you decide to establish it in the core product to increase usage and satisfaction.
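One way to make such a cohort test more than a gut feeling is to compare a usage metric between users with and without the new feature. The following is a minimal sketch, assuming a standard two-proportion z-test. The metric (share of users who return within a week), the cohort sizes, and the function name `two_proportion_z_test` are all hypothetical and only serve as an illustration.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Compare two conversion rates; returns (z, two-sided p-value).

    Group A is the control cohort, group B the cohort with the new feature.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that both cohorts behave the same
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Rates are degenerate (all 0 or all 1); test undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented numbers: 120 of 1000 control users returned, 156 of 1000 with the feature
z, p_value = two_proportion_z_test(120, 1000, 156, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests that the difference is unlikely to be noise, but it does not replace talking to users about why they use the feature.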

2. Value

To understand and communicate the value of your AI product or feature, you first need to map it to a use case (a specific business problem it will solve) and figure out the ROI (return on investment). This forces you to shift your mind away from the technology and focus on the user-side benefits of the solution. ROI can be measured along different dimensions. For AI, some of them are:

- Increased efficiency: AI can be a booster for the productivity of individuals, teams, and whole companies. For example, for content generation, you might find that instead of the 4–5 hours normally needed to write a blog post [2], you can now do it in 1–2 hours, and spend the time you saved for other tasks. Efficiency gains often go hand-in-hand with cost savings, since less human effort is required to perform the same amount of work. Thus, in the business context, this benefit is attractive both for users and for leadership.

- A more personalized experience: For example, your content generation tool can ask users to set parameters of their company like the brand attributes, terminology, product benefits, etc. Additionally, it can track the edits made by a specific writer, and adapt its generations to the unique writing style of this user over time.

- Fun and pleasure: Here, we get into the emotional side of product use, also called the “visceral” level by Don Norman [3]. Whole categories of products for fun and entertainment exist in the B2C camp, like gaming and Augmented Reality. What about B2B — wouldn’t you assume that B2B products exist in a sterile professional vacuum? In reality, this category can generate even stronger emotional responses than B2C.[4] For example, writing can be perceived as a satisfying act of self-expression, or as an inner struggle with writer’s block and other issues. Think about how your product can reinforce the positive emotions of a task while alleviating or even transforming its painful aspects.

- Convenience: What does your user need to do to leverage the magic powers of AI? Imagine integrating your content generation copilot into popular collaboration tools like MS Office, Google Docs, and Notion. Users will be able to access the intelligence and efficiency of your product without leaving the comfort of their digital “home”. Thus, you minimize the effort users need to make to experience the value of the product and keep using it, which in turn boosts your user acquisition and adoption.

Some of the AI benefits, such as efficiency, can be directly quantified for ROI. For less tangible gains like convenience and pleasure, you will need to think of proxy metrics like user satisfaction. Keep in mind that thinking in terms of end-user value will not only close the gap between your users and your product; as a welcome side effect, it can also reduce technical detail in your public communications. This will prevent you from accidentally inviting unwanted competition to the party.
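The efficiency case can be turned into a back-of-the-envelope ROI estimate. The sketch below is only an illustration: the writing times are midpoints of the ranges mentioned for blog posts, while the number of posts per month, the hourly cost, and the tool price are made-up assumptions, and `efficiency_roi` is a hypothetical helper name.

```python
def efficiency_roi(hours_before, hours_after, posts_per_month,
                   hourly_cost, tool_cost_per_month):
    """Return (monthly_savings, roi) for a tool that saves writing time.

    roi is the net gain divided by the tool cost, e.g. 5.0 means 500%.
    """
    hours_saved = (hours_before - hours_after) * posts_per_month
    monthly_savings = hours_saved * hourly_cost
    roi = (monthly_savings - tool_cost_per_month) / tool_cost_per_month
    return monthly_savings, roi


# Assumed inputs: 4.5h -> 1.5h per post, 8 posts a month,
# 50 per hour of writer time, 200 per month for the tool
savings, roi = efficiency_roi(
    hours_before=4.5,
    hours_after=1.5,
    posts_per_month=8,
    hourly_cost=50.0,
    tool_cost_per_month=200.0,
)
print(f"Monthly savings: {savings:.0f}, ROI: {roi:.0%}")
```

With these assumed inputs, the tool saves 24 hours of writing time per month. The real value of the exercise is that it forces you to make each assumption explicit and challengeable.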

Finally, a fundamental aspect of value that you should consider early on is sustainability. How does your solution impact society and the environment? In our example, automated or augmented content generation can displace and eliminate large-scale human workloads. You probably don’t want to become known as the killer-to-be of an entire job category. After all, this will not only raise ethical questions but also provoke resistance from users whose jobs you are threatening. Think about how you can address these fears. For instance, you could educate users about how they can efficiently use their new free time to design even more sophisticated marketing strategies. These can provide a defensible moat even as competitors catch up with automated content generation.

3. Data

For any kind of AI and machine learning, you need to collect and prepare your data so it reflects the real-life inputs and provides sufficient learning signals for your model. Nowadays, we see a trend towards data-centric AI — an AI philosophy that moves away from endless tweaking and optimization of models, and focuses on fixing the numerous issues in the data that are fed into these models. When you start out, there are different ways to get your hands on a decent dataset:

- You can use an existing dataset. This can either be a standard machine learning dataset or a dataset with a different initial purpose that you adapt for your task. There are some dataset classics, such as the IMDB Movie Reviews Dataset for sentiment analysis and the MNIST dataset for handwritten digit recognition. There are more exotic and exciting alternatives, like Catching Illegal Fishing and Dog Breed Identification, and innumerable user-curated datasets on data hubs like Kaggle. The chances that you will find a dataset that is made for your specific task and completely satisfies your requirements are rather low, and in most cases, you will also need to use other methods to enrich your data.

- You can annotate or create the data manually to create the right learning signals. Manual data annotation (for example, the annotation of texts with sentiment scores) was the go-to method in the early days of machine learning. Recently, it has regained attention as the main ingredient in ChatGPT’s secret sauce. A huge manual effort was spent on creating and ranking the model’s responses to reflect human preferences. This data feeds a technique called Reinforcement Learning from Human Feedback (RLHF). If you have the necessary resources, you can use them to create high-quality data for more specific tasks, like the generation of marketing content. Annotation can be done either internally or using an external provider or a crowdsourcing service such as Amazon Mechanical Turk. That said, most companies will not want to spend the huge resources required for the manual creation of RLHF data and will consider some tricks to automate the creation of their data.

- Alternatively, you can add more examples to an existing dataset using data augmentation. For simpler tasks like sentiment analysis, you could introduce some additional noise into the texts, switch up a couple of words, etc. For more open generation tasks, there is currently a lot of enthusiasm about using large models (e.g. foundation models) for automated training data generation. Once you have identified the best method to augment your data, you can easily scale it to reach the required dataset size.
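To make the simple noise-based augmentation for sentiment data more tangible, here is a minimal sketch using only the Python standard library. The helper names (`swap_words`, `drop_words`, `augment`) and the example sentences are hypothetical. The sketch also assumes that swapping or dropping a few words does not change the label, which you should verify on a sample, since noise can occasionally flip the sentiment (for instance, when a negation is dropped).

```python
import random


def swap_words(text, n_swaps=1, rng=None):
    """Swap n_swaps random pairs of words in the text."""
    if rng is None:
        rng = random.Random()
    words = text.split()
    if len(words) < 2:
        return text
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return " ".join(words)


def drop_words(text, p=0.1, rng=None):
    """Drop each word independently with probability p."""
    if rng is None:
        rng = random.Random()
    kept = [w for w in text.split() if rng.random() >= p]
    # Fall back to the original text rather than returning an empty string
    return " ".join(kept) if kept else text


def augment(dataset, copies=2, seed=0):
    """Return the original (text, label) pairs plus noisy copies of each."""
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    augmented = list(dataset)
    for text, label in dataset:
        for _ in range(copies):
            noisy = drop_words(swap_words(text, rng=rng), p=0.1, rng=rng)
            augmented.append((noisy, label))
    return augmented


examples = [
    ("the product is great and easy to use", "positive"),
    ("support was slow and unhelpful", "negative"),
]
for text, label in augment(examples, copies=2):
    print(label, "|", text)
```

The same `augment` interface could wrap a call to a large model instead of random noise. In both cases, it is worth manually reviewing a sample of the generated data before scaling up.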

When creating your data, you face a trade-off between quality and quantity. You can manually annotate less data with a high quality, or spend your budget on developing hacks and tricks for automated data augmentation that will introduce additional noise. If you go for manual annotation, you can do it internally and shape a culture of detail and quality, or crowdsource the work to anonymous folks. Crowdsourced annotations are typically of lower quality, so you might need to annotate more to compensate for the noise. How do you find the ideal balance? There are no ready-made recipes here. Ultimately, you will find your ideal data composition through a constant back-and-forth between training and enhancing your data. In general, a model that is pre-trained from scratch needs to acquire all of its knowledge from the data, which can only happen with a larger quantity of data. On the other hand, if you want to fine-tune and give the finishing touches of specialization to an existing large model, you might value quality over quantity. The controlled manual annotation of a small dataset using detailed guidelines might be the optimal solution in this case.

4. Algorithm

Data is the raw material from which your model will learn, and hopefully, you can compile a representative, high-quality dataset. Now, the actual superpower of your AI system — its ability to learn from existing data and generalize to new data — resides in the algorithm. In terms of the core AI models, there are three main options that you can use:

- Prompt an existing model. Advanced LLMs (Large Language Models) of the GPT family, such as ChatGPT and GPT-4, as well as models from other providers such as Anthropic and AI21 Labs, are available for inference via API. With prompting, you can directly talk to these models, including in your prompt all the domain- and task-specific information required for a task. This can include specific content to be used, examples of analogous tasks (few-shot prompting) as well as instructions for the model to follow. For example, if your user wants to generate a blog post about a new product feature, you might ask them to provide some core information about the feature, such as its benefits and use cases, how to use it, the launch date, etc. Your product then fills this information into a carefully crafted prompt template and asks the LLM to generate the text. Prompting is a great way to get a head start with pre-trained models. However, the moat you can construct with prompting will quickly thin out over time. In the medium term, you need a more defensible model strategy to sustain your competitive edge.

- Fine-tune a pre-trained model. This approach has made AI so popular in recent years. As more and more pre-trained models become available and portals such as Hugging Face offer model repositories as well as standard code to work with the models, fine-tuning is becoming the go-to method to try and implement. When you work with a pre-trained model, you can benefit from the investment that someone has already made into the data, training, and evaluation of the model, which already “knows” a lot of stuff about language and the world. All you need to do is fine-tune the model using a task-specific dataset, which can be much smaller than the dataset used originally for pre-training. For example, for marketing content generation, you can collect a set of blog posts that performed well in terms of engagement, and reverse-engineer the instructions for these. From this data, your model will learn about the structure, flow, and style of successful articles. Fine-tuning is the way to go when using open-source models, but LLM API providers such as OpenAI and Cohere are also increasingly offering fine-tuning functionality. Especially for the open-source track, you will still need to consider the issues of model selection, the cost overhead of training and deploying larger models, and the maintenance and update schedules of your model.

- Train your ML model from scratch. In general, this approach works well for simpler, but highly specific problems for which you have specific know-how or decent datasets. The generation of content does not exactly fall into this category — it requires advanced linguistic capabilities to get you off the ground, and these can only be acquired after training on ridiculously large amounts of data. Simpler problems such as sentiment analysis for a specific type of text can often be solved with established machine learning methods like logistic regression, which are computationally less expensive than fancy deep learning methods. Of course, there is also the middle ground of reasonably complex problems like concept extraction for specific domains, for which you might consider training a deep neural network from scratch.
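For the prompting option, the step of filling user-provided information into a prompt template can be sketched as follows. This is a minimal illustration using Python's standard `string.Template`. The template wording, the field names, and the `build_prompt` helper are assumptions made for this example, and the actual call to an LLM provider is deliberately left out because it depends on the API you choose.

```python
from string import Template

# Hypothetical template; in practice it would be refined through experimentation
PROMPT_TEMPLATE = Template(
    "You are a marketing copywriter for $company.\n"
    "Write a blog post announcing a new product feature.\n\n"
    "Feature: $feature\n"
    "Benefits: $benefits\n"
    "Launch date: $launch_date\n\n"
    "Examples of the desired style:\n$examples\n\n"
    "Blog post:"
)


def build_prompt(company, feature, benefits, launch_date, examples):
    """Fill the user's inputs and few-shot style examples into the template."""
    example_block = "\n".join(f"- {example}" for example in examples)
    return PROMPT_TEMPLATE.substitute(
        company=company,
        feature=feature,
        benefits=", ".join(benefits),
        launch_date=launch_date,
        examples=example_block,
    )


prompt = build_prompt(
    company="Acme Analytics",
    feature="One-click report sharing",
    benefits=["saves time", "keeps stakeholders aligned"],
    launch_date="1 March",
    examples=["Meet the dashboard that finally speaks your language."],
)
print(prompt)
```

Keeping the template separate from the code makes it easy to treat prompt changes as small, testable product hypotheses.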

Beyond the training, evaluation is of primary importance for the successful use of machine learning. Suitable evaluation metrics and methods are not only important for a confident launch of your AI features, but will also serve as a clear target for further optimization and as a common ground for internal discussions and decisions. While technical metrics such as precision, recall, and accuracy can provide a good starting point, ultimately you will want to look for metrics that reflect the real-life value that your AI is delivering to users.
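As a reminder of what the technical metrics measure, here is a minimal sketch that computes precision, recall, and accuracy for a binary classifier without external libraries. The function name `classification_metrics` and the toy labels are made up for illustration. In a real project you would more likely rely on an established evaluation library.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute precision, recall, and accuracy for binary labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        # Of everything predicted positive, how much was really positive?
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Of everything really positive, how much did the model find?
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        # Share of all predictions that were correct
        "accuracy": correct / len(pairs) if pairs else 0.0,
    }


# Toy labels: 1 = "post performed well", 0 = "post did not perform well"
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
print(classification_metrics(y_true, y_pred))
```

Metrics like these are easy to compute, which is exactly why they should be complemented with measures closer to user value, such as how many generated drafts users actually keep.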

5. User experience

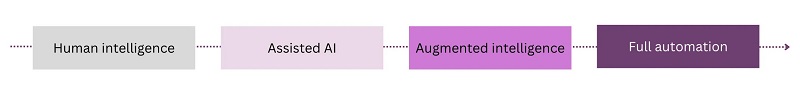

The user experience of AI products is a captivating theme. After all, users have high hopes but also fears about “partnering” with an AI that can supercharge and potentially outsmart their intelligence. The design of this human-AI partnership requires a thoughtful and sensible discovery and design process. One of the key considerations is the degree of automation you want to grant with your product, and mind you, total automation is by no means always the ideal solution. The following figure illustrates the automation continuum:

Let’s look at each of these levels:

- In the first stage, humans do all the work, and no automation is performed. Despite the hype around AI, most knowledge-intensive tasks in modern companies are still carried out on this level, presenting huge opportunities for automation. For example, a content writer who resists AI-driven tools and is convinced that writing is a highly manual and idiosyncratic craft operates at this level.

- In the second stage of assisted AI, users have complete control over task execution and do a big part of the work manually, but AI tools help them save time and compensate for their weak points. For example, when writing a blog post with a tight deadline, a final linguistic check with Grammarly or a similar tool can become a welcome timesaver. It can eliminate manual revision, which requires a lot of your scarce time and attention and might still leave you with errors and oversights. After all, to err is human.

- With augmented intelligence, AI is a partner that augments the intelligence of the human, thus leveraging the strengths of both worlds. Compared to assisted AI, the machine has much more to say in your process and covers a larger set of responsibilities, like ideation, generation, and editing of drafts, and the final linguistic check. Users still need to participate in the work, make decisions, and perform parts of the task. The user interface should clearly indicate the labor distribution between human and AI, highlight error potentials, and provide transparency into the steps it performs. In short, the “augmented” experience guides users to the desired outcome via iteration and refinement.

- And finally, we have full automation — an intriguing idea for AI geeks, philosophers, and pundits, but often not the optimal choice for real-life products. Full automation means that you are offering one “big red button” that kicks off the process. Once the AI is done, your users face the final output and either take it or leave it. Anything that happened in-between they cannot control. As you can imagine, the UX options here are rather limited since there is virtually no interactivity. The bulk of the responsibility for success rests on the shoulders of your technical colleagues, who need to ensure an exceptionally high quality of the outputs.

AI products need special treatment when it comes to design. Standard graphical interfaces are deterministic and allow you to foresee all possible paths the user might take. By contrast, large AI models are probabilistic and uncertain: they expose a range of amazing capabilities but also risks such as toxic, wrong, and harmful outputs. From the outside, your AI interface might look simple because a lot of the capabilities of your product reside directly in the model. For example, an LLM can interpret prompts, produce text, search for information, summarize it, adopt a certain style and terminology, execute instructions, etc. Even if your UI is a simple chat or prompting interface, don’t leave this potential unseen. To lead users to success, you need to be explicit and realistic. Make users aware of the capabilities and limitations of your AI models, allow them to easily discover and fix errors made by the AI, and teach them ways to iterate toward optimal outputs. By emphasizing trust, transparency, and user education, you can make your users collaborate with the AI. While a deep dive into the emerging discipline of AI design is out of the scope of this article, I strongly encourage you to look for inspiration not only from other AI companies but also from other areas of design such as human-machine interaction. You will soon identify a range of recurring design patterns, such as autocompletes, prompt suggestions, and AI notices, that you can integrate into your own interface to make the most out of your data and models.

Further, to deliver a truly great design, you might need to add new design skills to your team. For example, if you are building a chat application for the refinement of marketing content, you will work with a conversational designer who takes care of the conversational flows and the “personality” of your chatbot. If you are building a rich augmented product that needs to thoroughly educate and guide your users through the available options, a content designer can help you build the right kind of information architecture, and add the right amount of nudging and prompting for your users.

And finally, be open to surprises. AI design can make you rethink your original conceptions about user experience. For example, many UX designers and product managers were drilled to minimize latency and friction in order to smooth out the experience of the user. Well, in AI products, you can pause this fight and use both to your advantage. Latency and waiting times are great for educating your users, e.g. by explaining what the AI is currently doing and indicating possible next steps on their side. Breaks, like dialogue and notification pop-ups, can introduce friction to reinforce the human-AI partnership and increase transparency and control for your users.

6. Non-functional requirements

Beyond the data, algorithm, and UX, which enable you to implement a specific functionality, so-called non-functional requirements (NFRs) such as accuracy, latency, scalability, reliability, and data governance ensure that the user indeed gets the envisioned value. The concept of NFRs comes from software development but is not yet systematically accounted for in the domain of AI. Often, these requirements are picked up in an ad-hoc fashion as they come up during user research, ideation, development, and operation of AI capabilities.

You should try to understand and define your NFRs as early as possible since different NFRs will be coming to life at different points in your journey. For example, privacy needs to be considered starting at the very initial step of data selection. Accuracy is most sensitive in the production stage when users start using your system online, potentially overwhelming it with unexpected inputs. Scalability is a strategic consideration that comes into play when your business scales the number of users and/or requests or the spectrum of offered functionality.

When it comes to NFRs, you cannot have them all. Here are some of the typical trade-offs that you will need to balance:

- One of the first methods to increase accuracy is to use a bigger model, which will affect latency.

- Using production data “as is” for further optimization can be best for learning, but can violate your privacy and anonymization rules.

- More scalable models are generalists, which impacts their accuracy on company- or user-specific tasks.

How you prioritize the different requirements will depend on the available computational resources, your UX concept including the degree of automation, and the impact of the decisions supported by the AI.
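The accuracy versus latency trade-off can be made explicit by writing down the candidate models and the limits your UX concept imposes. The sketch below picks the most accurate candidate that still fits a latency budget. The model names, accuracy scores, and latency figures are invented placeholders, and `pick_model` is a hypothetical helper, not part of any library.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float        # score on your own evaluation set, between 0 and 1
    p95_latency_ms: float  # 95th percentile response time in milliseconds


def pick_model(candidates: List[Candidate], max_latency_ms: float,
               min_accuracy: float = 0.0) -> Optional[Candidate]:
    """Return the most accurate candidate within the latency budget, if any."""
    eligible = [
        c for c in candidates
        if c.p95_latency_ms <= max_latency_ms and c.accuracy >= min_accuracy
    ]
    return max(eligible, key=lambda c: c.accuracy, default=None)


# Invented figures for three model sizes
candidates = [
    Candidate("small", accuracy=0.82, p95_latency_ms=120),
    Candidate("medium", accuracy=0.88, p95_latency_ms=450),
    Candidate("large", accuracy=0.93, p95_latency_ms=1800),
]

# An interactive editing feature might tolerate about half a second
choice = pick_model(candidates, max_latency_ms=500)
print(choice.name if choice else "no model satisfies the constraints")
```

A background job that drafts a full article could use a much larger budget and end up with a different model, which is why the degree of automation and the UX concept belong in this decision.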

Key takeaways

- Start with the end in mind: Don’t assume that technology alone will do the job; you need a clear roadmap for integrating your AI into the user-facing product and educating your users about its benefits, risks, and limitations.

- Market alignment: Prioritize market opportunities and customer needs to guide AI development. Don’t rush AI implementations driven by hype and without market-side validation.

- User value: Define, quantify, and communicate the value of AI products in terms of efficiency, personalization, convenience, and other dimensions of value.

- Data quality: Focus on data quality and relevance to train AI models effectively. Try to use small, high-quality data for fine-tuning, and larger datasets for training from scratch.

- Algorithm/model selection: Choose the right level of complexity and defensibility (prompting, fine-tuning, training from scratch) for your use case and carefully evaluate its performance. Over time, as you acquire the necessary expertise and confidence in your product, you might want to switch to more advanced model strategies.

- User-centric design: Design AI products with user needs and emotions in mind, balancing automation and user control. Mind the “unpredictability” of probabilistic AI models, and guide your users to work with it and benefit from it.

- Collaborative design: By emphasizing trust, transparency, and user education, you can make your users collaborate with the AI.

- Non-functional requirements: Consider factors like accuracy, latency, scalability, and reliability throughout development, and try to evaluate the trade-offs between these early on.

- Collaboration: Foster close collaboration between AI experts, designers, product managers, and other team members to benefit from cross-disciplinary intelligence and successfully integrate your AI.

References

[1] Teresa Torres (2021). Continuous Discovery Habits: Discover Products that Create Customer Value and Business Value.

[2] Orbit Media (2022). New Blogging Statistics: What Content Strategies Work in 2022? We asked 1016 Bloggers.

[3] Don Norman (2013). The Design of Everyday Things.

[4] Google, Gartner and Motista (2013). From Promotion to Emotion: Connecting B2B Customers to Brands.

Note: All images are by the author.

This article was originally published on Towards Data Science and re-published to TOPBOTS with permission from the author.

- Source: https://www.topbots.com/building-ai-products-with-a-holistic-mental-model/
