¹Institute of Physics, École Polytechnique Fédérale de Lausanne (EPFL), CH-1015 Lausanne, Switzerland

²Center for Quantum Science and Engineering, École Polytechnique Fédérale de Lausanne (EPFL), CH-1015 Lausanne, Switzerland

³Sorbonne Université, CNRS, Laboratoire de Physique Théorique de la Matière Condensée, LPTMC, F-75005 Paris, France


Abstract

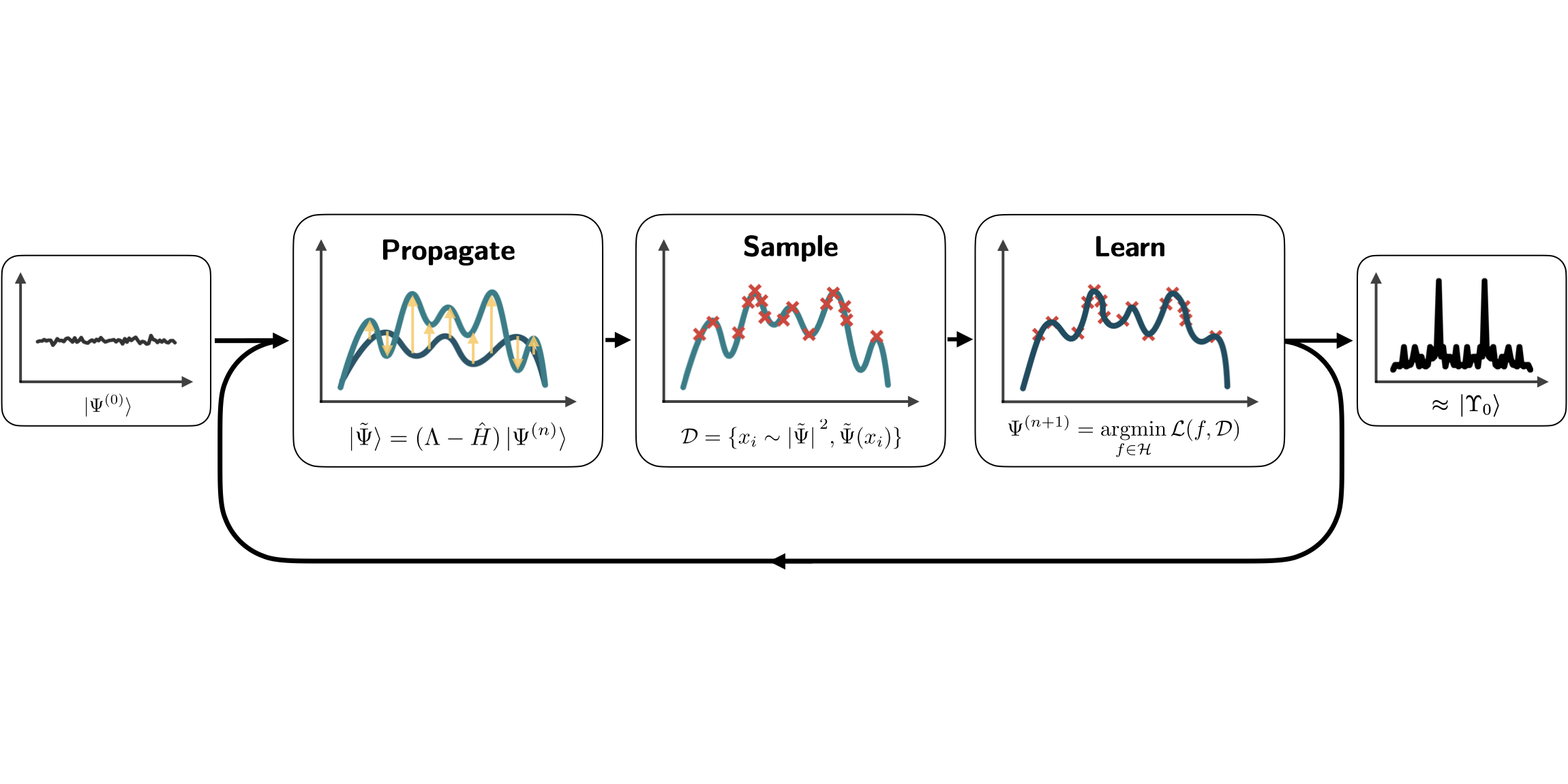

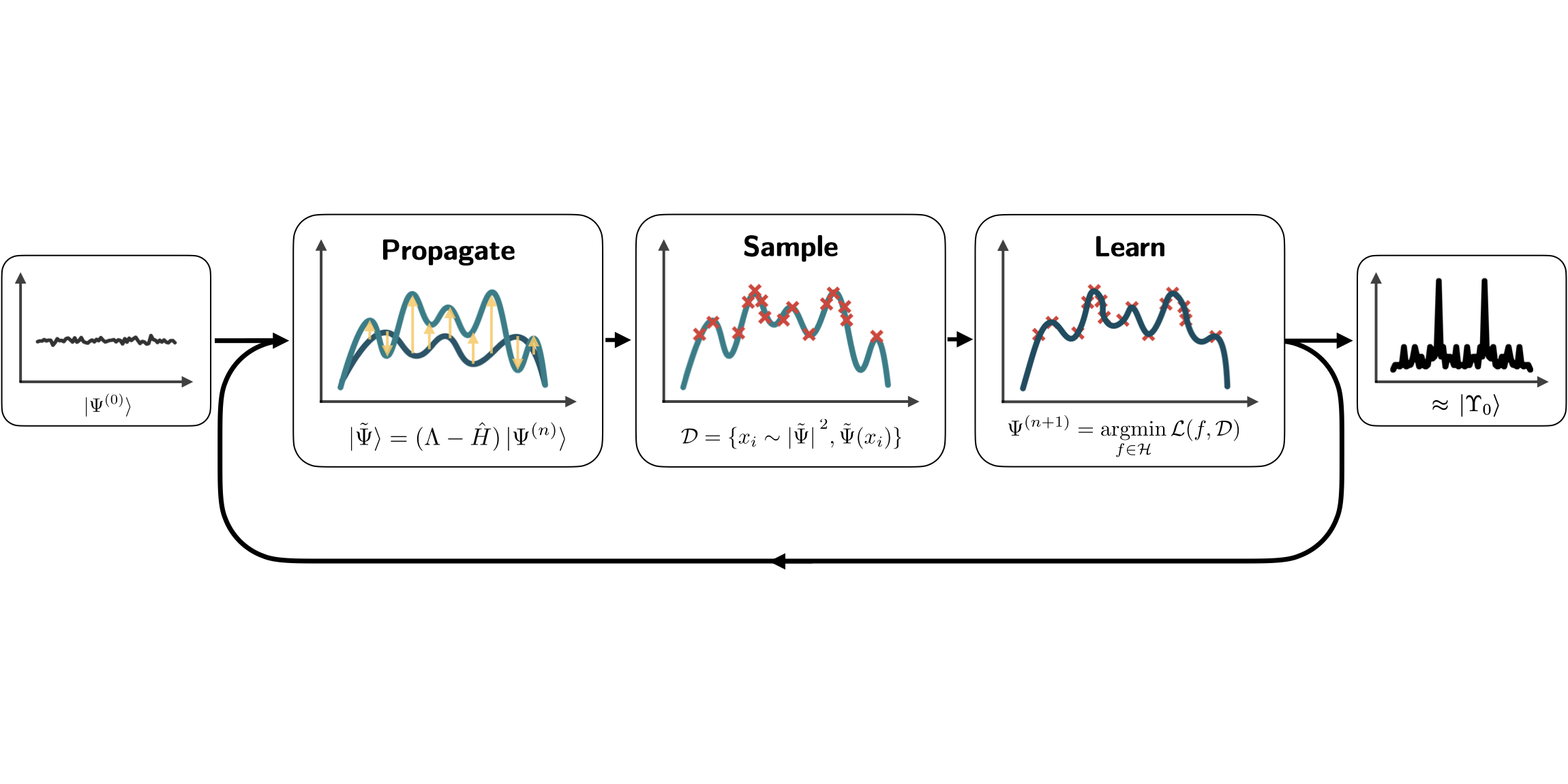

Neural network approaches to approximating the ground state of quantum Hamiltonians require the numerical solution of a highly nonlinear optimization problem. We introduce a statistical learning approach that makes the optimization trivial by using kernel methods. Our scheme is an approximate realization of the power method, in which supervised learning is used to learn the next step of the power iteration. We show that the ground-state properties of arbitrary gapped quantum Hamiltonians can be reached with polynomial resources, under the assumption that the supervised learning is efficient. Using kernel ridge regression, we provide numerical evidence that this learning assumption holds by applying our scheme to find the ground states of several prototypical interacting many-body quantum systems, in both one and two dimensions, which demonstrates the flexibility of our approach.
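As a minimal sketch of the exact iteration that the learned scheme approximates, the code below applies a shifted power step psi <- (Λ - H) psi to the full state vector of a small open transverse-field Ising chain, H = -J Σ Z_i Z_{i+1} - h Σ X_i, and renormalizes after each step. The model, the shift Λ (taken here as the Gershgorin bound J(N - 1) + hN, so that Λ - H is positive semidefinite and the ground state carries its largest eigenvalue), and the function names are illustrative assumptions, not the paper's code. Storing the full vector costs 2^N memory, which is precisely what a learned representation of each power step is meant to avoid.

```python
import math


def apply_tfim(psi, n_spins, h, J=1.0):
    """Return H|psi> for the open-chain TFIM H = -J sum_i Z_i Z_{i+1} - h sum_i X_i.

    Bit i of a basis index b is 1 when spin i points down (Z_i = -1).
    """
    out = [0.0] * len(psi)
    for b, amp in enumerate(psi):
        # Diagonal Ising term: -J sum_i Z_i Z_{i+1}
        diag = 0.0
        for i in range(n_spins - 1):
            zi = -1.0 if (b >> i) & 1 else 1.0
            zj = -1.0 if (b >> (i + 1)) & 1 else 1.0
            diag -= J * zi * zj
        out[b] += diag * amp
        # Transverse field: -h X_i flips spin i
        for i in range(n_spins):
            out[b ^ (1 << i)] -= h * amp
    return out


def power_method_ground_state(n_spins, h, J=1.0, steps=500):
    """Shifted power iteration psi <- (shift - H) psi, renormalized at every step."""
    dim = 2 ** n_spins
    # Gershgorin bound on the spectrum (assumes J, h >= 0): every eigenvalue of
    # (shift - H) is >= 0 and the ground state has the largest one.
    shift = J * (n_spins - 1) + h * n_spins
    psi = [1.0 / math.sqrt(dim)] * dim  # uniform start has nonzero ground-state overlap
    for _ in range(steps):
        h_psi = apply_tfim(psi, n_spins, h, J)
        psi = [shift * p - hp for p, hp in zip(psi, h_psi)]
        norm = math.sqrt(sum(p * p for p in psi))
        psi = [p / norm for p in psi]
    h_psi = apply_tfim(psi, n_spins, h, J)
    energy = sum(p * hp for p, hp in zip(psi, h_psi))  # <psi|H|psi> with psi normalized
    return energy, psi


energy, _ = power_method_ground_state(n_spins=2, h=1.0)
print(energy)  # close to -sqrt(5), the exact two-spin ground-state energy at h = 1
```

The convergence rate is set by the ratio (Λ - E_1)/(Λ - E_0), so a finite gap E_1 - E_0 is what makes the iteration efficient; this is the role the gap assumption plays in the abstract.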

Featured image: Sketch of the self-learning power method, the iterative method we use to find the ground state by solving a sequence of supervised learning problems. We use Markov chain Monte Carlo methods for the “Sample” step and a kernel method called kernel ridge regression for the “Learn” step.
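The Sample/Learn loop described in the caption can be sketched in plain Python for a system small enough to check exactly. This is a hedged illustration, not the authors' implementation: the two-spin transverse-field Ising chain, the Gaussian kernel on spin configurations, the shift Λ, the sample count, the ridge parameter, and every function name below are assumptions made for the example. Each iteration draws configurations from |ψ_k|² with Metropolis moves, evaluates one exact power step (Λ - H)ψ_k at those configurations (cheap because H is sparse), and fits ψ_{k+1} to these targets with kernel ridge regression.

```python
import itertools
import math
import random

# Illustrative toy problem (an assumption, not the paper's benchmark): open TFIM chain
# H = -J sum_i s_i s_{i+1} - h sum_i X_i, with configurations x = tuples of +/-1 spins.
N_SPINS, J, H_FIELD = 2, 1.0, 1.0
SHIFT = J * (N_SPINS - 1) + H_FIELD * N_SPINS  # Gershgorin bound: (SHIFT - H) >= 0


def kernel(x, y, sigma=1.0):
    """Gaussian kernel between two spin configurations (hypothetical choice)."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2.0 * sigma**2))


def flip(x, i):
    """Configuration x with spin i reversed."""
    return x[:i] + (-x[i],) + x[i + 1:]


def shifted_h_target(psi, x):
    """((SHIFT - H) psi)(x): H is sparse, so only psi at x and its single flips is needed."""
    diag = -J * sum(x[i] * x[i + 1] for i in range(N_SPINS - 1))
    h_psi = diag * psi(x) - H_FIELD * sum(psi(flip(x, i)) for i in range(N_SPINS))
    return SHIFT * psi(x) - h_psi


def metropolis(psi, n_samples, rng, sweeps=3):
    """Sample step: draw x ~ |psi(x)|^2 with single-spin-flip Metropolis moves."""
    x = tuple(rng.choice((-1, 1)) for _ in range(N_SPINS))
    amp = psi(x)
    samples = []
    for _ in range(n_samples):
        for _ in range(sweeps * N_SPINS):
            x_new = flip(x, rng.randrange(N_SPINS))
            amp_new = psi(x_new)
            if amp == 0.0 or rng.random() < (amp_new / amp) ** 2:
                x, amp = x_new, amp_new
        samples.append(x)
    return samples


def solve(a, b):
    """Solve a @ sol = b by Gaussian elimination with partial pivoting (inputs unchanged)."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        sol[r] = (m[r][n] - sum(m[r][c] * sol[c] for c in range(r + 1, n))) / m[r][r]
    return sol


def make_predictor(centers, alpha):
    """Kernel ridge regression predictor psi(x) = sum_i alpha_i k(x, x_i)."""
    return lambda x: sum(a * kernel(x, c) for a, c in zip(alpha, centers))


def self_learning_power_method(n_iter=15, n_samples=100, ridge=1e-6, seed=0):
    rng = random.Random(seed)
    psi = lambda x: 1.0  # uniform initial state
    for _ in range(n_iter):
        xs = metropolis(psi, n_samples, rng)  # "Sample"
        ys = [shifted_h_target(psi, x) for x in xs]  # one exact power step at the samples
        scale = max(abs(y) for y in ys)  # the overall normalization of psi is arbitrary
        ys = [y / scale for y in ys]
        gram = [[kernel(a, b) + (ridge if i == j else 0.0) for j, b in enumerate(xs)]
                for i, a in enumerate(xs)]
        alpha = solve(gram, ys)  # "Learn": closed-form kernel ridge regression
        psi = make_predictor(xs, alpha)
    return psi


def exact_energy(psi):
    """<psi|H|psi> / <psi|psi>, enumerating all 2^N configurations (tiny N only)."""
    configs = list(itertools.product((-1, 1), repeat=N_SPINS))
    num = sum(psi(x) * (SHIFT * psi(x) - shifted_h_target(psi, x)) for x in configs)
    den = sum(psi(x) ** 2 for x in configs)
    return num / den


energy = exact_energy(self_learning_power_method())
print(energy)
```

For N = 2 and h = 1 the exact ground-state energy is -√5 ≈ -2.236, which makes the output easy to check. The dense pure-Python solve scales cubically with the number of samples, so anything beyond a toy system would call for a numerical library.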

Popular summary

In this article, we show that by using the power method as an iterative supervised learning strategy, combined with a kernel method called kernel ridge regression, the ground-state search can be cast into a series of convex optimization problems that are trivial to solve. Unlike standard neural-network-based machine learning (ML) approaches, these problems do not require gradient-descent training.
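To see why each “Learn” step is a convex problem with a closed-form solution, consider kernel ridge regression on samples x_1, …, x_n with targets y_i. By the representer theorem the fitted function can be written as f(x) = Σ_i α_i k(x, x_i). Writing K_ij = k(x_i, x_j) for the kernel matrix and λ > 0 for the ridge parameter (our notation, not necessarily the paper's), the objective and its minimizer are:

$$
\begin{aligned}
\mathcal{L}(\alpha) &= \sum_{i=1}^{n} \bigl(y_i - f(x_i)\bigr)^2 + \lambda \lVert f \rVert_{\mathcal{H}}^2
  = \lVert y - K\alpha \rVert^2 + \lambda\, \alpha^{\top} K \alpha, \\
\nabla_\alpha \mathcal{L} &= 2K\bigl[(K + \lambda I)\alpha - y\bigr] = 0
  \quad\Longrightarrow\quad \alpha = (K + \lambda I)^{-1} y .
\end{aligned}
$$

The Hessian 2(K² + λK) is positive semidefinite for any positive-semidefinite kernel matrix K, so the objective is convex, and a single linear solve replaces iterative gradient-based training.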

More generally, we show that this approximate self-learning power method converges to the ground state as long as the error per step is small.
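The robustness statement can be illustrated with a noisy power iteration in the spirit of the noisy power method: at each step the exact update (Λ - H)ψ is corrupted by a random perturbation whose norm is a fraction ε of the update, standing in for the supervised-learning error. This is a toy sketch under stated assumptions: the two-spin transverse-field Ising Hamiltonian, the Gaussian noise model, and all names below are hypothetical choices, and the reference ground state comes from diagonalizing the symmetric 2 × 2 block of this Hamiltonian by hand (valid for h > 0).

```python
import math
import random


def tfim_two_spins(h):
    """Dense H = -Z1 Z2 - h (X1 + X2) in the basis |uu>, |ud>, |du>, |dd>."""
    return [
        [-1.0, -h, -h, 0.0],
        [-h, 1.0, 0.0, -h],
        [-h, 0.0, 1.0, -h],
        [0.0, -h, -h, -1.0],
    ]


def normalize(v):
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def exact_ground_state(h):
    """Analytic ground state (a, b, b, a) with b / a = (sqrt(1 + 4h^2) - 1) / (2h), h > 0."""
    ratio = (math.sqrt(1.0 + 4.0 * h * h) - 1.0) / (2.0 * h)
    return normalize([1.0, ratio, ratio, 1.0])


def noisy_power_method(h, eps, steps=200, seed=0):
    """Iterate psi <- normalize((shift - H) psi + noise), with ||noise|| = eps * ||update||."""
    rng = random.Random(seed)
    ham = tfim_two_spins(h)
    shift = 1.0 + 2.0 * h  # Gershgorin bound, so (shift - H) is positive semidefinite
    psi = normalize([1.0] * 4)
    for _ in range(steps):
        update = [shift * psi[i] - sum(ham[i][j] * psi[j] for j in range(4)) for i in range(4)]
        noise = normalize([rng.gauss(0.0, 1.0) for _ in range(4)])
        size = eps * math.sqrt(sum(u * u for u in update))
        psi = normalize([u + size * n for u, n in zip(update, noise)])
    return psi


for eps in (0.0, 1e-3, 1e-1):
    psi = noisy_power_method(h=1.0, eps=eps)
    overlap = abs(sum(a * b for a, b in zip(psi, exact_ground_state(1.0))))
    print(f"eps={eps:g}  |<psi|psi_0>| = {overlap:.6f}")
```

In this toy the overlap with the exact ground state stays close to 1 for small ε and typically decreases as ε grows; it illustrates the qualitative statement only and does not reproduce the paper's quantitative bounds.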




This paper is published in Quantum under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. Copyright remains with the original copyright holders, such as the authors or their institutions.


Source: https://quantum-journal.org/papers/q-2023-08-29-1096/
