Mozilla on Thursday attempted to explain its decision to disable, at least temporarily, the error-prone AI Explain button implemented last week on the MDN documentation website.

Steve Teixeira, Mozilla’s chief product officer, emitted a statement summarizing the Firefox maker’s enthusiasm for the possibility of using generative AI for “creating new value for people” while implementing the technology responsibly.

Teixeira said MDN last week added two AI services to its web developer documentation, AI Help and AI Explain.

AI Help, in beta, allows signed-in MDN users to pose questions in a conversation interface and receive answers from an OpenAI GPT-3.5 chatbot. It essentially allows you to query the documentation in natural language.

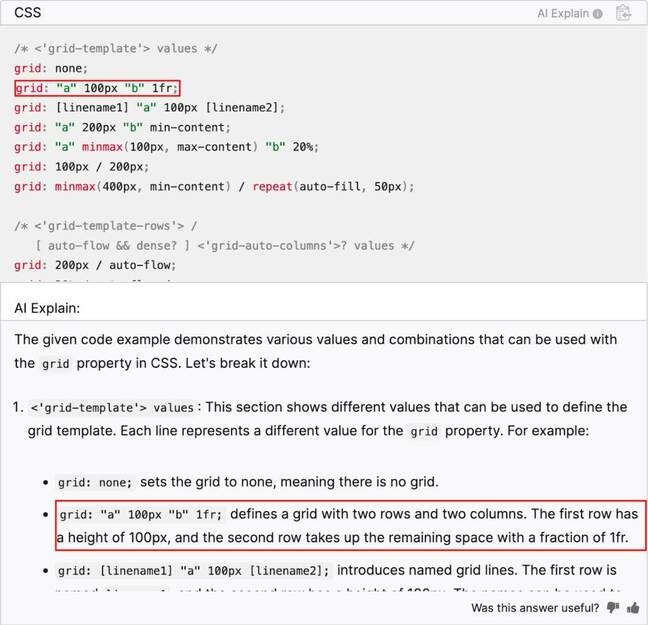

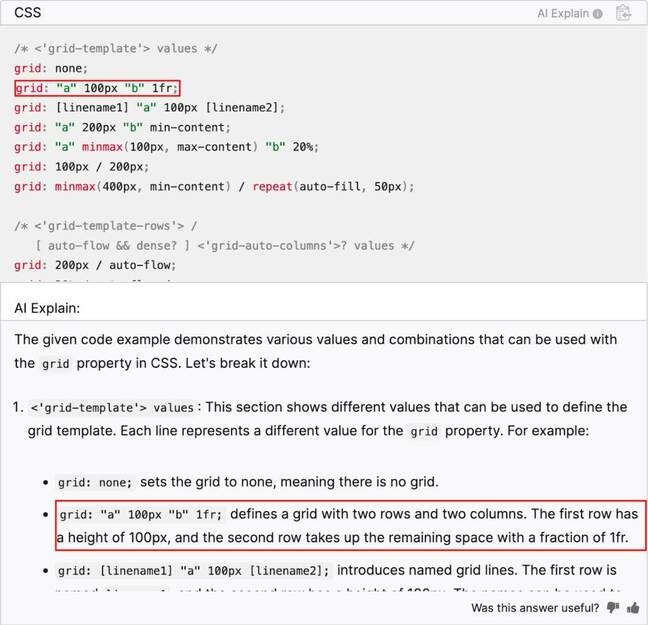

AI Explain, described as experimental, lets the same MDN users query the bot about code examples on the documentation page. If you have any questions about sample code in the docs, you can tap the AI Explain button, and the bot expands upon it with more detail, as it does incorrectly below.

Oops … AI Explain wrongly saying the highlighted code will define a grid of two rows and two columns, whereas the CSS actually only defines two rows. Source: Mozilla

According to Teixeira, both services produced “a wide range of feedback from readers, from delight to constructive criticisms to concerns about the technical accuracy of the responses.”

For AI Help, there were 129 “likes” and 41 “dislikes,” representing 75.88 percent positive feedback and 24.12 percent negative.

For AI Explain, there were 1,017 “likes” and 459 “dislikes,” representing 68.90 percent positive feedback and 31.10 percent negative.
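The percentages follow directly from the raw counts. As a minimal sketch for anyone who wants to check the arithmetic (the function name `feedback_share` is ours, not anything Mozilla published):

```python
def feedback_share(likes: int, dislikes: int) -> tuple[float, float]:
    """Return (positive %, negative %) of all votes, rounded to two decimals."""
    total = likes + dislikes
    if total == 0:
        raise ValueError("no votes recorded")
    return (round(likes / total * 100, 2), round(dislikes / total * 100, 2))


# Counts as reported in Mozilla's statement.
ai_help = feedback_share(129, 41)
ai_explain = feedback_share(1017, 459)

print(f"AI Help:    {ai_help[0]:.2f}% positive, {ai_help[1]:.2f}% negative")
print(f"AI Explain: {ai_explain[0]:.2f}% positive, {ai_explain[1]:.2f}% negative")
```

The shares are taken only over readers who clicked one of the two buttons, so they say nothing about how many answers were actually correct.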

As developers have pointed out, however, these statistics are not very useful – how many “likes” means it’s okay for “one of the most trusted resources for information about web standards” to provide incorrect answers?

Judging by the GitHub Issues bug report about AI Explain answering technical questions incorrectly, few of the 114 comments posted before the thread was locked favor the idea of an errant chatbot.

Teixeira said that for AI Help, most of those responding considered the AI answers helpful. “In the case of AI Explain, the pattern of feedback we received was similar, but readers also pointed out a handful of concrete cases where an incorrect answer was rendered,” he said.

“This feedback is enormously helpful, and the MDN team is now investigating these bug reports. We’ve elected to be cautious in our approach and have temporarily removed the AI Explain tool from MDN until we’ve completed our investigation and have high-quality remediations in place for the issues that have been observed.”

Mozilla, however, plans to continue working on ways to deploy generative AI. Teixeira said the MDN team aims to identify when its algorithmic help services provide incorrect information and to improve responses. The remediation effort will also include better options for flagging and reporting wrong answers.

Teixeira promised a postmortem report in the days ahead covering the launch of AI Help and AI Explain and the decision to suspend part of the service.

He also acknowledged the differences in opinion about how generative AI fits into a source of human-authored documentation and indicated that community feedback will continue to shape how MDN incorporates algorithmic tools.

“We do see a path forward that preserves the human-authored goodness while also providing tools that offer additional value over that amazing body of content,” he said.

“We need your input, criticism, kudos, and experience to ensure we’re employing AI in the most useful and responsible ways. Your feedback is critical to this process, and we will continue to take the feedback and adjust our plans in response to it. LLM technology remains relatively immature, so there will certainly be speed bumps along the way.”

Those differences of opinion surfaced in another GitHub Issues post, and they suggest some people in the community want no part of the proposed middle ground.

“One problem is this implementation – this particular integration of an LLM into MDN that will happily lie to users,” wrote software engineer Leonora Tindall in a comment on Thursday.

“But, the solution is not ‘make the LLM better.’ It solves no real problems that hiring technical writers wouldn’t solve. It is, in essence, a copyright laundering machine that saves money (by not hiring writers) at the expense of quality. It is an attempt to replace the labor of trained, knowledgeable writers with OpenAI’s exploitation machine – exploitation, not just of reams of copyrighted material scraped from across the Web, but also of underpaid workers in the global south.

“That is a practice that I, personally, think is unacceptable and abhorrent.”

Another developer who goes by the name “Be” offered a similar take:

“Everything about this blog post is disgusting. It’s abundantly clear at this point that Mozilla is an irresponsible steward of web documentation and should have no further involvement in it. I find it repugnant that Mozilla is trying to profit off content that they’re barely even writing anymore. That they are trying to profit from content they’re not writing using an LLM that they didn’t make either makes this situation even more bizarre.

“Fork it.” ®

- Source: https://go.theregister.com/feed/www.theregister.com/2023/07/06/mozilla_ai_explain_shift/
