The success of generative AI applications across a wide range of industries has attracted the attention and interest of companies worldwide that are looking to reproduce and surpass the achievements of competitors or solve new and exciting use cases. These customers are looking into foundation models, such as TII Falcon, Stable Diffusion XL, or OpenAI’s GPT-3.5, as the engines that power generative AI innovation.

Foundation models are a class of generative AI models that are capable of understanding and generating human-like content, thanks to the vast amounts of unstructured data they have been trained on. These models have revolutionized various computer vision (CV) and natural language processing (NLP) tasks, including image generation, translation, and question answering. They serve as the building blocks for many AI applications and have become a crucial component in the development of advanced intelligent systems.

However, deploying foundation models can come with significant challenges, particularly in terms of cost and resource requirements. These models are known for their size, often ranging from hundreds of millions to billions of parameters. Their large size demands extensive computational resources, including powerful hardware and significant memory capacity. In fact, deploying foundation models usually requires at least one GPU, and often more, to handle the computational load efficiently. For example, the TII Falcon-40B Instruct model requires at least an ml.g5.12xlarge instance to be loaded into memory successfully, but performs best with bigger instances. As a result, the return on investment (ROI) of deploying and maintaining these models can be too low to prove business value, especially during development cycles or for spiky workloads, because GPU-powered instances keep accruing charges for long sessions, potentially 24/7.

Earlier this year, we announced Amazon Bedrock, a serverless API to access foundation models from Amazon and our generative AI partners. Although it’s currently in Private Preview, Amazon Bedrock lets you use foundation models from Amazon, Anthropic, Stability AI, and AI21 without having to deploy any endpoints yourself. However, open-source models from communities such as Hugging Face have been growing rapidly, and not all of them are available through Amazon Bedrock.

In this post, we target these situations and address the risk of high costs by deploying large foundation models from Amazon SageMaker JumpStart to Amazon SageMaker asynchronous endpoints. This can help cut the cost of the architecture: the endpoint runs only while requests are in the queue and for a short time-to-live, and it scales down to zero when no requests are waiting to be serviced. This works well for many use cases; however, an endpoint that has scaled down to zero introduces a cold start time before it can serve inferences.

Solution overview

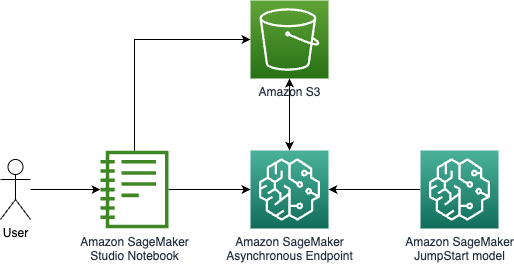

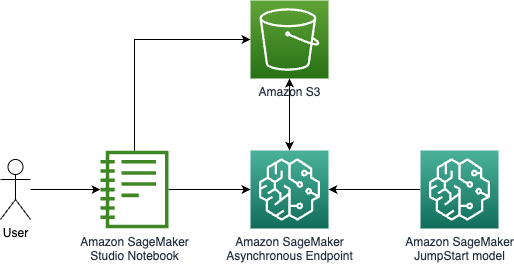

The following diagram illustrates our solution architecture.

The architecture we deploy is straightforward:

- The user interface is a notebook, which can be replaced by a web UI built on Streamlit or similar technology. In our case, the notebook is an Amazon SageMaker Studio notebook, running on an ml.m5.large instance with the PyTorch 2.0 Python 3.10 CPU kernel.

- The notebook queries the endpoint in three ways: the SageMaker Python SDK, the AWS SDK for Python (Boto3), and LangChain.

- The endpoint runs asynchronously on SageMaker and hosts the Falcon-40B Instruct model. At the time of writing, it’s a state-of-the-art instruct model, and it’s available in SageMaker JumpStart. A single API call allows us to deploy the model on the endpoint.

What is SageMaker asynchronous inference

SageMaker asynchronous inference is one of the four deployment options in SageMaker, together with real-time endpoints, batch inference, and serverless inference. To learn more about the different deployment options, refer to Deploy models for Inference.

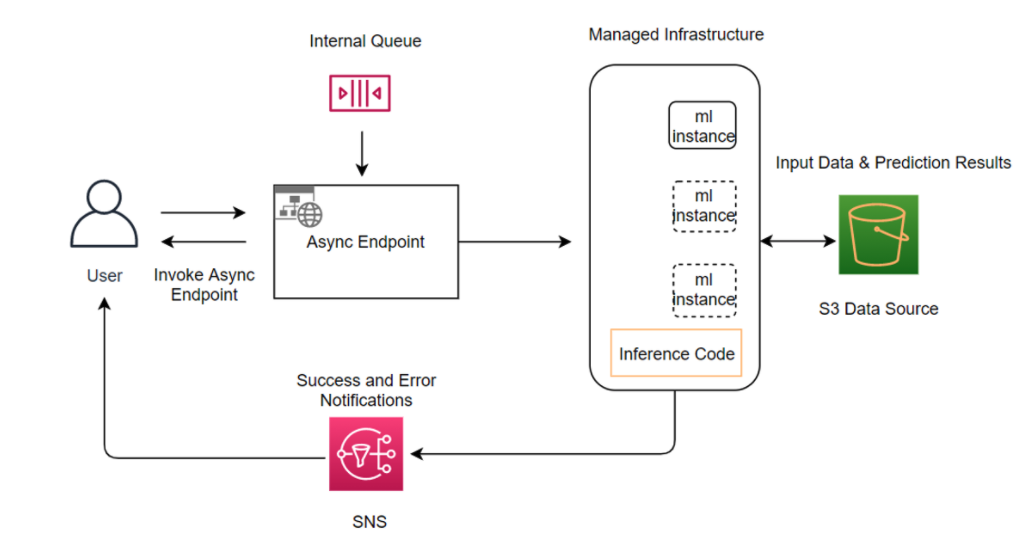

SageMaker asynchronous inference queues incoming requests and processes them asynchronously, making this option ideal for requests with large payload sizes up to 1 GB, long processing times, and near-real-time latency requirements. However, the main advantage that it provides when dealing with large foundation models, especially during a proof of concept (POC) or during development, is the capability to configure asynchronous inference to scale in to an instance count of zero when there are no requests to process, thereby saving costs. For more information about SageMaker asynchronous inference, refer to Asynchronous inference. The following diagram illustrates this architecture.

To deploy an asynchronous inference endpoint, you need to create an AsyncInferenceConfig object. If you create AsyncInferenceConfig without specifying its arguments, the default S3OutputPath will be s3://sagemaker-{REGION}-{ACCOUNTID}/async-endpoint-outputs/{UNIQUE-JOB-NAME} and S3FailurePath will be s3://sagemaker-{REGION}-{ACCOUNTID}/async-endpoint-failures/{UNIQUE-JOB-NAME}.
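A minimal sketch of setting these locations explicitly instead of relying on the defaults, using the output_path and failure_path parameters of the SageMaker Python SDK’s AsyncInferenceConfig. The bucket name, the prefix layout, and the concurrency value are illustrative assumptions, and make_async_config is a hypothetical helper, not part of the SDK.

```python
def make_async_config(bucket: str, prefix: str = "falcon-40b-async", max_concurrent: int = 4):
    """Build an AsyncInferenceConfig with explicit S3 output and failure paths.

    Hypothetical helper: the bucket, prefix layout, and concurrency are assumptions.
    """
    # Imported here so this file can be loaded even where the SDK isn't installed.
    from sagemaker.async_inference import AsyncInferenceConfig

    base = f"s3://{bucket}/{prefix.strip('/')}"
    return AsyncInferenceConfig(
        output_path=f"{base}/output",    # successful responses are written here
        failure_path=f"{base}/failure",  # error payloads are written here
        max_concurrent_invocations_per_instance=max_concurrent,
    )
```

For example, make_async_config("my-inference-bucket") writes results under s3://my-inference-bucket/falcon-40b-async/output; calling AsyncInferenceConfig() with no arguments instead falls back to the default paths listed above.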

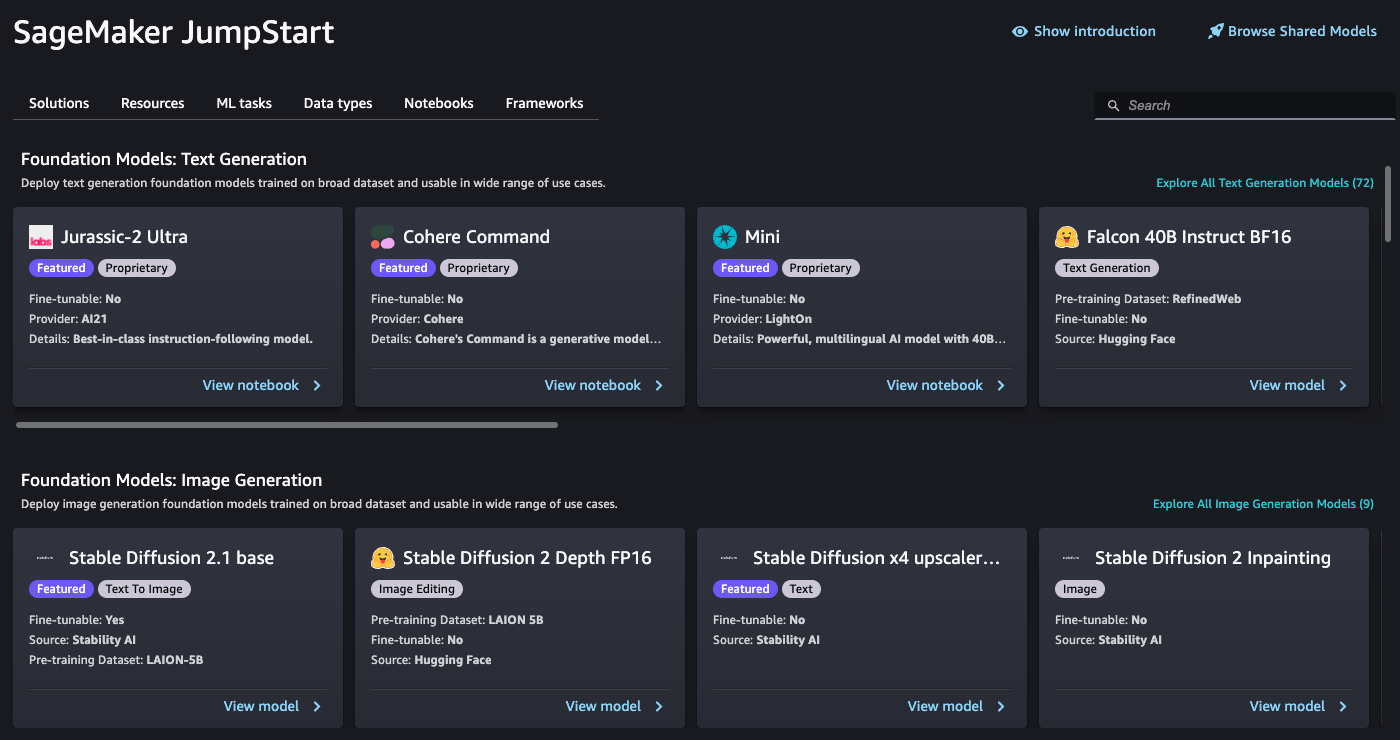

What is SageMaker JumpStart

Our model comes from SageMaker JumpStart, a feature of SageMaker that accelerates the machine learning (ML) journey by offering pre-trained models, solution templates, and example notebooks. It provides access to a wide range of pre-trained models for different problem types, allowing you to start your ML tasks with a solid foundation, along with solution templates for common use cases and example notebooks for learning. With one-click solution launches and comprehensive resources for practical ML experience, SageMaker JumpStart can reduce the time and effort required to start your ML projects.

The following screenshot shows some of the models available in the SageMaker JumpStart UI.

Deploy the model

Our first step is to deploy the model to SageMaker. To do that, we can use the UI for SageMaker JumpStart or the SageMaker Python SDK, which provides an API that we can use to deploy the model to the asynchronous endpoint:

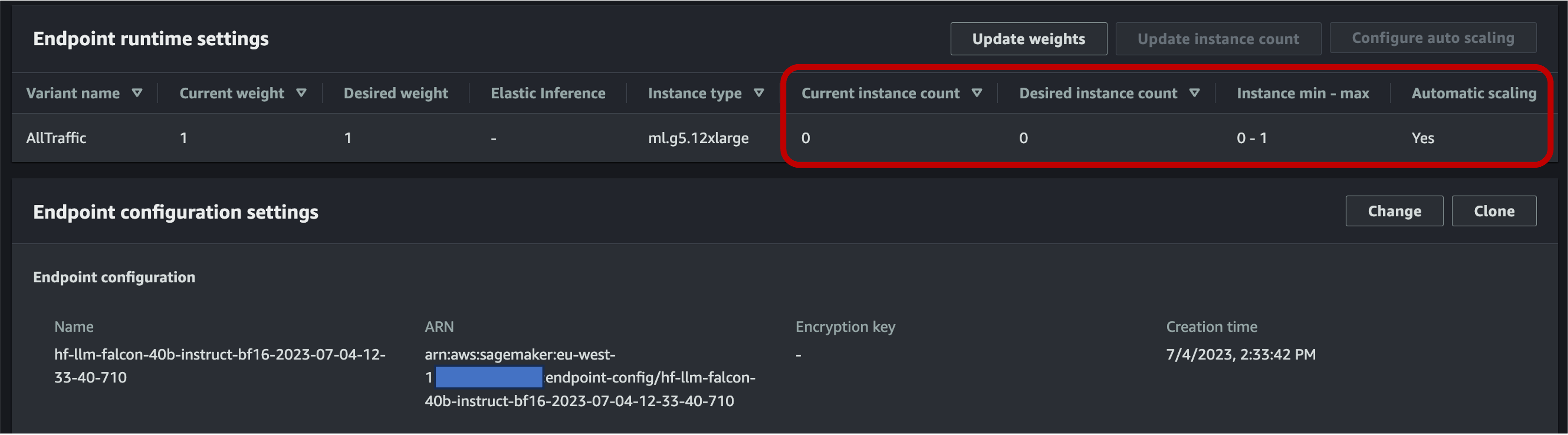
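A minimal sketch of this deployment with the SageMaker Python SDK’s JumpStartModel class. The model ID huggingface-llm-falcon-40b-instruct-bf16 is our assumption for Falcon-40B Instruct in SageMaker JumpStart (check the JumpStart catalog for the exact ID), and deploy_falcon_async is a hypothetical helper. Passing async_inference_config is what makes deploy() create an asynchronous endpoint and return an AsyncPredictor.

```python
def deploy_falcon_async(
    async_config,
    model_id: str = "huggingface-llm-falcon-40b-instruct-bf16",  # assumed JumpStart model ID
    instance_type: str = "ml.g5.12xlarge",  # at least this size is needed for Falcon-40B
):
    """Deploy a JumpStart model behind an asynchronous endpoint (hypothetical helper)."""
    # Imported here so this file can be loaded even where the SDK isn't installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # Supplying async_inference_config makes deploy() create an asynchronous
    # endpoint and return an AsyncPredictor instead of a real-time Predictor.
    return model.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        async_inference_config=async_config,
    )
```

For example, deploy_falcon_async(AsyncInferenceConfig()) deploys with the default S3 output and failure paths. The endpoint starts with one instance; an auto scaling policy is what later lets it drop to zero.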

This call can take approximately 10 minutes to complete. During this time, the endpoint is spun up, the container and the model artifacts are downloaded to the endpoint, the model configuration is loaded from SageMaker JumpStart, and then the asynchronous endpoint is exposed via a DNS endpoint.

To make sure that our endpoint can scale down to zero, we need to configure auto scaling on the asynchronous endpoint using Application Auto Scaling. You first register your endpoint variant with Application Auto Scaling, then define a scaling policy and apply it. In this configuration, we use a customized metric specification (CustomizedMetricSpecification) that tracks the ApproximateBacklogSizePerInstance metric, as shown in the following code. For a detailed list of Amazon CloudWatch metrics available with your asynchronous inference endpoint, refer to Monitoring with CloudWatch.
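A minimal sketch of those steps with Boto3’s application-autoscaling client, following the target-tracking pattern described in the SageMaker documentation for asynchronous endpoints. The variant name AllTraffic (the SageMaker Python SDK’s default), the target of 5 queued requests per instance, the maximum of 2 instances, and the cooldown values are assumptions to tune for your workload. The AWS documentation also describes a separate step scaling policy on the HasBacklogWithoutCapacity metric for scaling out from zero promptly; that policy isn’t shown here.

```python
SCALABLE_DIMENSION = "sagemaker:variant:DesiredInstanceCount"


def configure_scale_to_zero(
    autoscaling_client,
    endpoint_name: str,
    variant_name: str = "AllTraffic",  # assumed: the SageMaker Python SDK default
    max_capacity: int = 2,
    target_backlog_per_instance: float = 5.0,
) -> str:
    """Register the variant and attach a backlog-based target-tracking policy."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"

    # Step 1: register the variant as a scalable target that may shrink to 0.
    autoscaling_client.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=SCALABLE_DIMENSION,
        MinCapacity=0,
        MaxCapacity=max_capacity,
    )

    # Steps 2 and 3: define and apply a policy that tracks queued requests per instance.
    response = autoscaling_client.put_scaling_policy(
        PolicyName=f"{endpoint_name}-backlog-tracking",
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=SCALABLE_DIMENSION,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": target_backlog_per_instance,
            "CustomizedMetricSpecification": {
                "MetricName": "ApproximateBacklogSizePerInstance",
                "Namespace": "AWS/SageMaker",
                "Dimensions": [{"Name": "EndpointName", "Value": endpoint_name}],
                "Statistic": "Average",
            },
            "ScaleInCooldown": 600,   # seconds to wait after a scale-in activity
            "ScaleOutCooldown": 300,  # seconds to wait after a scale-out activity
        },
    )
    return response["PolicyARN"]
```

For example, configure_scale_to_zero(boto3.client("application-autoscaling"), "my-falcon-async-endpoint"), where the endpoint name is a placeholder for the one created by deploy().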

You can verify that this policy has been set successfully by navigating to the SageMaker console, choosing Endpoints under Inference in the navigation pane, and looking for the endpoint we just deployed.
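If you prefer to check from code rather than the console, a sketch like the following lists the scaling policies attached to the endpoint variant through Application Auto Scaling’s describe_scaling_policies call. The helper name and the AllTraffic variant name are assumptions, and only the first page of results is read.

```python
def list_scaling_policies(autoscaling_client, endpoint_name: str, variant_name: str = "AllTraffic"):
    """Return the names of scaling policies attached to one endpoint variant."""
    response = autoscaling_client.describe_scaling_policies(
        ServiceNamespace="sagemaker",
        ResourceId=f"endpoint/{endpoint_name}/variant/{variant_name}",
        ScalableDimension="sagemaker:variant:DesiredInstanceCount",
    )
    # Only the first page is read; NextToken pagination is ignored here.
    return [policy["PolicyName"] for policy in response["ScalingPolicies"]]
```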

Invoke the asynchronous endpoint

To invoke the endpoint, you need to place the request payload in Amazon Simple Storage Service (Amazon S3) and provide a pointer to this payload as a part of the InvokeEndpointAsync request. Upon invocation, SageMaker queues the request for processing and returns an identifier and output location as a response. Upon processing, SageMaker places the result in the Amazon S3 location. You can optionally choose to receive success or error notifications with Amazon Simple Notification Service (Amazon SNS).
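The first step, placing the payload in Amazon S3, can be sketched with Boto3’s put_object. The helper name, the random key layout, and the JSON content type are assumptions; the returned s3:// URI is the pointer you pass as the input location of the asynchronous request.

```python
import json
import uuid


def upload_payload(s3_client, bucket: str, prefix: str, payload: dict) -> str:
    """Write a JSON request payload to S3 and return its s3:// URI (hypothetical helper)."""
    # A random object name keeps concurrent requests from overwriting each other.
    key = f"{prefix.strip('/')}/{uuid.uuid4()}.json"
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(payload).encode("utf-8"),
        ContentType="application/json",
    )
    return f"s3://{bucket}/{key}"
```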

SageMaker Python SDK

After deployment is complete, the deploy call returns an AsyncPredictor object. To perform asynchronous inference, you need to upload data to Amazon S3 and call the predict_async() method with the S3 URI as the input. It returns an AsyncInferenceResponse object, and you can check the result using its get_result() method.
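A minimal sketch of this first pattern, assuming predictor is the AsyncPredictor returned by deploy() and the payload has already been uploaded to S3. The helper name is hypothetical. With waiter_config left as None, get_result() checks the output location once and raises if the result isn’t there yet; passing a sagemaker.async_inference.WaiterConfig makes it poll instead.

```python
def run_async_inference(predictor, input_s3_uri: str, waiter_config=None):
    """Queue a request whose payload is already in S3, then fetch its result."""
    # predict_async() returns immediately with an AsyncInferenceResponse.
    response = predictor.predict_async(input_path=input_s3_uri)
    # Without a waiter_config, get_result() checks the output location only once.
    return response.get_result(waiter_config)
```

For example, run_async_inference(predictor, "s3://my-bucket/inputs/request.json", WaiterConfig(max_attempts=40, delay=15)) polls up to 40 times, 15 seconds apart.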

Alternatively, if you would like to check for a result periodically and return it upon generation, use the predict() method. We use this second method in the following code:
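A hedged sketch of the predict() approach. It assumes the endpoint accepts a Hugging Face Text Generation Inference style payload ({"inputs": ..., "parameters": {...}}) and returns a list of {"generated_text": ...} objects; verify both formats against your model’s documentation. The helper name and generation parameters are illustrative.

```python
def generate(predictor, prompt: str, max_new_tokens: int = 256, waiter_config=None) -> str:
    """Send a prompt through AsyncPredictor.predict() and return the generated text."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "do_sample": True, "temperature": 0.7},
    }
    # predict() uploads the payload, invokes the endpoint, and polls S3 until the
    # result appears; omitting waiter_config keeps the SDK's default polling.
    kwargs = {} if waiter_config is None else {"waiter_config": waiter_config}
    result = predictor.predict(payload, **kwargs)
    return result[0]["generated_text"]
```

For example, generate(predictor, "What is Amazon SageMaker?", waiter_config=WaiterConfig(max_attempts=100, delay=10)) gives the request roughly 16 minutes, which leaves room for a cold start.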

Boto3

Let’s now explore the invoke_endpoint_async method from Boto3’s sagemaker-runtime client. It lets developers invoke a SageMaker endpoint asynchronously: the call returns right away with an inference ID and the Amazon S3 location where the response will be written, so you can track progress and retrieve the response later. Unlike the SageMaker Python SDK’s get_result() method, Boto3 doesn’t offer a way to wait for the asynchronous inference to complete. Therefore, we take advantage of the fact that SageMaker writes the inference output to the Amazon S3 location returned in response["OutputLocation"]. We can use the following function to wait for the inference file to be written to Amazon S3:
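One way to write that function, sketched under assumptions: it polls with list_objects_v2 rather than catching head_object errors, also watches the failure location that InvokeEndpointAsync returns as FailureLocation, and uses a 15-minute timeout and 15-second interval that you should tune. The function names and defaults are illustrative.

```python
import time
from typing import Optional
from urllib.parse import urlparse


def _split_s3_uri(uri: str):
    """Split s3://bucket/key into (bucket, key)."""
    parsed = urlparse(uri)
    return parsed.netloc, parsed.path.lstrip("/")


def _object_exists(s3_client, uri: str) -> bool:
    bucket, key = _split_s3_uri(uri)
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=key, MaxKeys=1)
    return any(obj["Key"] == key for obj in response.get("Contents", []))


def _read_text(s3_client, uri: str) -> str:
    bucket, key = _split_s3_uri(uri)
    return s3_client.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")


def wait_for_inference_file(
    s3_client,
    output_location: str,
    failure_location: Optional[str] = None,
    timeout_s: float = 900,
    poll_s: float = 15,
) -> str:
    """Poll S3 until the result (or failure) file appears; return the result text."""
    deadline = time.monotonic() + timeout_s
    while True:
        if _object_exists(s3_client, output_location):
            return _read_text(s3_client, output_location)
        if failure_location and _object_exists(s3_client, failure_location):
            raise RuntimeError(f"Async inference failed: {_read_text(s3_client, failure_location)}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"No result at {output_location} after {timeout_s} seconds")
        time.sleep(poll_s)
```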

With this function, we can now query the endpoint:
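A minimal sketch of the query with Boto3’s invoke_endpoint_async. It assumes the JSON payload is already in S3 (for example, written with put_object); the helper name and the one-hour RequestTTLSeconds value are illustrative. The returned OutputLocation and FailureLocation are the locations to poll for the result.

```python
def invoke_async(sm_runtime, endpoint_name: str, input_location: str, request_ttl_s: int = 3600):
    """Queue one asynchronous request; return (inference_id, output_uri, failure_uri)."""
    response = sm_runtime.invoke_endpoint_async(
        EndpointName=endpoint_name,
        InputLocation=input_location,     # s3:// URI of the uploaded payload
        ContentType="application/json",
        RequestTTLSeconds=request_ttl_s,  # how long the request may wait in the queue
    )
    # SageMaker writes the result to OutputLocation (or errors to FailureLocation).
    return response["InferenceId"], response["OutputLocation"], response.get("FailureLocation")
```

For example, invoke_async(boto3.client("sagemaker-runtime"), "my-falcon-async-endpoint", "s3://my-bucket/inputs/request.json"), where the endpoint name and URI are placeholders.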

LangChain

LangChain is an open-source framework launched in October 2022 by Harrison Chase. It simplifies the development of applications using large language models (LLMs) by providing integrations with various systems and data sources. LangChain allows for document analysis, summarization, chatbot creation, code analysis, and more. It has gained popularity, with contributions from hundreds of developers and significant funding from venture firms. LangChain enables the connection of LLMs with external sources, making it possible to create dynamic, data-responsive applications. It offers libraries, APIs, and documentation to streamline the development process.

LangChain provides libraries and examples for using SageMaker endpoints with its framework, making it easier to use ML models hosted on SageMaker as the “brain” of the chain. To learn more about how LangChain integrates with SageMaker, refer to the SageMaker Endpoint in the LangChain documentation.

One of the limits of the current implementation of LangChain is that it doesn’t support asynchronous endpoints natively. To use an asynchronous endpoint with LangChain, we have to define a new class, SagemakerAsyncEndpoint, that extends the SagemakerEndpoint class already available in LangChain. Additionally, we provide the following information:

- The S3 bucket and prefix where asynchronous inference will store the inputs (and outputs)

- A maximum number of seconds to wait before timing out

- An updated _call() function to query the endpoint with invoke_endpoint_async() instead of invoke_endpoint()

- A way to wake up the asynchronous endpoint if it’s in cold start (scaled down to zero)
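As an illustration of the last point, independent of the GitHub implementation, one way to detect a cold endpoint is to read CurrentInstanceCount from the SageMaker DescribeEndpoint API and allow a longer wait when it’s zero. The helper names and the 5-minute and 20-minute timeouts are assumptions.

```python
def current_instance_count(sm_client, endpoint_name: str) -> int:
    """Sum CurrentInstanceCount across the endpoint's production variants."""
    description = sm_client.describe_endpoint(EndpointName=endpoint_name)
    return sum(v.get("CurrentInstanceCount", 0) for v in description.get("ProductionVariants", []))


def choose_timeout(sm_client, endpoint_name: str, warm_timeout_s: int = 300, cold_timeout_s: int = 1200) -> int:
    """Allow extra time when the endpoint has scaled in to zero instances (cold start)."""
    if current_instance_count(sm_client, endpoint_name) == 0:
        return cold_timeout_s
    return warm_timeout_s
```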

To review the newly created SagemakerAsyncEndpoint, you can check out the sagemaker_async_endpoint.py file available on GitHub.

Clean up

When you’re done testing the generation of inferences from the endpoint, remember to delete the endpoint to avoid incurring extra charges:
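A minimal cleanup sketch, assuming predictor is the AsyncPredictor returned by deploy(). The optional Application Auto Scaling step deregisters the scalable target, which also removes the scaling policies attached to it; the helper name and the AllTraffic variant name are assumptions.

```python
def clean_up(predictor, endpoint_name: str, autoscaling_client=None, variant_name: str = "AllTraffic") -> None:
    """Remove auto scaling (optional), then the model and the endpoint."""
    if autoscaling_client is not None:
        # Deregistering the scalable target also deletes its scaling policies.
        autoscaling_client.deregister_scalable_target(
            ServiceNamespace="sagemaker",
            ResourceId=f"endpoint/{endpoint_name}/variant/{variant_name}",
            ScalableDimension="sagemaker:variant:DesiredInstanceCount",
        )
    predictor.delete_model()
    predictor.delete_endpoint()  # also deletes the endpoint configuration by default
```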

Conclusion

When deploying large foundation models like TII Falcon, optimizing cost is crucial. These models require powerful hardware and substantial memory capacity, leading to high infrastructure costs. SageMaker asynchronous inference, a deployment option that processes requests asynchronously, reduces expenses by scaling the instance count to zero when there are no pending requests. In this post, we demonstrated how to deploy large SageMaker JumpStart foundation models to SageMaker asynchronous endpoints. We provided code examples using the SageMaker Python SDK, Boto3, and LangChain to illustrate different methods for invoking asynchronous endpoints and retrieving results. These techniques enable developers and researchers to optimize costs while using the capabilities of foundation models for advanced language understanding systems.

To learn more about asynchronous inference and SageMaker JumpStart, check out the following posts:

About the author

Davide Gallitelli is a Specialist Solutions Architect for AI/ML in the EMEA region. He is based in Brussels and works closely with customers throughout Benelux. He has been a developer since he was very young, starting to code at the age of 7. He started learning AI/ML at university, and has fallen in love with it since then.

- Source: https://aws.amazon.com/blogs/machine-learning/optimize-deployment-cost-of-amazon-sagemaker-jumpstart-foundation-models-with-amazon-sagemaker-asynchronous-endpoints/
