With recent advancements in generative AI, there is a lot of discussion about how to use it across different industries to solve specific business problems. Generative AI is a type of AI that can create new content and ideas, including conversations, stories, images, videos, and music. It is powered by very large models that are pre-trained on vast amounts of data, commonly referred to as foundation models (FMs). These FMs can perform a wide range of tasks that span multiple domains, like writing blog posts, generating images, solving math problems, engaging in dialog, and answering questions based on a document. The size and general-purpose nature of FMs make them different from traditional ML models, which typically perform specific tasks, like analyzing text for sentiment, classifying images, and forecasting trends.

While organizations are looking to use the power of these FMs, they also want the FM-based solutions to run in their own protected environments. Organizations operating in heavily regulated spaces like global financial services and healthcare and life sciences have audit and compliance requirements to run their environments in their own VPCs. In many cases, even direct internet access is disabled in these environments to avoid exposure to any unintended traffic, both ingress and egress.

Amazon SageMaker JumpStart is an ML hub offering algorithms, models, and ML solutions. With SageMaker JumpStart, ML practitioners can choose from a growing list of best performing open source FMs. It also provides the ability to deploy these models in your own Virtual Private Cloud (VPC).

In this post, we demonstrate how to use JumpStart to deploy a Flan-T5 XXL model in a VPC with no internet connectivity. We discuss the following topics:

- How to deploy a foundation model using SageMaker JumpStart in a VPC with no internet access

- Advantages of deploying FMs via SageMaker JumpStart models in VPC mode

- Alternate ways to customize deployment of foundation models via JumpStart

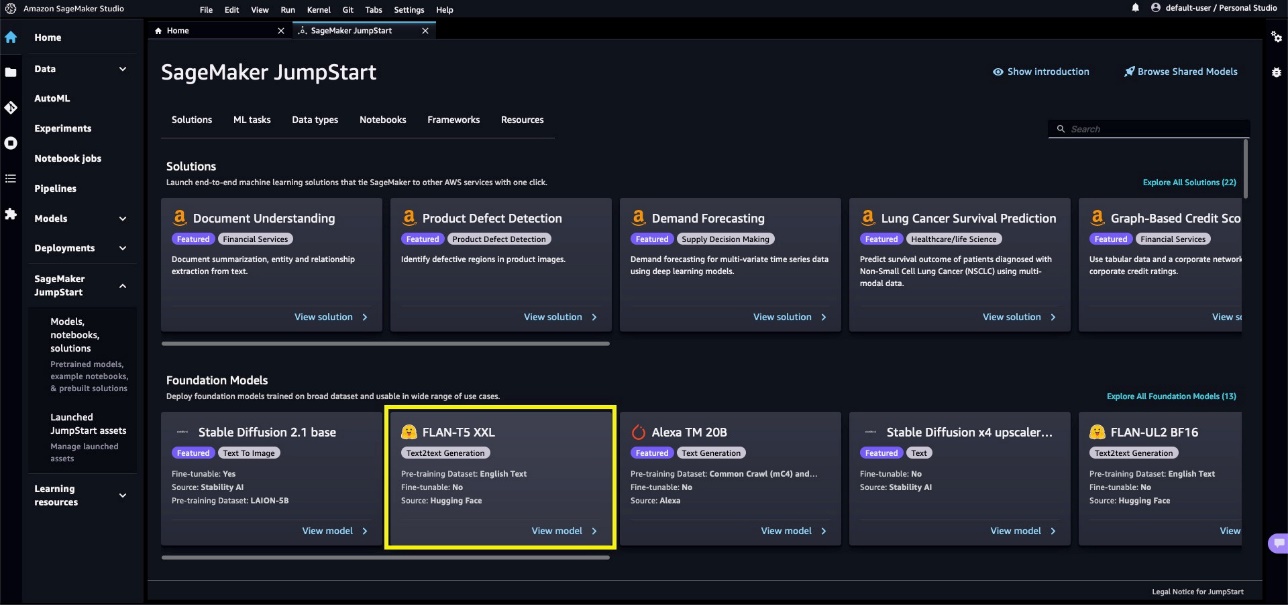

Apart from Flan-T5 XXL, JumpStart provides many other foundation models for various tasks. For the complete list, check out Getting started with Amazon SageMaker JumpStart.

Solution overview

As part of the solution, we cover the following steps:

- Set up a VPC with no internet connection.

- Set up Amazon SageMaker Studio using the VPC we created.

- Deploy the generative AI Flan-T5 XXL foundation model using JumpStart in the VPC with no internet access.

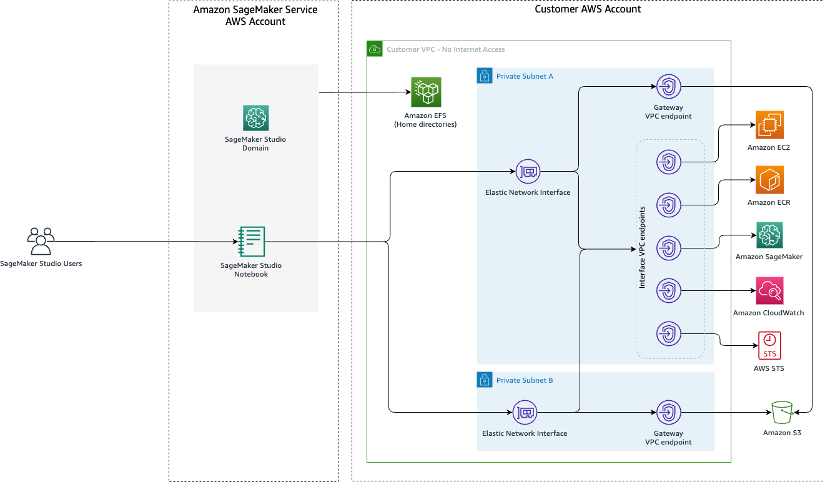

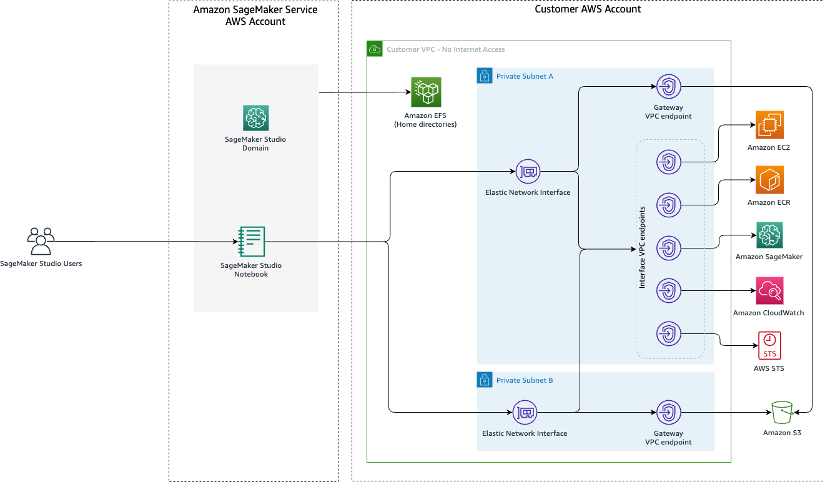

The following is an architecture diagram of the solution.

Let’s walk through the different steps to implement this solution.

Prerequisites

To follow along with this post, you need the following:

Set up a VPC with no internet connection

Create a new CloudFormation stack by using the 01_networking.yaml template. This template creates a new VPC and adds two private subnets across two Availability Zones with no internet connectivity. It then deploys gateway VPC endpoints for accessing Amazon Simple Storage Service (Amazon S3) and interface VPC endpoints for SageMaker and a few other services to allow the resources in the VPC to connect to AWS services via AWS PrivateLink.
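To make the difference between the two endpoint types concrete, here is a minimal sketch of the request parameters that the EC2 `create_vpc_endpoint` API would take for them. It is not taken from the 01_networking.yaml template. The list of interface services, the function names, and all resource IDs are illustrative assumptions, and the template may create a different set of endpoints.

```python
# Assumption: this service list is illustrative only; the actual
# 01_networking.yaml template may create a different set of endpoints.
INTERFACE_SERVICES = ["sagemaker.api", "sagemaker.runtime", "sts", "logs"]


def endpoint_requests(region, vpc_id, subnet_ids, security_group_ids, route_table_ids):
    """Build keyword arguments for EC2 create_vpc_endpoint calls."""
    requests = [
        {
            # A gateway endpoint (used for Amazon S3) attaches to route tables.
            "VpcEndpointType": "Gateway",
            "VpcId": vpc_id,
            "ServiceName": f"com.amazonaws.{region}.s3",
            "RouteTableIds": list(route_table_ids),
        }
    ]
    for service in INTERFACE_SERVICES:
        requests.append(
            {
                # An interface endpoint places a network interface in the
                # given subnets and is reached via AWS PrivateLink.
                "VpcEndpointType": "Interface",
                "VpcId": vpc_id,
                "ServiceName": f"com.amazonaws.{region}.{service}",
                "SubnetIds": list(subnet_ids),
                "SecurityGroupIds": list(security_group_ids),
                "PrivateDnsEnabled": True,
            }
        )
    return requests


def create_endpoints(requests):
    """Send the requests to EC2 (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without it

    ec2 = boto3.client("ec2")
    return [ec2.create_vpc_endpoint(**request) for request in requests]
```

In this post the CloudFormation template handles endpoint creation, so the sketch is only meant to show which settings distinguish a gateway endpoint from an interface endpoint.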

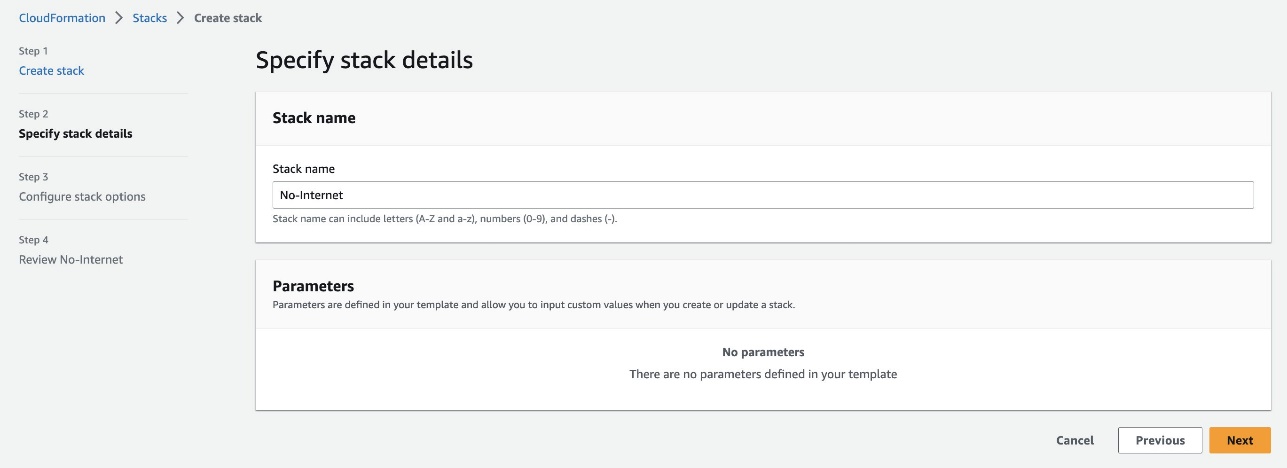

Provide a stack name, such as No-Internet, and complete the stack creation process.

This solution is not highly available because the CloudFormation template creates interface VPC endpoints only in one subnet to reduce costs when following the steps in this post.

Set up Studio using the VPC

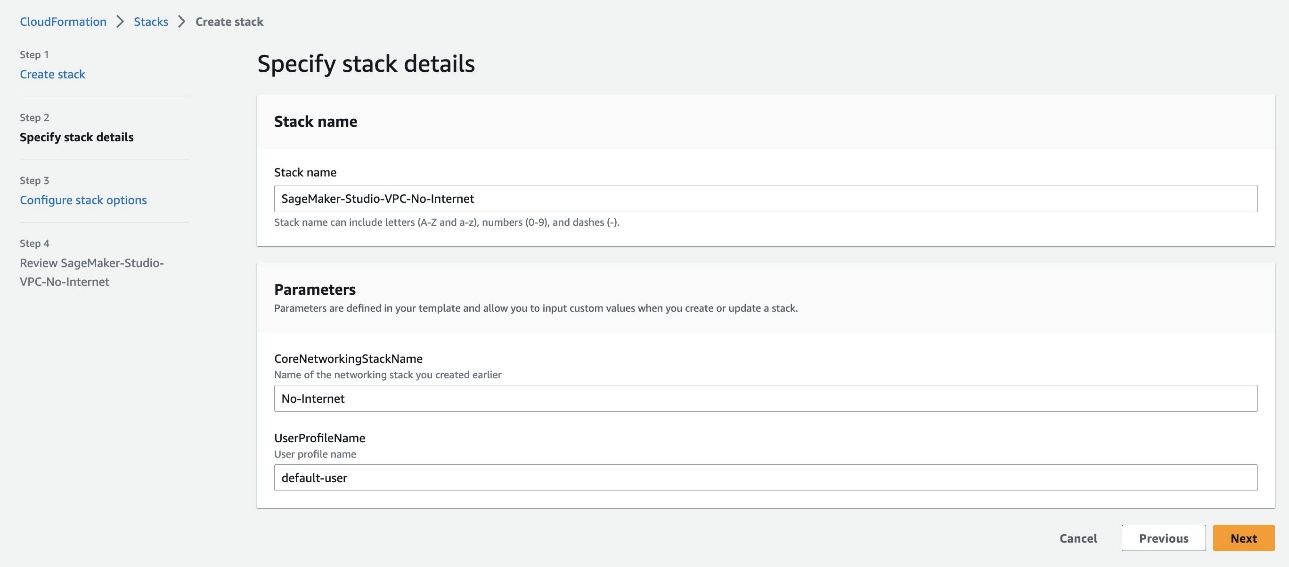

Create another CloudFormation stack using 02_sagemaker_studio.yaml, which creates a Studio domain, Studio user profile, and supporting resources like IAM roles. Choose a name for the stack; for this post, we use the name SageMaker-Studio-VPC-No-Internet. Provide the name of the VPC stack you created earlier (No-Internet) as the CoreNetworkingStackName parameter and leave everything else as default.
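If you prefer scripting over the console, the two stacks could be created with the AWS SDK for Python along the following lines. This is a sketch under assumptions: the helper names are hypothetical, the templates are assumed to be in the working directory, and `CAPABILITY_NAMED_IAM` is assumed to be needed because the Studio template creates IAM roles.

```python
def stack_request(stack_name, template_body, parameters=None):
    """Build keyword arguments for the CloudFormation create_stack call."""
    request = {
        "StackName": stack_name,
        "TemplateBody": template_body,
        # Assumption: acknowledging IAM resource creation is required
        # because the Studio template creates IAM roles.
        "Capabilities": ["CAPABILITY_NAMED_IAM"],
    }
    if parameters:
        request["Parameters"] = [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in parameters.items()
        ]
    return request


def create_and_wait(request):
    """Create the stack and block until creation completes."""
    import boto3  # imported lazily; requires AWS credentials at call time

    cfn = boto3.client("cloudformation")
    cfn.create_stack(**request)
    cfn.get_waiter("stack_create_complete").wait(StackName=request["StackName"])


def main():
    """Create the networking stack first, then the Studio stack."""
    with open("01_networking.yaml") as template:
        create_and_wait(stack_request("No-Internet", template.read()))
    with open("02_sagemaker_studio.yaml") as template:
        create_and_wait(
            stack_request(
                "SageMaker-Studio-VPC-No-Internet",
                template.read(),
                {"CoreNetworkingStackName": "No-Internet"},
            )
        )
```

Calling `main()` runs both steps in order. The Studio stack has to come second because it takes the networking stack's name as its `CoreNetworkingStackName` parameter.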

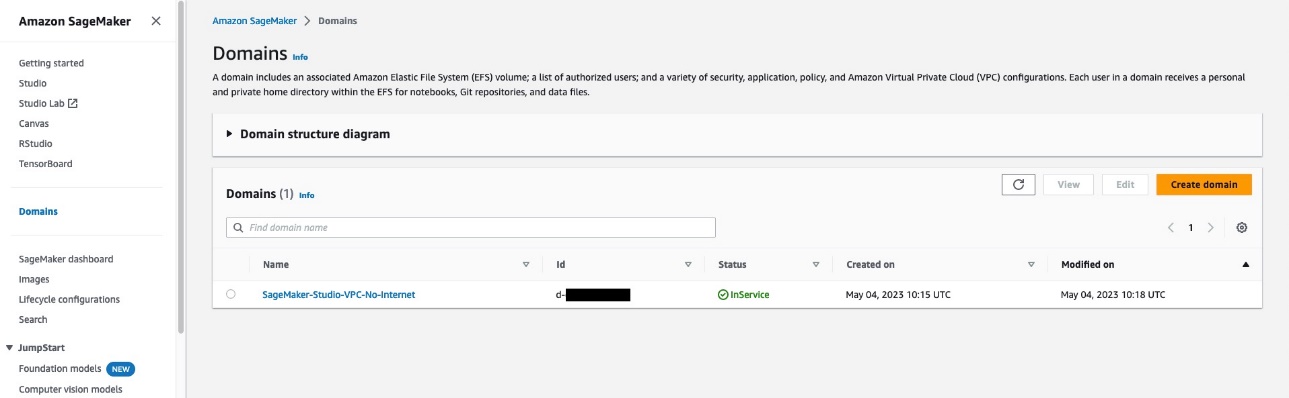

Wait until AWS CloudFormation reports that the stack creation is complete. You can confirm the Studio domain is available to use on the SageMaker console.

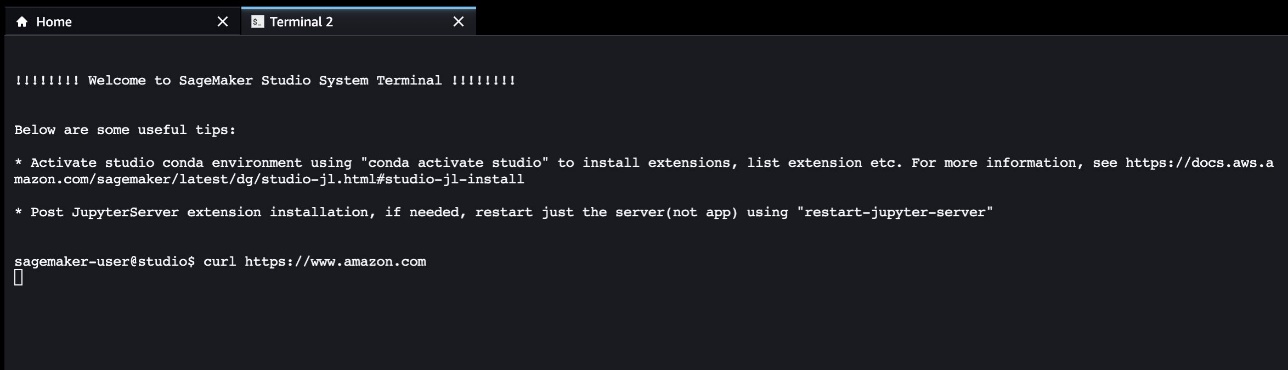

To verify the Studio domain user has no internet access, launch Studio using the SageMaker console. Choose File, New, and Terminal, then attempt to access an internet resource. As shown in the following screenshot, the terminal will keep waiting for the resource and eventually time out.

This proves that Studio is operating in a VPC that doesn’t have internet access.
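The same check can be scripted from a Studio notebook or terminal. The following is a minimal sketch using only the Python standard library. The function name and the target host are arbitrary choices for illustration, and the `connect` argument exists only so the function can be exercised without a network.

```python
import socket


def can_reach(host, port=443, timeout=5.0, connect=socket.create_connection):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        conn = connect((host, port), timeout=timeout)
    except OSError:
        # Covers timeouts, refused connections, and DNS resolution failures.
        return False
    conn.close()
    return True
```

Inside the VPC created earlier, a call such as `can_reach("example.com")` would be expected to return `False` once the timeout elapses, matching the behavior seen in the terminal.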

Deploy the generative AI foundation model Flan-T5 XXL using JumpStart

We can deploy this model via Studio as well as via API. JumpStart provides all the code to deploy the model via a SageMaker notebook accessible from within Studio. For this post, we showcase this capability from Studio.

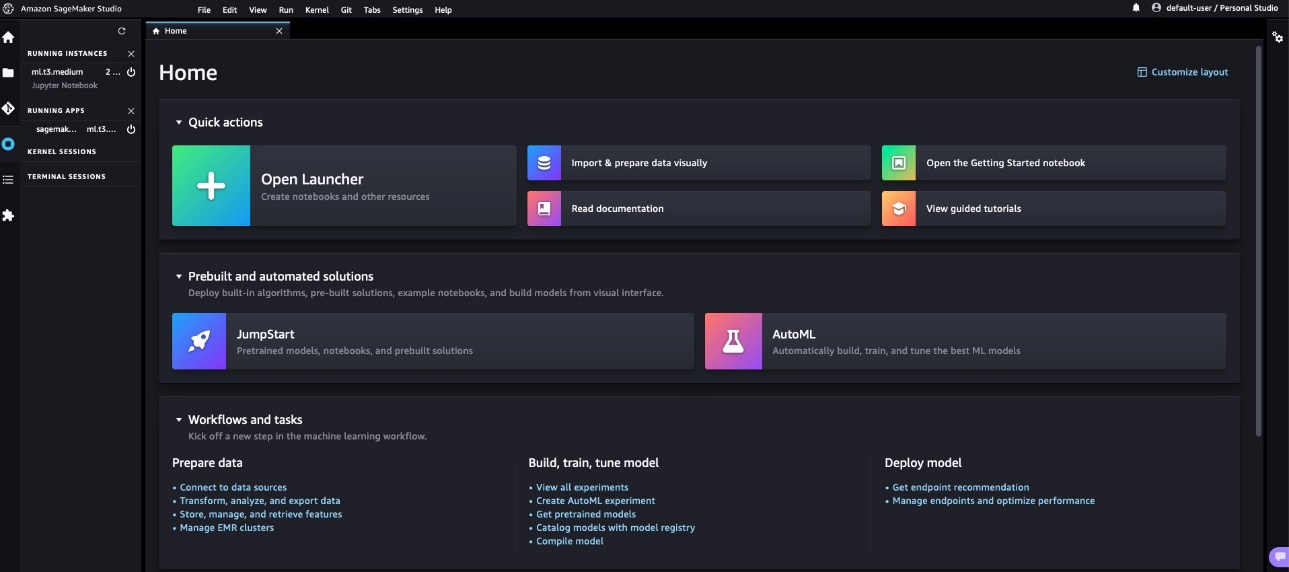

- On the Studio welcome page, choose JumpStart under Prebuilt and automated solutions.

- Choose the Flan-T5 XXL model under Foundation Models.

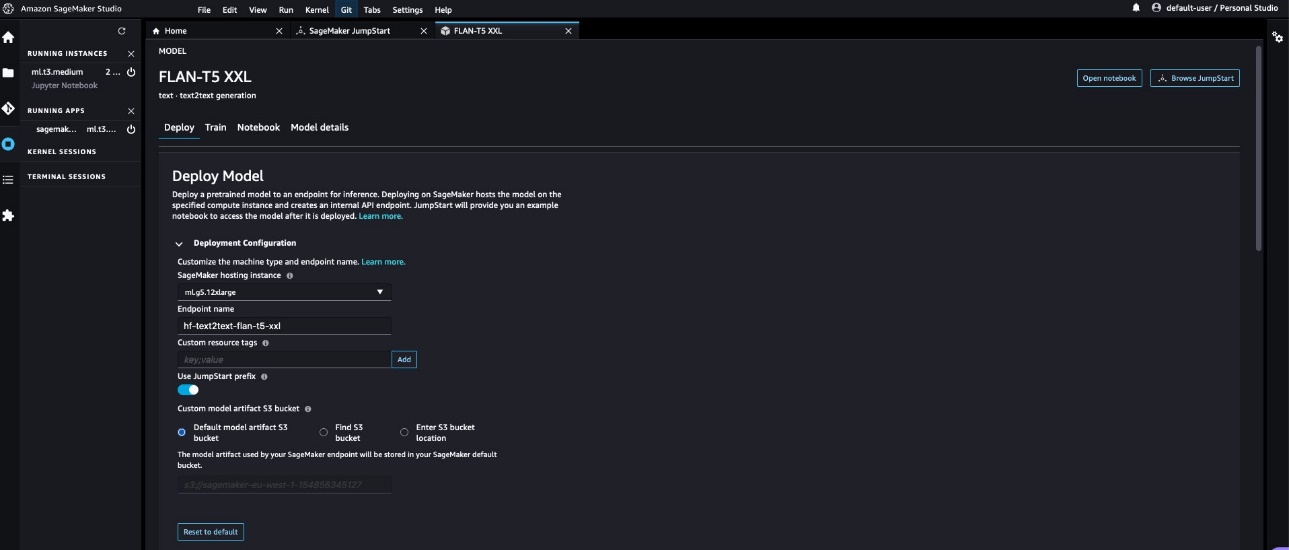

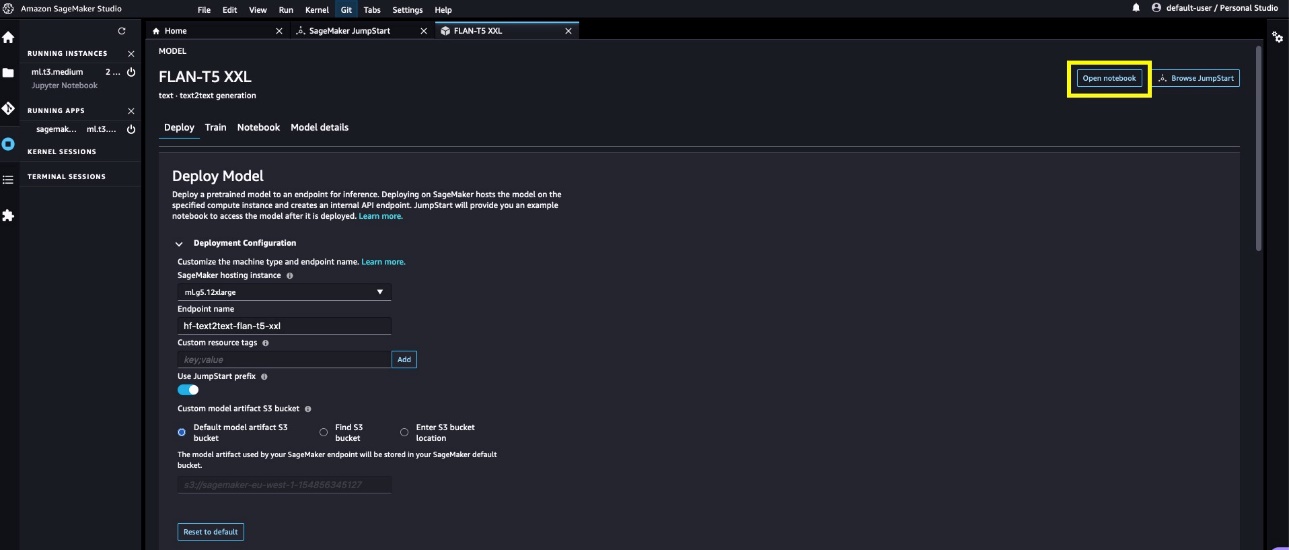

- By default, it opens the Deploy tab. Expand the Deployment Configuration section to change the hosting instance and endpoint name, or add any additional tags. There is also an option to change the S3 bucket location where the model artifact will be stored for creating the endpoint. For this post, we leave everything at its default values. Make a note of the endpoint name to use while invoking the endpoint for making predictions.

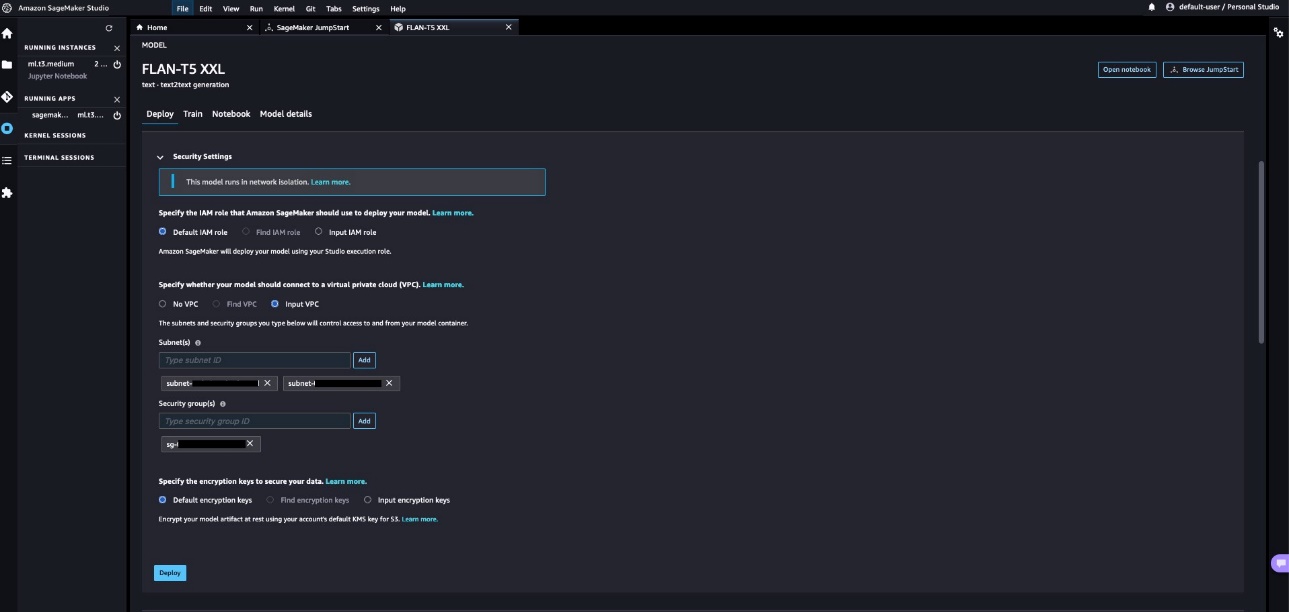

- Expand the Security Settings section, where you can specify the IAM role for creating the endpoint. You can also specify the VPC configurations by providing the subnets and security groups. The subnet IDs and security group IDs can be found from the VPC stack’s Outputs tab on the AWS CloudFormation console. SageMaker JumpStart requires at least two subnets as part of this configuration. The subnets and security groups control access to and from the model container.

NOTE: Irrespective of whether the SageMaker JumpStart model is deployed in the VPC or not, the model always runs in network isolation mode, which isolates the model container so no inbound or outbound network calls can be made to or from the model container. Because we’re using a VPC, SageMaker downloads the model artifact through our specified VPC. Running the model container in network isolation doesn’t prevent your SageMaker endpoint from responding to inference requests. A server process runs alongside the model container and forwards it the inference requests, but the model container doesn’t have network access.

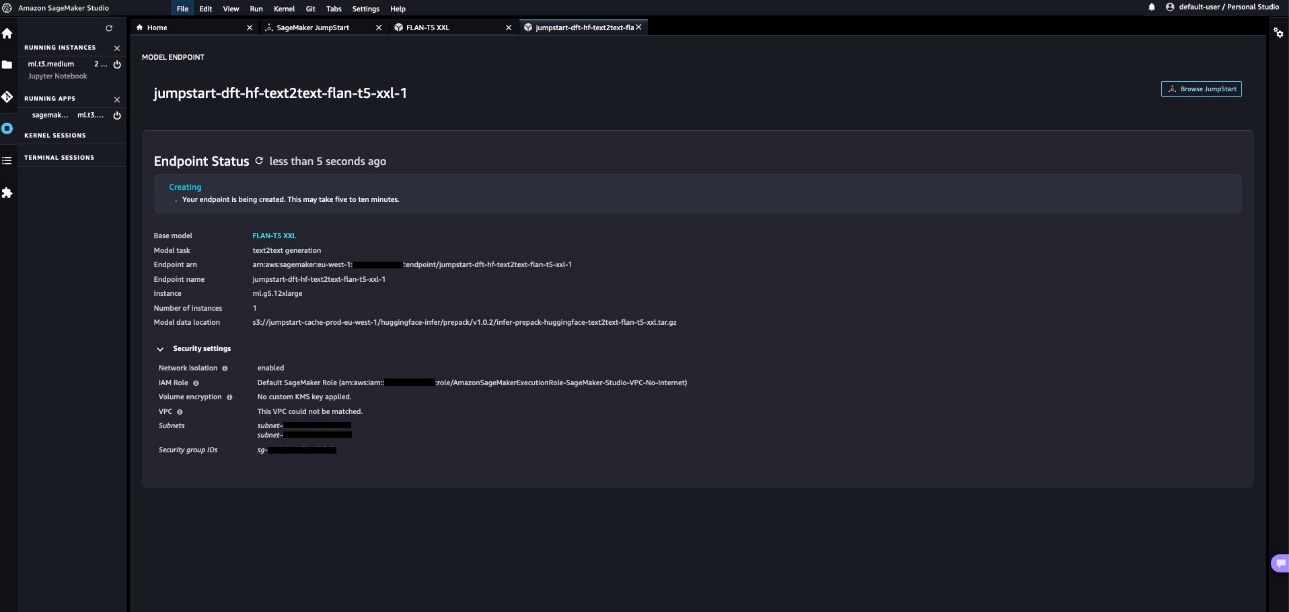

- Choose Deploy to deploy the model. We can see the near-real-time status of the endpoint creation in progress. The endpoint creation may take 5–10 minutes to complete.

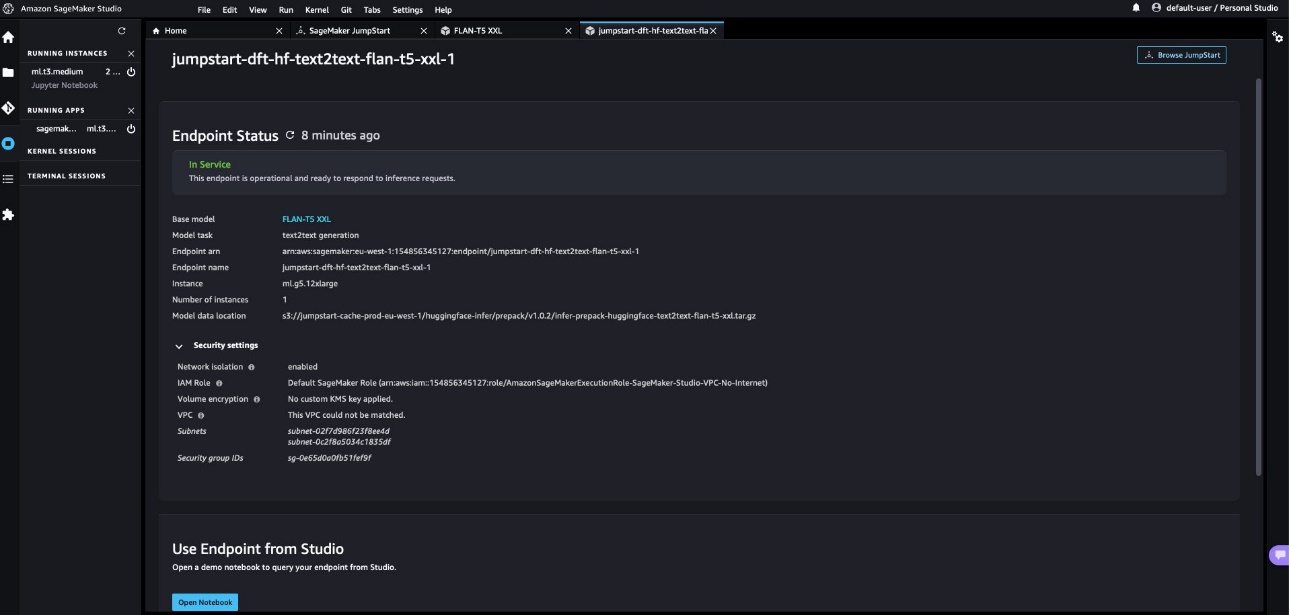

Observe the value of the field Model data location on this page. All the SageMaker JumpStart models are hosted on a SageMaker managed S3 bucket (s3://jumpstart-cache-prod-{region}). Therefore, irrespective of which model is picked from JumpStart, the model gets deployed from the publicly accessible SageMaker JumpStart S3 bucket and the traffic never goes to the public model zoo APIs to download the model. This is why the model endpoint creation started successfully even when we’re creating the endpoint in a VPC that doesn’t have direct internet access.

The model artifact can also be copied to any private model zoo or your own S3 bucket to control and secure model source location further. You can use the following command to download the model locally using the AWS Command Line Interface (AWS CLI):

aws s3 cp s3://jumpstart-cache-prod-eu-west-1/huggingface-infer/prepack/v1.0.2/infer-prepack-huggingface-text2text-flan-t5-xxl.tar.gz .- After a few minutes, the endpoint gets created successfully and shows the status as In Service. Choose

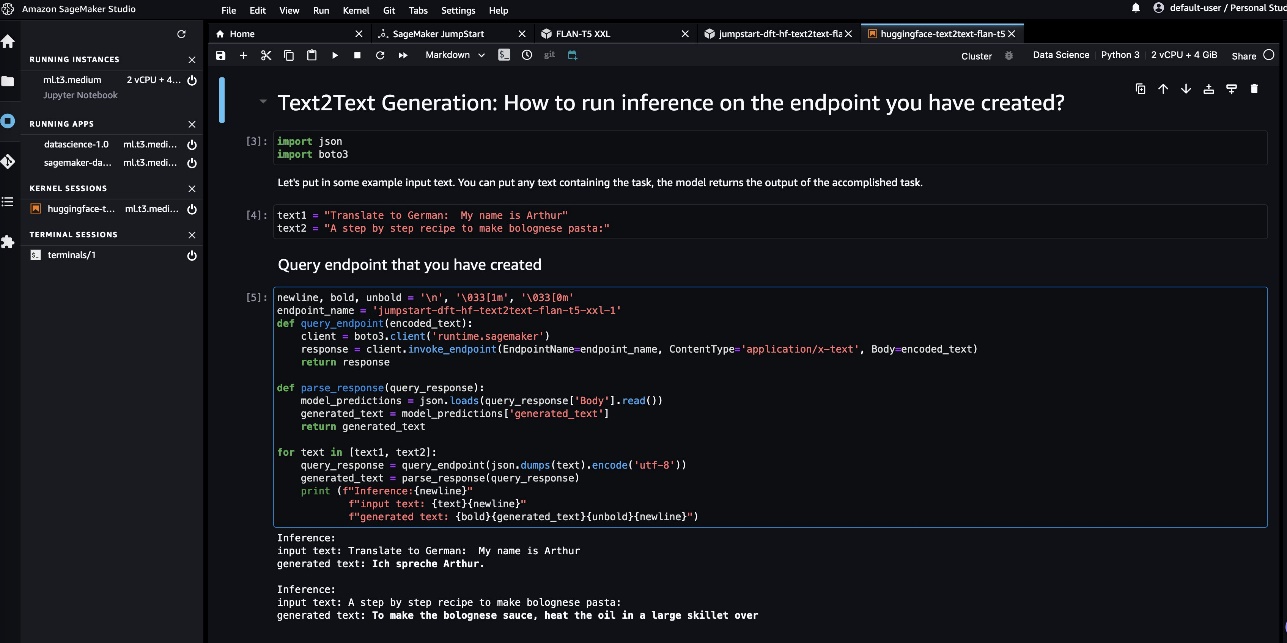

Open Notebookin theUse Endpoint from Studiosection. This is a sample notebook provided as part of the JumpStart experience to quickly test the endpoint.

- In the notebook, choose the image as Data Science 3.0 and the kernel as Python 3. When the kernel is ready, you can run the notebook cells to make predictions on the endpoint. Note that the notebook uses the invoke_endpoint() API from the AWS SDK for Python to make predictions. Alternatively, you can use the SageMaker Python SDK’s predict() method to achieve the same result.
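For reference, a call to invoke_endpoint() from the AWS SDK for Python might look like the following sketch. The payload keys (`text_inputs`, `max_length`) and the response key (`generated_texts`) are assumptions about the request format of the JumpStart Flan-T5 container, and the helper names are hypothetical. Treat the sample notebook as the authoritative source for the exact format.

```python
import json


def build_request(endpoint_name, prompt, max_length=50):
    """Build keyword arguments for the SageMaker runtime invoke_endpoint call."""
    # Assumption: the container accepts a JSON body with these keys.
    payload = {"text_inputs": prompt, "max_length": max_length}
    return {
        "EndpointName": endpoint_name,
        "ContentType": "application/json",
        "Body": json.dumps(payload).encode("utf-8"),
    }


def parse_response(response):
    """Extract the generated texts from an invoke_endpoint response."""
    # The response body is a stream, so it is read before JSON decoding.
    body = json.loads(response["Body"].read())
    # Assumption: the container returns its outputs under this key.
    return body["generated_texts"]


def query(endpoint_name, prompt):
    """Invoke the endpoint (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without it

    runtime = boto3.client("sagemaker-runtime")
    return parse_response(runtime.invoke_endpoint(**build_request(endpoint_name, prompt)))
```

Pass the endpoint name you noted in the Deployment Configuration step as `endpoint_name`. Because the VPC has an interface endpoint for SageMaker, the call is made over AWS PrivateLink rather than the internet.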

This concludes the steps to deploy the Flan-T5 XXL model using JumpStart within a VPC with no internet access.
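As a follow-up to the artifact copy mentioned earlier, copying the model artifact into your own S3 bucket could be scripted roughly as follows. This is a sketch, not part of the original walkthrough. The function names and the destination bucket are hypothetical, and your IAM permissions and VPC endpoint policy are assumed to allow reading from the JumpStart bucket and writing to your own.

```python
def parse_s3_uri(uri):
    """Split an s3://bucket/key URI into (bucket, key)."""
    prefix = "s3://"
    if not uri.startswith(prefix):
        raise ValueError(f"not an S3 URI: {uri}")
    bucket, _, key = uri[len(prefix):].partition("/")
    if not bucket or not key:
        raise ValueError(f"S3 URI must include a bucket and a key: {uri}")
    return bucket, key


def copy_artifact(source_uri, dest_bucket, dest_prefix=""):
    """Copy the artifact into dest_bucket and return its new S3 URI."""
    import boto3  # imported lazily; requires AWS credentials at call time

    src_bucket, src_key = parse_s3_uri(source_uri)
    # Keep the original file name under the chosen destination prefix.
    dest_key = dest_prefix + src_key.rsplit("/", 1)[-1]
    boto3.client("s3").copy({"Bucket": src_bucket, "Key": src_key}, dest_bucket, dest_key)
    return f"s3://{dest_bucket}/{dest_key}"
```

The value shown in the Model data location field can be passed as `source_uri`.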

Advantages of deploying SageMaker JumpStart models in VPC mode

The following are some of the advantages of deploying SageMaker JumpStart models in VPC mode:

- Because SageMaker JumpStart doesn’t download the models from a public model zoo, it can be used in fully locked-down environments as well where there is no internet access

- Because the network access can be limited and scoped down for SageMaker JumpStart models, this helps teams improve the security posture of the environment

- Due to the VPC boundaries, access to the endpoint can also be limited via subnets and security groups, which adds an extra layer of security

Alternate ways to customize deployment of foundation models via SageMaker JumpStart

In this section, we share some alternate ways to deploy the model.

Use SageMaker JumpStart APIs from your preferred IDE

Models provided by SageMaker JumpStart don’t require you to access Studio. You can deploy them to SageMaker endpoints from any IDE, thanks to the JumpStart APIs. You could skip the Studio setup step discussed earlier in this post and use the JumpStart APIs to deploy the model. These APIs provide arguments where VPC configurations can be supplied as well. The APIs are part of the SageMaker Python SDK itself. For more information, refer to Pre-trained models.
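A minimal sketch of such a deployment is shown below. It assumes a recent version of the SageMaker Python SDK that includes the `JumpStartModel` class, and it assumes the model ID `huggingface-text2text-flan-t5-xxl`. The helper names, role ARN, subnet IDs, and security group IDs are placeholders for illustration.

```python
def build_vpc_config(subnet_ids, security_group_ids):
    """Build the VPC configuration dictionary used by the SageMaker SDK."""
    # The post notes that JumpStart requires at least two subnets.
    if len(subnet_ids) < 2:
        raise ValueError("SageMaker JumpStart requires at least two subnets")
    if not security_group_ids:
        raise ValueError("at least one security group is required")
    return {
        "Subnets": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
    }


def deploy_flan_t5_xxl(role_arn, subnet_ids, security_group_ids):
    """Deploy the model into the given VPC and return the predictor."""
    # Imported lazily; requires the sagemaker package and AWS credentials.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(
        # Assumption: this is the JumpStart model ID for Flan-T5 XXL.
        model_id="huggingface-text2text-flan-t5-xxl",
        role=role_arn,
        vpc_config=build_vpc_config(subnet_ids, security_group_ids),
    )
    return model.deploy()
```

The subnet and security group IDs are the same values you would otherwise enter in the Security Settings section in Studio, available from the VPC stack's Outputs tab.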

Use notebooks provided by SageMaker JumpStart from SageMaker Studio

SageMaker JumpStart also provides notebooks to deploy the model directly. On the model detail page, choose Open notebook to open a sample notebook containing the code to deploy the endpoint. The notebook uses SageMaker JumpStart Industry APIs that allow you to list and filter the models, retrieve the artifacts, and deploy and query the endpoints. You can also edit the notebook code per your use case-specific requirements.
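As a rough illustration of listing and filtering, the SageMaker Python SDK exposes a `list_jumpstart_models` utility. The sketch below is not the notebook's code. The filter expression and the helper names are assumptions for illustration, and the notebook itself may use different calls.

```python
def matching_ids(model_ids, keyword):
    """Return the model IDs that contain the keyword, preserving order."""
    return [model_id for model_id in model_ids if keyword in model_id]


def list_flan_t5_models():
    """List JumpStart text2text models and keep the Flan-T5 variants."""
    # Imported lazily; requires the sagemaker package and AWS credentials.
    from sagemaker.jumpstart.notebook_utils import list_jumpstart_models

    # Assumption: "text2text" is the task name used for this model family.
    text2text_models = list_jumpstart_models(filter="task == text2text")
    return matching_ids(text2text_models, "flan-t5")
```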

Clean up resources

Check out the CLEANUP.md file to find detailed steps to delete the Studio, VPC, and other resources created as part of this post.

Troubleshooting

If you encounter any issues in creating the CloudFormation stacks, refer to Troubleshooting CloudFormation.

Conclusion

Generative AI powered by large language models is changing how people acquire and apply insights from information. However, organizations operating in heavily regulated spaces are required to use the generative AI capabilities in a way that allows them to innovate faster but also simplifies the access patterns to such capabilities.

We encourage you to try out the approach provided in this post to embed generative AI capabilities in your existing environment while still keeping it inside your own VPC with no internet access. For further reading on SageMaker JumpStart foundation models, check out the following:

About the authors

Vikesh Pandey is a Machine Learning Specialist Solutions Architect at AWS, helping customers from financial industries design and build solutions on generative AI and ML. Outside of work, Vikesh enjoys trying out different cuisines and playing outdoor sports.

Mehran Nikoo is a Senior Solutions Architect at AWS, working with Digital Native businesses in the UK and helping them achieve their goals. Passionate about applying his software engineering experience to machine learning, he specializes in end-to-end machine learning and MLOps practices.

- Source: https://aws.amazon.com/blogs/machine-learning/use-generative-ai-foundation-models-in-vpc-mode-with-no-internet-connectivity-using-amazon-sagemaker-jumpstart/
