On August 9, 2022, we announced the general availability of cross-account sharing of Amazon SageMaker Pipelines entities. You can now use cross-account support for Amazon SageMaker Pipelines to share pipeline entities across AWS accounts and access shared pipelines directly through Amazon SageMaker API calls.

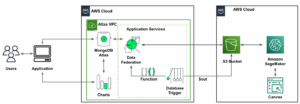

Customers are increasingly adopting multi-account architectures for deploying and managing machine learning (ML) workflows with SageMaker Pipelines. This involves building workflows in development or experimentation (dev) accounts, deploying and testing them in a testing or pre-production (test) account, and finally promoting them to production (prod) accounts to integrate with other business processes. You can benefit from cross-account sharing of SageMaker pipelines in the following use cases:

- When data scientists build ML workflows in a dev account, those workflows are then deployed by an ML engineer as a SageMaker pipeline into a dedicated test account. To further monitor those workflows, data scientists now require cross-account read-only permission to the deployed pipeline in the test account.

- ML engineers, ML admins, and compliance teams, who manage deployment and operations of those ML workflows from a shared services account, also require visibility into the deployed pipeline in the test account. They might also require additional permissions for starting, stopping, and retrying those ML workflows.

In this post, we present an example multi-account architecture for developing and deploying ML workflows with SageMaker Pipelines.

Solution overview

A multi-account strategy helps you achieve data, project, and team isolation while supporting software development lifecycle steps. Cross-account pipeline sharing supports a multi-account strategy, removing the overhead of logging in and out of multiple accounts and improving ML testing and deployment workflows by sharing resources directly across multiple accounts.

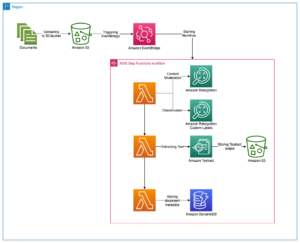

In this example, we have a data science team that uses a dedicated dev account for the initial development of the SageMaker pipeline. This pipeline is then handed over to an ML engineer, who creates a continuous integration and continuous delivery (CI/CD) pipeline in their shared services account to deploy this pipeline into a test account. To still be able to monitor and control the deployed pipeline from their respective dev and shared services accounts, resource shares are set up with AWS Resource Access Manager (AWS RAM) in the test and dev accounts. With this setup, the ML engineer and the data scientist can now monitor and control the pipelines in the dev and test accounts from their respective accounts, as shown in the following figure.

In the workflow, the data scientist and ML engineer perform the following steps:

- The data scientist (DS) builds a model pipeline in the dev account.

- The ML engineer (MLE) productionizes the model pipeline and creates a pipeline (for this post, we call it `sagemaker-pipeline`).

- The `sagemaker-pipeline` code is committed to an AWS CodeCommit repository in the shared services account.

- The data scientist creates an AWS RAM resource share for `sagemaker-pipeline` and shares it with the shared services account, which accepts the resource share.

- From the shared services account, ML engineers are now able to describe, monitor, and administer the pipeline runs in the dev account using SageMaker API calls.

- A CI/CD pipeline triggered in the shared services account builds and deploys the code to the test account using AWS CodePipeline.

- The CI/CD pipeline creates and runs `sagemaker-pipeline` in the test account.

- After running `sagemaker-pipeline` in the test account, the CI/CD pipeline creates a resource share for `sagemaker-pipeline` in the test account.

- A resource share from the test `sagemaker-pipeline` with read-only permissions is created with the dev account, which accepts the resource share.

- The data scientist is now able to describe and monitor the test pipeline run status using SageMaker API calls from the dev account.

- A resource share from the test `sagemaker-pipeline` with extended permissions is created with the shared services account, which accepts the resource share.

- The ML engineer is now able to describe, monitor, and administer the test pipeline run using SageMaker API calls from the shared services account.

In the following sections, we go into more detail and provide a demonstration on how to set up cross-account sharing for SageMaker pipelines.

How to create and share SageMaker pipelines across accounts

In this section, we walk through the necessary steps to create and share pipelines across accounts using AWS RAM and the SageMaker API.

Set up the environment

First, we need to set up a multi-account environment to demonstrate cross-account sharing of SageMaker pipelines:

- Set up two AWS accounts (dev and test). You can set this up as member accounts of an organization or as independent accounts.

- If you’re setting up your accounts as members of an organization, you can enable resource sharing with your organization. With this setting, when you share resources in your organization, AWS RAM doesn’t send invitations to principals. Principals in your organization gain access to shared resources without exchanging invitations.

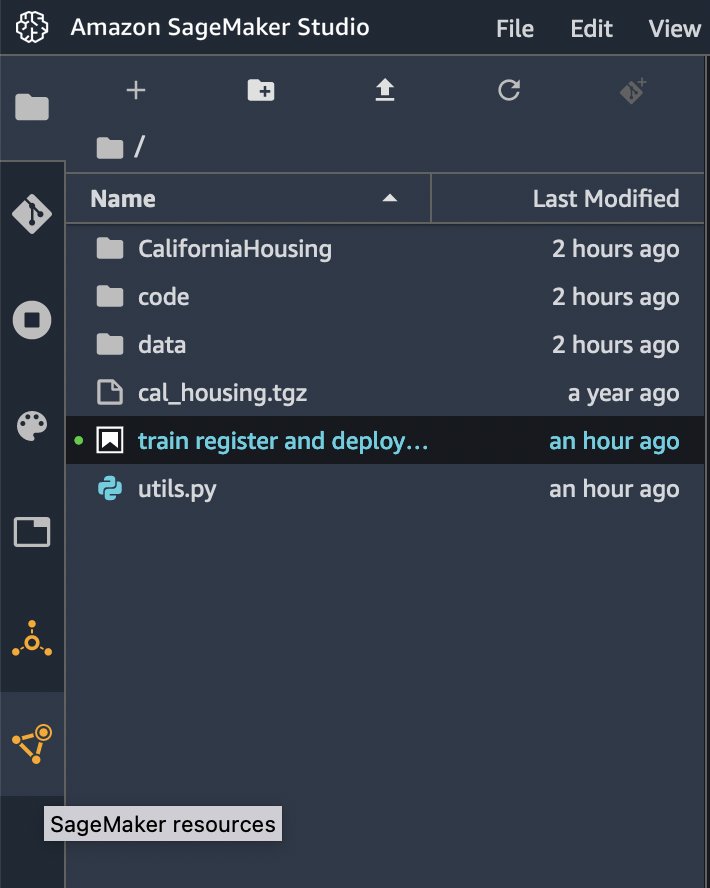

- In the test account, launch Amazon SageMaker Studio and run the notebook train-register-deploy-pipeline-model. This creates an example pipeline in your test account. To simplify the demonstration, we use SageMaker Studio in the test account to launch the pipeline. For real-life projects, you should use Studio only in the dev account and launch the SageMaker pipeline in the test account using your CI/CD tooling.

Follow the instructions in the next section to share this pipeline with the dev account.

Set up a pipeline resource share

To share your pipeline with the dev account, complete the following steps:

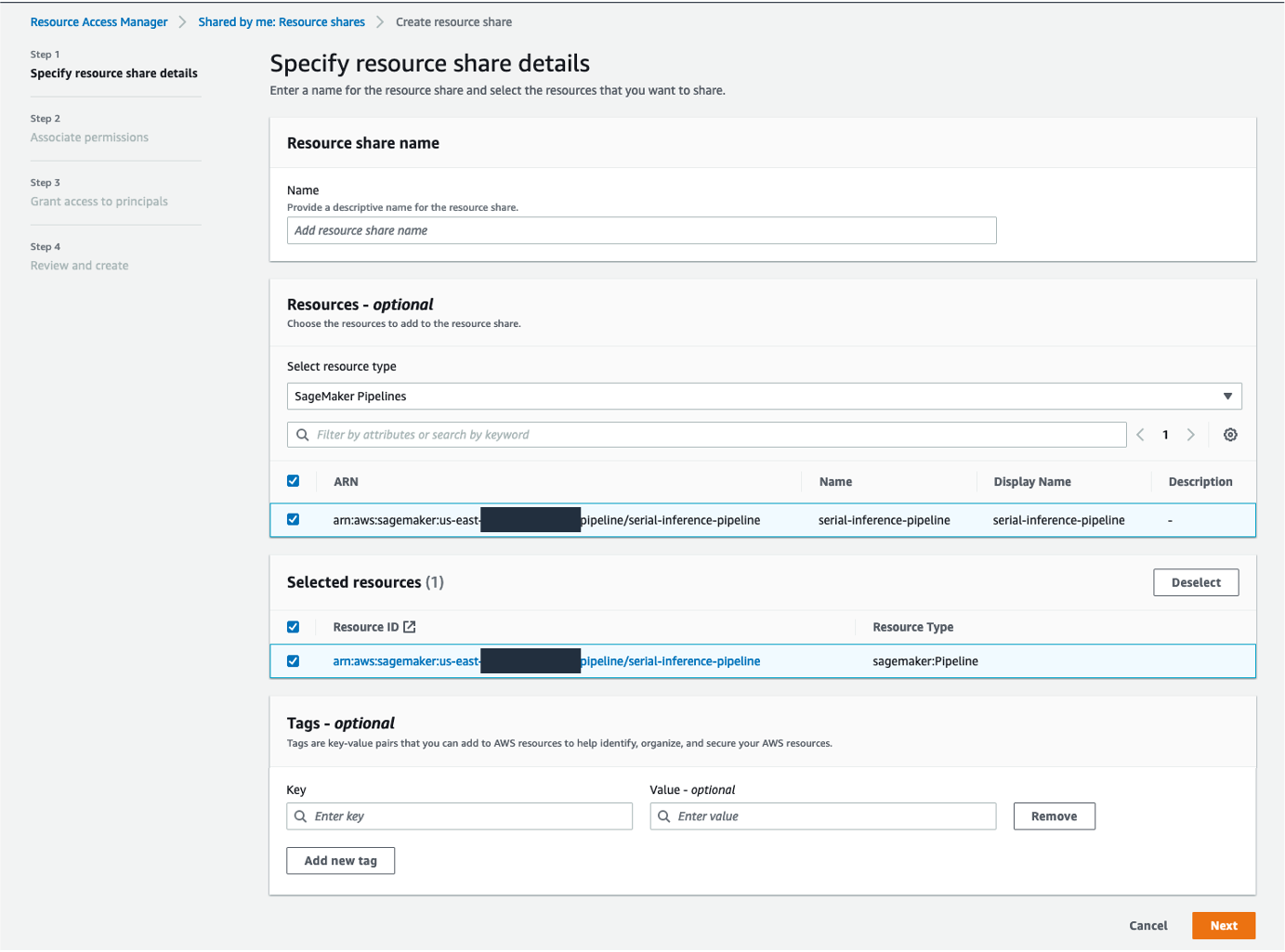

- On the AWS RAM console, choose Create resource share.

- For Select resource type, choose SageMaker Pipelines.

- Select the pipeline you created in the previous step.

- Choose Next.

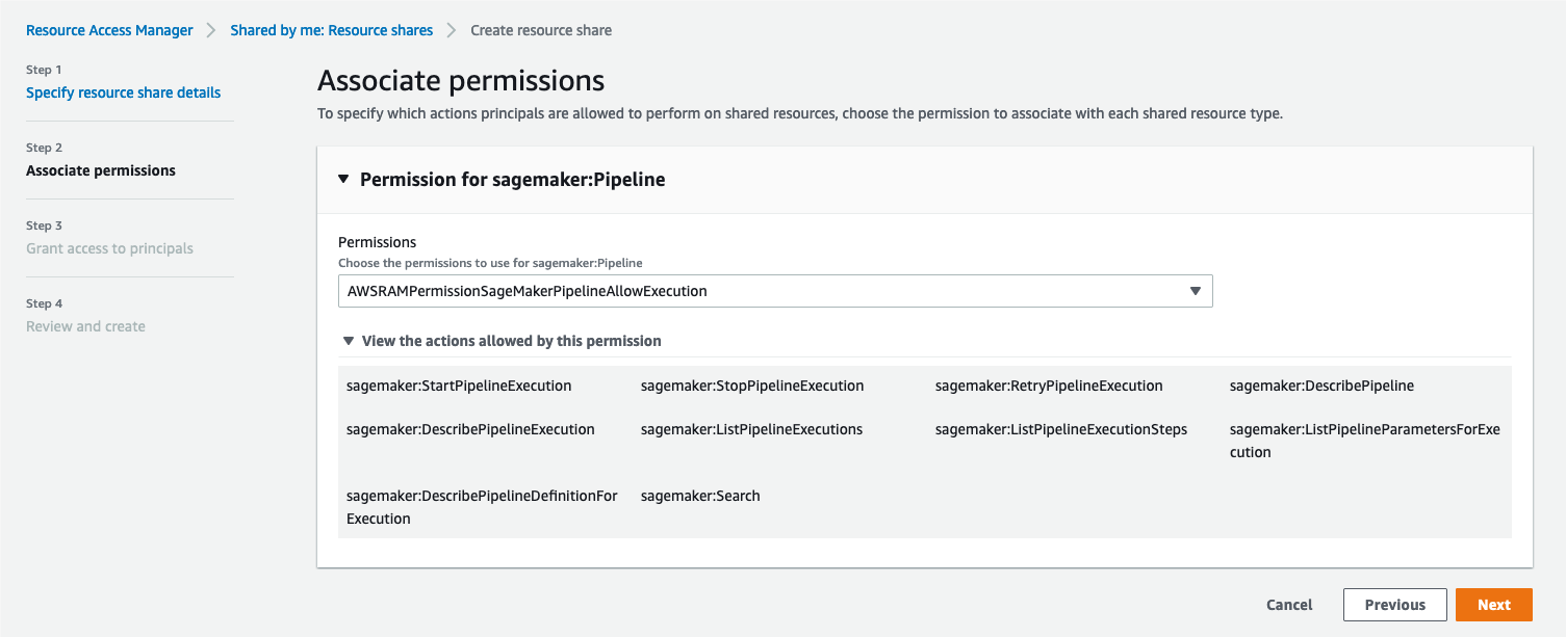

- For Permissions, choose your associated permissions.

- Choose Next.

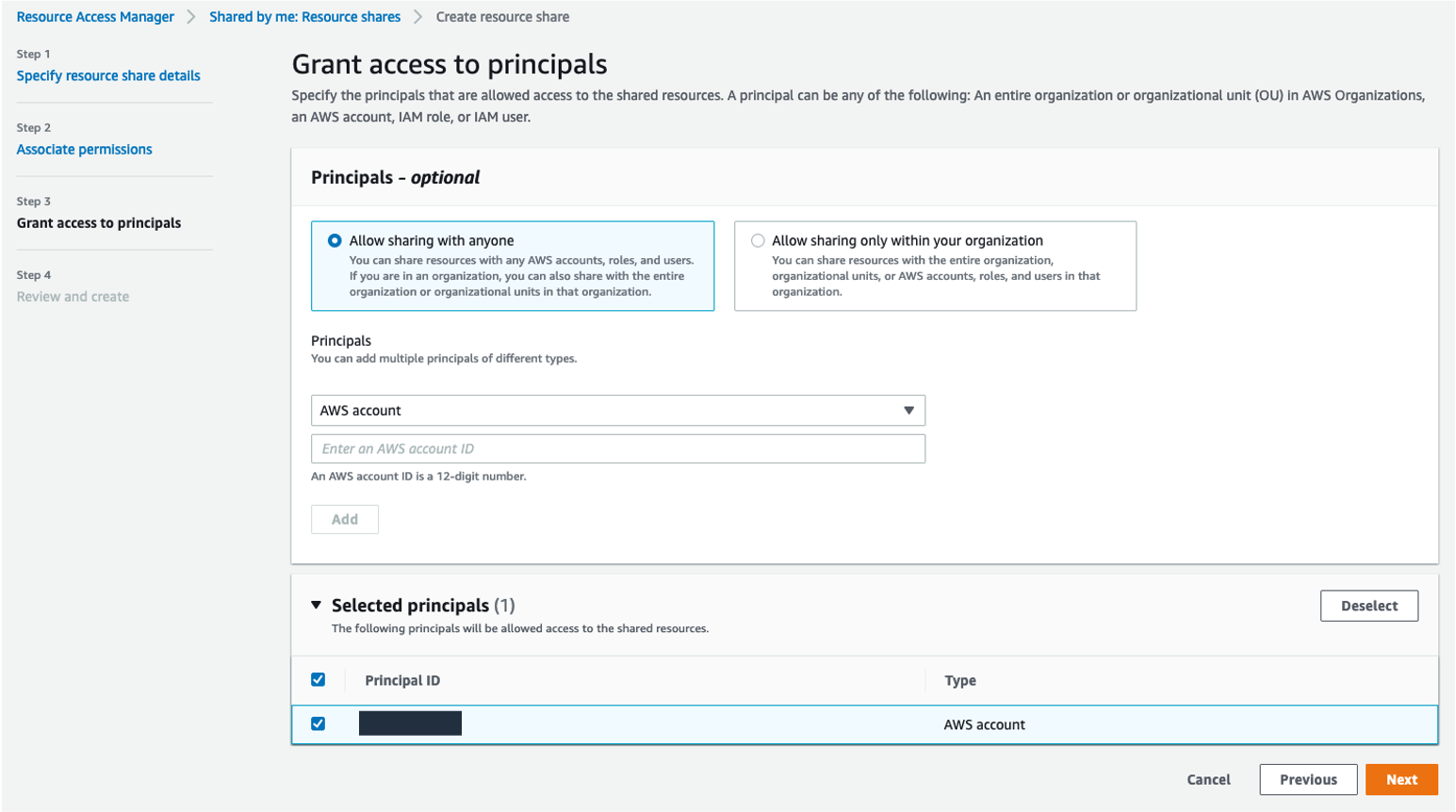

Next, you decide how you want to grant access to principals.

- If you need to share the pipeline only within your organization accounts, select Allow sharing only within your organization; otherwise select Allow sharing with anyone.

- For Principals, choose your principal type (you can use an AWS account, organization, or organizational unit, based on your sharing requirement). For this post, we share with anyone at the AWS account level.

- Select your principal ID.

- Choose Next.

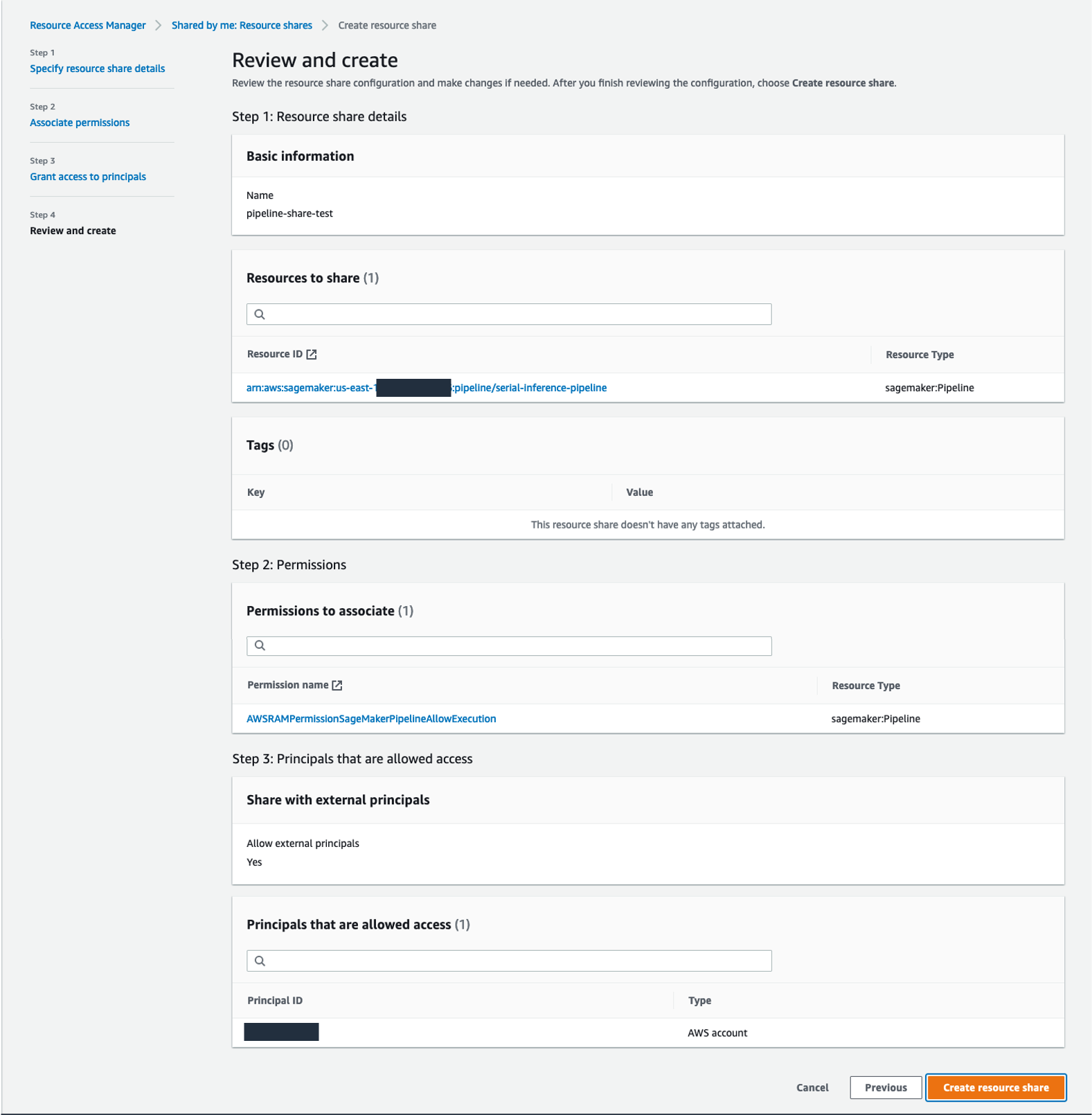

- On the Review and create page, verify your information is correct and choose Create resource share.

- Navigate to your destination account (for this post, your dev account).

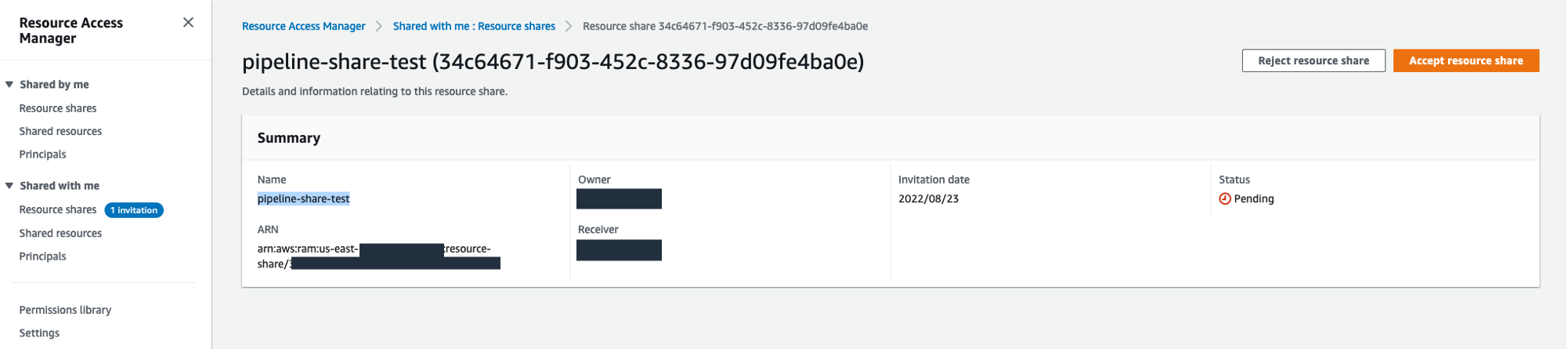

- On the AWS RAM console, under Shared with me in the navigation pane, choose Resource shares.

- Choose your resource share and choose Accept resource share.
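The console steps above can also be scripted. The following is a minimal sketch, not a definitive implementation: it assumes a client created with `boto3.client("ram")` is passed in, and the helper names (`build_share_request`, `share_pipeline`, `accept_pending_invitations`) are hypothetical. The two managed permission names are the ones this post describes in the next section; the `arn:aws:ram::aws:permission/` prefix is an assumption about how AWS managed permissions are addressed, so verify it in your account before relying on it.

```python
# Assumption: prefix used to address AWS managed RAM permissions.
PERMISSION_ARN_PREFIX = "arn:aws:ram::aws:permission/"
READ_ONLY = "AWSRAMDefaultPermissionSageMakerPipeline"
ALLOW_EXECUTION = "AWSRAMPermissionSageMakerPipelineAllowExecution"


def build_share_request(share_name, pipeline_arn, principal_account_id,
                        allow_execution=False):
    """Build the keyword arguments for RAM's CreateResourceShare call."""
    permission = ALLOW_EXECUTION if allow_execution else READ_ONLY
    return {
        "name": share_name,
        "resourceArns": [pipeline_arn],
        "principals": [principal_account_id],
        "permissionArns": [PERMISSION_ARN_PREFIX + permission],
        # True corresponds to "Allow sharing with anyone" in the console.
        "allowExternalPrincipals": True,
    }


def share_pipeline(ram_client, share_name, pipeline_arn,
                   principal_account_id, allow_execution=False):
    """Create the share in the owning account and return its ARN."""
    request = build_share_request(share_name, pipeline_arn,
                                  principal_account_id, allow_execution)
    response = ram_client.create_resource_share(**request)
    return response["resourceShare"]["resourceShareArn"]


def accept_pending_invitations(ram_client):
    """Accept pending invitations in the destination account.

    Only the first page of invitations is inspected in this sketch.
    """
    accepted = []
    response = ram_client.get_resource_share_invitations()
    for invitation in response["resourceShareInvitations"]:
        if invitation["status"] == "PENDING":
            arn = invitation["resourceShareInvitationArn"]
            ram_client.accept_resource_share_invitation(
                resourceShareInvitationArn=arn)
            accepted.append(arn)
    return accepted
```

You would call `share_pipeline` with credentials for the account that owns the pipeline (the test account in this post) and `accept_pending_invitations` with credentials for the destination account. Accounts in the same organization with resource sharing enabled do not receive invitations, so the second helper returns an empty list in that case.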

Resource sharing permissions

When creating your resource share, you can choose from one of two supported permission policies to associate with the SageMaker pipeline resource type. Both policies grant access to any selected pipeline and all of its runs.

AWSRAMDefaultPermissionSageMakerPipeline policy allows the following read-only actions:

AWSRAMPermissionSageMakerPipelineAllowExecution policy includes all of the read-only permissions from the default policy, and also allows shared accounts to start, stop, and retry pipeline runs.

The extended pipeline run permission policy allows the following actions:

Access shared pipeline entities through direct API calls

In this section, we walk through how you can use various SageMaker Pipeline API calls to gain visibility into pipelines running in remote accounts that have been shared with you. For testing the APIs against the pipeline running in the test account from the dev account, log in to the dev account and use AWS CloudShell.

For the cross-account SageMaker Pipeline API calls, you always need to use your pipeline ARN as the pipeline identification. That also includes the commands requiring the pipeline name, where you need to use your pipeline ARN as the pipeline name.

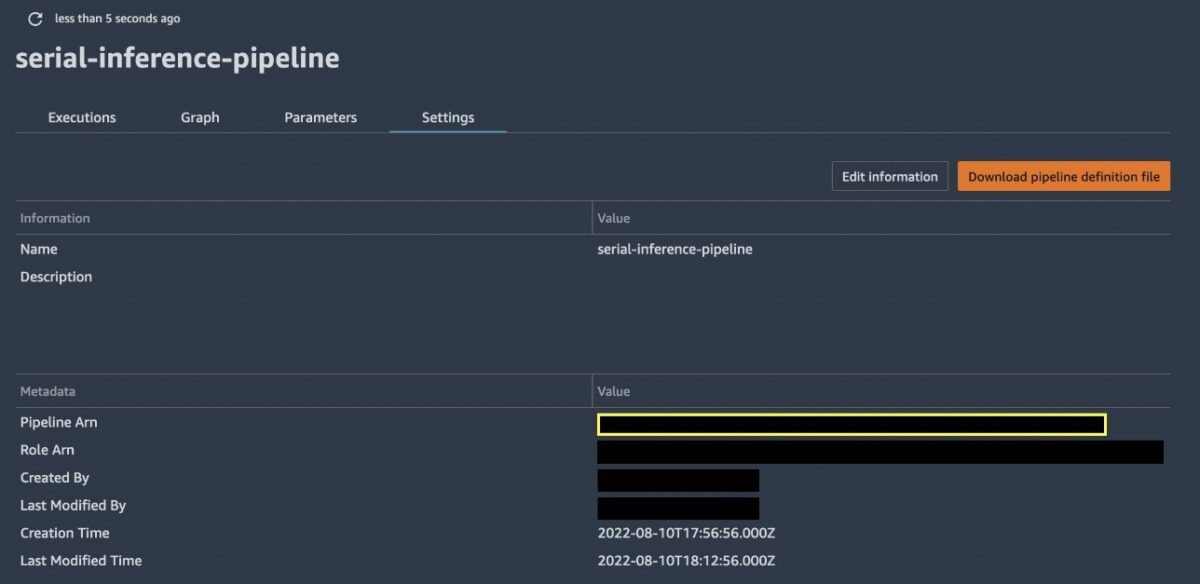

To get your pipeline ARN, in your test account, navigate to your pipeline details in Studio via SageMaker Resources.

Choose Pipelines on your resources list.

Choose your pipeline and go to your pipeline Settings tab. You can find the pipeline ARN with your Metadata information. For this example, your ARN is defined as "arn:aws:sagemaker:us-east-1:<account-id>:pipeline/serial-inference-pipeline".
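Because cross-account calls only work with the full ARN, it can help to check the value before using it. The following sketch is illustrative only: `parse_pipeline_arn` is a hypothetical helper, and the account ID in the usage note is a placeholder.

```python
def parse_pipeline_arn(arn):
    """Split a SageMaker pipeline ARN into its parts.

    Raises ValueError when given anything else, for example a bare
    pipeline name, which is not sufficient for cross-account calls.
    """
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "sagemaker":
        raise ValueError(f"not a SageMaker ARN: {arn!r}")
    resource_type, _, name = parts[5].partition("/")
    if resource_type != "pipeline" or not name:
        raise ValueError(f"not a pipeline ARN: {arn!r}")
    return {
        "partition": parts[1],
        "region": parts[3],
        "account_id": parts[4],
        "pipeline_name": name,
    }
```

For the example ARN above with the placeholder account ID `123456789012`, the helper reports the Region `us-east-1` and the pipeline name `serial-inference-pipeline`. The `account_id` field tells you which account owns the shared pipeline.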

ListPipelineExecutions

This API call lists the runs of your pipeline. Run the following command, replacing $SHARED_PIPELINE_ARN with your pipeline ARN, from CloudShell or using the AWS Command Line Interface (AWS CLI) configured with the appropriate AWS Identity and Access Management (IAM) role:

The response lists all the runs of your pipeline with their PipelineExecutionArn, StartTime, PipelineExecutionStatus, and PipelineExecutionDisplayName:
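In Python, the same call can be sketched as follows. This is a minimal sketch under assumptions: `sm_client` is expected to be a client created with `boto3.client("sagemaker")` in the account the pipeline was shared with, and `list_shared_executions` is a hypothetical helper name. Note that the pipeline ARN is passed as `PipelineName`.

```python
def list_shared_executions(sm_client, pipeline_arn):
    """Return all run summaries of a shared pipeline.

    Follows NextToken so that pipelines with many runs are listed in full.
    """
    summaries = []
    kwargs = {"PipelineName": pipeline_arn}  # ARN, not the bare name
    while True:
        page = sm_client.list_pipeline_executions(**kwargs)
        summaries.extend(page.get("PipelineExecutionSummaries", []))
        token = page.get("NextToken")
        if not token:
            return summaries
        kwargs["NextToken"] = token
```

Each returned summary is a dictionary that carries the fields named above, such as `PipelineExecutionArn` and `PipelineExecutionStatus`.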

DescribePipeline

This API call describes the detail of your pipeline. Run the following command, replacing $SHARED_PIPELINE_ARN with your pipeline ARN:

The response provides the metadata of your pipeline, as well as information about creation and modifications of it:
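A Python version of this call might look like the following sketch. It assumes `sm_client` is a `boto3.client("sagemaker")` instance; `describe_shared_pipeline` is a hypothetical helper that keeps only a handful of the response fields for display.

```python
def describe_shared_pipeline(sm_client, pipeline_arn):
    """Return selected metadata of a shared pipeline."""
    response = sm_client.describe_pipeline(PipelineName=pipeline_arn)
    wanted = ("PipelineArn", "PipelineName", "PipelineStatus",
              "CreationTime", "LastModifiedTime")
    # Missing keys become None instead of raising KeyError.
    return {key: response.get(key) for key in wanted}
```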

DescribePipelineExecution

This API call describes the details of your pipeline run. Run the following command, replacing $SHARED_PIPELINE_ARN with the ARN of the pipeline run you want to inspect (the PipelineExecutionArn returned by ListPipelineExecutions):

The response provides details on your pipeline run, including the PipelineExecutionStatus, ExperimentName, and TrialName:
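As a rough Python equivalent, the sketch below assumes `sm_client` is a `boto3.client("sagemaker")` instance and uses the hypothetical helper name `summarize_execution`. The call takes the ARN of the run rather than of the pipeline, and the experiment and trial names are read from the nested `PipelineExperimentConfig` entry of the response.

```python
def summarize_execution(sm_client, execution_arn):
    """Return the status and experiment details of one pipeline run."""
    response = sm_client.describe_pipeline_execution(
        PipelineExecutionArn=execution_arn)
    # The experiment config may be absent; fall back to an empty dict.
    experiment = response.get("PipelineExperimentConfig") or {}
    return {
        "status": response.get("PipelineExecutionStatus"),
        "experiment_name": experiment.get("ExperimentName"),
        "trial_name": experiment.get("TrialName"),
    }
```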

StartPipelineExecution

This API call starts a pipeline run. Run the following command, replacing $SHARED_PIPELINE_ARN with your pipeline ARN and $CLIENT_REQUEST_TOKEN with a unique, case-sensitive identifier that you generate for this run. The identifier should be 32–128 characters long. For instance, you can generate a string using the AWS CLI kms generate-random command.

As a response, this API call returns the PipelineExecutionArn of the started run:
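In Python, starting a run could be sketched as follows. The sketch assumes `sm_client` is a `boto3.client("sagemaker")` instance and that the resource share uses the extended permission policy. The helper name is hypothetical, and the token is generated with `uuid4` instead of the kms generate-random command mentioned above, which is a substitution made here for brevity.

```python
import uuid


def start_shared_pipeline(sm_client, pipeline_arn, token=None):
    """Start a run of a shared pipeline and return the run's ARN."""
    # uuid4().hex yields 32 hexadecimal characters, the minimum length.
    token = token or uuid.uuid4().hex
    if not 32 <= len(token) <= 128:
        raise ValueError("client request token must be 32 to 128 characters")
    response = sm_client.start_pipeline_execution(
        PipelineName=pipeline_arn,  # ARN, not the bare name
        ClientRequestToken=token,
    )
    return response["PipelineExecutionArn"]
```

With a read-only share, this call is expected to be rejected, because only the extended policy allows shared accounts to start runs.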

StopPipelineExecution

This API call stops a pipeline run. Run the following command, replacing $PIPELINE_EXECUTION_ARN with the pipeline run ARN of your running pipeline and $CLIENT_REQUEST_TOKEN with a unique, case-sensitive identifier that you generate for this run. The identifier should be 32–128 characters long. For instance, you can generate a string using the AWS CLI kms generate-random command.

As a response, this API call returns the PipelineExecutionArn of the stopped pipeline:
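A Python sketch of stopping a run is shown below. It assumes `sm_client` is a `boto3.client("sagemaker")` instance and a share with the extended permission policy. `stop_if_running` is a hypothetical helper, and checking the status first is a design choice of this sketch, not something the API requires.

```python
import uuid


def stop_if_running(sm_client, execution_arn):
    """Stop a shared pipeline run if it is still executing.

    Returns the ARN of the stopped run, or None when the run was not
    in the Executing state and no stop request was sent.
    """
    description = sm_client.describe_pipeline_execution(
        PipelineExecutionArn=execution_arn)
    if description.get("PipelineExecutionStatus") != "Executing":
        return None
    response = sm_client.stop_pipeline_execution(
        PipelineExecutionArn=execution_arn,
        ClientRequestToken=uuid.uuid4().hex,  # 32 characters
    )
    return response["PipelineExecutionArn"]
```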

Conclusion

Cross-account sharing of SageMaker pipelines allows you to securely share pipeline entities across AWS accounts and access shared pipelines through direct API calls, without having to log in and out of multiple accounts.

In this post, we dove into the functionality to show how you can share pipelines across accounts and access them via SageMaker API calls.

As a next step, you can use this feature for your next ML project.

Resources

To get started with SageMaker Pipelines and sharing pipelines across accounts, refer to the following resources:

About the authors

Ram Vittal is an ML Specialist Solutions Architect at AWS. He has over 20 years of experience architecting and building distributed, hybrid, and cloud applications. He is passionate about building secure and scalable AI/ML and big data solutions to help enterprise customers with their cloud adoption and optimization journey to improve their business outcomes. In his spare time, he enjoys tennis, photography, and action movies.


Maira Ladeira Tanke is an ML Specialist Solutions Architect at AWS. With a background in data science, she has 9 years of experience architecting and building ML applications with customers across industries. As a technical lead, she helps customers accelerate their achievement of business value through emerging technologies and innovative solutions. In her free time, Maira enjoys traveling and spending time with her family someplace warm.


Gabriel Zylka is a Professional Services Consultant at AWS. He works closely with customers to accelerate their cloud adoption journey. Specialized in the MLOps domain, he focuses on productionizing machine learning workloads by automating end-to-end machine learning lifecycles and helping achieve desired business outcomes. In his spare time, he enjoys traveling and hiking in the Bavarian Alps.

