With personalized content more likely to drive customer engagement, businesses continuously seek to provide tailored content based on their customers' profiles and behavior. Recommendation systems in particular seek to predict the preference an end-user would give to an item. Some common use cases include product recommendations on online retail stores, personalizing newsletters, generating music playlist recommendations, or even discovering similar content on online media services.

However, it can be challenging to create an effective recommendation system due to complexities in model training, algorithm selection, and platform management. Amazon Personalize enables developers to improve customer engagement through personalized product and content recommendations with no machine learning (ML) expertise required. Developers can start to engage customers right away by using captured user behavior data. Behind the scenes, Amazon Personalize examines this data, identifies what is meaningful, selects the right algorithms, trains and optimizes a personalization model that is customized for your data, and provides recommendations via an API endpoint.

Although real-time recommendations can help boost engagement and satisfaction, they aren't always required. Generating recommendations in batch on a schedule can be a more cost-effective and manageable option.

This post shows you how to use AWS services to not only create recommendations but also operationalize a batch recommendation pipeline. We walk through the end-to-end solution without a single line of code. We discuss two topics in detail:

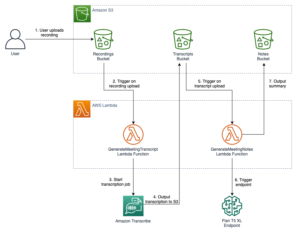

Solution overview

In this solution, we use the MovieLens dataset. This dataset includes 86,000 ratings of movies from 2,113 users. We attempt to use this data to generate recommendations for each of these users.

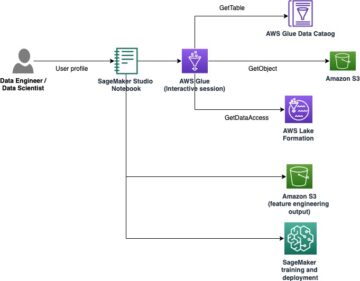

Data preparation is essential to get customer behavior data into a format that is ready for Amazon Personalize. The architecture described in this post uses AWS Glue, a serverless data integration service, to transform raw data into a format that Amazon Personalize can consume. The solution uses Amazon Personalize to create batch recommendations for all users by using batch inference. We then use an AWS Step Functions workflow that calls the Amazon Personalize APIs, so that the whole process can be run in an automated and repeatable manner.

The following diagram demonstrates this solution.

We will build this solution with the following steps:

- Build a data transformation job to transform our raw data using AWS Glue.

- Build an Amazon Personalize solution with the transformed dataset.

- Build a Step Functions workflow to orchestrate the generation of batch inferences.

Prerequisites

You need the following for this walkthrough:

Build a data transformation job to transform raw data with AWS Glue

With Amazon Personalize, input data needs to have a specific schema and file format. Data from interactions between users and items must be in CSV format with specific columns, whereas the list of users for which you want to generate recommendations must be in JSON format. In this section, we use AWS Glue Studio to transform raw input data into the required structures and format for Amazon Personalize.

AWS Glue Studio provides a graphical interface that is designed for easy creation and running of extract, transform, and load (ETL) jobs. You can visually create data transformation workloads through simple drag-and-drop operations.

We first prepare our source data in Amazon Simple Storage Service (Amazon S3), then we transform the data without code.

- On the Amazon S3 console, create an S3 bucket with three folders: raw, transformed, and curated.

- Download the MovieLens dataset and upload the uncompressed file named user_ratingmovies-timestamp.dat to your bucket under the raw folder.

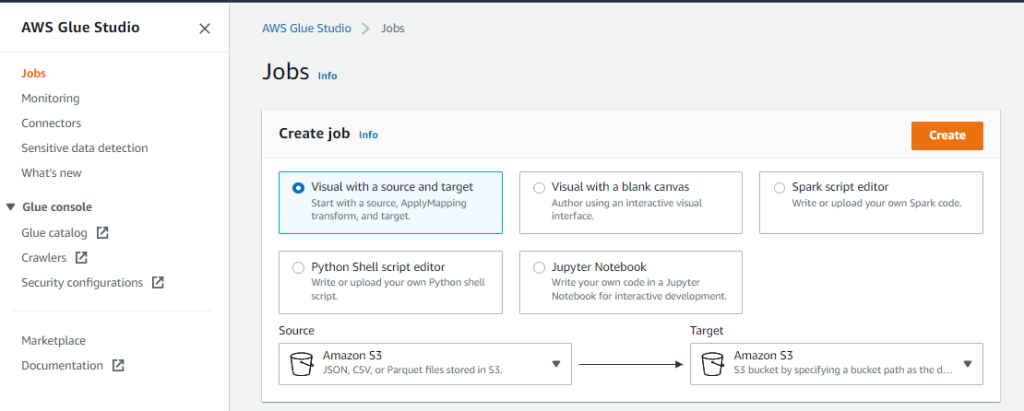

- On the AWS Glue Studio console, choose Jobs in the navigation pane.

- Select Visual with a source and target, then choose Create.

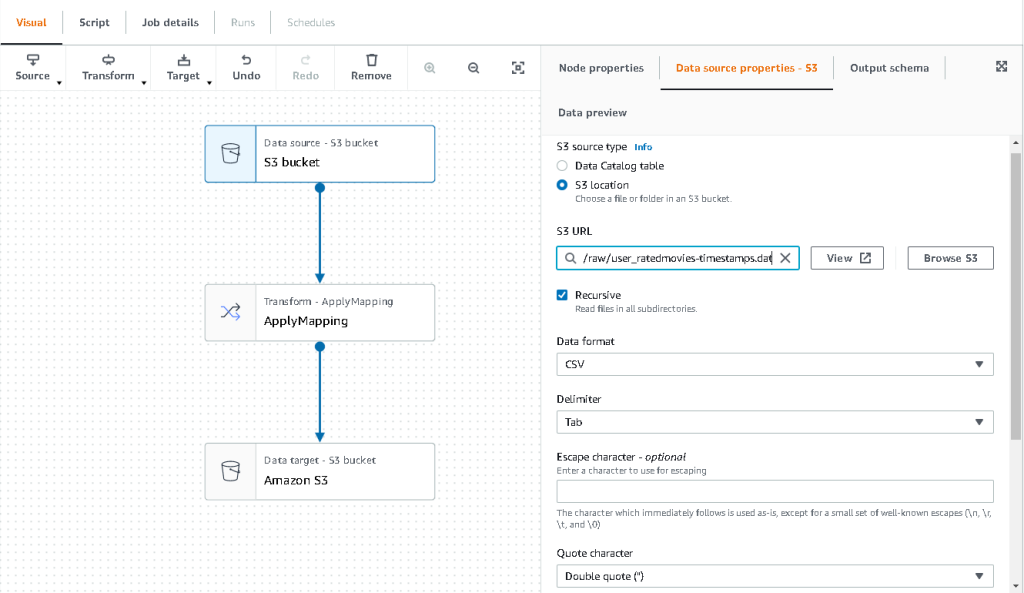

- Choose the first node called Data source – S3 bucket. This is where we specify our input data.

- On the Data source properties tab, select S3 location and browse to your uploaded file.

- For Data format, select CSV, and for Delimiter, select Tab.

- We can choose the Output schema tab to verify that the schema has inferred the columns correctly.

- If the schema doesn’t match your expectations, choose Edit to edit the schema.

Next, we transform this data to follow the schema requirements for Amazon Personalize.

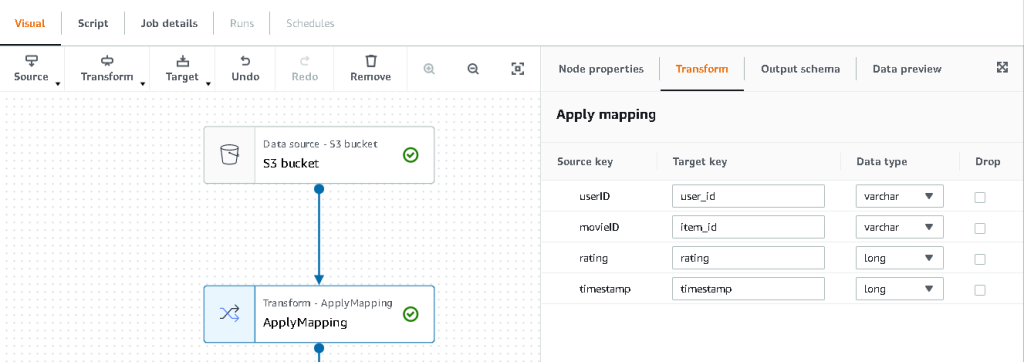

- Choose the Transform – Apply Mapping node and, on the Transform tab, update the target key and data types.

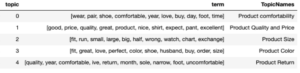

Amazon Personalize, at minimum, expects the following structure for the interactions dataset:

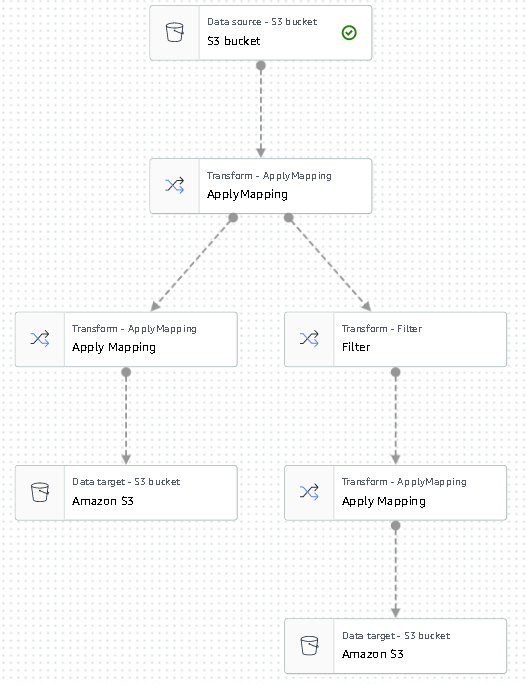
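For readers who prefer to see the structure as text, Amazon Personalize describes datasets with an Avro-style JSON schema. The sketch below builds what we assume is the minimal interactions schema for this walkthrough, using only the three fields named later in this post. The constant and helper names are illustrative and not part of any AWS API.

```python
import json

# Minimal interactions schema, assuming only the three required fields
# (USER_ID, ITEM_ID, TIMESTAMP) are kept by the AWS Glue job.
INTERACTIONS_SCHEMA = {
    "type": "record",
    "name": "Interactions",
    "namespace": "com.amazonaws.personalize.schema",
    "fields": [
        {"name": "USER_ID", "type": "string"},
        {"name": "ITEM_ID", "type": "string"},
        {"name": "TIMESTAMP", "type": "long"},
    ],
    "version": "1.0",
}


def csv_header(schema: dict) -> str:
    """Return the CSV header row that matches the schema's field order."""
    return ",".join(field["name"] for field in schema["fields"])


if __name__ == "__main__":
    print(json.dumps(INTERACTIONS_SCHEMA, indent=2))
    print(csv_header(INTERACTIONS_SCHEMA))
```

The header produced by `csv_header` is the column order the transformed CSV is expected to follow.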

In this example, we exclude the poorly rated movies in the dataset.

- To do so, remove the last node called S3 bucket and add a filter node on the Transform tab.

- Choose Add condition and filter out data where rating < 3.5.

We now write the output back to Amazon S3.

- Expand the Target menu and choose Amazon S3.

- For S3 Target Location, choose the folder named transformed.

- Choose CSV as the format and suffix the Target Location with interactions/.

Next, we output a list of users that we want to get recommendations for.

- Choose the ApplyMapping node again, and then expand the Transform menu and choose ApplyMapping.

- Drop all fields except for user_id and rename that field to userId. Amazon Personalize expects that field to be named userId.

- Expand the Target menu again and choose Amazon S3.

- This time, choose JSON as the format, and then choose the transformed S3 folder and suffix it with batch_users_input/.

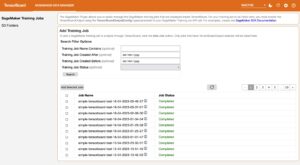

This produces a JSON list of users as input for Amazon Personalize. We should now have a diagram that looks like the following.

We are now ready to run our transform job.

- On the IAM console, create a role called glue-service-role and attach the following managed policies:

  - AWSGlueServiceRole

  - AmazonS3FullAccess

For more information on how to create IAM service roles, refer to Creating a role to delegate permissions to an AWS service.

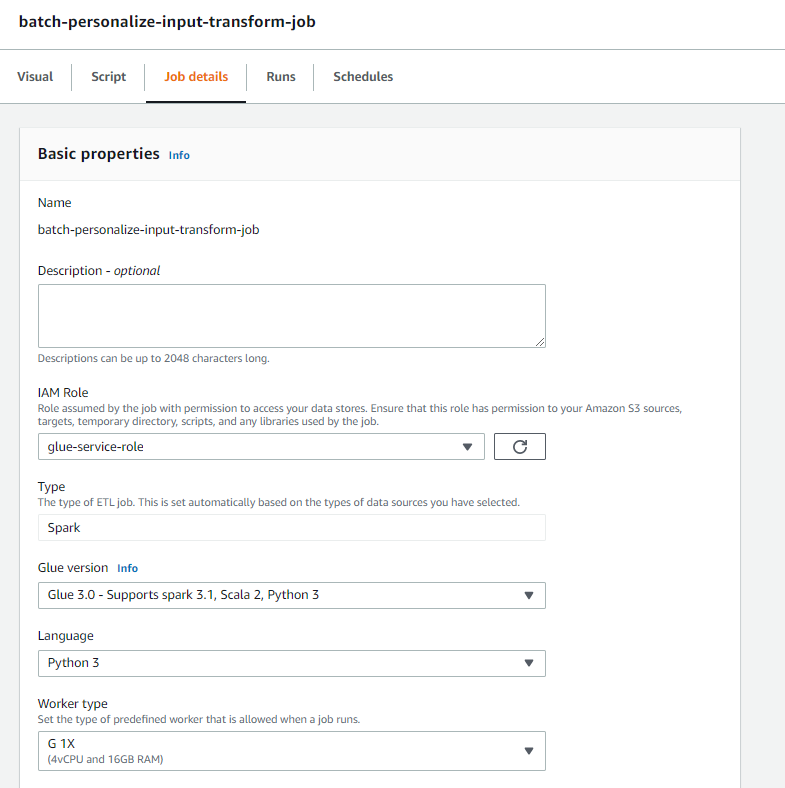

- Navigate back to your AWS Glue Studio job, and choose the Job details tab.

- Set the job name as batch-personalize-input-transform-job.

- Choose the newly created IAM role.

- Keep the default values for everything else.

- Choose Save.

- When you're ready, choose Run and monitor the job in the Runs tab.

- When the job is complete, navigate to the Amazon S3 console to validate that your output file has been successfully created.

We have now shaped our data into the format and structure that Amazon Personalize requires. The transformed dataset should have the following fields and format:

- Interactions dataset – CSV format with fields USER_ID, ITEM_ID, TIMESTAMP

- User input dataset – JSON format with element userId
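The walkthrough itself needs no code, but it can help to see the transformation spelled out. The following is a minimal local sketch of what the visual job does: map the columns, drop rows rated below 3.5, and emit the interactions CSV plus a JSON Lines list of users. The input column names (`user_id`, `movie_id`, `rating`, `timestamp`) and the sample rows are assumptions for illustration. Unlike the visual job as described, the sketch also deduplicates users, and it passes the timestamp through unchanged.

```python
import csv
import io
import json

MIN_RATING = 3.5  # rows rated below this are dropped, as in the filter step


def transform(raw_tsv: str):
    """Return (interactions_csv, users_json_lines) from tab-delimited text.

    Assumes a header row with the hypothetical column names
    user_id, movie_id, rating, timestamp.
    """
    reader = csv.DictReader(io.StringIO(raw_tsv), delimiter="\t")
    csv_out = io.StringIO()
    writer = csv.writer(csv_out, lineterminator="\n")
    writer.writerow(["USER_ID", "ITEM_ID", "TIMESTAMP"])

    seen = set()
    users = []
    for row in reader:
        user_id = row["user_id"]
        # The user list branches off before the filter, so every user is kept.
        if user_id not in seen:
            seen.add(user_id)
            users.append(user_id)
        if float(row["rating"]) < MIN_RATING:
            continue
        writer.writerow([user_id, row["movie_id"], row["timestamp"]])

    json_lines = "\n".join(json.dumps({"userId": u}) for u in users)
    return csv_out.getvalue(), json_lines


if __name__ == "__main__":
    sample = (
        "user_id\tmovie_id\trating\ttimestamp\n"
        "75\t3\t1.0\t1162160236\n"
        "75\t32\t4.5\t1162160624\n"
    )
    interactions, users = transform(sample)
    print(interactions)
    print(users)
```

If your raw timestamps are not in Unix epoch seconds, convert them before writing the TIMESTAMP column.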

Build an Amazon Personalize solution with the transformed dataset

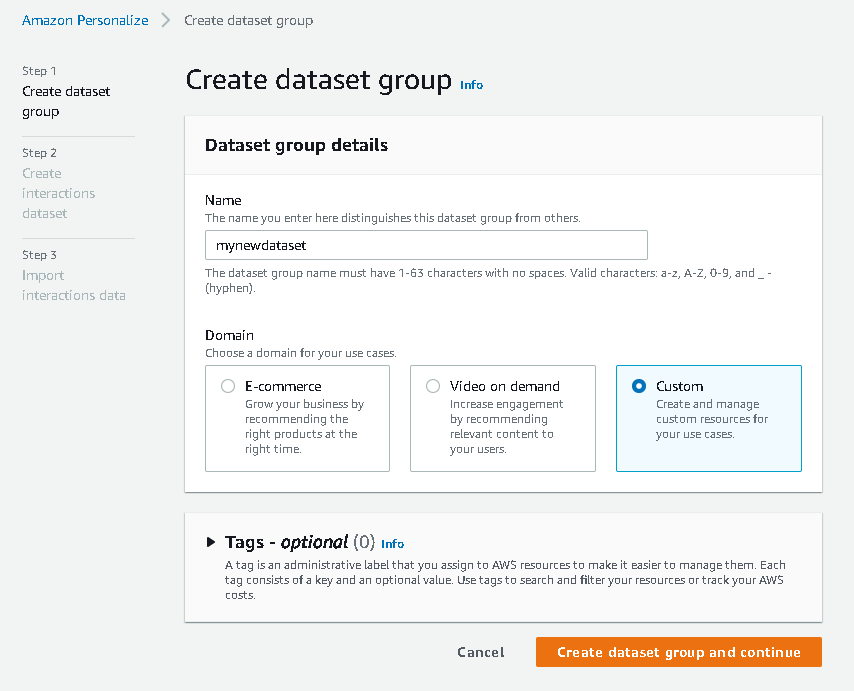

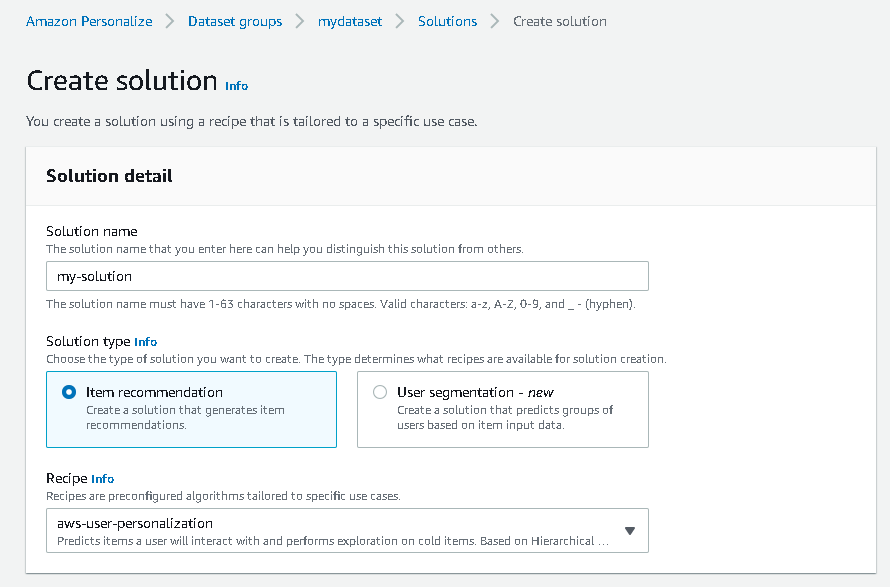

With our interactions dataset and user input data in the right format, we can now create our Amazon Personalize solution. In this section, we create our dataset group, import our data, and then create a batch inference job. A dataset group organizes resources into containers for Amazon Personalize components.

- On the Amazon Personalize console, choose Create dataset group.

- For Domain, select Custom.

- Choose Create dataset group and continue.

Next, create the interactions dataset.

We now import the interactions data that we had created earlier.

- Navigate to the S3 bucket in which we created our interactions CSV dataset.

- On the Permissions tab, add the following bucket access policy so that Amazon Personalize has access. Update the policy to include your bucket name.
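The policy text itself is not reproduced in this copy of the post. As a hedged sketch, the helper below generates a bucket policy in the shape commonly shown in the Amazon Personalize documentation, granting the `personalize.amazonaws.com` service principal read, list, and write access. The function name and the placeholder bucket name are assumptions; verify the result against the current AWS documentation before applying it.

```python
import json


def personalize_bucket_policy(bucket_name: str) -> dict:
    """Build a bucket policy that lets Amazon Personalize read and write the bucket."""
    return {
        "Version": "2012-10-17",
        "Id": "PersonalizeS3BucketAccessPolicy",
        "Statement": [
            {
                "Sid": "PersonalizeS3BucketAccessPolicy",
                "Effect": "Allow",
                "Principal": {"Service": "personalize.amazonaws.com"},
                # PutObject is included because the batch inference job
                # writes its output back to the bucket.
                "Action": ["s3:GetObject", "s3:ListBucket", "s3:PutObject"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }


if __name__ == "__main__":
    # "my-personalize-bucket" is a placeholder, not a name from this post.
    print(json.dumps(personalize_bucket_policy("my-personalize-bucket"), indent=2))
```

Paste the printed JSON into the bucket policy editor after replacing the placeholder with your bucket name.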

Navigate back to Amazon Personalize and choose Create your dataset import job. Our interactions dataset should now be importing into Amazon Personalize. Wait for the import job to complete with a status of Active before continuing to the next step. This should take approximately 8 minutes.
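If you would rather script the wait than watch the console, a polling loop along these lines is one option. It is a sketch only: `client` is assumed to behave like `boto3.client("personalize")`, the helper name and the polling interval are our own, and the loop stops on `ACTIVE` or any status ending in `FAILED`.

```python
import time


def wait_for_import(client, import_job_arn: str, poll_seconds: float = 60.0) -> str:
    """Poll until the dataset import job is ACTIVE or has failed.

    `client` is assumed to behave like boto3.client("personalize").
    """
    while True:
        response = client.describe_dataset_import_job(
            datasetImportJobArn=import_job_arn
        )
        status = response["datasetImportJob"]["status"]
        if status == "ACTIVE" or status.endswith("FAILED"):
            return status
        time.sleep(poll_seconds)
```

With boto3 installed and credentials configured, a call might look like `wait_for_import(boto3.client("personalize"), import_job_arn)`.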

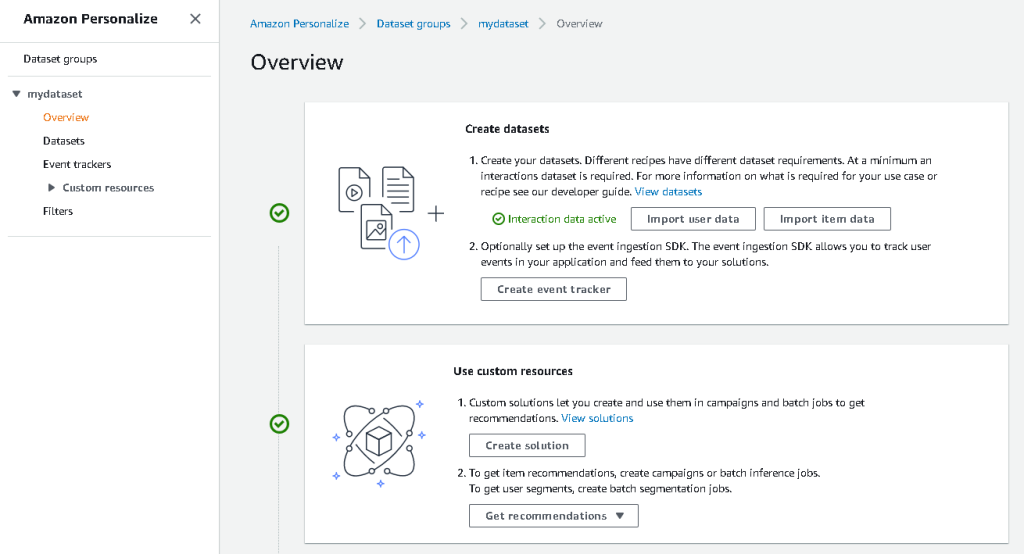

- On the Amazon Personalize console, choose Overview in the navigation pane and choose Create solution.

- Enter a solution name.

- For Solution type, choose Item recommendation.

- For Recipe, choose the aws-user-personalization recipe.

- Choose Create and train solution.

The solution now trains against the interactions dataset that was imported with the user personalization recipe. Monitor the status of this process under Solution versions. Wait for it to complete before proceeding. This should take approximately 20 minutes.
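The same two actions, creating a solution and training a version of it, can also be expressed through the Amazon Personalize API. The sketch below assumes `client` behaves like `boto3.client("personalize")`; the helper name and its arguments are illustrative, and this is not necessarily what the console does internally.

```python
USER_PERSONALIZATION_RECIPE = "arn:aws:personalize:::recipe/aws-user-personalization"


def create_and_train(client, name: str, dataset_group_arn: str) -> str:
    """Create a solution with the user personalization recipe and start training.

    Returns the ARN of the new solution version.
    `client` is assumed to behave like boto3.client("personalize").
    """
    solution = client.create_solution(
        name=name,
        datasetGroupArn=dataset_group_arn,
        recipeArn=USER_PERSONALIZATION_RECIPE,
    )
    version = client.create_solution_version(solutionArn=solution["solutionArn"])
    return version["solutionVersionArn"]
```

Training runs asynchronously, so the returned solution version is not usable until its status becomes active.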

We now create our batch inference job, which generates recommendations for each of the users present in the JSON input.

- In the navigation pane, under Custom resources, choose Batch inference jobs.

- Enter a job name, and for Solution, choose the solution created earlier.

- Choose Create batch inference job.

- For Input data configuration, enter the S3 path of where the batch_users_input file is located.

This is the JSON file that contains userId.

- For Output data configuration path, choose the curated path in S3.

- Choose Create batch inference job.
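For comparison, the console form above maps onto a single API call. The following sketch assumes `client` behaves like `boto3.client("personalize")` and that `role_arn` names an IAM role Amazon Personalize can assume to read and write your bucket. The helper name is our own, and the default of 25 results simply mirrors the default this post mentions.

```python
def start_batch_inference(client, job_name: str, solution_version_arn: str,
                          input_path: str, output_path: str, role_arn: str,
                          num_results: int = 25) -> str:
    """Start a batch inference job and return its ARN.

    `input_path` and `output_path` are S3 URIs, for example the
    batch_users_input location and the curated folder from this walkthrough.
    """
    response = client.create_batch_inference_job(
        jobName=job_name,
        solutionVersionArn=solution_version_arn,
        numResults=num_results,
        jobInput={"s3DataSource": {"path": input_path}},
        jobOutput={"s3DataDestination": {"path": output_path}},
        roleArn=role_arn,
    )
    return response["batchInferenceJobArn"]
```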

This process takes approximately 30 minutes. When the job is finished, recommendations for each of the users specified in the user input file are saved in the S3 output location.
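Once the output lands in Amazon S3, you may want to load it for inspection. The sketch below assumes the output is JSON Lines in which each record has an `input` object holding the `userId`, an `output` object with `recommendedItems`, and an `error` field. The sample record is made up for illustration and is not taken from the MovieLens results.

```python
import json


def parse_batch_output(text: str) -> dict:
    """Map each userId to its recommended item IDs from JSON Lines output."""
    recommendations = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        if record.get("error"):
            continue  # skip users for whom no recommendations were produced
        user_id = record["input"]["userId"]
        recommendations[user_id] = record["output"]["recommendedItems"]
    return recommendations


if __name__ == "__main__":
    # Made-up sample record in the assumed output shape.
    sample = '{"input": {"userId": "75"}, "output": {"recommendedItems": ["32", "110"]}, "error": null}'
    print(parse_batch_output(sample))
```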

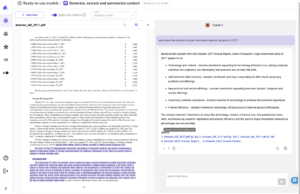

We have successfully generated a set of recommendations for all of our users. However, we have only implemented the solution using the console so far. To make sure that this batch inferencing runs regularly with the latest set of data, we need to build an orchestration workflow. In the next section, we show you how to create an orchestration workflow using Step Functions.

Build a Step Functions workflow to orchestrate the batch inference workflow

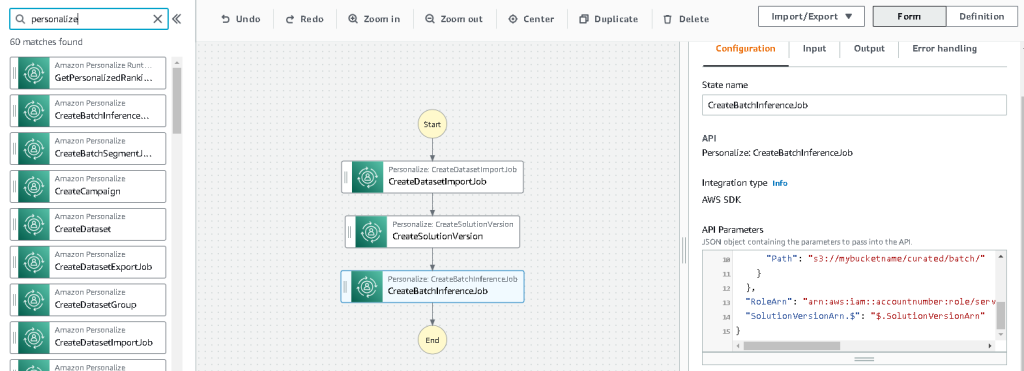

To orchestrate your pipeline, complete the following steps:

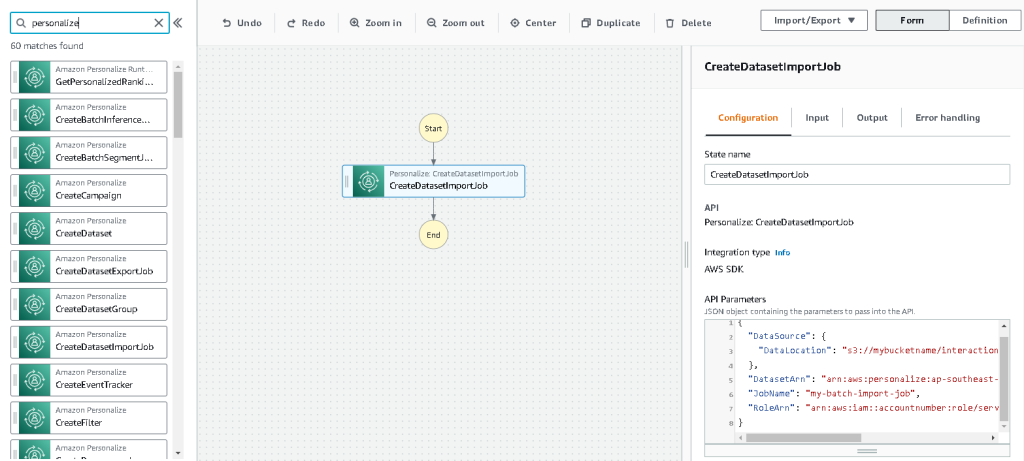

- On the Step Functions console, choose Create State Machine.

- Select Design your workflow visually, then choose Next.

- Drag the CreateDatasetImportJob node from the left (you can search for this node in the search box) onto the canvas.

- Choose the node, and you should see the configuration API parameters on the right. Record the ARN.

- Enter your own values in the API Parameters text box.

This calls the CreateDatasetImportJob API with the parameter values that you specify.

- Drag the CreateSolutionVersion node onto the canvas.

- Update the API parameters with the ARN of the solution that you noted down.

This creates a new solution version with the newly imported data by calling the CreateSolutionVersion API.

- Drag the CreateBatchInferenceJob node onto the canvas and similarly update the API parameters with the relevant values.

Make sure that you use the $.SolutionVersionArn syntax to retrieve the solution version ARN parameter from the previous step. These API parameters are passed to the CreateBatchInferenceJob API.

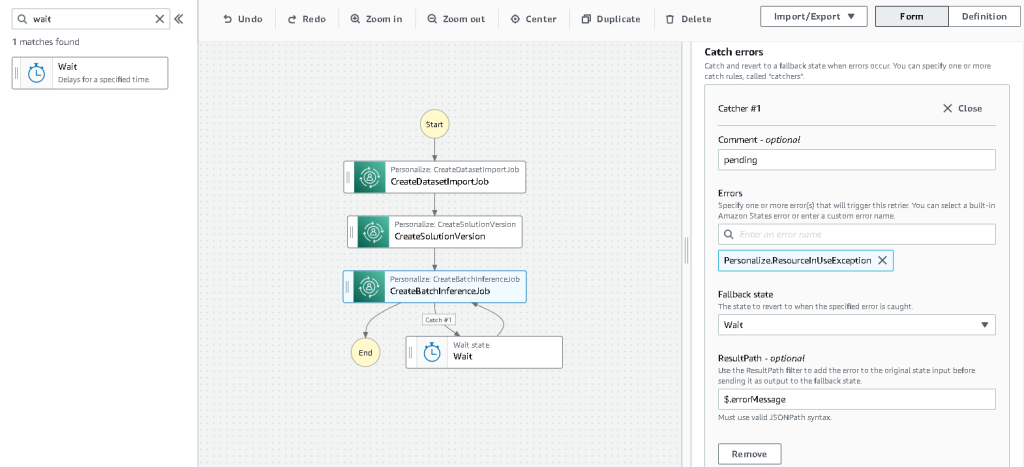

We need to build a wait logic in the Step Functions workflow to make sure the recommendation batch inference job finishes before the workflow completes.

- Find and drag in a Wait node.

- In the configuration for Wait, enter 300 seconds.

This is an arbitrary value; you should alter this wait time according to your specific use case.

- Choose the CreateBatchInferenceJob node again and navigate to the Error handling tab.

- For Catch errors, enter Personalize.ResourceInUseException.

- For Fallback state, choose Wait.

This step enables us to periodically check the status of the job and it only exits the loop when the job is complete.

- For ResultPath, enter $.errorMessage.

This effectively means that when the “resource in use” exception is received, the workflow waits for the configured number of seconds (300 in this example) before trying again with the same inputs.

- Choose Save, then choose Start the execution.
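The workflow assembled in the steps above can also be written as an Amazon States Language definition. The sketch below is one plausible arrangement rather than an export of the visual designer's output. It assumes the AWS SDK service integration resource names for Amazon Personalize, and the function and argument names are hypothetical. You supply the API parameters for the import and batch inference jobs yourself.

```python
import json

# Prefix for Step Functions AWS SDK integrations with Amazon Personalize.
SDK = "arn:aws:states:::aws-sdk:personalize:"


def build_definition(import_params: dict, solution_arn: str,
                     batch_params: dict, wait_seconds: int = 300) -> dict:
    """Return an Amazon States Language definition for the batch workflow."""
    return {
        "StartAt": "CreateDatasetImportJob",
        "States": {
            "CreateDatasetImportJob": {
                "Type": "Task",
                "Resource": SDK + "createDatasetImportJob",
                "Parameters": import_params,
                "Next": "CreateSolutionVersion",
            },
            "CreateSolutionVersion": {
                "Type": "Task",
                "Resource": SDK + "createSolutionVersion",
                "Parameters": {"SolutionArn": solution_arn},
                "Next": "CreateBatchInferenceJob",
            },
            "CreateBatchInferenceJob": {
                "Type": "Task",
                "Resource": SDK + "createBatchInferenceJob",
                "Parameters": {
                    **batch_params,
                    # Read the ARN returned by the previous state.
                    "SolutionVersionArn.$": "$.SolutionVersionArn",
                },
                "Catch": [
                    {
                        "ErrorEquals": ["Personalize.ResourceInUseException"],
                        # Keep the original input and attach the error to it.
                        "ResultPath": "$.errorMessage",
                        "Next": "Wait",
                    }
                ],
                "End": True,
            },
            "Wait": {
                "Type": "Wait",
                "Seconds": wait_seconds,
                "Next": "CreateBatchInferenceJob",
            },
        },
    }


if __name__ == "__main__":
    # Placeholder values only; replace them with your own parameters.
    print(json.dumps(build_definition({}, "<solution-arn>", {}), indent=2))
```

Because the catch writes the error under `$.errorMessage` instead of replacing the state input, the solution version ARN is still available when the Wait state loops back to the batch inference step.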

We have successfully orchestrated our batch recommendation pipeline for Amazon Personalize. As an optional step, you can use Amazon EventBridge to schedule a trigger of this workflow on a regular basis. For more details, refer to EventBridge (CloudWatch Events) for Step Functions execution status changes.
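As a rough illustration of the optional scheduling step, the sketch below creates an EventBridge rule on a fixed schedule and points it at the state machine. It assumes `events_client` behaves like `boto3.client("events")` and that `role_arn` names an IAM role EventBridge can assume to start executions of the state machine. The rule name, target ID, and daily rate are hypothetical choices.

```python
def schedule_workflow(events_client, state_machine_arn: str, role_arn: str,
                      rule_name: str = "batch-recommendations-daily",
                      schedule: str = "rate(1 day)") -> str:
    """Create a scheduled rule that starts the state machine; return the rule ARN."""
    rule = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[
            {
                "Id": "batch-recommendation-workflow",  # hypothetical target ID
                "Arn": state_machine_arn,
                "RoleArn": role_arn,
            }
        ],
    )
    return rule["RuleArn"]
```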

Clean up

To avoid incurring future charges, delete the resources that you created for this walkthrough.

Conclusion

In this post, we demonstrated how to create a batch recommendation pipeline by using a combination of AWS Glue, Amazon Personalize, and Step Functions, without needing a single line of code or ML experience. We used AWS Glue to prep our data into the format that Amazon Personalize requires. Then we used Amazon Personalize to import the data, create a solution with a user personalization recipe, and create a batch inferencing job that generates a default of 25 recommendations for each user, based on past interactions. We then orchestrated these steps using Step Functions so that we can run these jobs automatically.

For steps to consider next, user segmentation is one of the newer recipes in Amazon Personalize, which you might want to explore to create user segments for each row of the input data. For more details, refer to Getting batch recommendations and user segments.

About the author

Maxine Wee is an AWS Data Lab Solutions Architect. Maxine works with customers on their use cases, designs solutions to solve their business problems, and guides them through building scalable prototypes. Prior to her journey with AWS, Maxine helped customers implement BI, data warehousing, and data lake projects in Australia.
