Enterprises seek to harness the potential of Machine Learning (ML) to solve complex problems and improve outcomes. Until recently, building and deploying ML models required deep levels of technical and coding skills, including tuning ML models and maintaining operational pipelines. Since its introduction in 2021, ایمیزون سیج میکر کینوس has enabled business analysts to build, deploy, and use a variety of ML models – including tabular, computer vision, and natural language processing – without writing a line of code. This has accelerated the ability of enterprises to apply ML to use cases such as time-series forecasting, customer churn prediction, sentiment analysis, industrial defect detection, and many others.

جیسا کہ اعلان کیا گیا ہے۔ اکتوبر 5، 2023, SageMaker Canvas expanded its support of models to foundation models (FMs) – large language models used to generate and summarize content. With the 12 اکتوبر 2023 کو ریلیز, SageMaker Canvas lets users ask questions and get responses that are grounded in their enterprise data. This ensures that results are context-specific, opening up additional use cases where no-code ML can be applied to solve business problems. For example, business teams can now formulate responses consistent with an organization’s specific vocabulary and tenets, and can more quickly query lengthy documents to get responses specific and grounded to the contents of those documents. All this content is performed in a private and secure manner, ensuring that all sensitive data is accessed with proper governance and safeguards.

To get started, a cloud administrator configures and populates ایمیزون کیندر indexes with enterprise data as data sources for SageMaker Canvas. Canvas users select the index where their documents are, and can ideate, research, and explore knowing that the output will always be backed by their sources-of-truth. SageMaker Canvas uses state-of-the-art FMs from ایمیزون بیڈرک اور ایمیزون سیج میکر جمپ اسٹارٹ. Conversations can be started with multiple FMs side-by-side, comparing the outputs and truly making generative-AI accessible to everyone.

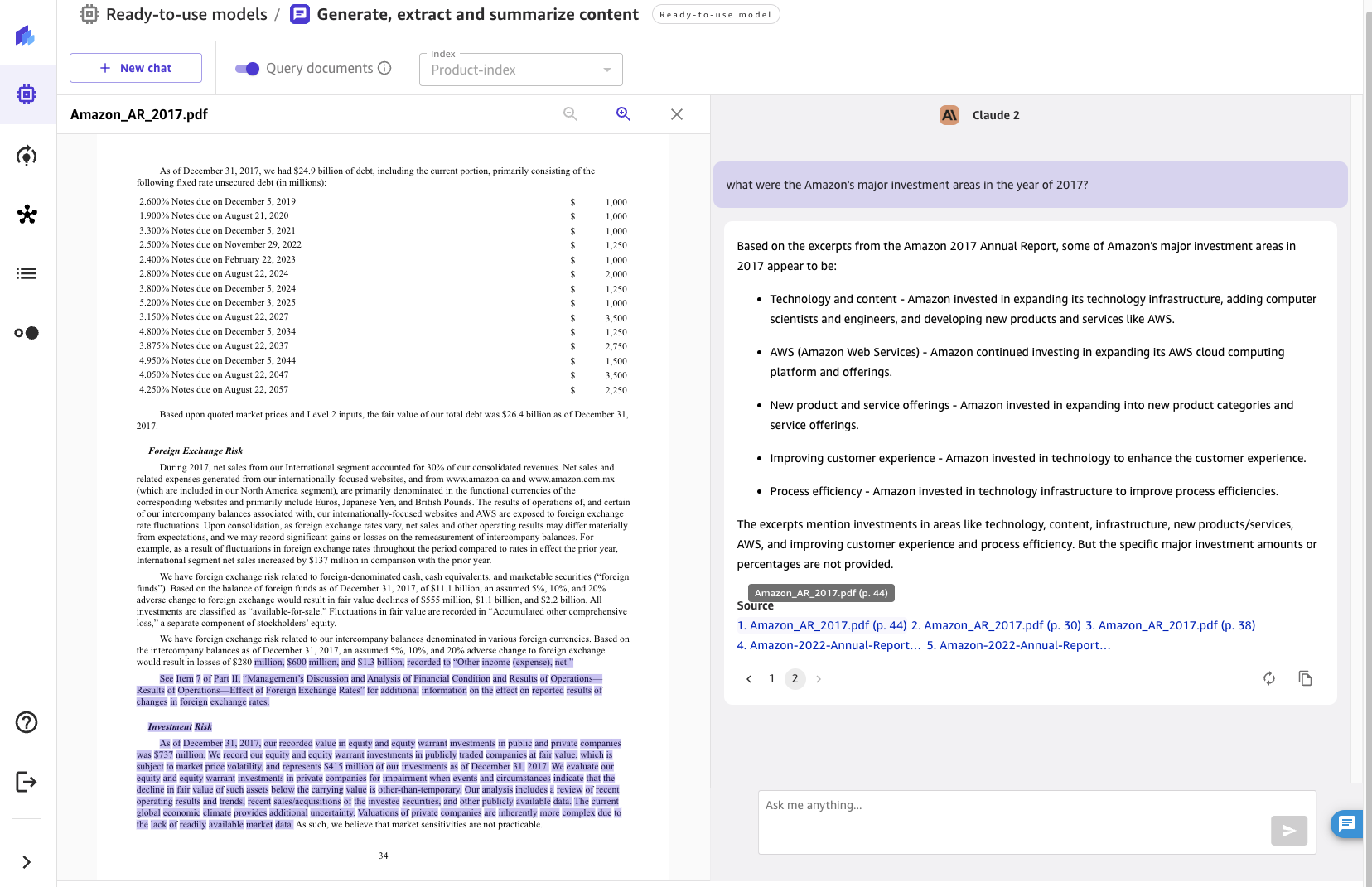

In this post, we will review the recently released feature, discuss the architecture, and present a step-by-step guide to enable SageMaker Canvas to query documents from your knowledge base, as shown in the following screen capture.

حل جائزہ

Foundation models can produce hallucinations – responses that are generic, vague, unrelated, or factually incorrect. بازیافت اگمینٹڈ جنریشن (RAG) is a frequently used approach to reduce hallucinations. RAG architectures are used to retrieve data from outside of an FM, which is then used to perform in-context learning to answer the user’s query. This ensures that the FM can use data from a trusted knowledge base and use that knowledge to answer users’ questions, reducing the risk of hallucination.

With RAG, the data external to the FM and used to augment user prompts can come from multiple disparate data sources, such as document repositories, databases, or APIs. The first step is to convert your documents and any user queries into a compatible format to perform relevancy semantic search. To make the formats compatible, a document collection, or knowledge library, and user-submitted queries are converted into numerical representations using embedding models.

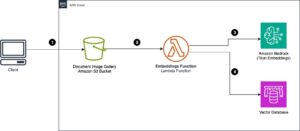

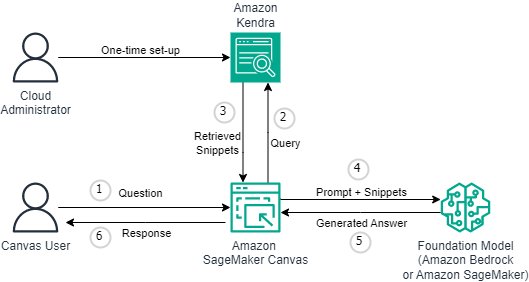

With this release, RAG functionality is provided in a no-code and seamless manner. Enterprises can enrich the chat experience in Canvas with Amazon Kendra as the underlying knowledge management system. The following diagram illustrates the solution architecture.

Connecting SageMaker Canvas to Amazon Kendra requires a one-time set-up. We describe the set-up process in detail in Setting up Canvas to query documents. If you haven’t already set-up your SageMaker Domain, refer to ایمیزون سیج میکر ڈومین پر آن بورڈ.

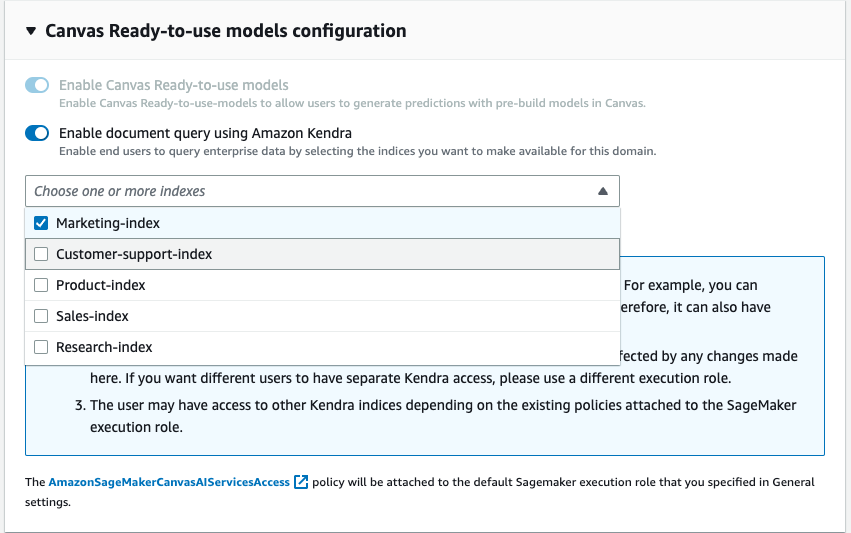

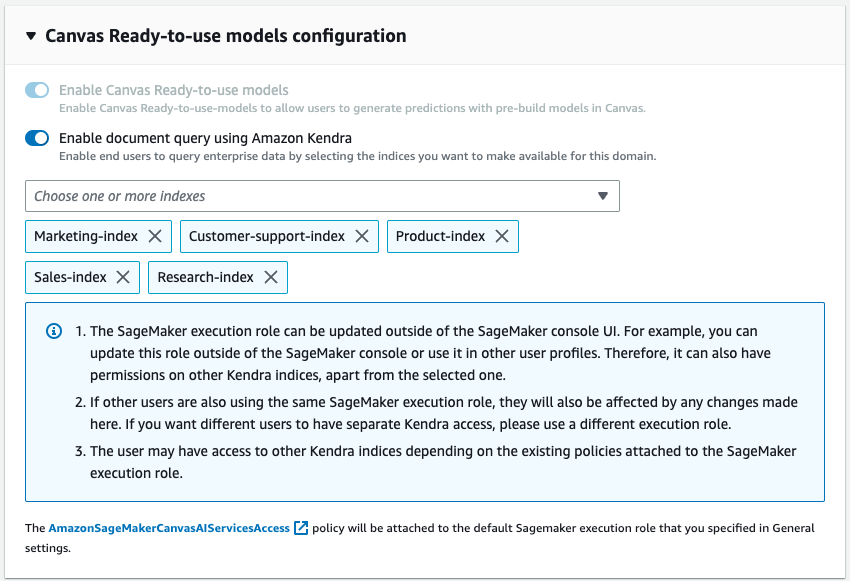

As part of the domain configuration, a cloud administrator can choose one or more Kendra indices that the business analyst can query when interacting with the FM through SageMaker Canvas.

After the Kendra indices are hydrated and configured, business analysts use them with SageMaker Canvas by starting a new chat and selecting “Query Documents” toggle. SageMaker Canvas will then manage the underlying communication between Amazon Kendra and the FM of choice to perform the following operations:

- Query the Kendra indices with the question coming from the user.

- Retrieve the snippets (and the sources) from Kendra indices.

- Engineer the prompt with the snippets with the original query so that the foundation model can generate an answer from the retrieved documents.

- Provide the generated answer to the user, along with references to the pages/documents that were used to formulate the response.

Setting up Canvas to query documents

In this section, we will walk you through the steps to set up Canvas to query documents served through Kendra indexes. You should have the following prerequisites:

- SageMaker Domain setup – ایمیزون سیج میکر ڈومین پر آن بورڈ

- ایک تخلیق کریں Kendra index (or more than one)

- Setup the Kendra Amazon S3 connector – follow the Amazon S3 Connector – and upload PDF files and other documents to the Amazon S3 bucket associated with the Kendra index

- Setup IAM so that Canvas has the appropriate permissions, including those required for calling Amazon Bedrock and/or SageMaker endpoints – follow the Set-up Canvas Chat دستاویزات

Now you can update the Domain so that it can access the desired indices. On the SageMaker console, for the given Domain, select Edit under the Domain Settings tab. Enable the toggle “Enable query documents with Amazon Kendra” which can be found at the Canvas Settings step. Once activated, choose one or more Kendra indices that you want to use with Canvas.

That’s all that’s needed to configure Canvas query documents feature. Users can now jump into a chat within Canvas and start using the knowledge bases that have been attached to the Domain through the Kendra indexes. The maintainers of the knowledge-base can continue to update the source-of-truth and with the syncing capability in Kendra, the chat users will automatically be able to use the up-to-date information in a seamless manner.

Using the Query Documents feature for chat

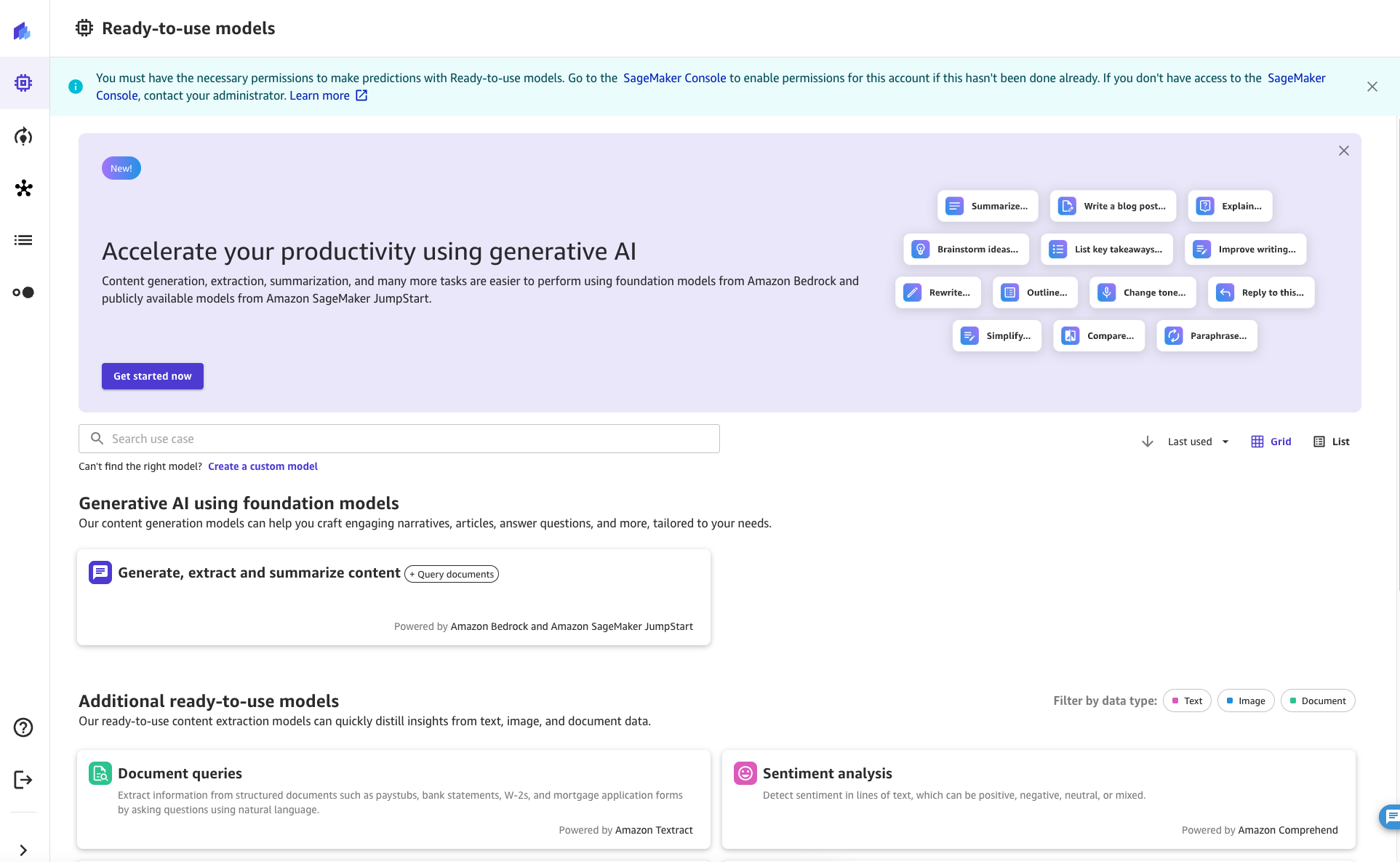

As a SageMaker Canvas user, the Query Documents feature can be accessed from within a chat. To start the chat session, click or search for the “Generate, extract and summarize content” button from the Ready-to-use models tab in SageMaker Canvas.

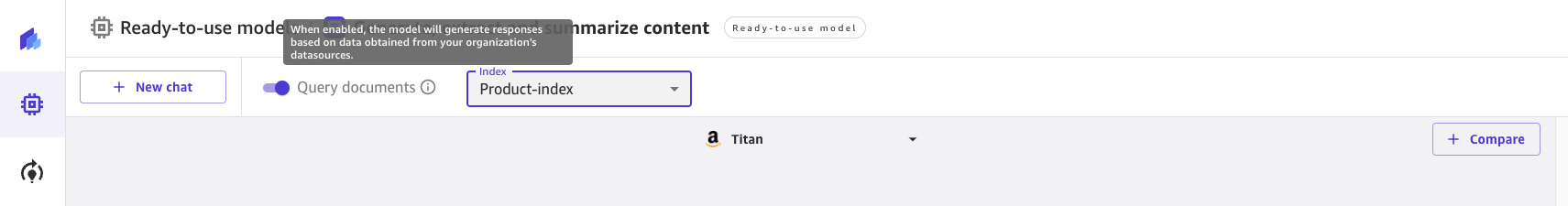

Once there, you can turn on and off Query Documents with the toggle at the top of the screen. Check out the information prompt to learn more about the feature.

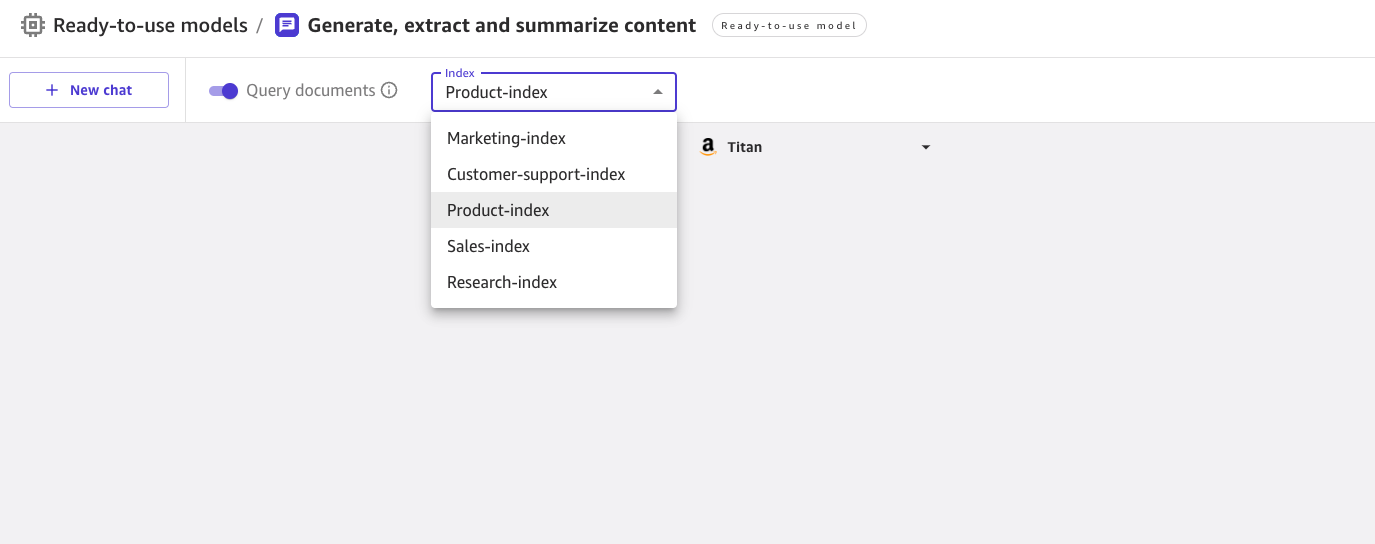

When Query Documents is enabled, you can choose among a list of Kendra indices enabled by the cloud administrator.

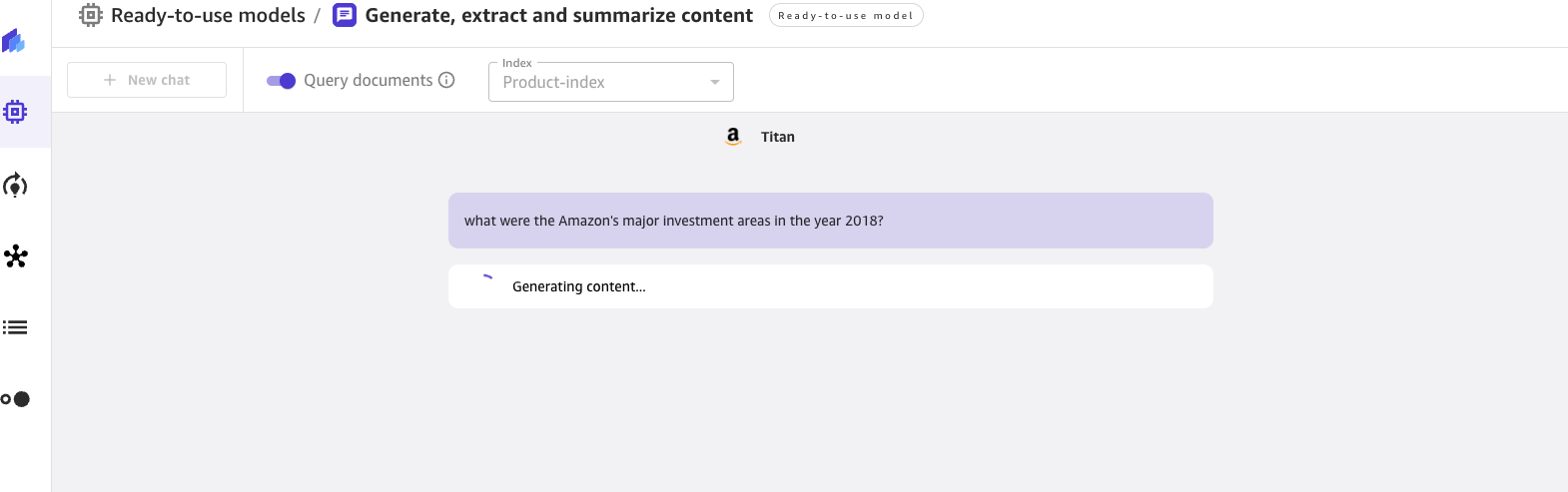

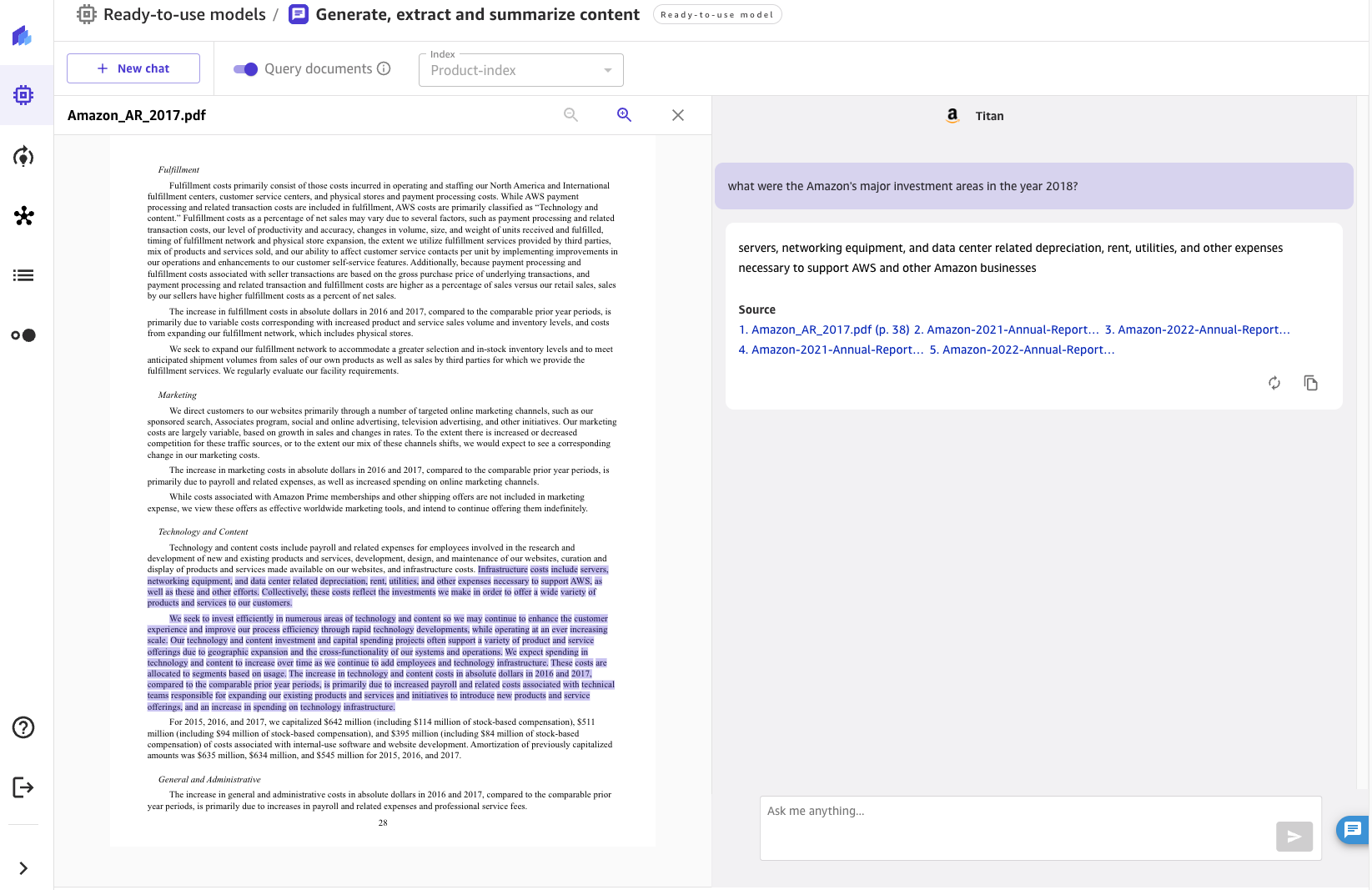

You can select an index when starting a new chat. You can then ask a question in the UX with knowledge being automatically sourced from the selected index. Note that after a conversation has started against a specific index, it is not possible to switch to another index.

For the questions asked, the chat will show the answer generated by the FM along with the source documents that contributed to generating the answer. When clicking any of the source documents, Canvas opens a preview of the document, highlighting the excerpt used by the FM.

نتیجہ

Conversational AI has immense potential to transform customer and employee experience by providing a human-like assistant with natural and intuitive interactions such as:

- Performing research on a topic or search and browse the organization’s knowledge base

- Summarizing volumes of content to rapidly gather insights

- Searching for Entities, Sentiments, PII and other useful data, and increasing the business value of unstructured content

- Generating drafts for documents and business correspondence

- Creating knowledge articles from disparate internal sources (incidents, chat logs, wikis)

The innovative integration of chat interfaces, knowledge retrieval, and FMs enables enterprises to provide accurate, relevant responses to user questions by using their domain knowledge and sources-of-truth.

By connecting SageMaker Canvas to knowledge bases in Amazon Kendra, organizations can keep their proprietary data within their own environment while still benefiting from state-of-the-art natural language capabilities of FMs. With the launch of SageMaker Canvas’s Query Documents feature, we are making it easy for any enterprise to use LLMs and their enterprise knowledge as source-of-truth to power a secure chat experience. All this functionality is available in a no-code format, allowing businesses to avoid handling the repetitive and non-specialized tasks.

To learn more about SageMaker Canvas and how it helps make it easier for everyone to start with Machine Learning, check out the SageMaker Canvas announcement. Learn more about how SageMaker Canvas helps foster collaboration between data scientists and business analysts by reading the Build, Share & Deploy post. Finally, to learn how to create your own Retrieval Augmented Generation workflow, refer to SageMaker JumpStart RAG.

حوالہ جات

Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., Küttler, H., Lewis, M., Yih, W., Rocktäschel, T., Riedel, S., Kiela, D. (2020). Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. نیورل انفارمیشن پروسیسنگ سسٹمز میں پیشرفت, 33، 9459-9474.

مصنفین کے بارے میں

ڈیوڈ گیلیٹیلی AI/ML کے لیے ایک سینئر اسپیشلسٹ سلوشنز آرکیٹیکٹ ہیں۔ وہ برسلز میں مقیم ہے اور پوری دنیا کے صارفین کے ساتھ مل کر کام کرتا ہے جو لو-کوڈ/نو-کوڈ مشین لرننگ ٹیکنالوجیز، اور جنریٹو AI کو اپنانے کے خواہاں ہیں۔ وہ اس وقت سے ایک ڈویلپر رہا ہے جب وہ بہت چھوٹا تھا، اس نے 7 سال کی عمر میں کوڈ بنانا شروع کیا۔ اس نے یونیورسٹی میں AI/ML سیکھنا شروع کیا، اور تب سے اسے اس سے پیار ہو گیا۔

ڈیوڈ گیلیٹیلی AI/ML کے لیے ایک سینئر اسپیشلسٹ سلوشنز آرکیٹیکٹ ہیں۔ وہ برسلز میں مقیم ہے اور پوری دنیا کے صارفین کے ساتھ مل کر کام کرتا ہے جو لو-کوڈ/نو-کوڈ مشین لرننگ ٹیکنالوجیز، اور جنریٹو AI کو اپنانے کے خواہاں ہیں۔ وہ اس وقت سے ایک ڈویلپر رہا ہے جب وہ بہت چھوٹا تھا، اس نے 7 سال کی عمر میں کوڈ بنانا شروع کیا۔ اس نے یونیورسٹی میں AI/ML سیکھنا شروع کیا، اور تب سے اسے اس سے پیار ہو گیا۔

Bilal Alam is an Enterprise Solutions Architect at AWS with a focus on the Financial Services industry. On most days Bilal is helping customers with building, uplifting and securing their AWS environment to deploy their most critical workloads. He has extensive experience in Telco, networking, and software development. More recently, he has been looking into using AI/ML to solve business problems.

Bilal Alam is an Enterprise Solutions Architect at AWS with a focus on the Financial Services industry. On most days Bilal is helping customers with building, uplifting and securing their AWS environment to deploy their most critical workloads. He has extensive experience in Telco, networking, and software development. More recently, he has been looking into using AI/ML to solve business problems.

پشمین مستری AWS میں ایک سینئر پروڈکٹ مینیجر ہے۔ کام سے باہر، پشمین کو مہم جوئی کے سفر، فوٹو گرافی، اور اپنے خاندان کے ساتھ وقت گزارنے کا لطف آتا ہے۔

پشمین مستری AWS میں ایک سینئر پروڈکٹ مینیجر ہے۔ کام سے باہر، پشمین کو مہم جوئی کے سفر، فوٹو گرافی، اور اپنے خاندان کے ساتھ وقت گزارنے کا لطف آتا ہے۔

ڈین سنریچ AWS میں ایک سینئر پروڈکٹ مینیجر ہے، جو کم کوڈ/نو کوڈ مشین لرننگ کو جمہوری بنانے میں مدد کرتا ہے۔ AWS سے پہلے، ڈین نے بنایا اور تجارتی بنایا انٹرپرائز SaaS پلیٹ فارمز اور ٹائم سیریز کے ماڈل جو ادارہ جاتی سرمایہ کاروں کے ذریعہ خطرے کا انتظام کرنے اور بہترین پورٹ فولیوز کی تعمیر کے لیے استعمال کیے گئے۔ کام سے باہر، وہ ہاکی، سکوبا ڈائیونگ، اور سائنس فکشن پڑھتے ہوئے پایا جا سکتا ہے۔

ڈین سنریچ AWS میں ایک سینئر پروڈکٹ مینیجر ہے، جو کم کوڈ/نو کوڈ مشین لرننگ کو جمہوری بنانے میں مدد کرتا ہے۔ AWS سے پہلے، ڈین نے بنایا اور تجارتی بنایا انٹرپرائز SaaS پلیٹ فارمز اور ٹائم سیریز کے ماڈل جو ادارہ جاتی سرمایہ کاروں کے ذریعہ خطرے کا انتظام کرنے اور بہترین پورٹ فولیوز کی تعمیر کے لیے استعمال کیے گئے۔ کام سے باہر، وہ ہاکی، سکوبا ڈائیونگ، اور سائنس فکشن پڑھتے ہوئے پایا جا سکتا ہے۔

- SEO سے چلنے والا مواد اور PR کی تقسیم۔ آج ہی بڑھا دیں۔

- پلیٹو ڈیٹا ڈاٹ نیٹ ورک ورٹیکل جنریٹو اے آئی۔ اپنے آپ کو بااختیار بنائیں۔ یہاں تک رسائی حاصل کریں۔

- پلیٹوآئ اسٹریم۔ ویب 3 انٹیلی جنس۔ علم میں اضافہ۔ یہاں تک رسائی حاصل کریں۔

- پلیٹو ای ایس جی۔ کاربن، کلین ٹیک، توانائی ، ماحولیات، شمسی، ویسٹ مینجمنٹ یہاں تک رسائی حاصل کریں۔

- پلیٹو ہیلتھ۔ بائیوٹیک اینڈ کلینیکل ٹرائلز انٹیلی جنس۔ یہاں تک رسائی حاصل کریں۔

- ماخذ: https://aws.amazon.com/blogs/machine-learning/empower-your-business-users-to-extract-insights-from-company-documents-using-amazon-sagemaker-canvas-generative-ai/

- : ہے

- : ہے

- : نہیں

- :کہاں

- ][p

- $UP

- 100

- 12

- 2020

- 2021

- 2023

- 225

- 7

- a

- کی صلاحیت

- قابلیت

- ہمارے بارے میں

- تیز

- تک رسائی حاصل

- رسائی

- قابل رسائی

- درست

- ایڈیشنل

- اپنانے

- کے بعد

- کے خلاف

- عمر

- AI

- AI / ML

- تمام

- اجازت دے رہا ہے

- ساتھ

- پہلے ہی

- ہمیشہ

- ایمیزون

- ایمیزون کیندر

- ایمیزون سیج میکر

- ایمیزون سیج میکر کینوس

- ایمیزون ویب سروسز

- کے درمیان

- an

- تجزیہ

- تجزیہ کار

- تجزیہ کار کہتے ہیں

- اور

- کا اعلان کیا ہے

- ایک اور

- جواب

- کوئی بھی

- APIs

- اطلاقی

- کا اطلاق کریں

- نقطہ نظر

- مناسب

- فن تعمیر

- کیا

- ارد گرد

- مضامین

- AS

- پوچھنا

- اسسٹنٹ

- منسلک

- At

- اضافہ

- اضافہ

- خود کار طریقے سے

- دستیاب

- سے اجتناب

- AWS

- حمایت کی

- بیس

- کی بنیاد پر

- BE

- رہا

- کیا جا رہا ہے

- فائدہ مند

- کے درمیان

- برسلز

- تعمیر

- عمارت

- تعمیر

- کاروبار

- کاروبار

- بٹن

- by

- بلا

- کر سکتے ہیں

- کینوس

- صلاحیتوں

- صلاحیت

- قبضہ

- مقدمات

- چیک کریں

- انتخاب

- میں سے انتخاب کریں

- کلک کریں

- قریب سے

- بادل

- کوڈ

- کوڈنگ

- تعاون

- مجموعہ

- کس طرح

- آنے والے

- مواصلات

- کمپنی کے

- موازنہ

- ہم آہنگ

- پیچیدہ

- کمپیوٹر

- کمپیوٹر ویژن

- ترتیب

- تشکیل شدہ

- مربوط

- متواتر

- کنسول

- تعمیر

- مواد

- مندرجات

- جاری

- حصہ ڈالا

- بات چیت

- مکالمات

- تبدیل

- تبدیل

- تخلیق

- اہم

- گاہک

- گاہکوں

- اعداد و شمار

- ڈیٹا بیس

- دن

- گہری

- جمہوری بنانا

- تعیناتی

- تعینات

- بیان

- مطلوبہ

- تفصیل

- کھوج

- ڈیولپر

- ترقی

- بات چیت

- متفق

- ڈائیونگ

- دستاویز

- دستاویزات

- ڈومین

- e

- آسان

- آسان

- سرایت کرنا

- ملازم

- بااختیار

- کو چالو کرنے کے

- چالو حالت میں

- کے قابل بناتا ہے

- افزودگی

- یقینی بناتا ہے

- کو یقینی بنانے ہے

- انٹرپرائز

- اداروں

- اداروں

- ماحولیات

- سب

- مثال کے طور پر

- توسیع

- تجربہ

- تلاش

- وسیع

- وسیع تجربہ

- بیرونی

- نکالنے

- گر

- خاندان

- نمایاں کریں

- افسانے

- فائلوں

- آخر

- مالی

- مالیاتی خدمات

- پہلا

- توجہ مرکوز

- پر عمل کریں

- کے بعد

- کے لئے

- فارمیٹ

- رضاعی

- ملا

- فاؤنڈیشن

- اکثر

- سے

- فعالیت

- جمع

- پیدا

- پیدا

- پیدا کرنے والے

- نسل

- پیداواری

- پیداواری AI۔

- حاصل

- دی

- دنیا

- گورننس

- رہنمائی

- ہینڈلنگ

- کنٹرول

- ہے

- he

- مدد

- مدد کرتا ہے

- اجاگر کرنا۔

- پریشان

- ان

- کس طرح

- کیسے

- HTML

- HTTP

- HTTPS

- if

- وضاحت کرتا ہے

- بہت زیادہ

- کو بہتر بنانے کے

- in

- سمیت

- اضافہ

- انڈکس

- انڈیکس

- Indices

- صنعتی

- صنعت

- معلومات

- جدید

- بصیرت

- ادارہ

- ادارہ جاتی سرمایہ کاروں

- انضمام

- بات چیت

- بات چیت

- انٹرفیسز

- اندرونی

- میں

- تعارف

- بدیہی

- سرمایہ

- IT

- میں

- فوٹو

- کودنے

- رکھیں

- جاننا

- علم

- علم مینجمنٹ

- زبان

- بڑے

- شروع

- جانیں

- سیکھنے

- آو ہم

- سطح

- لیوس

- لائبریری

- لائن

- لسٹ

- تلاش

- محبت

- مشین

- مشین لرننگ

- برقرار رکھنے

- بنا

- بنانا

- انتظام

- انتظام

- مینیجر

- انداز

- بہت سے

- ML

- ماڈل

- ماڈل

- زیادہ

- سب سے زیادہ

- ایک سے زیادہ

- قدرتی

- قدرتی زبان عملیات

- ضرورت

- نیٹ ورکنگ

- عصبی

- نئی

- ویزا

- اب

- of

- بند

- on

- ایک بار

- ایک

- کھولنے

- کھولتا ہے

- آپریشنل

- آپریشنز

- زیادہ سے زیادہ

- or

- تنظیمیں

- اصل

- دیگر

- دیگر

- باہر

- نتائج

- پیداوار

- نتائج

- باہر

- خود

- حصہ

- انجام دیں

- کارکردگی

- اجازتیں

- فوٹو گرافی

- تصویر

- پلیٹ فارم

- پلاٹا

- افلاطون ڈیٹا انٹیلی جنس

- پلیٹو ڈیٹا

- کھیل

- محکموں

- ممکن

- پوسٹ

- ممکنہ

- طاقت

- کی پیشن گوئی

- ضروریات

- حال (-)

- پیش نظارہ

- پچھلا

- نجی

- مسائل

- عمل

- پروسیسنگ

- پیدا

- مصنوعات

- پروڈکٹ مینیجر

- مناسب

- ملکیت

- فراہم

- فراہم

- فراہم کرنے

- سوالات

- سوال

- سوالات

- جلدی سے

- میں تیزی سے

- پڑھنا

- حال ہی میں

- کو کم

- کو کم کرنے

- کا حوالہ دیتے ہیں

- حوالہ جات

- جاری

- جاری

- متعلقہ

- بار بار

- ضرورت

- کی ضرورت ہے

- تحقیق

- جواب

- جوابات

- نتائج کی نمائش

- کا جائزہ لینے کے

- رسک

- s

- ساس

- تحفظات

- sagemaker

- سائنس

- اشتھانکلپنا

- سائنسدانوں

- سکرین

- ہموار

- تلاش کریں

- سیکشن

- محفوظ بنانے

- محفوظ

- طلب کرو

- منتخب

- منتخب

- سینئر

- حساس

- جذبات

- احساسات

- خدمت کی

- سروسز

- اجلاس

- مقرر

- قائم کرنے

- ترتیبات

- سیٹ اپ

- سیکنڈ اور

- ہونا چاہئے

- دکھائیں

- دکھایا گیا

- بعد

- مہارت

- So

- سافٹ ویئر کی

- سوفٹ ویئر کی نشوونما

- حل

- حل

- حل

- ماخذ

- ھٹا

- ذرائع

- ماہر

- مخصوص

- خرچ کرنا۔

- شروع کریں

- شروع

- شروع

- ریاستی آرٹ

- مرحلہ

- مراحل

- ابھی تک

- اس طرح

- مختصر

- حمایت

- سوئچ کریں

- کے نظام

- کاموں

- ٹیموں

- ٹیکنیکل

- ٹیکنالوجی

- ٹیلکو

- اصولوں

- سے

- کہ

- ۔

- کے بارے میں معلومات

- ماخذ

- ان

- ان

- تو

- وہاں.

- اس

- ان

- کے ذریعے

- وقت

- کرنے کے لئے

- سب سے اوپر

- موضوع

- تبدیل

- واقعی

- قابل اعتماد

- ٹیوننگ

- ٹرن

- کے تحت

- بنیادی

- یونیورسٹی

- جب تک

- اپ ڈیٹ کرنے کے لئے

- اپ ڈیٹ کریں

- ترقی

- استعمال کی شرائط

- استعمال کیا جاتا ہے

- رکن کا

- صارفین

- استعمال

- کا استعمال کرتے ہوئے

- ux

- قیمت

- مختلف اقسام کے

- بہت

- نقطہ نظر

- جلد

- W

- چلنا

- چاہتے ہیں

- تھا

- we

- ویب

- ویب خدمات

- تھے

- جب

- جس

- جبکہ

- گے

- ساتھ

- کے اندر

- بغیر

- کام

- کام کا بہاؤ

- کام کرتا ہے

- تحریری طور پر

- تم

- نوجوان

- اور

- زیفیرنیٹ