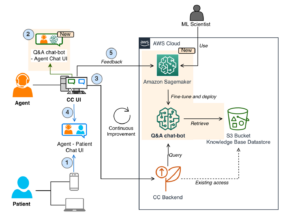

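Today, we are excited to announce that the Llama 2 foundation models developed by Meta are available to customers through Amazon SageMaker JumpStart. The Llama 2 family of large language models (LLMs) is a collection of pre-trained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. The fine-tuned LLMs, called Llama-2-chat, are optimized for dialogue use cases. You can easily try out these models and use them with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms, models, and ML solutions so you can quickly get started with ML.

In this post, we walk through how to use Llama 2 models via SageMaker JumpStart.

What is Llama 2

Llama 2 is an auto-regressive language model that uses an optimized transformer architecture. Llama 2 is intended for commercial and research use in English. It comes in a range of parameter sizes (7 billion, 13 billion, and 70 billion) as well as pre-trained and fine-tuned variations. According to Meta, the tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align to human preferences for helpfulness and safety. Llama 2 was pre-trained on 2 trillion tokens of data from publicly available sources. The tuned models are intended for assistant-like chat, whereas the pre-trained models can be adapted for a variety of natural language generation tasks. Regardless of which version of the model a developer uses, Meta's responsible use guide can help guide any additional fine-tuning that may be necessary to customize and optimize the models with appropriate safety mitigations.

What is SageMaker JumpStart

With SageMaker JumpStart, ML practitioners can choose from a broad selection of open-source foundation models. ML practitioners can deploy foundation models to dedicated Amazon SageMaker instances from a network-isolated environment and customize models using SageMaker for model training and deployment.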

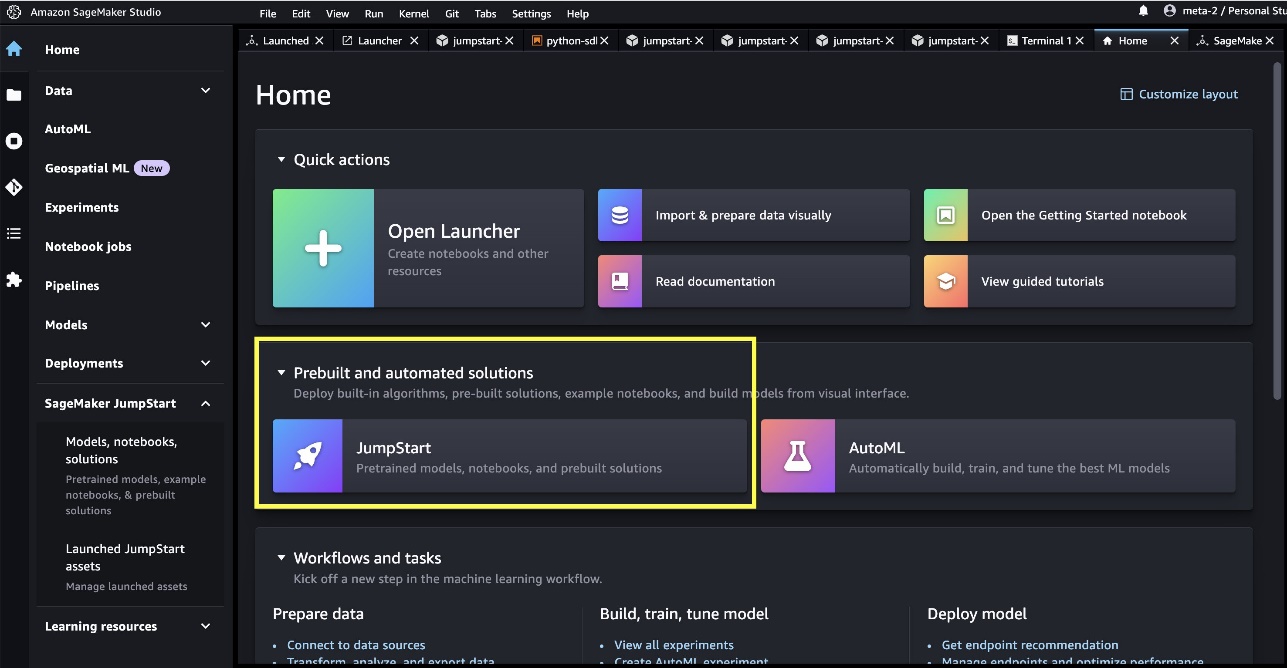

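You can now discover and deploy Llama 2 with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK, enabling you to derive model performance and MLOps controls with SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your VPC controls, helping ensure data security. Llama 2 models are available today in Amazon SageMaker Studio, initially in the us-east-1 and us-west-2 Regions.

Discover models

You can access the foundation models through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an integrated development environment (IDE) that provides a single web-based visual interface where you can access purpose-built tools to perform all ML development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, refer to Amazon SageMaker Studio.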

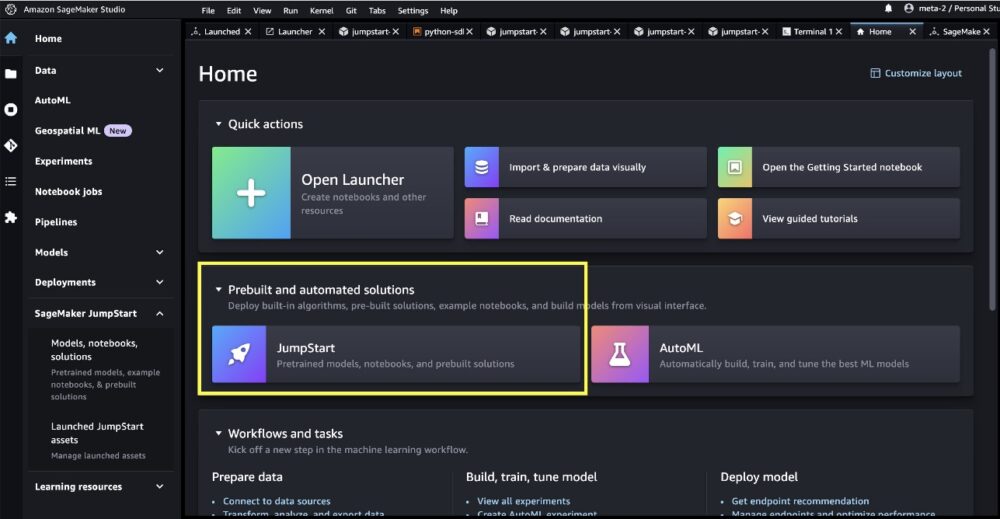

Once you're in SageMaker Studio, you can access SageMaker JumpStart, which contains pre-trained models, notebooks, and prebuilt solutions, under Prebuilt and automated solutions.

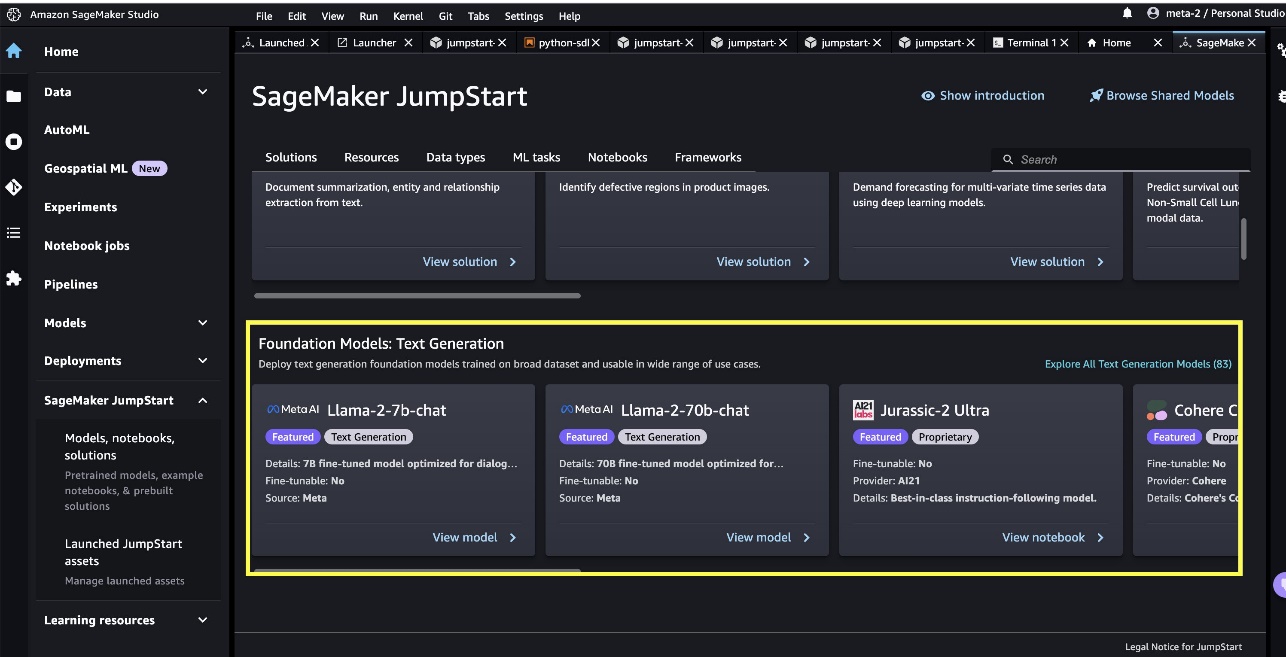

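From the SageMaker JumpStart landing page, you can browse for solutions, models, notebooks, and other resources. You can find two flagship Llama 2 models in the Foundation Models: Text Generation carousel. If you don't see the Llama 2 models, update your SageMaker Studio version by shutting down and restarting. For more information about version updates, refer to Shut down and Update Studio Apps.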

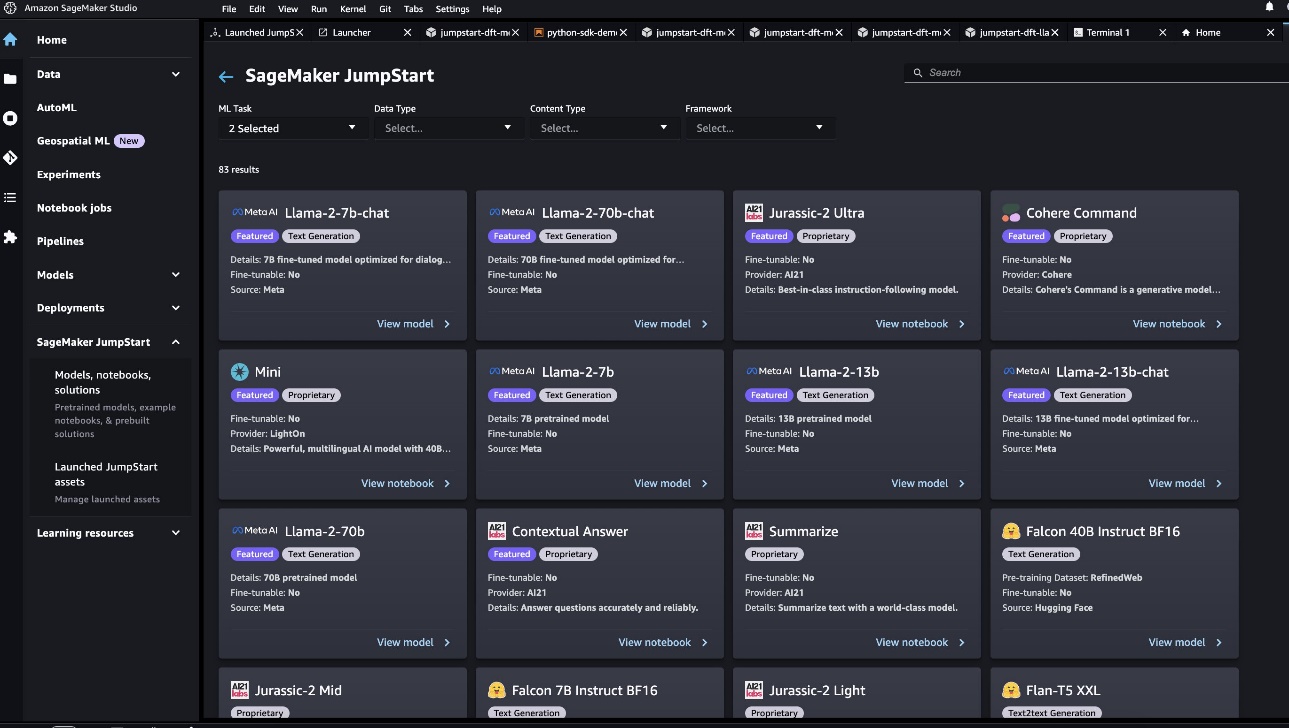

You can also find the other four model variants by choosing Explore all Text Generation Models or by searching for llama in the search box.

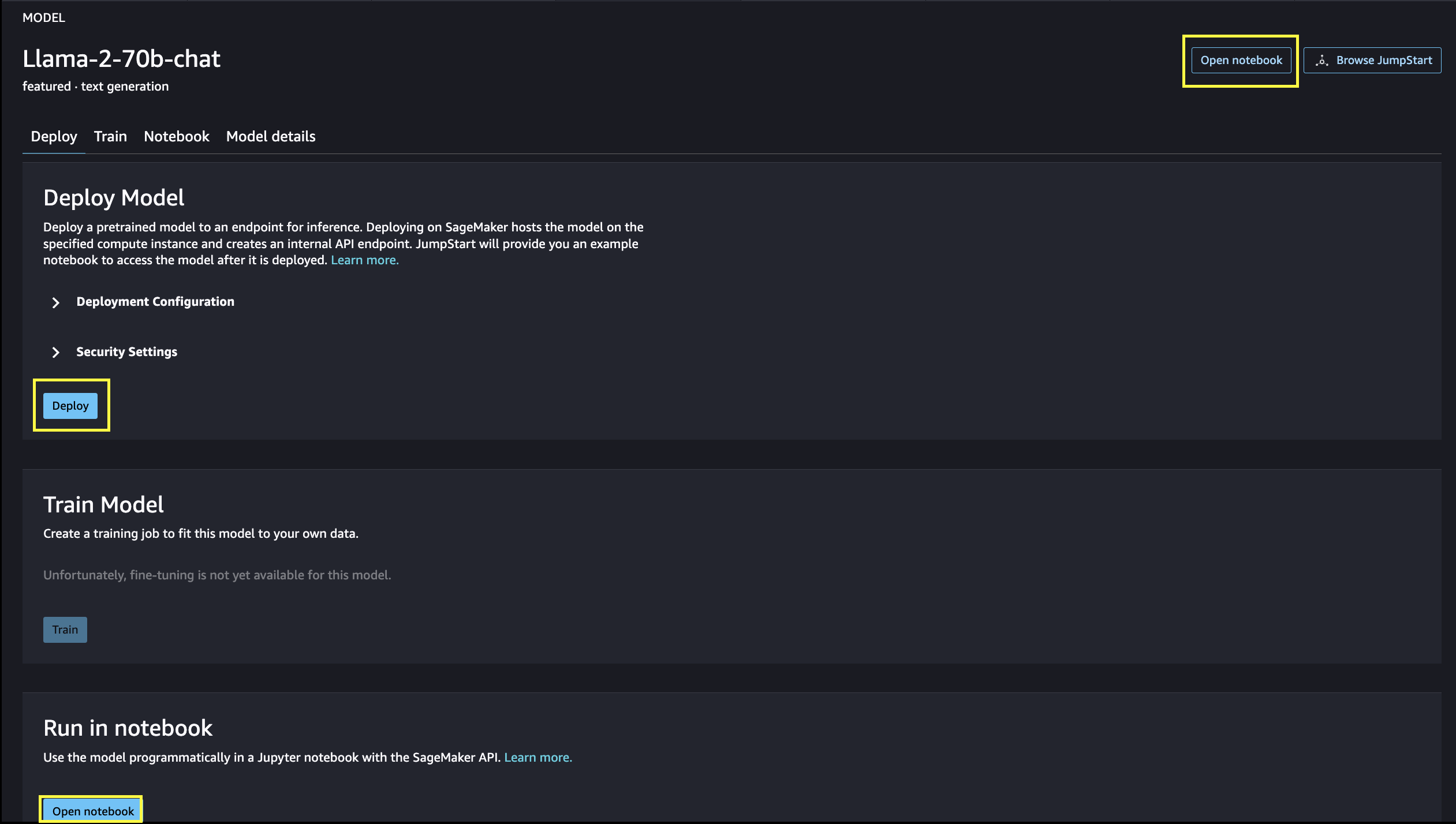

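You can choose the model card to view details about the model, such as the license, the data used to train it, and how to use it. You can also find two buttons, Deploy and Open Notebook, which help you use the model.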

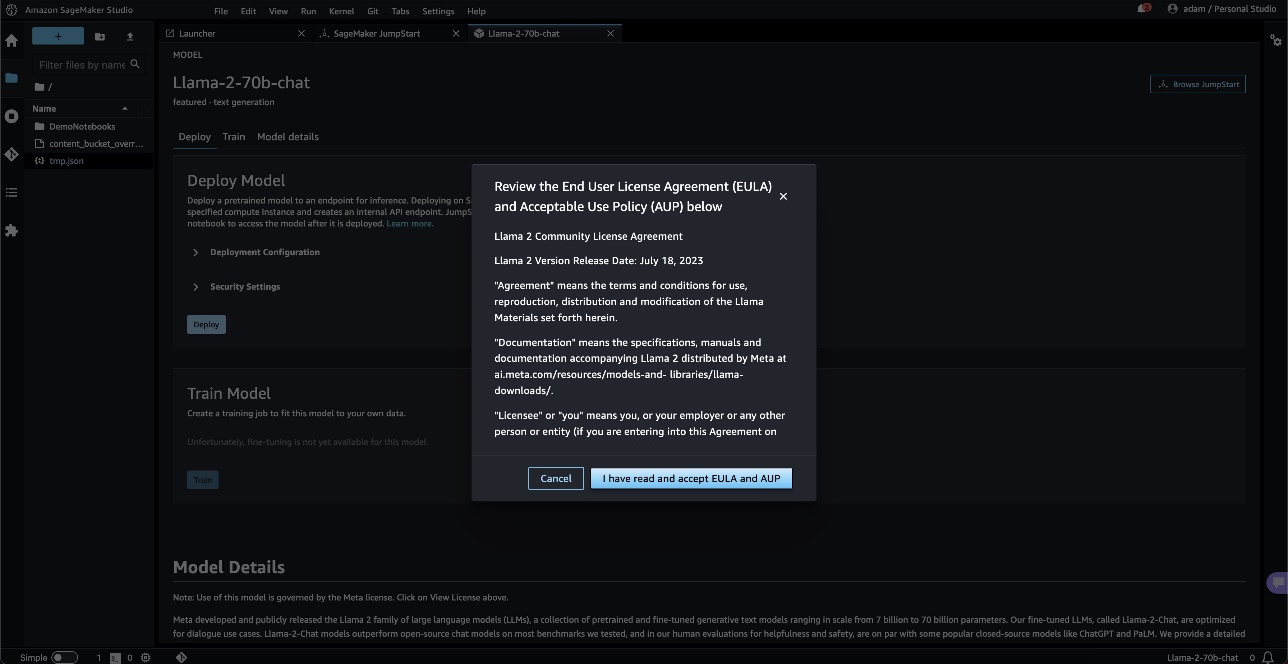

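When you choose either button, a pop-up shows the end-user license agreement and acceptable use policy for you to acknowledge.

After you acknowledge them, you proceed to the next step to use the model.

Deploy a model

When you choose Deploy and acknowledge the terms, model deployment starts. Alternatively, you can deploy through the example notebook that appears when you choose Open Notebook. The example notebook provides end-to-end guidance on how to deploy the model for inference and clean up resources.

To deploy using a notebook, we start by selecting an appropriate model, specified by the model_id. You can deploy any of the selected models on SageMaker with the following code: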
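A minimal sketch of this step, assuming the `JumpStartModel` class from the SageMaker Python SDK and the model IDs listed in the table of available models. The `deploy_llama2` helper and the `LLAMA2_MODEL_IDS` set are illustrative names, not part of the SDK. Calling the helper needs AWS credentials and creates a billable endpoint.

```python
# Model IDs as listed in the table of Llama 2 models in SageMaker JumpStart.
LLAMA2_MODEL_IDS = {
    "meta-textgeneration-llama-2-7b",
    "meta-textgeneration-llama-2-7b-f",
    "meta-textgeneration-llama-2-13b",
    "meta-textgeneration-llama-2-13b-f",
    "meta-textgeneration-llama-2-70b",
    "meta-textgeneration-llama-2-70b-f",
}


def deploy_llama2(model_id: str):
    """Deploy a Llama 2 model with default settings and return its predictor.

    Hypothetical helper: it rejects unknown IDs before touching AWS.
    """
    if model_id not in LLAMA2_MODEL_IDS:
        raise ValueError(f"Unknown Llama 2 model_id: {model_id!r}")
    # Imported lazily so this module loads even where the SDK is not installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # deploy() uses the default instance type and VPC configuration.
    return model.deploy()
```

For example, `predictor = deploy_llama2("meta-textgeneration-llama-2-70b-f")` would deploy the 70B chat model on its default instance type.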

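This deploys the model on SageMaker with default configurations, including the default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. After it's deployed, you can run inference against the deployed endpoint through the SageMaker predictor:

The fine-tuned chat models (Llama-2-7b-chat, Llama-2-13b-chat, Llama-2-70b-chat) accept a history of the chat between the user and the chat assistant, and generate the subsequent chat. The pre-trained models (Llama-2-7b, Llama-2-13b, Llama-2-70b) require a string prompt and perform text completion on the provided prompt. See the following code: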
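A minimal sketch of an invocation, assuming `predictor` is the object returned by deployment and that its `predict` method accepts a `custom_attributes` string, as the SageMaker Python SDK `Predictor` does. The `invoke_llama2` helper and its `accept_eula` flag are illustrative names.

```python
def invoke_llama2(predictor, payload: dict, accept_eula: bool = False):
    """Send a payload to a deployed Llama 2 endpoint.

    Hypothetical helper: the EULA acceptance travels as a custom attribute
    of the form "accept_eula=true" alongside the request body.
    """
    flag = "true" if accept_eula else "false"
    return predictor.predict(payload, custom_attributes=f"accept_eula={flag}")
```

Keeping the flag as an explicit argument means the caller has to opt in to the license terms on every call site instead of inheriting a hidden default.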

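Note that by default, accept_eula is set to false. You need to set accept_eula=true to invoke the endpoint successfully. By doing so, you accept the user license agreement and acceptable use policy mentioned earlier. You can also download the license agreement.

The custom_attributes used to pass the EULA are key/value pairs. The key and value are separated by an equals sign (=), and pairs are separated by a semicolon (;). If the user passes the same key more than once, the last value is kept and passed to the script handler (in this case, where it is used for conditional logic). For example, if accept_eula=false; accept_eula=true is passed to the server, then accept_eula=true is kept and passed to the script handler.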
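The last-value-wins rule can be illustrated with a short parser. This is a sketch of the rule as described, not the endpoint's actual handler; the function name `parse_custom_attributes` is hypothetical.

```python
def parse_custom_attributes(raw: str) -> dict:
    """Parse "k1=v1;k2=v2" into a dict; a repeated key keeps its last value."""
    attributes = {}
    for pair in raw.split(";"):
        pair = pair.strip()
        if not pair:
            continue  # tolerate a trailing or doubled semicolon
        key, separator, value = pair.partition("=")
        if not separator:
            raise ValueError(f"Expected key=value, got {pair!r}")
        # Later assignments overwrite earlier ones, so the last value wins.
        attributes[key.strip()] = value.strip()
    return attributes
```

With the example from the text, `parse_custom_attributes("accept_eula=false; accept_eula=true")` yields a single `accept_eula` entry whose value is `"true"`.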

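Inference parameters control the text generation process at the endpoint. The max new tokens parameter controls the size of the output generated by the model. Note that this is not the same as the number of words, because the vocabulary of the model is not the same as the English language vocabulary, and each token may not be an English word. Temperature controls the randomness of the output. A higher temperature results in more creative and more hallucinated outputs. All the inference parameters are optional.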
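To see why temperature changes randomness, consider the standard temperature-scaled softmax used when sampling from a language model. The sketch below uses made-up logits and is a general illustration, not the endpoint's internal code.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


# Illustrative logits for three candidate tokens.
example_logits = [2.0, 1.0, 0.5]
sharp = softmax_with_temperature(example_logits, 0.5)  # mass concentrates on the top token
flat = softmax_with_temperature(example_logits, 1.5)   # mass spreads across tokens
```

A low temperature concentrates probability on the most likely token, so sampling is close to deterministic. A high temperature flattens the distribution, so less likely tokens are picked more often.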

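The following table lists all the Llama models available in SageMaker JumpStart along with their model IDs, default instance types, and the maximum number of total tokens (the sum of the number of input tokens and the number of generated tokens) supported by each model.

| Model Name | Model ID | Max Total Tokens | Default Instance Type |
|---|---|---|---|
| Llama-2-7b | meta-textgeneration-llama-2-7b | 4096 | ml.g5.2xlarge |
| Llama-2-7b-chat | meta-textgeneration-llama-2-7b-f | 4096 | ml.g5.2xlarge |
| Llama-2-13b | meta-textgeneration-llama-2-13b | 4096 | ml.g5.12xlarge |
| Llama-2-13b-chat | meta-textgeneration-llama-2-13b-f | 4096 | ml.g5.12xlarge |
| Llama-2-70b | meta-textgeneration-llama-2-70b | 4096 | ml.g5.48xlarge |
| Llama-2-70b-chat | meta-textgeneration-llama-2-70b-f | 4096 | ml.g5.48xlarge |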

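Note that SageMaker endpoints have a timeout limit of 60 seconds. Thus, even though the model may be able to generate 4096 tokens, the request fails if text generation takes more than 60 seconds. For the 7B, 13B, and 70B models, we recommend setting max_new_tokens to no more than 1500, 1000, and 500 respectively, while keeping the total number of tokens below 4K.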
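These limits can be applied before each request. The sketch below assumes you already know the prompt's token count (for example, from a tokenizer). The helper name `safe_max_new_tokens` is hypothetical, and the constants come from the recommendations and table above.

```python
MAX_TOTAL_TOKENS = 4096  # input tokens plus generated tokens
RECOMMENDED_MAX_NEW_TOKENS = {"7b": 1500, "13b": 1000, "70b": 500}


def safe_max_new_tokens(model_size: str, prompt_tokens: int, requested: int) -> int:
    """Clamp a requested max_new_tokens to the recommended and total limits."""
    recommended_cap = RECOMMENDED_MAX_NEW_TOKENS[model_size]
    remaining = MAX_TOTAL_TOKENS - prompt_tokens
    if remaining <= 0:
        raise ValueError("The prompt already fills the total token budget")
    # The smallest of the three limits is the one that applies.
    return min(requested, recommended_cap, remaining)
```

For instance, a 3,000-token prompt sent to the 7B model leaves room for at most 1,096 new tokens, even though the recommended cap for that size is 1,500.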

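Inference and example prompts for Llama-2-70b

You can use Llama models for text completion on any piece of text. Through text generation, you can perform a variety of tasks, such as answering questions, language translation, sentiment analysis, and more. The input payload to the endpoint looks like the following code: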
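A minimal sketch of building such a payload, assuming the request body carries the prompt in an `inputs` field and the optional settings in a `parameters` object (both field names are assumptions here). The parameter names match the ones used for the example outputs in this post, and `build_text_payload` is an illustrative helper.

```python
def build_text_payload(prompt: str, max_new_tokens=None, top_p=None, temperature=None) -> dict:
    """Build a text-completion request; unset parameters are left out."""
    payload = {"inputs": prompt}
    candidates = {
        "max_new_tokens": max_new_tokens,
        "top_p": top_p,
        "temperature": temperature,
    }
    # All inference parameters are optional, so only include the ones provided.
    parameters = {name: value for name, value in candidates.items() if value is not None}
    if parameters:
        payload["parameters"] = parameters
    return payload
```

Calling `build_text_payload("I believe the meaning of life is", max_new_tokens=256, top_p=0.9, temperature=0.6)` produces a body with both the prompt and the three parameters.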

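The following are some sample prompts and the text generated by the model. All outputs are generated with the inference parameters {"max_new_tokens":256, "top_p":0.9, "temperature":0.6}.

In the next example, we show how to use Llama models with few-shot in-context learning, where we provide training samples to the model in the prompt. Note that we only run inference on the deployed model, and the model weights do not change during this process.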
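A few-shot prompt is plain text that places solved examples before the unsolved query. The sketch below shows one way to assemble it. The `source => target` layout, the helper name, and the sample pairs are illustrative assumptions, not a format the model requires.

```python
def build_few_shot_prompt(instruction: str, examples, query: str) -> str:
    """Join an instruction, solved examples, and an open query into one prompt."""
    lines = [instruction]
    lines += [f"{source} => {target}" for source, target in examples]
    # The final line is left open so the model completes the answer.
    lines.append(f"{query} =>")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("cheese", "fromage")],
    "peppermint",
)
```

The resulting string is sent as the prompt of a pre-trained (non-chat) model. The examples live only in the request, which is why the model weights stay unchanged.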

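Inference and example prompts for Llama-2-70b-chat

With Llama-2-Chat models, which are optimized for dialogue use cases, the input to the chat model endpoints is the previous history between the chat assistant and the user. You can ask questions that are contextual to the conversation that has happened so far. You can also provide the system configuration, such as a persona that defines the chat assistant's behavior. The input payload to the endpoint looks like the following code: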
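A minimal sketch of assembling a chat payload, assuming each turn is a dictionary with `role` and `content` fields, the dialog is wrapped in a list under `inputs`, and turns alternate between user and assistant with the user speaking last. These field names and the `build_chat_payload` helper are assumptions for illustration.

```python
def build_chat_payload(turns, system_prompt=None, parameters=None) -> dict:
    """Build a chat request from (role, content) turns and an optional persona."""
    if not turns or turns[-1][0] != "user":
        raise ValueError("The dialog must end with a user turn")
    dialog = []
    if system_prompt:
        # The system entry sets the assistant's persona for the whole dialog.
        dialog.append({"role": "system", "content": system_prompt})
    for index, (role, content) in enumerate(turns):
        expected = "user" if index % 2 == 0 else "assistant"
        if role != expected:
            raise ValueError(f"Turn {index} should be from {expected!r}, got {role!r}")
        dialog.append({"role": role, "content": content})
    payload = {"inputs": [dialog]}
    if parameters:
        payload["parameters"] = dict(parameters)
    return payload
```

Validating the turn order on the client side surfaces malformed histories before a request is sent to the endpoint.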

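The following are some sample prompts and the text generated by the model. All outputs are generated with the inference parameters {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}.

In the following example, the user has had a conversation with the assistant about tourist sites in Paris. Next, the user asks about the first option recommended by the chat assistant.

In the following examples, we set the system's configuration:

Clean up

After you're done running the notebook, make sure to delete all the resources you created in the process so that your billing stops: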
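A minimal cleanup sketch, assuming `predictor` is the object returned by deployment and exposes the `delete_model` and `delete_endpoint` methods of the SageMaker Python SDK `Predictor`. The `clean_up` wrapper is an illustrative name.

```python
def clean_up(predictor) -> None:
    """Delete the model and the endpoint behind a predictor to stop billing."""
    predictor.delete_model()
    predictor.delete_endpoint()
```

Running this once per deployed predictor removes both the model resource and the endpoint, which is what incurs the ongoing instance charges.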

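Conclusion

In this post, we showed you how to get started with Llama 2 models in SageMaker Studio. With this, you have access to six Llama 2 foundation models that contain billions of parameters. Because foundation models are pre-trained, they can also help lower training and infrastructure costs and enable customization for your use case. To get started with SageMaker JumpStart, visit the following resources:

About the authors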

June Won is a product manager with SageMaker JumpStart. He focuses on making foundation models easily discoverable and usable to help customers build generative AI applications. His experience at Amazon also includes mobile shopping applications and last-mile delivery.

Dr. Vivek Madan is an Applied Scientist with the Amazon SageMaker JumpStart team. He received his PhD from the University of Illinois at Urbana-Champaign and was a postdoctoral researcher at Georgia Tech. He is an active researcher in machine learning and algorithm design and has published papers at the EMNLP, ICLR, COLT, FOCS, and SODA conferences.

Dr. Kyle Ulrich is an Applied Scientist with the Amazon SageMaker JumpStart team. His research interests include scalable machine learning algorithms, computer vision, time series, Bayesian non-parametrics, and Gaussian processes. He holds a PhD from Duke University and has published papers in NeurIPS, Cell, and Neuron.

Dr. Ashish Khetan is a Senior Applied Scientist with Amazon SageMaker JumpStart and helps develop machine learning algorithms. He received his PhD from the University of Illinois at Urbana-Champaign. He is an active researcher in machine learning and statistical inference, and has published many papers at the NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.

Sundar Ranganathan is the Global Head of GenAI/Frameworks GTM Specialists at AWS. He focuses on developing GTM strategy for large language models, GenAI, and large-scale ML workloads across AWS services such as Amazon EC2, EKS, EFA, AWS Batch, and Amazon SageMaker. His experience includes leadership roles in product management and product development at NetApp, Micron Technology, Qualcomm, and Mentor Graphics.


Source: https://aws.amazon.com/blogs/machine-learning/llama-2-foundation-models-from-meta-are-now-available-in-amazon-sagemaker-jumpstart/
