This blog post is co-written with Hwalsuk Lee at Upstage.

Today, we are excited to announce that the Solar foundation model developed by Upstage is now available for customers using Amazon SageMaker JumpStart. Solar is a large language model (LLM) pre-trained entirely with Amazon SageMaker. Its compact size and strong track record make it well suited to purpose-training, so it is versatile across languages, domains, and tasks.

You can now use the Solar Mini Chat and Solar Mini Chat – Quant pretrained models within SageMaker JumpStart. SageMaker JumpStart is the machine learning (ML) hub of SageMaker that provides access to foundation models in addition to built-in algorithms to help you quickly get started with ML.

In this post, we walk through how to discover and deploy the Solar model via SageMaker JumpStart.

What’s the Solar model?

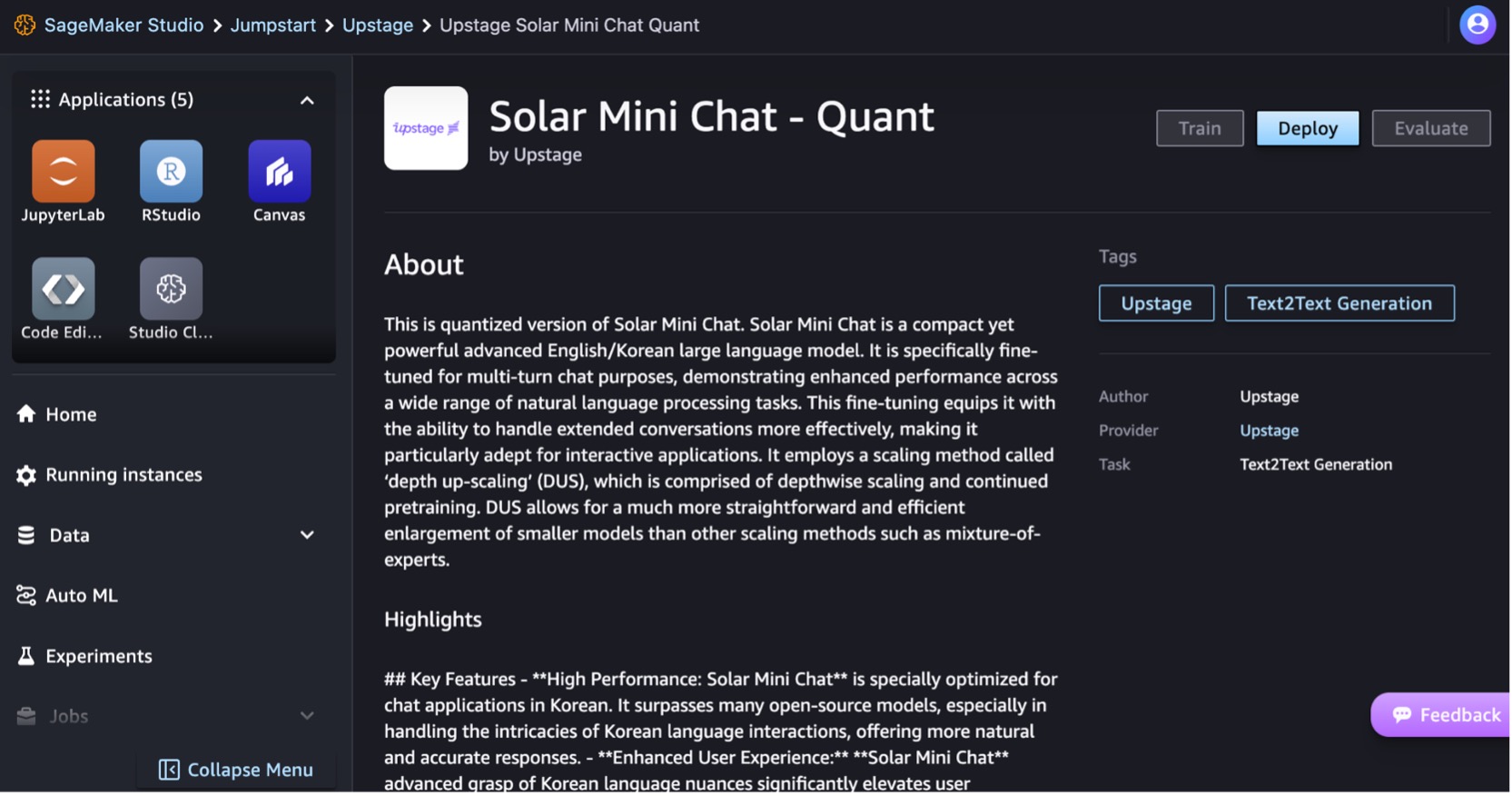

Solar is a compact and powerful model for English and Korean languages. It’s specifically fine-tuned for multi-turn chat purposes, demonstrating enhanced performance across a wide range of natural language processing tasks.

The Solar Mini Chat model is based on Solar 10.7B, with a 32-layer Llama 2 structure, and initialized with pre-trained weights from Mistral 7B compatible with the Llama 2 architecture. Fine-tuning for multi-turn chat equips it to handle extended conversations more effectively, making it particularly well suited to interactive applications. It employs a scaling method called depth up-scaling (DUS), which comprises depth-wise scaling and continued pretraining. DUS allows for a much more straightforward and efficient enlargement of smaller models than other scaling methods such as mixture of experts (MoE).
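To make the depth-wise scaling half of DUS concrete, here is a minimal sketch that treats a model as a list of layer indices. It assumes the recipe from Upstage's published description of Solar 10.7B: duplicate the 32-layer base, drop the last 8 layers from one copy and the first 8 from the other, and concatenate what remains. The value 8 and the function name `depth_up_scale` are assumptions for illustration and do not appear in this post. The continued pretraining step that follows the scaling is not shown.

```python
from typing import List


def depth_up_scale(base_layers: List[int], m: int) -> List[int]:
    """Depth-wise scaling on a list of layer IDs (illustrative only).

    Copy A keeps everything except its last m layers, copy B keeps
    everything except its first m layers, and the result is A followed by B.
    """
    if not 0 <= m <= len(base_layers):
        raise ValueError("m must be between 0 and the number of base layers")
    copy_a = base_layers[: len(base_layers) - m]  # drop the last m layers
    copy_b = base_layers[m:]                      # drop the first m layers
    return copy_a + copy_b


base = list(range(32))               # 32-layer base, as described in the post
scaled = depth_up_scale(base, m=8)   # m=8 is an assumption (see the note above)
print(len(scaled))                   # 48
```

Under these assumptions, the scaled stack has 48 layers, with the middle layers of the base model appearing twice.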

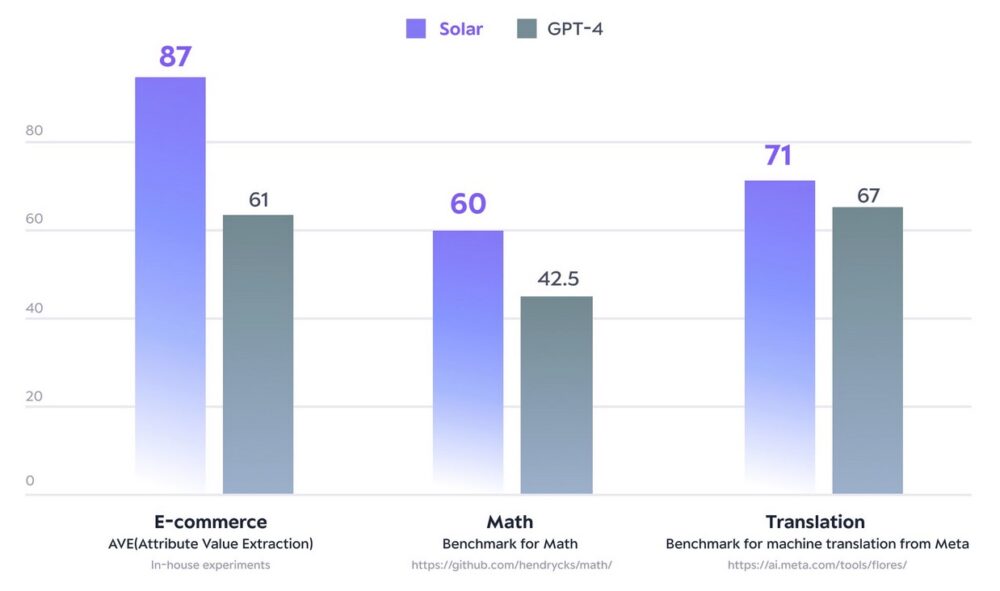

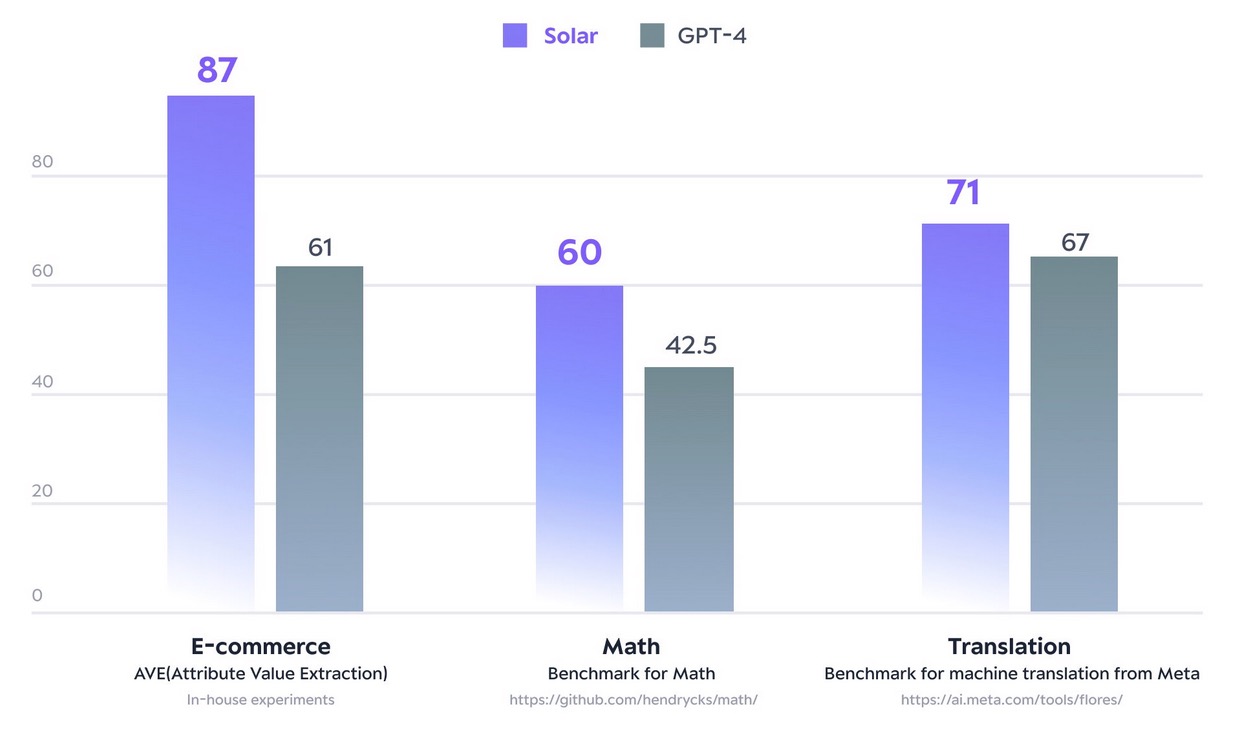

In December 2023, the Solar 10.7B model made waves by reaching the top of the Open LLM Leaderboard of Hugging Face. Using notably fewer parameters, Solar 10.7B delivers responses comparable to GPT-3.5, but is 2.5 times faster. Along with topping the Open LLM Leaderboard, Solar 10.7B outperforms GPT-4 with purpose-trained models on certain domains and tasks.

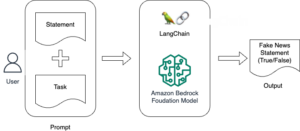

The preceding figure illustrates some of these metrics.

With SageMaker JumpStart, you can deploy pre-trained models based on Solar 10.7B: Solar Mini Chat and a quantized version of Solar Mini Chat, both optimized for chat applications in English and Korean. The Solar Mini Chat model provides an advanced grasp of Korean language nuances, which significantly elevates user interactions in chat environments. It provides precise responses to user inputs, ensuring clearer communication and more efficient problem resolution in English and Korean chat applications.

Get started with Solar models in SageMaker JumpStart

To get started with Solar models, you can use SageMaker JumpStart, a fully managed ML hub service to deploy pre-built ML models into a production-ready hosted environment. You can access Solar models through SageMaker JumpStart in Amazon SageMaker Studio, a web-based integrated development environment (IDE) where you can access purpose-built tools to perform all ML development steps, from preparing data to building, training, and deploying your ML models.

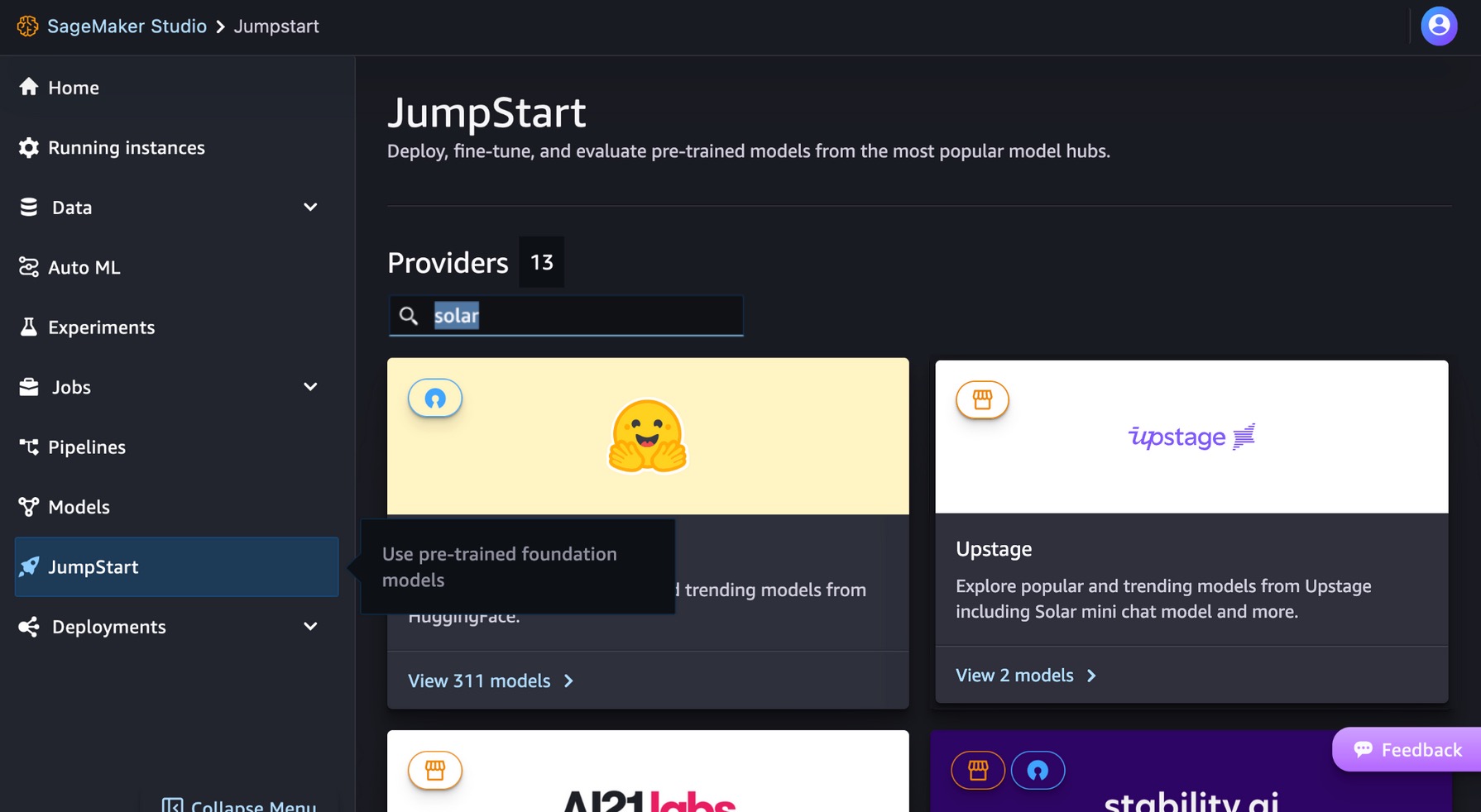

In the SageMaker Studio console, choose JumpStart in the navigation pane. You can enter “solar” in the search bar to find Upstage’s Solar models.

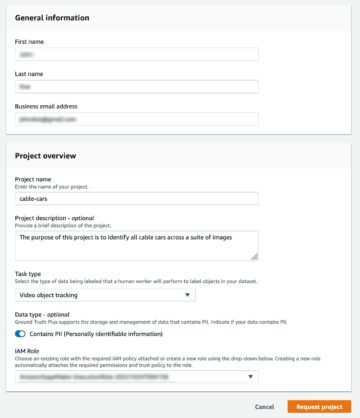

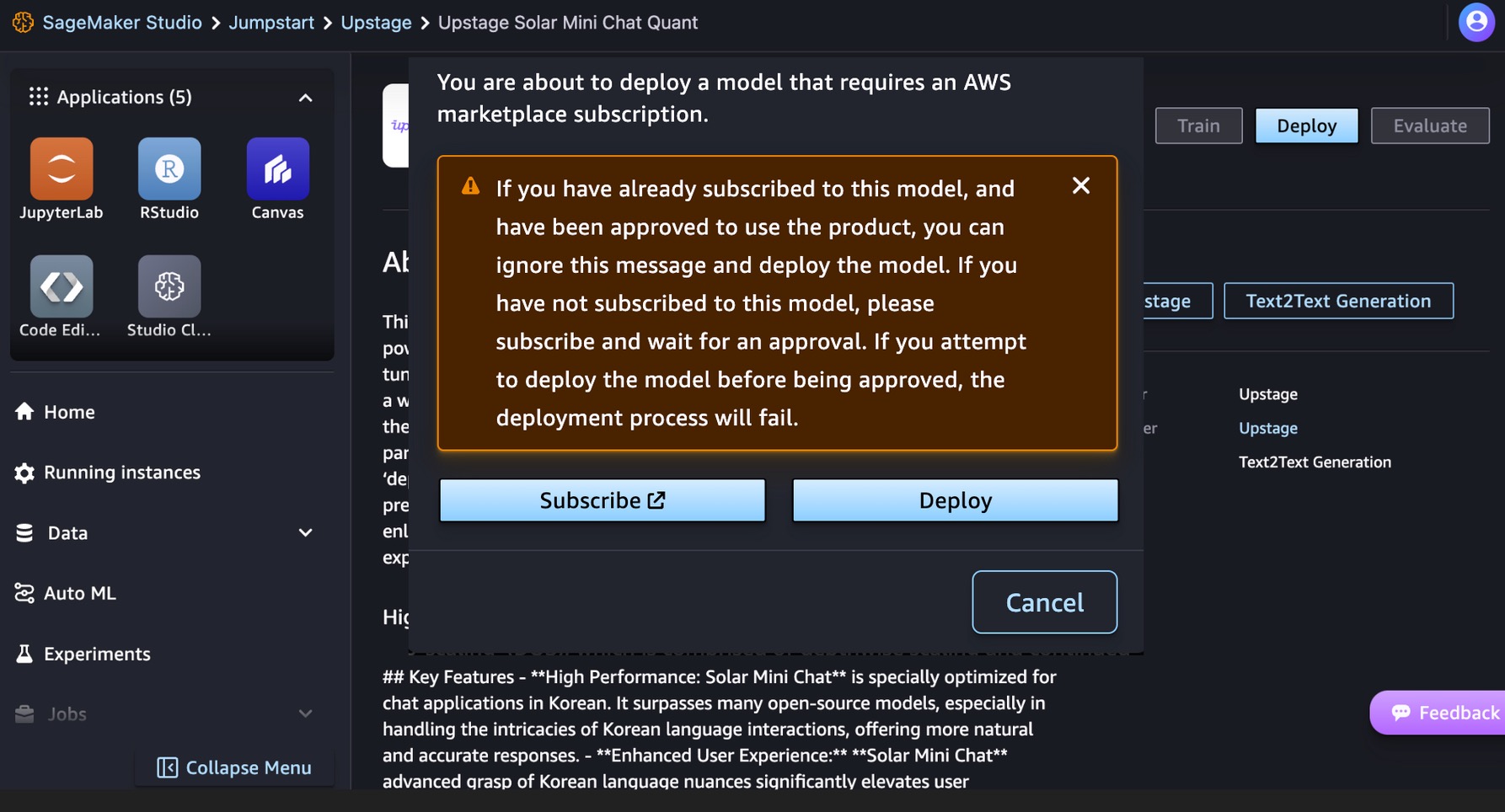

Let’s deploy the Solar Mini Chat – Quant model. Choose the model card to view details about the model such as the license, data used to train, and how to use the model. You will also find a Deploy option, which takes you to a landing page where you can test inference with an example payload.

This model requires an AWS Marketplace subscription. If you have already subscribed to this model, and have been approved to use the product, you can deploy the model directly.

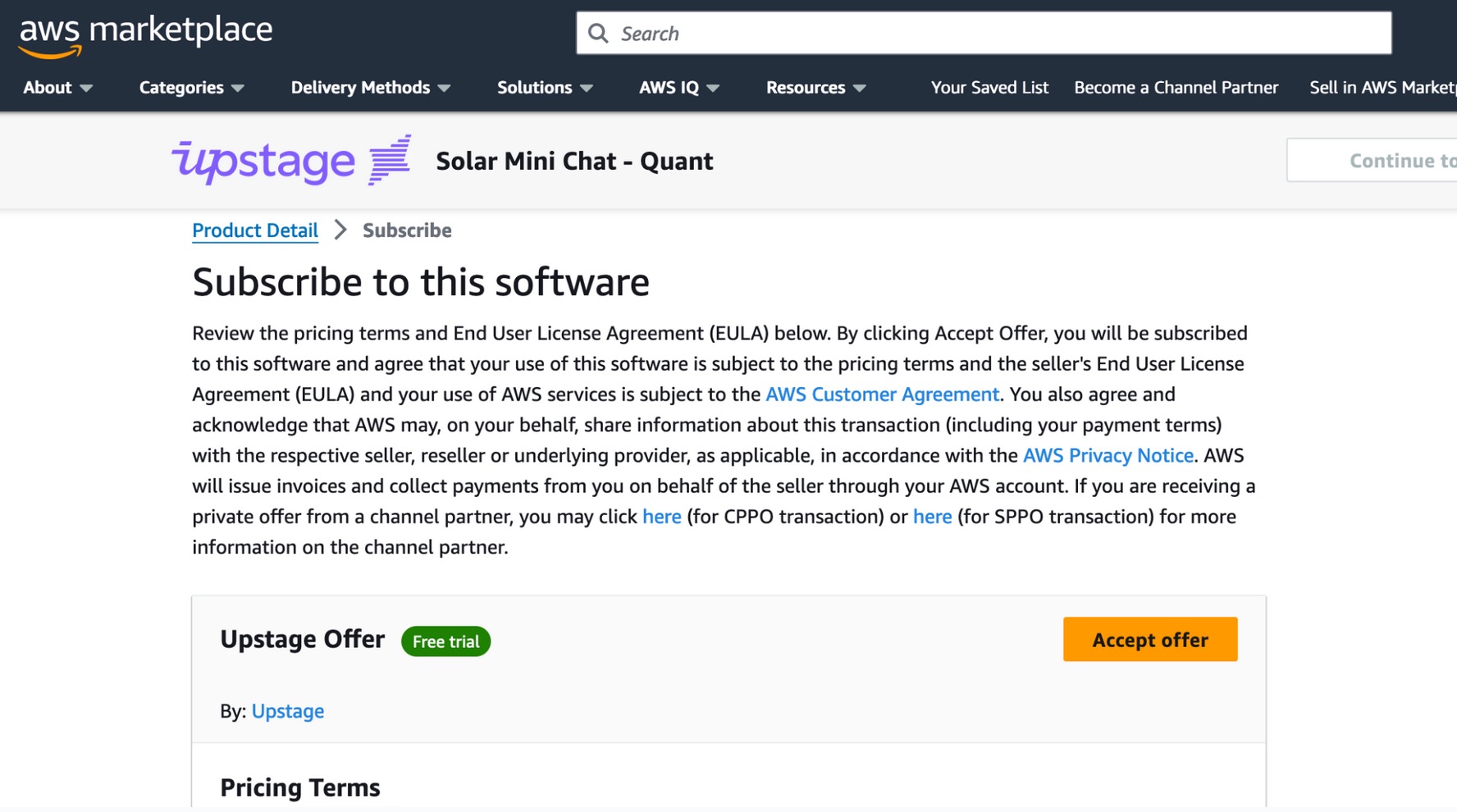

If you have not subscribed to this model, choose Subscribe, go to AWS Marketplace, review the pricing terms and End User License Agreement (EULA), and choose Accept offer.

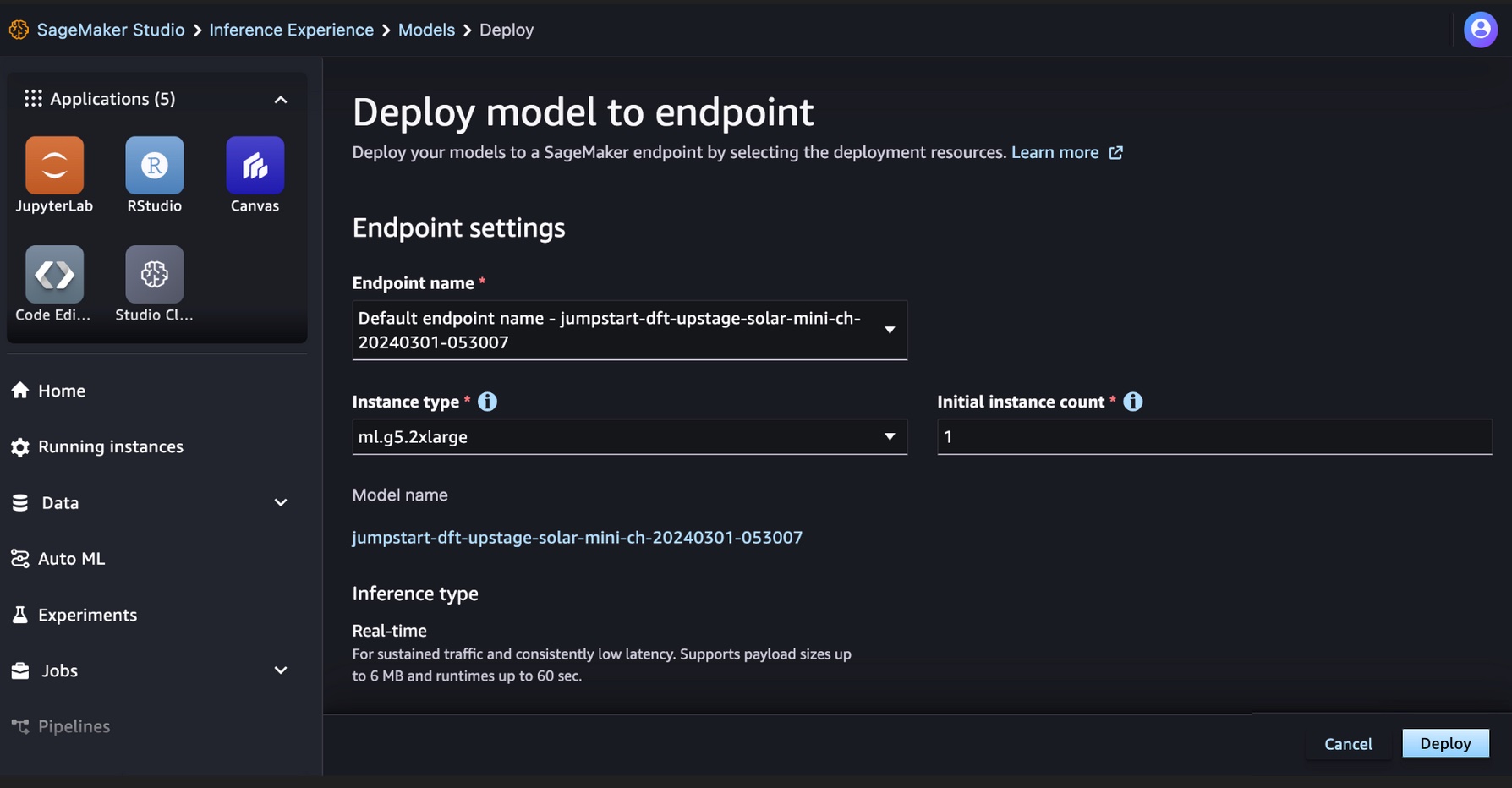

After you’re subscribed to the model, you can deploy your model to a SageMaker endpoint by selecting the deployment resources, such as instance type and initial instance count. Choose Deploy and wait for an endpoint to be created for model inference. You can select an ml.g5.2xlarge instance as a cheaper option for inference with the Solar model.

When your SageMaker endpoint is successfully created, you can test it through the various SageMaker application environments.

Run your code for Solar models in SageMaker Studio JupyterLab

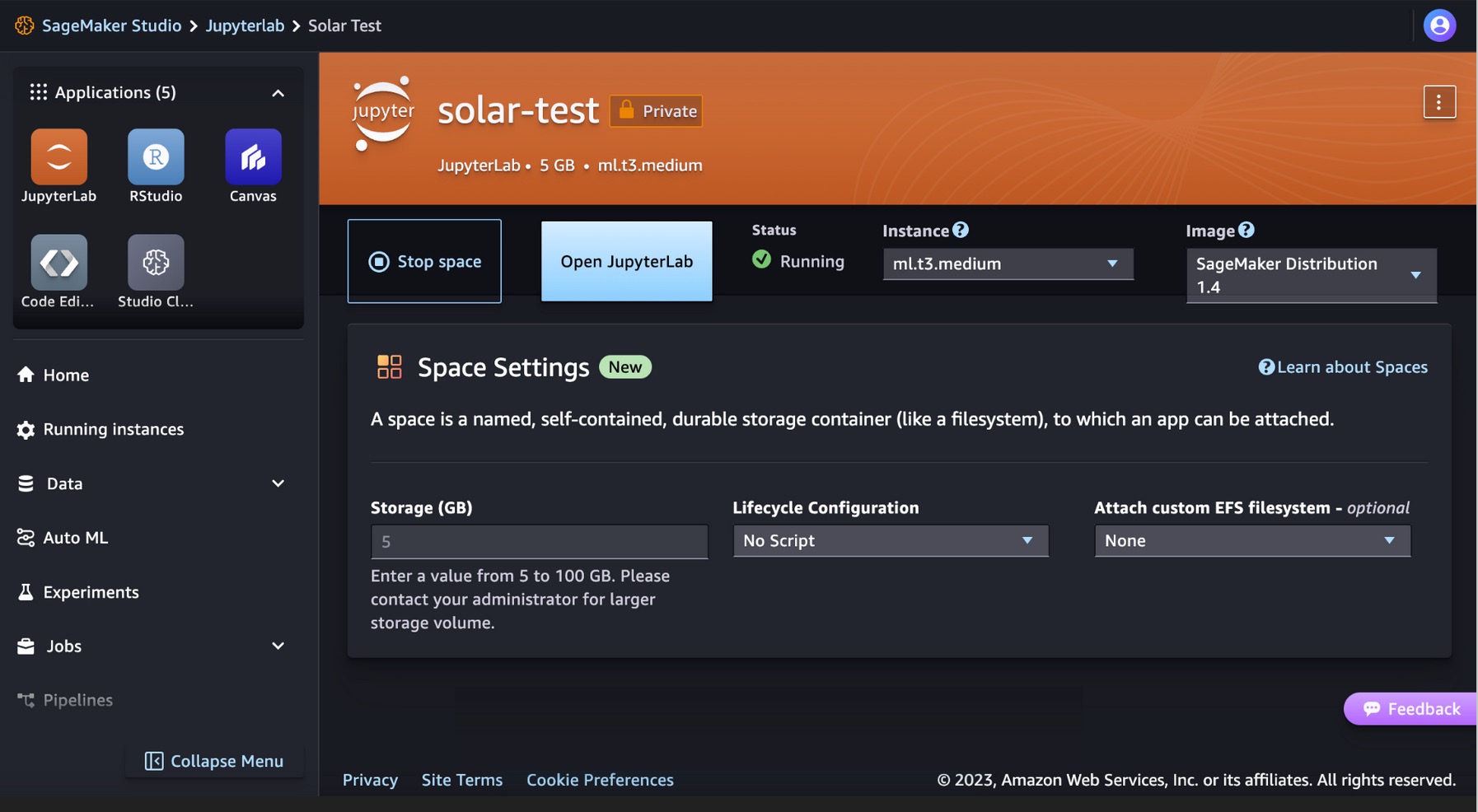

SageMaker Studio supports various application development environments, including JupyterLab, a set of capabilities that augment the fully managed notebook offering. It includes kernels that start in seconds, a preconfigured runtime with popular data science and ML frameworks, and high-performance private block storage. For more information, see SageMaker JupyterLab.

Create a JupyterLab space within SageMaker Studio that manages the storage and compute resources needed to run the JupyterLab application.

You can find the code showing the deployment of Solar models on SageMaker JumpStart and an example of how to use the deployed model in the GitHub repo. You can now deploy the model using SageMaker JumpStart. The example uses ml.g5.2xlarge as the default instance type for the Solar Mini Chat – Quant model inference endpoint.
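The deployment step might look like the following sketch, which uses the SageMaker Python SDK's `ModelPackage` class for an AWS Marketplace model. The model package ARN, the role ARN, the model name, and the helper names `build_deploy_args` and `deploy_solar` are placeholders assumed for illustration. Take the real model package ARN for your Region from your subscription or the GitHub repo.

```python
import time


def build_deploy_args(model_name: str, instance_type: str = "ml.g5.2xlarge",
                      instance_count: int = 1) -> dict:
    """Collect the keyword arguments passed to ModelPackage.deploy()."""
    # A timestamp suffix keeps endpoint names unique across runs.
    suffix = time.strftime("%Y-%m-%d-%H-%M-%S", time.gmtime())
    return {
        "initial_instance_count": instance_count,
        "instance_type": instance_type,
        "endpoint_name": f"{model_name}-{suffix}",
    }


def deploy_solar(model_package_arn: str, role_arn: str, deploy_args: dict):
    """Create the model from a Marketplace package and deploy an endpoint."""
    # Imported lazily so the helper above works without the SDK installed.
    import sagemaker
    from sagemaker import ModelPackage

    model = ModelPackage(
        role=role_arn,
        model_package_arn=model_package_arn,
        sagemaker_session=sagemaker.Session(),
    )
    model.deploy(**deploy_args)
    return model


args = build_deploy_args("solar-mini-chat-quant")  # hypothetical model name
print(args["instance_type"], args["endpoint_name"])
# Uncomment after filling in your own values:
# model = deploy_solar("<your-model-package-arn>", "<your-role-arn>", args)
```

Keeping the endpoint name in `args["endpoint_name"]` makes it easy to reuse the same value later for invocation and cleanup.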

Solar models support a request/response payload compatible with OpenAI’s Chat Completions endpoint. You can test single-turn or multi-turn chat examples with Python.

    import json

    import boto3
    import sagemaker

    # Create a SageMaker runtime client and set the endpoint name.
    # model_name must already be defined; use the same endpoint_name
    # value for deployment and for invocation.
    sagemaker_runtime = boto3.client("sagemaker-runtime")
    endpoint_name = sagemaker.utils.name_from_base(model_name)

    # Multi-turn chat prompt example
    payload = {
        "messages": [
            {
                "role": "system",
                "content": "You are a helpful assistant."
            },
            {
                "role": "user",
                "content": "Can you provide a Python script to merge two sorted lists?"
            },
            {
                "role": "assistant",
                "content": """Sure, here is a Python script to merge two sorted lists:

    ```python
    def merge_lists(list1, list2):
        return sorted(list1 + list2)
    ```
    """
            },
            {
                "role": "user",
                "content": "Can you provide an example of how to use this function?"
            }
        ]
    }

    # Get a response from the model
    response = sagemaker_runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    result = json.loads(response["Body"].read().decode())
    print(result)

You have successfully performed a real-time inference with the Solar Mini Chat model.
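Because the payload follows the OpenAI chat format, a small helper can pull the assistant's reply out of the result and append it to the conversation for the next turn. The sketch below assumes the response also follows the OpenAI Chat Completions shape (`choices[0].message.content`), which this post does not show explicitly. The function names and the sample response are made up for illustration.

```python
import json
from typing import Dict, List

Message = Dict[str, str]


def extract_reply(result: dict) -> str:
    """Return the assistant text from an OpenAI-style chat response."""
    return result["choices"][0]["message"]["content"]


def next_turn(messages: List[Message], reply: str, user_text: str) -> List[Message]:
    """Return a new message list with the reply and a follow-up question."""
    return messages + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": user_text},
    ]


def chat(runtime_client, endpoint_name: str, messages: List[Message]) -> str:
    """Invoke the endpoint with a messages payload and return the reply."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps({"messages": messages}),
    )
    return extract_reply(json.loads(response["Body"].read().decode()))


# Offline demonstration with a hand-written response (not real model output)
sample = {"choices": [{"message": {"role": "assistant", "content": "Hi!"}}]}
history = [{"role": "user", "content": "Hello"}]
history = next_turn(history, extract_reply(sample), "Show me an example.")
print([m["role"] for m in history])  # ['user', 'assistant', 'user']
```

With a live endpoint, `chat(boto3.client("sagemaker-runtime"), endpoint_name, history)` would send the accumulated conversation and return the next reply, provided the response shape matches the assumption above.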

Clean up

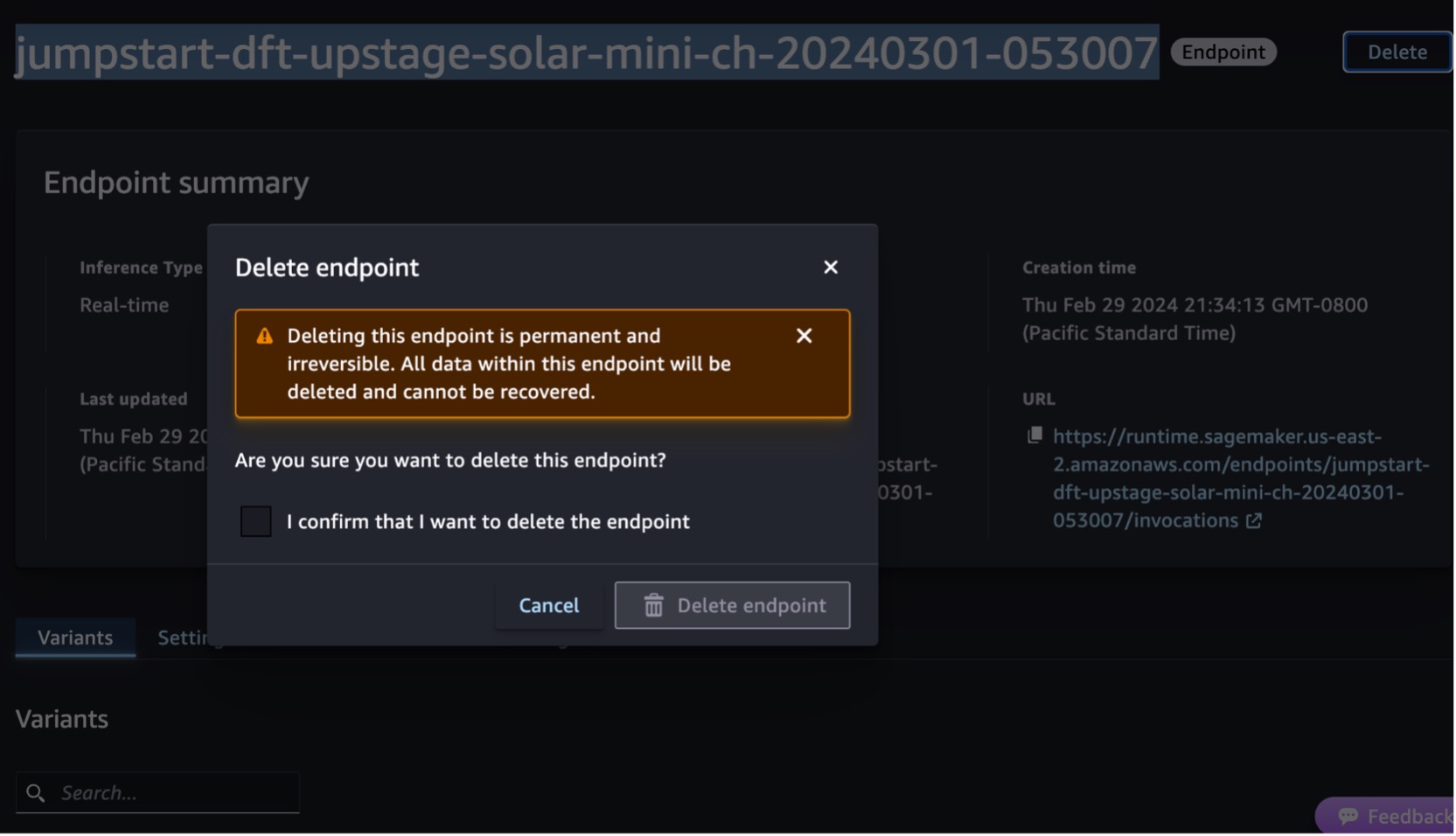

After you have tested the endpoint, delete the SageMaker inference endpoint and delete the model to avoid incurring charges.

You can also run the following code to delete the endpoint and model in the notebook of SageMaker Studio JupyterLab:

    # Delete the endpoint and its endpoint configuration
    model.sagemaker_session.delete_endpoint(endpoint_name)
    model.sagemaker_session.delete_endpoint_config(endpoint_name)

    # Delete the model
    model.delete_model()

For more information, see Delete Endpoints and Resources. Additionally, you can shut down the SageMaker Studio resources that are no longer required.
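If the notebook kernel has restarted and the `model` object is gone, the same cleanup can be done with the low-level boto3 SageMaker client. This is a sketch under that assumption. `cleanup_endpoint` is a hypothetical helper, and it looks up the endpoint configuration and model names instead of assuming they match the endpoint name.

```python
from typing import List


def cleanup_endpoint(sm_client, endpoint_name: str) -> List[str]:
    """Delete an endpoint, its endpoint config, and the models behind it.

    sm_client is a boto3 SageMaker client, e.g. boto3.client("sagemaker").
    Returns the names of the models that were deleted.
    """
    # Resolve the config and model names before deleting anything.
    endpoint = sm_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sm_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [v["ModelName"] for v in config["ProductionVariants"]]

    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for name in model_names:
        sm_client.delete_model(ModelName=name)
    return model_names
```

A call such as `cleanup_endpoint(boto3.client("sagemaker"), endpoint_name)` would then remove all three resources in one step.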

Conclusion

In this post, we showed you how to get started with Upstage’s Solar models in SageMaker Studio and deploy the model for inference. We also showed you how you can run your Python sample code on SageMaker Studio JupyterLab.

Because Solar models are already pre-trained, they can help lower training and infrastructure costs and enable customization for your generative AI applications.

Try it out on the SageMaker JumpStart console or SageMaker Studio console! You can also watch the following video, Try ‘Solar’ with Amazon SageMaker.

This guidance is for informational purposes only. You should still perform your own independent assessment, and take measures to ensure that you comply with your own specific quality control practices and standards, and the local rules, laws, regulations, licenses, and terms of use that apply to you, your content, and the third-party model referenced in this guidance. AWS has no control or authority over the third-party model referenced in this guidance, and does not make any representations or warranties that the third-party model is secure, virus-free, operational, or compatible with your production environment and standards. AWS does not make any representations, warranties, or guarantees that any information in this guidance will result in a particular outcome or result.

About the Authors

Channy Yun is a Principal Developer Advocate at AWS, and is passionate about helping developers build modern applications on the latest AWS services. He is a pragmatic developer and blogger at heart, and he loves community-driven learning and sharing of technology.

Hwalsuk Lee is Chief Technology Officer (CTO) at Upstage. He has worked for Samsung Techwin, NCSOFT, and Naver as an AI Researcher. He is pursuing his PhD in Computer and Electrical Engineering at the Korea Advanced Institute of Science and Technology (KAIST).

Brandon Lee is a Senior Solutions Architect at AWS, and primarily helps large educational technology customers in the Public Sector. He has over 20 years of experience leading application development at global companies and large corporations.

Source: https://aws.amazon.com/blogs/machine-learning/solar-models-from-upstage-are-now-available-in-amazon-sagemaker-jumpstart/
