Amazon SageMaker offers several ways to run distributed data processing jobs with Apache Spark, a popular distributed computing framework for big data processing.

You can run Spark applications interactively from Amazon SageMaker Studio by connecting SageMaker Studio notebooks and AWS Glue Interactive Sessions to run Spark jobs with a serverless cluster. With interactive sessions, you can choose Apache Spark or Ray to easily process large datasets, without worrying about cluster management.

Alternatively, if you need more control over the environment, you can use a pre-built SageMaker Spark container to run Spark applications as batch jobs on a fully managed distributed cluster with Amazon SageMaker Processing. This option lets you choose the instance type (compute optimized, memory optimized, and more), the number of nodes in the cluster, and the cluster configuration, giving you greater flexibility for data processing and model training.

Finally, you can run Spark applications by connecting Studio notebooks with Amazon EMR clusters, or by running your Spark cluster on Amazon Elastic Compute Cloud (Amazon EC2).

All these options allow you to generate and store Spark event logs, which you can then analyze through the web-based user interface commonly called the Spark UI. The Spark UI is served by a Spark History Server and lets you monitor the progress of Spark applications, track resource usage, and debug errors.

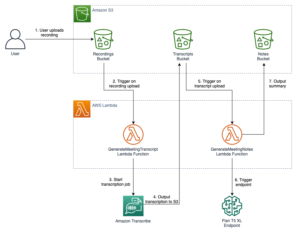

In this post, we share a solution for installing and running Spark History Server on SageMaker Studio and accessing the Spark UI directly from the SageMaker Studio IDE, for analyzing Spark logs produced by different AWS services (AWS Glue Interactive Sessions, SageMaker Processing jobs, and Amazon EMR) and stored in an Amazon Simple Storage Service (Amazon S3) bucket.

Solution overview

The solution integrates Spark History Server into the Jupyter Server app in SageMaker Studio. This allows users to access Spark logs directly from the SageMaker Studio IDE. The integrated Spark History Server supports the following:

- Accessing logs generated by SageMaker Processing Spark jobs

- Accessing logs generated by AWS Glue Spark applications

- Accessing logs generated by self-managed Spark clusters and Amazon EMR

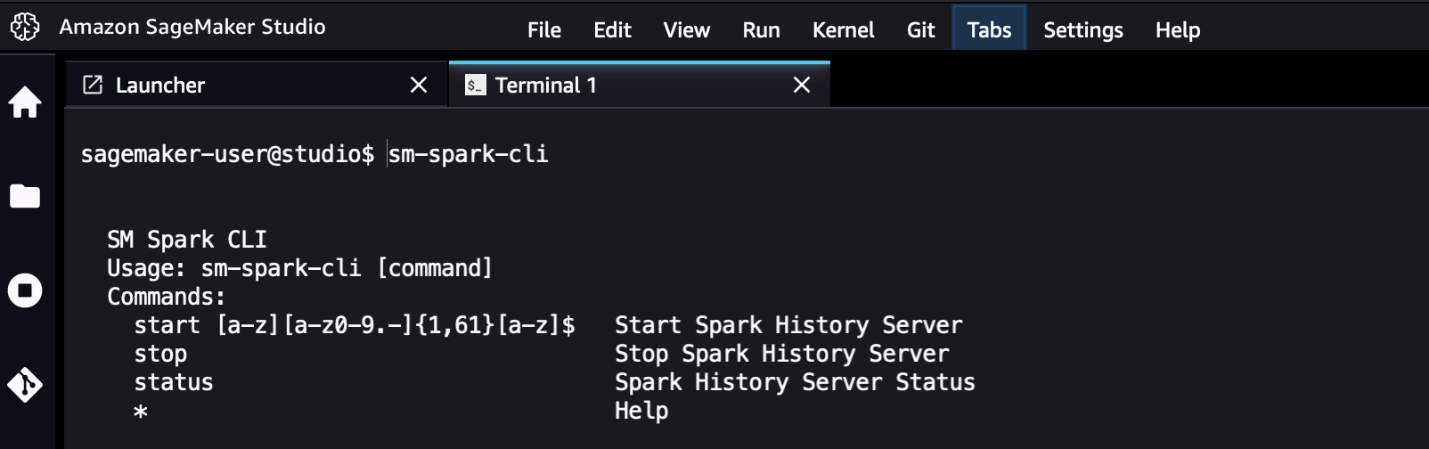

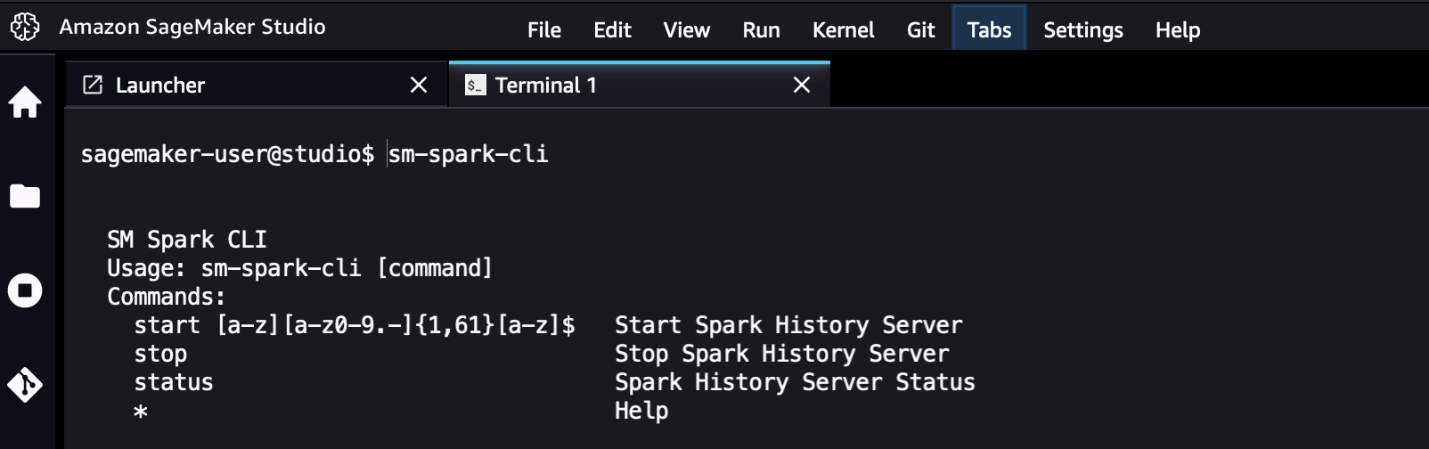

A utility command line interface (CLI) called sm-spark-cli is also provided for interacting with the Spark UI from the SageMaker Studio system terminal. The sm-spark-cli enables managing Spark History Server without leaving SageMaker Studio.

The solution consists of shell scripts that perform the following actions:

- Install Spark on the Jupyter Server for SageMaker Studio user profiles or for a SageMaker Studio shared space

- Install the sm-spark-cli for a user profile or shared space

Install the Spark UI manually in a SageMaker Studio domain

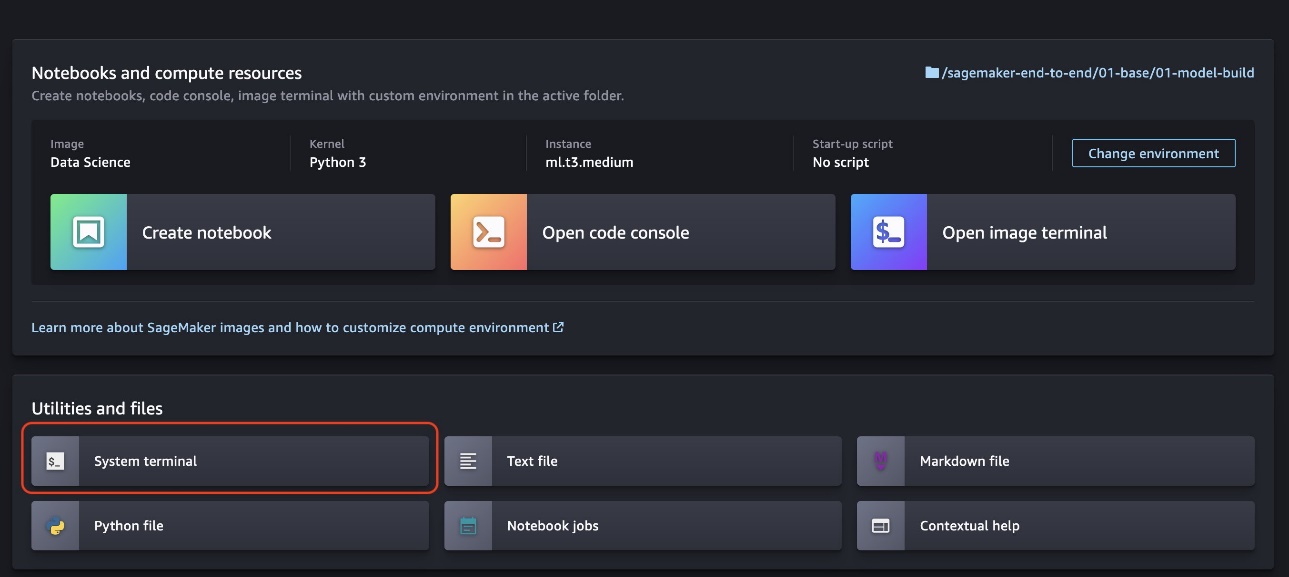

To host the Spark UI on SageMaker Studio, complete the following steps:

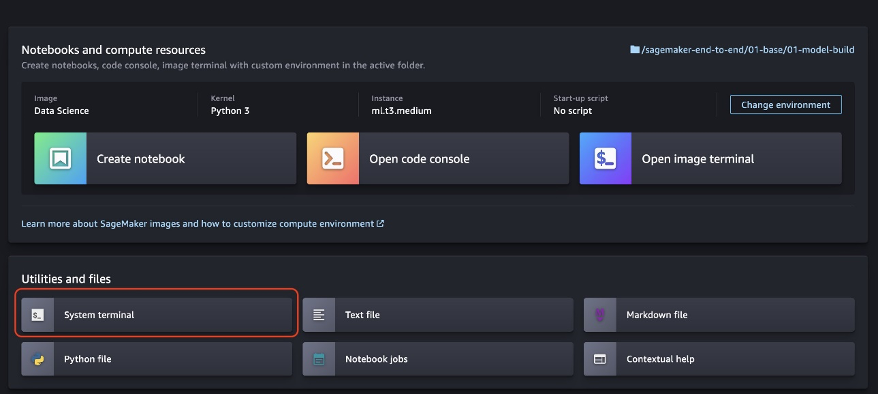

- Choose System terminal from the SageMaker Studio launcher.

- Run the following commands in the system terminal:

The commands will take a few seconds to complete.

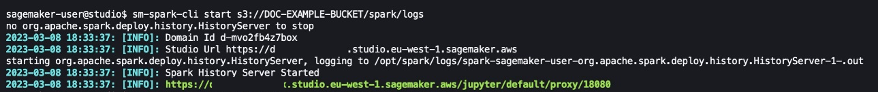

- When the installation is complete, you can start the Spark UI by using the provided sm-spark-cli and access it from a web browser by running the following code:

sm-spark-cli start s3://DOC-EXAMPLE-BUCKET/<SPARK_EVENT_LOGS_LOCATION>

The S3 location where the event logs produced by SageMaker Processing, AWS Glue, or Amazon EMR are stored can be configured when running Spark applications.

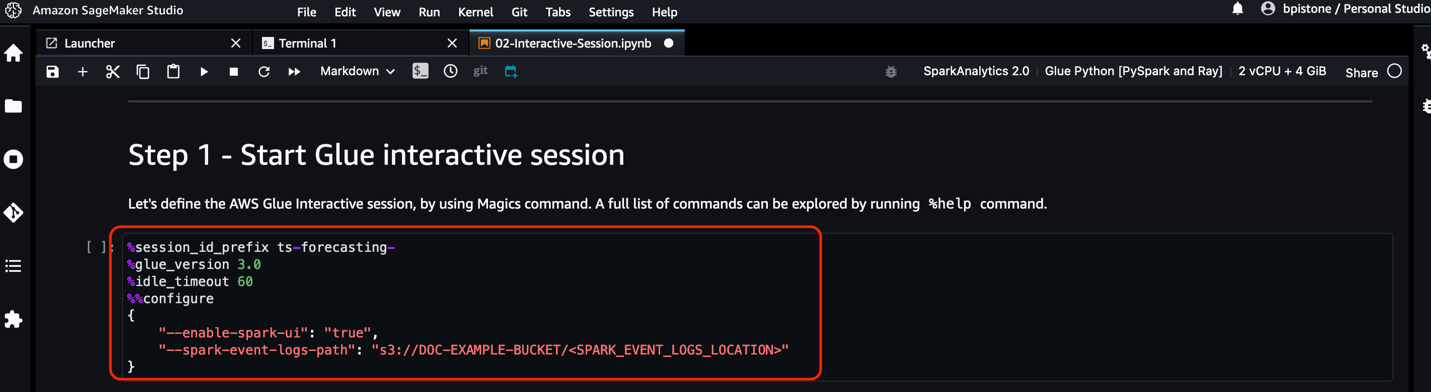

For SageMaker Studio notebooks and AWS Glue Interactive Sessions, you can set up the Spark event log location directly from the notebook by using the sparkmagic kernel.

The sparkmagic kernel contains a set of tools for interacting with remote Spark clusters through notebooks. It offers magic (%spark, %sql) commands to run Spark code, perform SQL queries, and configure Spark settings like executor memory and cores.
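As a rough illustration for AWS Glue Interactive Sessions, the following Python sketch renders the text of a `%%configure` notebook cell that enables Spark event logging. It assumes the AWS Glue job parameters `--enable-spark-ui` and `--spark-event-logs-path`; the helper name `glue_spark_ui_configure_cell` is hypothetical and not part of the solution.

```python
import json


def glue_spark_ui_configure_cell(event_logs_s3_uri: str) -> str:
    """Return the text of a %%configure cell that turns on Spark event logging."""
    if not event_logs_s3_uri.startswith("s3://"):
        raise ValueError("event_logs_s3_uri must start with s3://")
    # Assumption: these are the AWS Glue job parameters that control the Spark UI.
    params = {
        "--enable-spark-ui": "true",
        "--spark-event-logs-path": event_logs_s3_uri,
    }
    # The magic goes on the first line, followed by the JSON body.
    return "%%configure\n" + json.dumps(params, indent=2)


if __name__ == "__main__":
    # Paste the printed text into the first cell of the notebook,
    # before the interactive session is started.
    print(glue_spark_ui_configure_cell(
        "s3://DOC-EXAMPLE-BUCKET/<SPARK_EVENT_LOGS_LOCATION>"
    ))
```

The S3 URI passed here should be the same location that you later pass to `sm-spark-cli start`.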

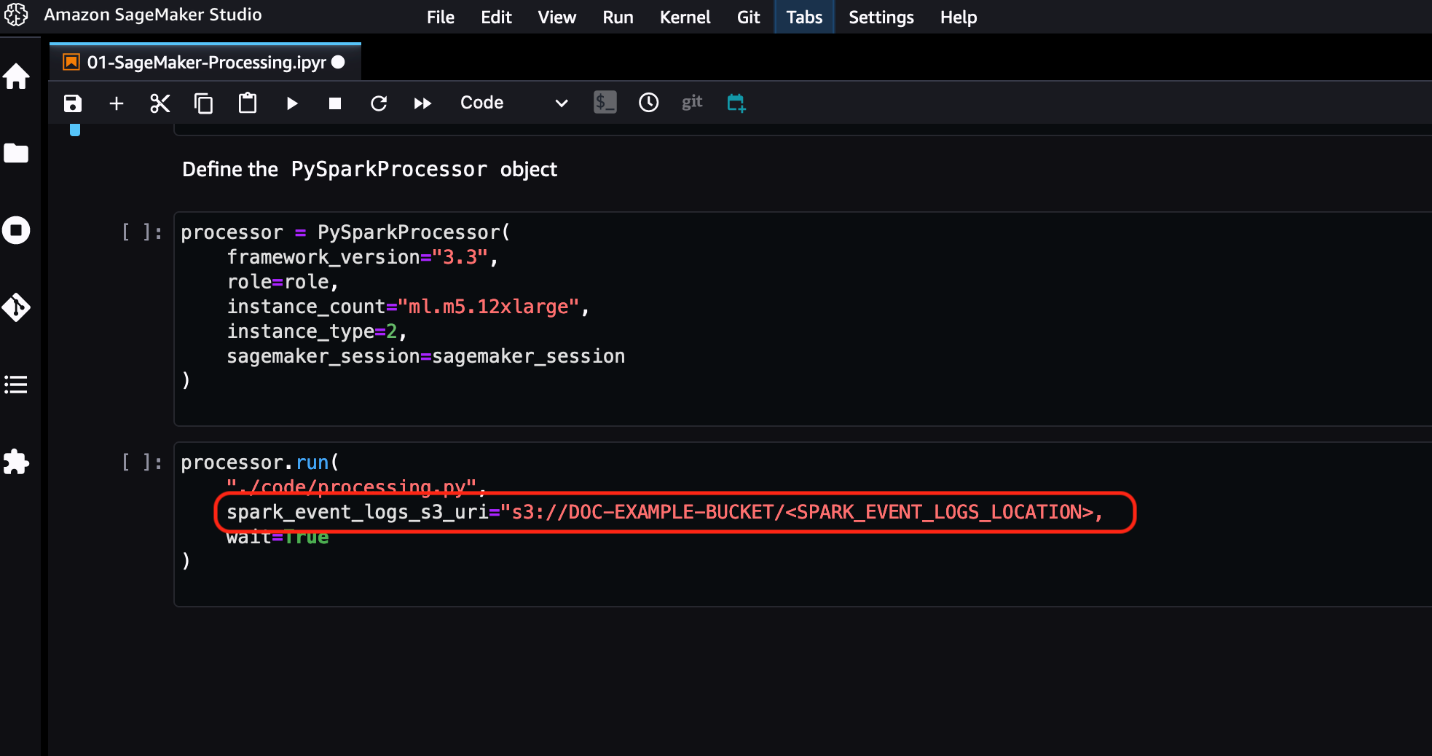

For the SageMaker Processing job, you can configure the Spark event log location directly from the SageMaker Python SDK.
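A minimal sketch of this, assuming the `PySparkProcessor` class from the SageMaker Python SDK and its `spark_event_logs_s3_uri` argument to `run`. The job name, instance settings, framework version, and the helper names `build_spark_run_kwargs` and `run_spark_job` are illustrative assumptions, not values taken from the solution.

```python
def build_spark_run_kwargs(submit_app: str, event_logs_s3_uri: str) -> dict:
    """Collect the arguments for PySparkProcessor.run, including the log location."""
    if not event_logs_s3_uri.startswith("s3://"):
        raise ValueError("event_logs_s3_uri must start with s3://")
    return {
        "submit_app": submit_app,
        # Spark event logs are written here so the Spark UI can read them later.
        "spark_event_logs_s3_uri": event_logs_s3_uri,
    }


def run_spark_job(role_arn: str, submit_app: str, event_logs_s3_uri: str) -> None:
    """Submit a PySpark script as a SageMaker Processing job (needs AWS credentials)."""
    # Imported lazily so the helper above works without the SDK installed.
    from sagemaker.spark.processing import PySparkProcessor

    processor = PySparkProcessor(
        base_job_name="spark-ui-example",  # hypothetical job name
        framework_version="3.1",           # assumption: pick a supported Spark version
        role=role_arn,
        instance_count=2,                  # assumption: size the cluster for your data
        instance_type="ml.m5.xlarge",
    )
    processor.run(**build_spark_run_kwargs(submit_app, event_logs_s3_uri))
```

For example, `run_spark_job(role_arn, "preprocess.py", "s3://DOC-EXAMPLE-BUCKET/<SPARK_EVENT_LOGS_LOCATION>")` would submit a hypothetical `preprocess.py` script and store its event logs in the given location.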

Refer to the AWS documentation for additional information:

You can choose the generated URL to access the Spark UI.

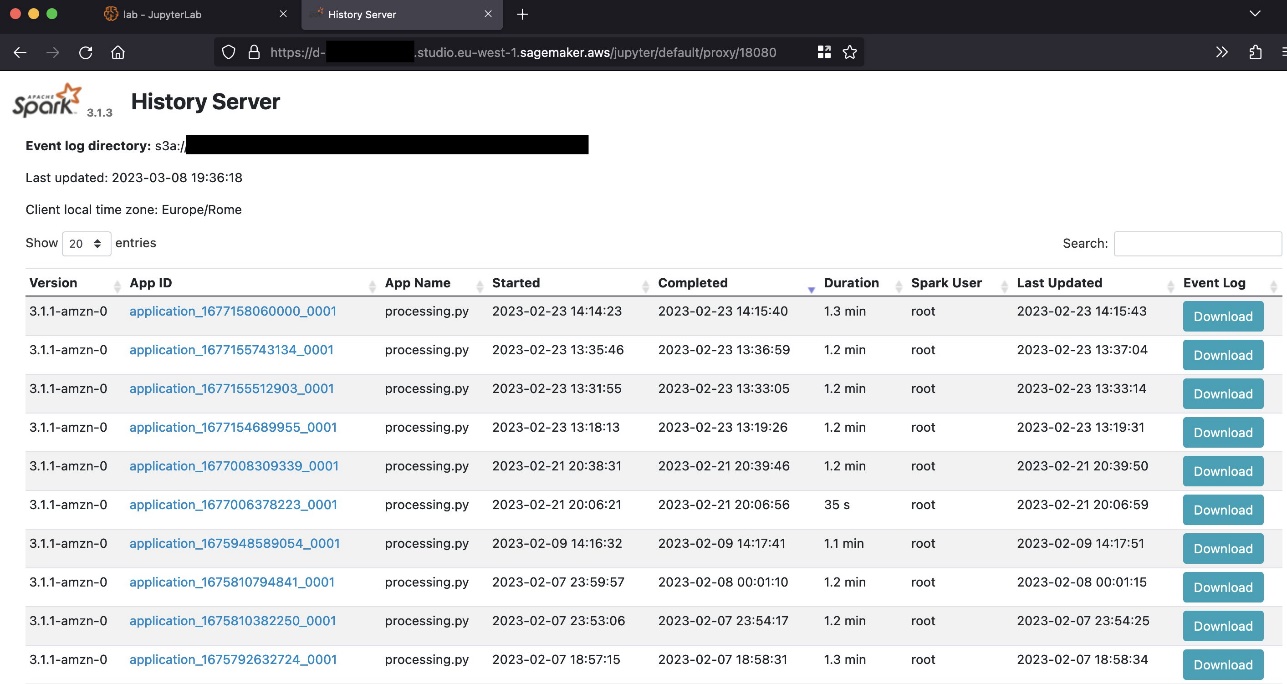

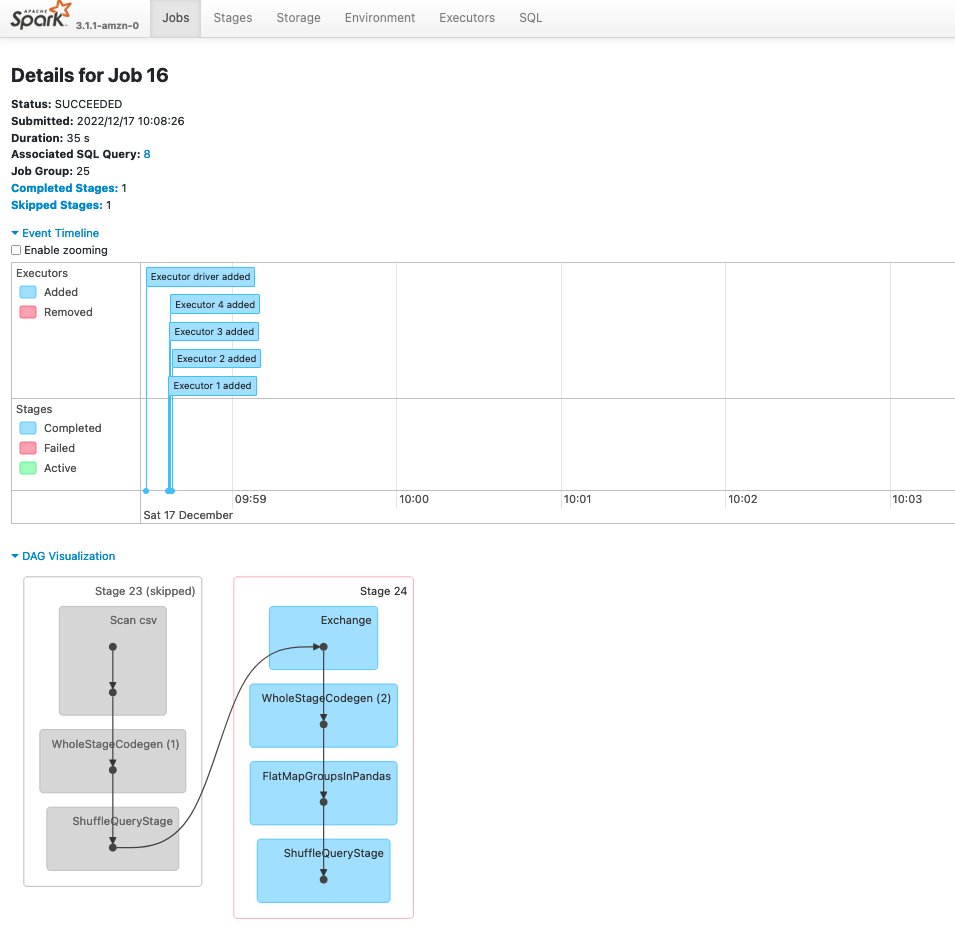

The following screenshot shows an example of the Spark UI.

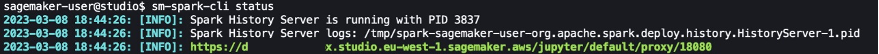

You can check the status of the Spark History Server by using the sm-spark-cli status command in the Studio System terminal.

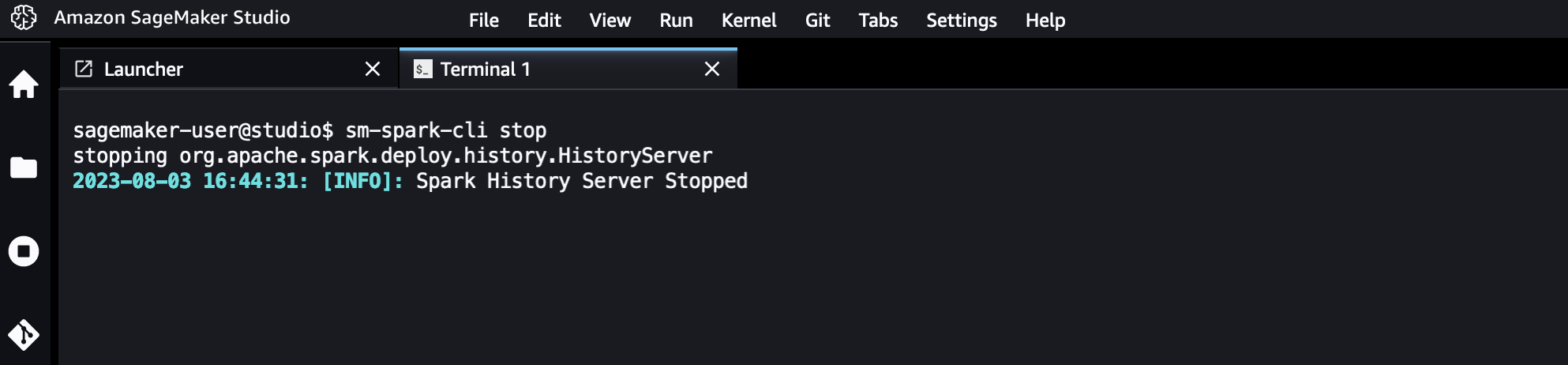

You can also stop the Spark History Server when needed.

Automate the Spark UI installation for users in a SageMaker Studio domain

As an IT admin, you can automate the installation for SageMaker Studio users by using a lifecycle configuration. This can be done for all user profiles under a SageMaker Studio domain or for specific ones. See Customize Amazon SageMaker Studio using Lifecycle Configurations for more details.

You can create a lifecycle configuration from the install-history-server.sh script and attach it to an existing SageMaker Studio domain. The installation is run for all the user profiles in the domain.

From a terminal configured with the AWS Command Line Interface (AWS CLI) and appropriate permissions, run the following commands:

After Jupyter Server restarts, the Spark UI and the sm-spark-cli will be available in your SageMaker Studio environment.
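If you prefer Python to the AWS CLI, the same flow can be approximated with boto3. The following sketch assumes the SageMaker API operations `create_studio_lifecycle_config` and `update_domain`; the function names and the way the settings are assembled are illustrative and are not taken from the solution's scripts.

```python
import base64


def encode_lifecycle_script(script_text: str) -> str:
    """Base64-encode a shell script for use as lifecycle configuration content."""
    return base64.b64encode(script_text.encode("utf-8")).decode("utf-8")


def jupyter_server_settings(lifecycle_config_arn: str) -> dict:
    """Build default user settings that run the script when Jupyter Server starts."""
    return {
        "JupyterServerAppSettings": {
            "DefaultResourceSpec": {
                "InstanceType": "system",
                "LifecycleConfigArn": lifecycle_config_arn,
            },
            "LifecycleConfigArns": [lifecycle_config_arn],
        }
    }


def attach_to_domain(domain_id: str, script_path: str, config_name: str) -> str:
    """Create the lifecycle configuration and set it as the domain default."""
    import boto3  # imported lazily; AWS credentials are needed at call time

    with open(script_path, encoding="utf-8") as f:
        content = encode_lifecycle_script(f.read())

    sm = boto3.client("sagemaker")
    arn = sm.create_studio_lifecycle_config(
        StudioLifecycleConfigName=config_name,
        StudioLifecycleConfigContent=content,
        StudioLifecycleConfigAppType="JupyterServer",
    )["StudioLifecycleConfigArn"]
    # Applies to all user profiles that inherit the domain defaults.
    sm.update_domain(
        DomainId=domain_id,
        DefaultUserSettings=jupyter_server_settings(arn),
    )
    return arn
```

Calling `attach_to_domain` with your domain ID, the path to install-history-server.sh, and a configuration name of your choice returns the ARN of the new lifecycle configuration.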

Clean up

In this section, we show you how to clean up the Spark UI in a SageMaker Studio domain, either manually or automatically.

Manually uninstall the Spark UI

To manually uninstall the Spark UI in SageMaker Studio, complete the following steps:

- Choose System terminal in the SageMaker Studio launcher.

- Run the following commands in the system terminal:

Uninstall the Spark UI automatically for all SageMaker Studio user profiles

To automatically uninstall the Spark UI in SageMaker Studio for all user profiles, complete the following steps:

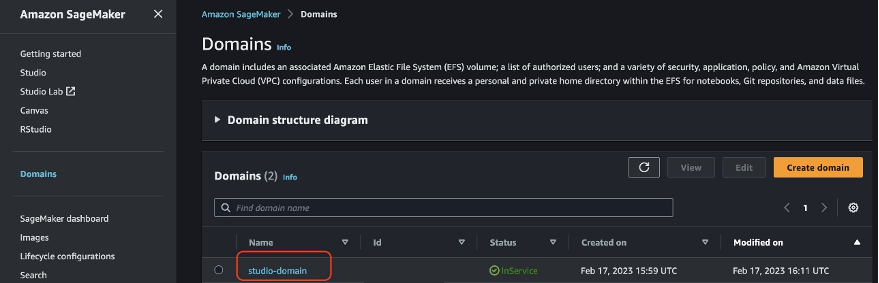

- On the SageMaker console, choose Domains in the navigation pane, then choose the SageMaker Studio domain.

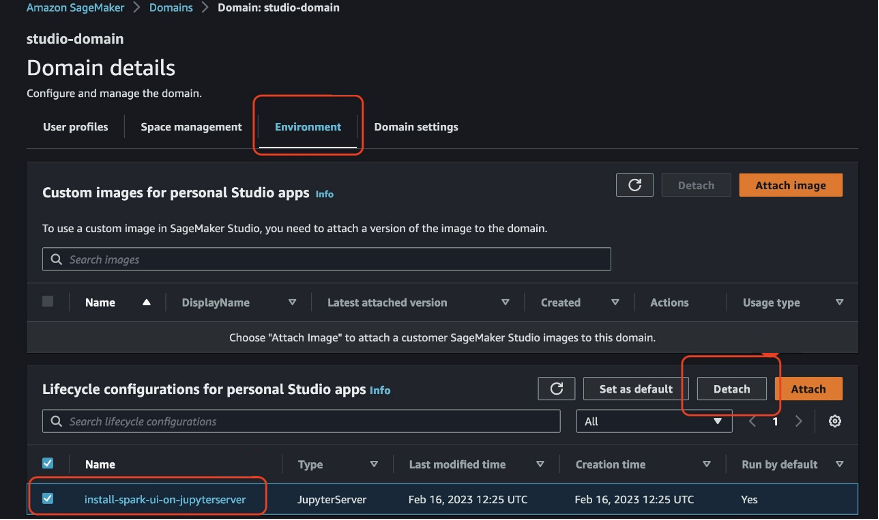

- On the domain details page, navigate to the Environment tab.

- Select the lifecycle configuration for the Spark UI on SageMaker Studio.

- Choose Detach.

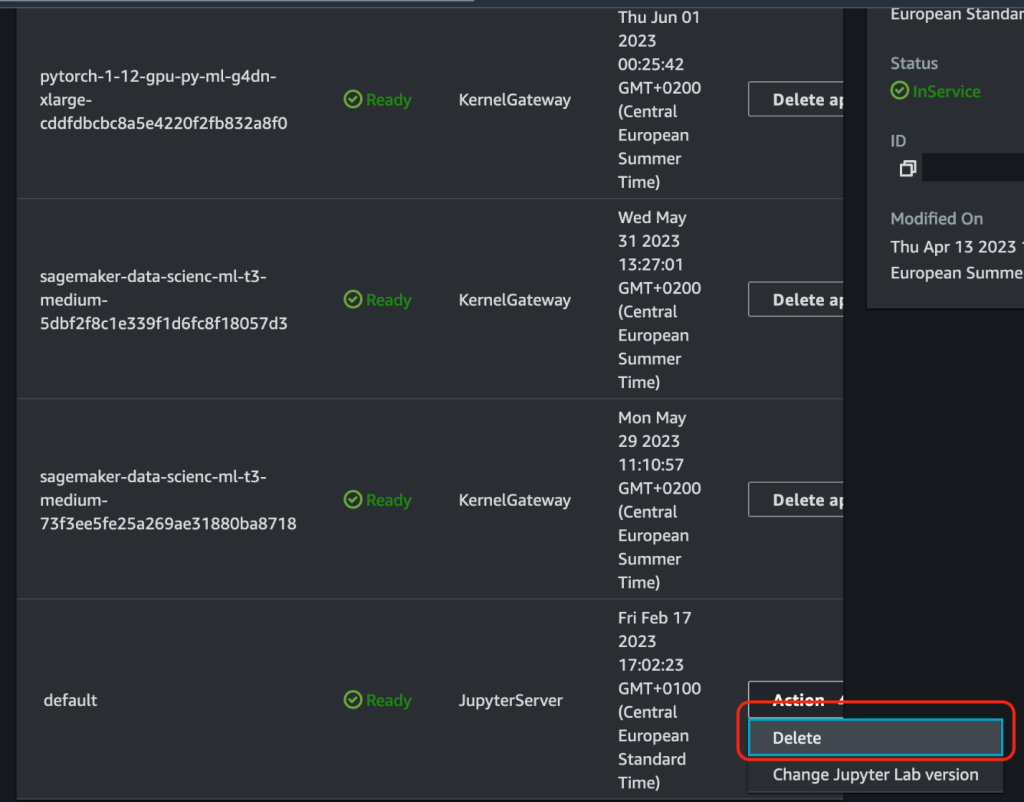

- Delete and restart the Jupyter Server apps for the SageMaker Studio user profiles.
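The last step can also be scripted. The following sketch covers only the deletion and assumes the boto3 SageMaker operations `list_apps` and `delete_app`; the helper names are hypothetical, and the sketch reads just the first page of results.

```python
def jupyter_server_apps_to_delete(list_apps_response: dict) -> list:
    """Pick running user-profile JupyterServer apps out of a list_apps response."""
    targets = []
    for app in list_apps_response.get("Apps", []):
        is_jupyter_server = app.get("AppType") == "JupyterServer"
        is_running = app.get("Status") == "InService"
        # Shared-space apps carry SpaceName instead of UserProfileName; skip them here.
        if is_jupyter_server and is_running and "UserProfileName" in app:
            targets.append({
                "DomainId": app["DomainId"],
                "UserProfileName": app["UserProfileName"],
                "AppType": "JupyterServer",
                "AppName": app["AppName"],
            })
    return targets


def delete_jupyter_server_apps(domain_id: str) -> None:
    """Delete the running Jupyter Server apps of a domain (needs AWS credentials)."""
    import boto3  # imported lazily so the helper above has no dependencies

    sm = boto3.client("sagemaker")
    response = sm.list_apps(DomainIdEquals=domain_id)  # first page only
    for kwargs in jupyter_server_apps_to_delete(response):
        sm.delete_app(**kwargs)
```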

Conclusion

In this post, we shared a solution you can use to quickly install the Spark UI on SageMaker Studio. With the Spark UI hosted on SageMaker, machine learning (ML) and data engineering teams can use scalable cloud compute to access and analyze Spark logs from anywhere and speed up their project delivery. IT admins can standardize and expedite the provisioning of the solution in the cloud and avoid proliferation of custom development environments for ML projects.

All the code shown as part of this post is available in the GitHub repository.

About the Authors

Giuseppe Angelo Porcelli is a Principal Machine Learning Specialist Solutions Architect for Amazon Web Services. With several years of software engineering experience and an ML background, he works with customers of any size to understand their business and technical needs and design AI and ML solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. He has worked on projects in different domains, including MLOps, computer vision, and NLP, involving a broad set of AWS services. In his free time, Giuseppe enjoys playing football.

Bruno Pistone is an AI/ML Specialist Solutions Architect for AWS based in Milan. He works with customers of any size, helping them understand their technical needs and design AI and ML solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. His field of expertise includes end-to-end machine learning, machine learning industrialization, and generative AI. He enjoys spending time with his friends, exploring new places, and traveling to new destinations.

- Source: https://aws.amazon.com/blogs/machine-learning/host-the-spark-ui-on-amazon-sagemaker-studio/
