IBM and NASA have put together and released Prithvi: an open source foundation AI model that may help scientists and other folks analyze satellite imagery.

The vision transformer model, released under an Apache 2 license, is relatively small at 100 million parameters, and was trained on a year’s worth of images collected by the US space boffins’ Harmonized Landsat Sentinel-2 (HLS) program. As well as the main model, three variants of Prithvi are available, fine-tuned respectively for identifying flooding, wildfire burn scars, and crops and other land use.

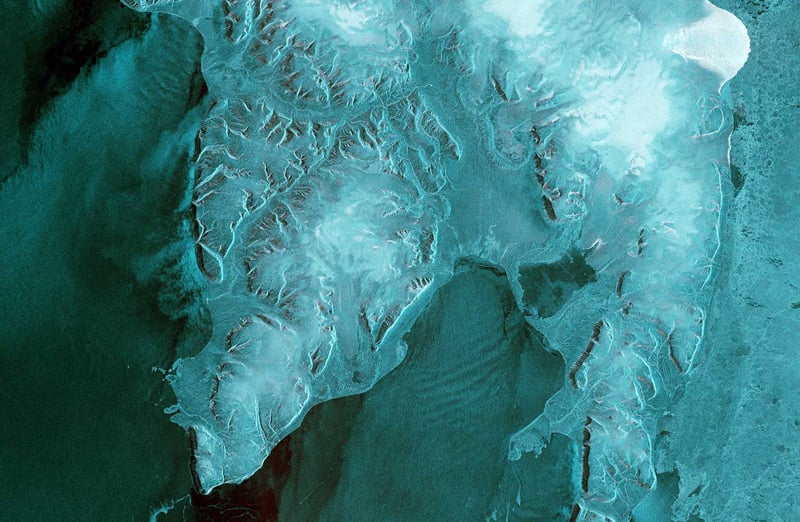

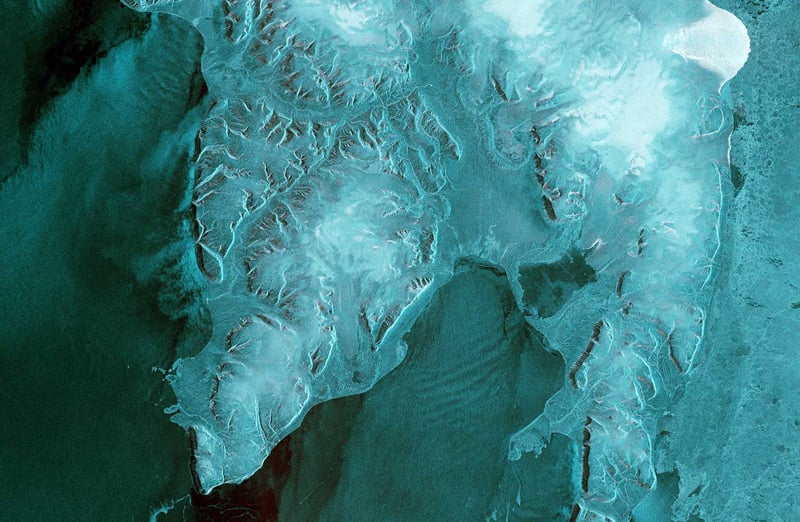

Essentially, it works like this: you feed one of the models an overhead satellite photo, and it labels the areas of the snap it recognizes. For example, the variant fine-tuned for crops can point out where there’s probably water, forests, corn fields, cotton fields, developed land, wetlands, and so on.
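To make that output a little more concrete: a segmentation model of this kind typically assigns a class to every pixel, and a label map like that can be summarized by counting pixels per class. The sketch below is illustrative only. It assumes a hypothetical class list drawn from the categories above and a label map given as rows of integer class indices; it does not reflect Prithvi's actual label scheme or any Prithvi API.

```python
from collections import Counter

# Hypothetical class indices for illustration; Prithvi's real crop/land-use
# label scheme may differ.
CLASSES = ["water", "forest", "corn", "cotton", "developed", "wetlands"]


def class_fractions(label_map):
    """Return the fraction of pixels assigned to each class in a 2D label map.

    label_map is a list of rows, each row a list of integer class indices,
    as a per-pixel segmentation model might produce.
    """
    counts = Counter(idx for row in label_map for idx in row)
    total = sum(counts.values())
    if total == 0:
        return {}
    # Classes are reported in index order; absent classes are omitted.
    return {CLASSES[idx]: n / total for idx, n in sorted(counts.items())}


if __name__ == "__main__":
    # A tiny 3x4 "tile": mostly corn, a strip of water, one developed pixel.
    tile = [
        [0, 0, 2, 2],
        [0, 2, 2, 2],
        [2, 2, 2, 4],
    ]
    for name, frac in class_fractions(tile).items():
        print(f"{name}: {frac:.0%}")
```

Run on the toy tile, this prints water at 25 percent, corn at 67 percent, and developed land at 8 percent. A real pipeline would work on much larger arrays and would likely weight pixels by their ground area.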

This collection, we imagine, would be useful for, say, automating the study of changes to land over time – such as tracking erosion from flooding, or how drought and wildfires have hit a region. Big Blue and NASA aren’t the first to do this with machine learning: there are plenty of previous efforts we could cite.
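One simple way to study change over time with label maps like these is to compare two maps of the same region taken at different dates and count which pixels switched class, for example from forest to burn scar. The sketch below assumes both maps are already co-registered onto the same pixel grid, a preprocessing step the announcement does not describe. The class list is hypothetical and is not taken from any Prithvi variant.

```python
from collections import Counter

# Hypothetical class indices for illustration only.
CLASSES = ["water", "forest", "burn_scar", "developed"]


def transitions(before, after):
    """Count pixel-level class transitions between two co-registered label maps.

    Both maps must have the same shape. Returns a Counter keyed by
    (old_class, new_class) for every pixel whose label changed.
    """
    if len(before) != len(after) or any(
        len(row_b) != len(row_a) for row_b, row_a in zip(before, after)
    ):
        raise ValueError("label maps must have identical shapes")
    changes = Counter()
    for row_before, row_after in zip(before, after):
        for old, new in zip(row_before, row_after):
            if old != new:
                changes[(CLASSES[old], CLASSES[new])] += 1
    return changes


if __name__ == "__main__":
    # Same 2x3 area before and after a (hypothetical) wildfire.
    before = [[1, 1, 1], [1, 1, 0]]
    after = [[2, 2, 1], [1, 2, 0]]
    for (old, new), n in transitions(before, after).items():
        print(f"{old} -> {new}: {n} pixels")
```

Here three forest pixels become burn scar, and the unchanged pixels are ignored. Keying on (old, new) pairs keeps the summary small even for large scenes, because only the transitions that actually occur are stored.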

A demo of the crop-classifying Prithvi model can be found here. Provide your own satellite imagery or use one of the examples at the bottom of the page. Click Submit to run the model live.

“We believe that foundation models have the potential to change the way observational data is analyzed and help us to better understand our planet,” Kevin Murphy, chief science data officer at NASA, said in a statement. “And by open sourcing such models and making them available to the world, we hope to multiply their impact.”

Developers can download the models from Hugging Face here.

There are other online demos of Prithvi, such as this one for the variant fine-tuned for bodies of water; this one for detecting wildfire scars; and this one that shows off the model’s ability to reconstruct partially photographed areas.

A foundation model is a pre-trained generalized model capable of being fine-tuned to perform specific tasks; it’s a term coined by the Stanford Institute for Human-Centered Artificial Intelligence. IBM claims Prithvi is up to 15 percent better than previous (unnamed) state-of-the-art techniques at analyzing geospatial imagery, despite relying on less than half as much labelled data.

It’s hoped this model will help people track climate change and land use, especially as the amount of satellite data collected by science probes orbiting Earth is estimated [PDF] to reach 250,000 terabytes by 2024.

IBM said it trained the model using Vela, its AI supercomputer cluster. That said, we’re also told it took Big Blue only about an hour to fine-tune the model for detecting flooding using an Nvidia V100 GPU, so you may not need huge stacks of iron to create your own variant.

A commercialized version of Prithvi, whatever that may be, is due to be made available later this year.

“AI foundation models for Earth observations present enormous potential to address intricate scientific problems and expedite the broader deployment of AI across diverse applications,” said Rahul Ramachandran, a manager and a senior research scientist at NASA’s Interagency Implementation and Advanced Concepts Team (IMPACT).

“We call on the Earth science and applications communities to evaluate this initial HLS foundation model for a variety of uses and share feedback on its merits and drawbacks,” he added. ®

- Source: https://go.theregister.com/feed/www.theregister.com/2023/08/04/ibm_nasa_geospatial_ai/