Amazon SageMaker Studio provides a fully managed solution for data scientists to interactively build, train, and deploy machine learning (ML) models. In addition to the interactive ML experience, data workers also seek solutions to run notebooks as ephemeral jobs without the need to refactor code as Python modules or learn DevOps tools and best practices to automate their deployment infrastructure. Some common use cases for doing this include:

- Regularly running model inference to generate reports

- Scaling up a feature engineering step after having tested in Studio against a subset of data on a small instance

- Retraining and deploying models on some cadence

- Analyzing your team’s Amazon SageMaker usage on a regular cadence

Previously, when data scientists wanted to take the code they built interactively in notebooks and run it as batch jobs, they faced a steep learning curve using Amazon SageMaker Pipelines, AWS Lambda, Amazon EventBridge, or other solutions that are difficult to set up, use, and manage.

With SageMaker notebook jobs, you can now run your notebooks as is or in a parameterized fashion with just a few simple clicks from the SageMaker Studio or SageMaker Studio Lab interface. You can run these notebooks on a schedule or immediately. There’s no need for the end-user to modify their existing notebook code. When the job is complete, you can view the populated notebook cells, including any visualizations!

In this post, we share how to operationalize your SageMaker Studio notebooks as scheduled notebook jobs.

Solution overview

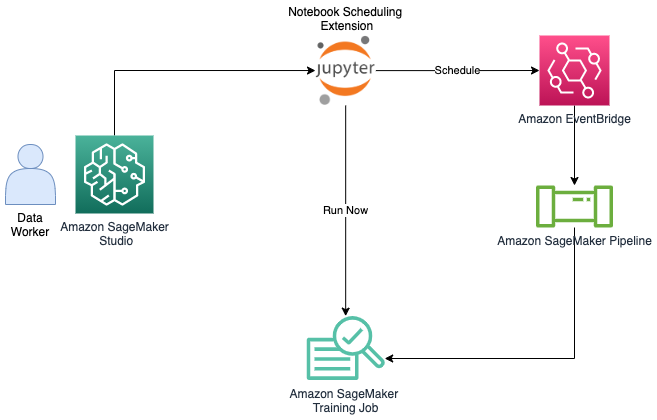

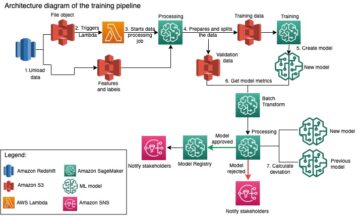

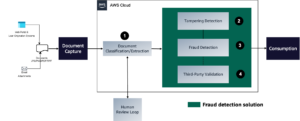

The following diagram illustrates our solution architecture. We utilize the pre-installed SageMaker extension to run notebooks as a job immediately or on a schedule.

In the following sections, we walk through the steps to create a notebook, parameterize cells, customize additional options, and schedule your job. We also include a sample use case.

Prerequisites

To use SageMaker notebook jobs, you need to be running a JupyterLab 3 JupyterServer app within Studio. For more information on how to upgrade to JupyterLab 3, refer to View and update the JupyterLab version of an app from the console. Be sure to Shut down and Update SageMaker Studio in order to pick up the latest updates.

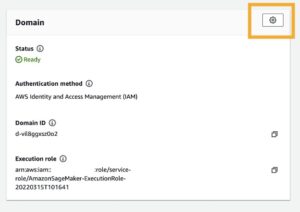

To define job definitions that run notebooks on a schedule, you may need to add additional permissions to your SageMaker execution role.

First, add a trust relationship to your SageMaker execution role that allows events.amazonaws.com to assume your role.
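The original policy document is not reproduced here. As a minimal sketch, a trust policy in the standard IAM JSON format could be built as follows. Only `events.amazonaws.com` is named in the text above; including `sagemaker.amazonaws.com` is an assumption about what an existing SageMaker execution role already trusts, and the helper name `build_trust_policy` is hypothetical.

```python
import json


def build_trust_policy(services):
    """Return an IAM trust policy letting each service principal assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service},
                "Action": "sts:AssumeRole",
            }
            for service in services
        ],
    }


# events.amazonaws.com comes from the text above; sagemaker.amazonaws.com is
# an assumption about what the execution role already trusts.
trust_policy = build_trust_policy(
    ["sagemaker.amazonaws.com", "events.amazonaws.com"]
)
print(json.dumps(trust_policy, indent=4))
```

The printed JSON is the kind of document you would review and then apply as the role's trust relationship in IAM.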

Additionally, you may need to create and attach an inline policy to your execution role. This policy is supplementary to the very permissive AmazonSageMakerFullAccess policy. For a complete and minimal set of permissions, see Install Policies and Permissions.

Create a notebook job

To operationalize your notebook as a SageMaker notebook job, choose the Create a notebook job icon.

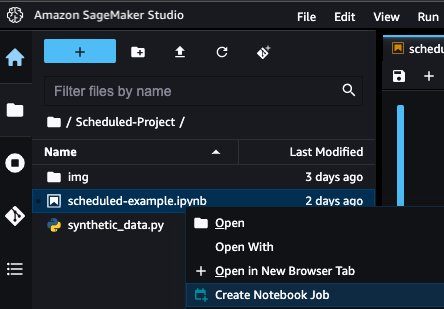

Alternatively, you can choose (right-click) your notebook on the file system and choose Create notebook job.

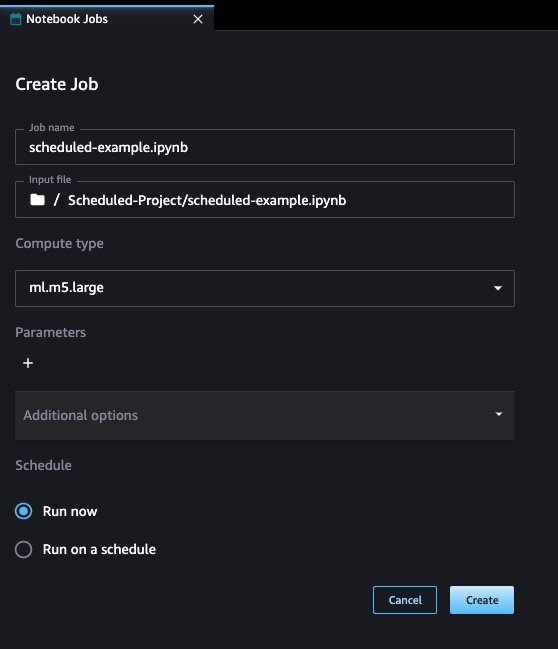

In the Create Job section, simply choose the right instance type for your scheduled job based on your workload: standard instances, compute optimized instances, or accelerated computing instances that contain GPUs. You can choose any of the instances available for SageMaker training jobs. For the complete list of instances available, refer to Amazon SageMaker Pricing.

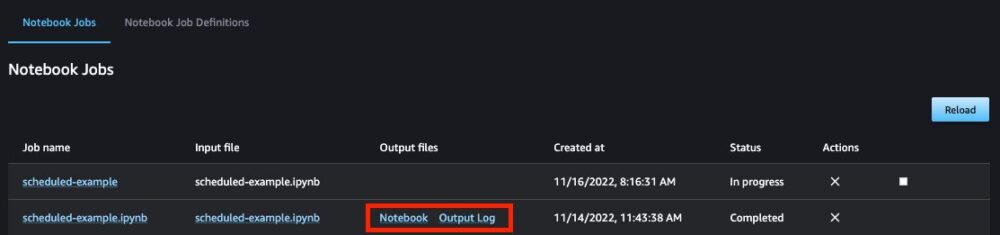

When a job is complete, you can view the output notebook file with its populated cells, as well as the underlying logs from the job runs.

Parameterize cells

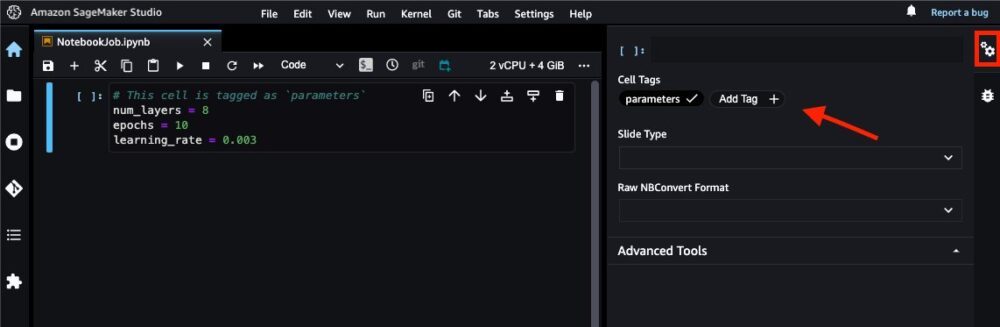

When moving a notebook to a production workflow, it’s important to be able to reuse the same notebook with different sets of parameters for modularity. For example, you may want to parameterize the dataset location or the hyperparameters of your model so that you can reuse the same notebook for many distinct model trainings. SageMaker notebook jobs support this through cell tags. Simply choose the double gear icon in the right pane and choose Add tag. Then label the tag as parameters.

By default, the notebook job run uses the parameter values specified in the notebook, but alternatively, you can modify these as a configuration for your notebook job.
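As a minimal sketch of what a cell tagged parameters might contain, the following uses hypothetical names and values (`dataset_uri`, `max_depth`, and `num_round` are illustrative and not taken from the example notebook). The second half only simulates how job-level overrides take precedence over the notebook defaults; it is not the mechanism SageMaker uses internally.

```python
# --- Contents of the cell tagged "parameters" (all names are hypothetical) ---
dataset_uri = "s3://example-bucket/churn/latest.csv"  # placeholder location
max_depth = 5
num_round = 100

# --- Illustration only: how job-level overrides relate to the defaults ---
defaults = {
    "dataset_uri": dataset_uri,
    "max_depth": max_depth,
    "num_round": num_round,
}


def resolve_parameters(defaults, overrides):
    """Return a new dict where overrides take precedence over defaults."""
    return {**defaults, **overrides}


# A job configured with one override keeps the other notebook defaults.
resolved = resolve_parameters(defaults, {"max_depth": 8})
print(resolved)
```

Keeping every tunable value in a single tagged cell makes it easy to see which values a job configuration can change.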

Configure additional options

When creating a notebook job, you can expand the Additional options section in order to customize your job definition. Studio automatically detects the image or kernel you’re using in your notebook and pre-selects it for you. Be sure to validate this selection.

You can also specify environment variables or startup scripts to customize your notebook run environment. For the full list of configurations, see Additional Options.
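Inside the notebook, an environment variable set on the job can be read with the Python standard library. The sketch below assumes a hypothetical variable named `REPORT_STAGE` and a hypothetical helper `get_setting`; it falls back to a default so that the notebook still runs interactively when the variable is not set.

```python
import os


def get_setting(name, default):
    """Read an environment variable, falling back to a default when unset or empty."""
    value = os.environ.get(name, "").strip()
    return value or default


# REPORT_STAGE is a hypothetical variable name used only for illustration.
stage = get_setting("REPORT_STAGE", "dev")
print(f"Running report for stage: {stage}")
```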

Schedule your job

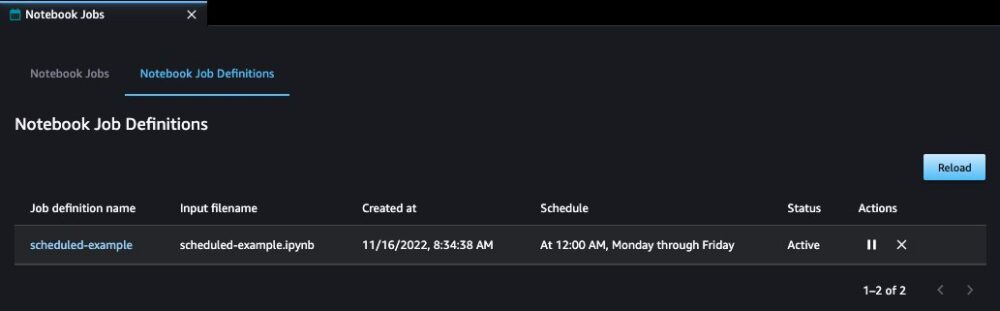

To schedule your job, choose Run on a schedule and set an appropriate interval and time. Then you can choose the Notebook Jobs tab that is visible after choosing the home icon. After the notebook is loaded, choose the Notebook Job Definitions tab to pause or remove your schedule.

Example use case

For our example, we showcase an end-to-end ML workflow that prepares data from a ground truth source, trains a refreshed model from that time period, and then runs inference on the most recent data to generate actionable insights. In practice, you might run a complete end-to-end workflow, or simply operationalize one step of your workflow. You can schedule an AWS Glue interactive session for daily data preparation, or run a batch inference job that generates graphical results directly in your output notebook.

The full notebook for this example can be found in our SageMaker Examples GitHub repository. The use case assumes that we’re a telecommunications company that is looking to schedule a notebook that predicts probable customer churn based on a model trained with the most recent data we have available.

To start, we gather the most recently available customer data and perform some preprocessing on it.

We then train our refreshed model on this updated training data in order to make accurate predictions on todays_data.

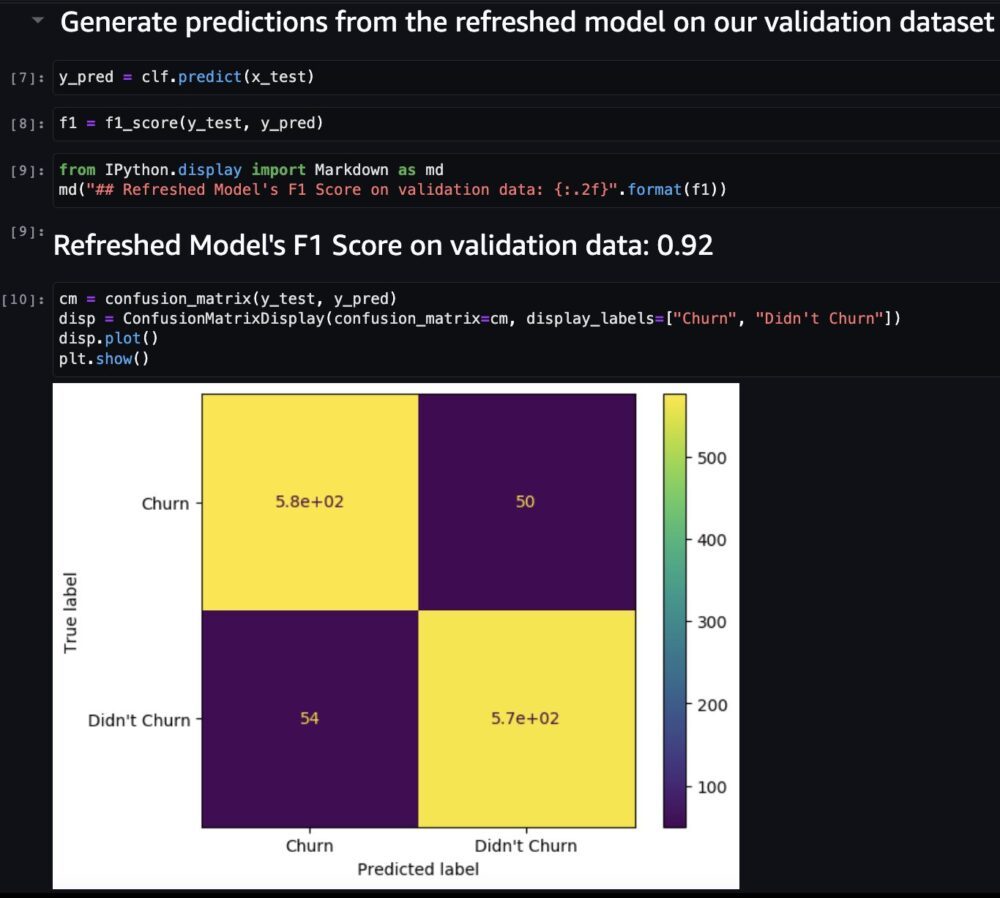

Because we’re going to schedule this notebook as a daily report, we want to capture how well our refreshed model performed on our validation set so that we can be confident in its future predictions. The results in the following screenshot are from our scheduled inference report.

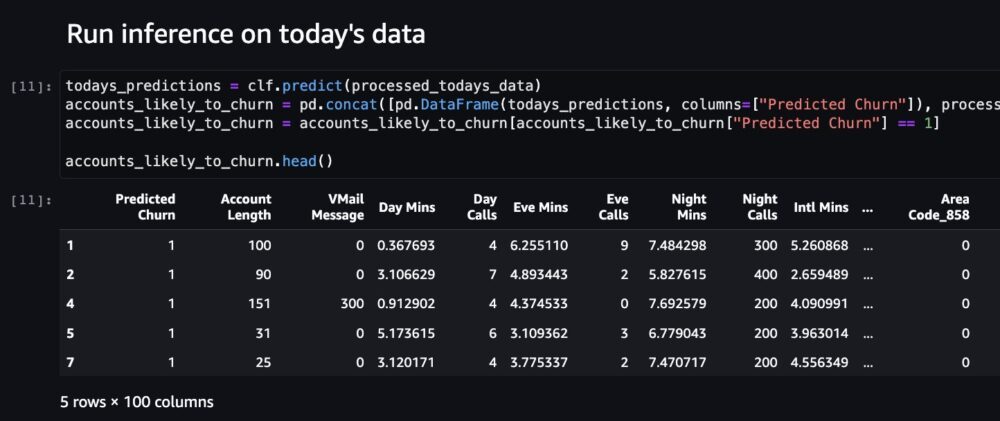
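The example notebook's evaluation code is not shown here. As a rough sketch of the kind of check such a report might include, the following computes accuracy, precision, and recall for binary churn labels in plain Python. The function name and the labels are made up for illustration and are not results from the example.

```python
def binary_classification_report(y_true, y_pred):
    """Compute accuracy, precision, and recall for 0/1 labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # churners caught
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # non-churners cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed churners
    total = len(pairs)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Illustrative labels only: 1 means the customer churned.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
report = binary_classification_report(y_true, y_pred)
print(report)
```

Printing these numbers in a notebook cell means they are preserved in the output notebook of every scheduled run.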

Lastly, you want to capture the predicted results of today’s data into a database so that actions can be taken based on the results of this model.
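The text does not say which database the example uses. As a minimal sketch, the following writes predictions to SQLite from the Python standard library. The table name, column names, and sample rows are all hypothetical, and an in-memory database stands in for a real one.

```python
import sqlite3
from datetime import date


def save_predictions(conn, run_date, predictions):
    """Insert (customer_id, churn_probability) rows for one run date."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS churn_predictions (
            run_date TEXT NOT NULL,
            customer_id TEXT NOT NULL,
            churn_probability REAL NOT NULL,
            PRIMARY KEY (run_date, customer_id)
        )
        """
    )
    # INSERT OR REPLACE means a rerun for the same date overwrites rows
    # instead of duplicating them.
    with conn:
        conn.executemany(
            "INSERT OR REPLACE INTO churn_predictions VALUES (?, ?, ?)",
            [(run_date.isoformat(), cid, prob) for cid, prob in predictions],
        )


conn = sqlite3.connect(":memory:")
sample = [("cust-001", 0.91), ("cust-002", 0.12)]  # hypothetical predictions
save_predictions(conn, date(2023, 1, 15), sample)
save_predictions(conn, date(2023, 1, 15), sample)  # rerun, no duplicates

high_risk = conn.execute(
    "SELECT customer_id FROM churn_predictions WHERE churn_probability > 0.5"
).fetchall()
print(high_risk)
```

Keying rows on the run date and customer keeps a scheduled job safe to rerun, which matters when a daily job is retried after a failure.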

Once you understand the notebook, feel free to run it as an ephemeral job using the Run now option described earlier, or test out the scheduling functionality.

Clean up

If you followed along with our example, be sure to pause or delete your notebook job’s schedule to avoid incurring ongoing charges.

Conclusion

Bringing notebooks to production with SageMaker notebook jobs vastly simplifies the undifferentiated heavy lifting required by data workers. Whether you’re scheduling end-to-end ML workflows or a piece of the puzzle, we encourage you to put some notebooks in production using SageMaker Studio or SageMaker Studio Lab! To learn more, see Notebook-based Workflows.

About the authors

Sean Morgan is a Senior ML Solutions Architect at AWS. He has experience in the semiconductor and academic research fields, and uses his experience to help customers reach their goals on AWS. In his free time, Sean is an active open-source contributor and maintainer, and is the special interest group lead for TensorFlow Addons.

Sumedha Swamy is a Principal Product Manager at Amazon Web Services. He leads the SageMaker Studio team to build it into the IDE of choice for interactive data science and data engineering workflows. He has spent the past 15 years building customer-obsessed consumer and enterprise products using machine learning. In his free time, he likes photographing the amazing geology of the American Southwest.

Edward Sun is a Senior SDE working for SageMaker Studio at Amazon Web Services. He is focused on building interactive ML solutions and simplifying the customer experience to integrate SageMaker Studio with popular technologies in the data engineering and ML ecosystem. In his spare time, Edward is a big fan of camping, hiking, and fishing, and enjoys spending time with his family.
