1IRIF, CNRS – Université Paris Cité, France

2QC Ware, Palo Alto, USA and Paris, France

3School of Informatics, University of Edinburgh, Scotland, UK

4F. Hoffmann La Roche AG


Abstract

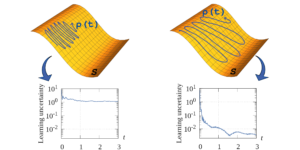

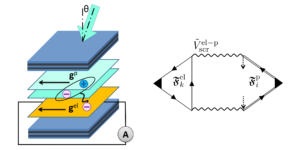

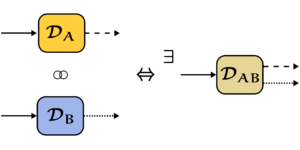

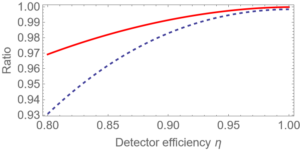

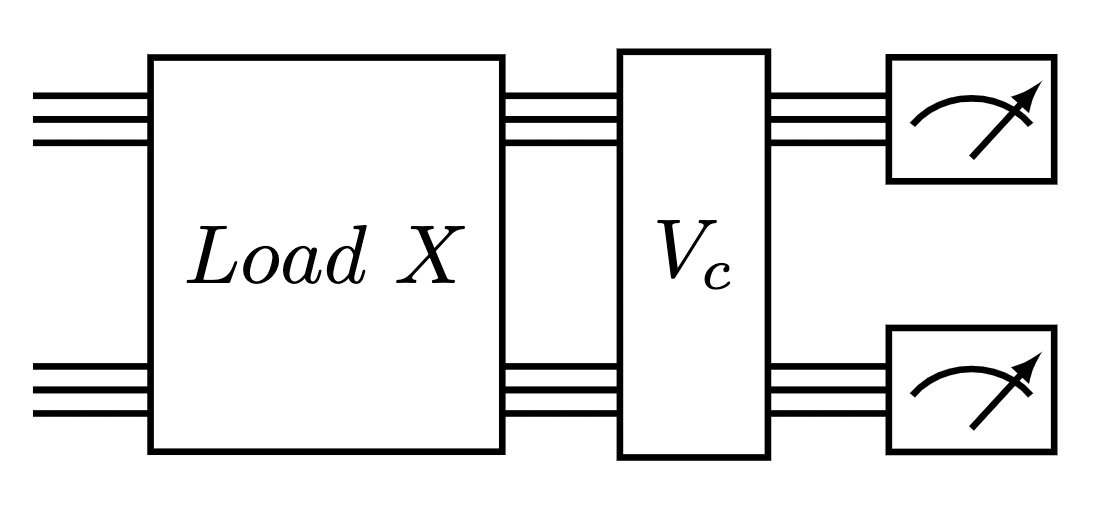

In this work, quantum transformers are designed and analysed in detail by extending state-of-the-art classical transformer neural network architectures, which are known to be very performant in natural language processing and image analysis. Building upon previous work, which uses parametrised quantum circuits for data loading and orthogonal neural layers, we introduce three types of quantum transformers for training and inference, including a quantum transformer based on compound matrices, which guarantees a theoretical advantage of the quantum attention mechanism over its classical counterpart in terms of both asymptotic run time and the number of model parameters. These quantum architectures can be built using shallow quantum circuits and produce qualitatively different classification models. The three proposed quantum attention layers span a spectrum from closely following the classical transformers to exhibiting more quantum characteristics. As building blocks of the quantum transformer, we propose a novel method for loading a matrix as quantum states, as well as two new trainable quantum orthogonal layers adaptable to different levels of connectivity and quality of quantum computers. We performed extensive simulations of the quantum transformers on standard medical image datasets, which showed competitive, and at times better, performance compared to the classical benchmarks, including the best-in-class classical vision transformers. The quantum transformers we trained on these small-scale datasets require fewer parameters than standard classical benchmarks. Finally, we implemented our quantum transformers on superconducting quantum computers and obtained encouraging results for experiments with up to six qubits.
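As a rough classical illustration of the trainable orthogonal layers mentioned in the abstract, the sketch below builds an orthogonal matrix from a sequence of planar (Givens) rotations, with one trainable angle per rotation. Quantum orthogonal layers are often described as circuits whose two-qubit gates each act as one such rotation; that correspondence is treated here as a background assumption. The function names `givens` and `orthogonal_layer`, the triangular ordering of the rotations, and the size `n = 4` are hypothetical choices for illustration and are not taken from the paper.

```python
import numpy as np


def givens(n, i, j, theta):
    """Return the n x n rotation by angle theta in the (i, j) coordinate plane."""
    g = np.eye(n)
    c, s = np.cos(theta), np.sin(theta)
    g[i, i] = c
    g[j, j] = c
    g[i, j] = s
    g[j, i] = -s
    return g


def orthogonal_layer(thetas, n):
    """Compose nearest-neighbour rotations in a triangular order.

    Consumes n * (n - 1) / 2 angles, which is the number of free
    parameters of an n x n rotation matrix.
    """
    w = np.eye(n)
    k = 0
    for depth in range(n - 1):
        for i in range(n - 1 - depth):
            # Each rotation mixes only the neighbouring coordinates i and i + 1.
            w = givens(n, i, i + 1, thetas[k]) @ w
            k += 1
    return w


n = 4  # illustrative input dimension (assumption)
rng = np.random.default_rng(0)
thetas = rng.uniform(-np.pi, np.pi, size=n * (n - 1) // 2)

W = orthogonal_layer(thetas, n)

# A unit-norm input, as would be required for loading data into a quantum state.
x = rng.normal(size=n)
x = x / np.linalg.norm(x)
y = W @ x

print(np.allclose(W @ W.T, np.eye(n)))  # checks orthogonality of the layer
print(np.linalg.norm(y))                # the norm of the input is preserved
```

Because every factor is a rotation, the product stays orthogonal for any choice of angles, so the layer can be trained by gradient descent on `thetas` without a separate re-orthogonalisation step.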

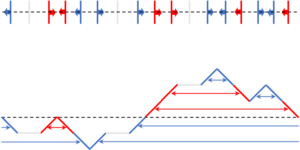

Featured image: Quantum circuit to execute one attention layer of the Compound Transformer. The circuit consists of a matrix data loader followed by an orthogonal quantum layer.
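To make the term "compound matrix" concrete, here is a minimal sketch in Python with NumPy, using the standard linear-algebra definition: the k-th compound of a matrix collects all of its k x k minors, indexed by k-element subsets of rows and columns in lexicographic order. The helper name `compound` and the 4 x 4 test matrix are assumptions for illustration only; this is not the authors' implementation, and it says nothing about how the quantum circuit itself is executed.

```python
from itertools import combinations

import numpy as np


def compound(a, k):
    """Return the k-th compound matrix of a square matrix a.

    Entry (r, s) is the determinant of the k x k submatrix of a whose
    rows are the r-th k-subset and whose columns are the s-th k-subset,
    with subsets taken in lexicographic order.
    """
    n = a.shape[0]
    subsets = list(combinations(range(n), k))
    c = np.empty((len(subsets), len(subsets)))
    for r, rows in enumerate(subsets):
        for s, cols in enumerate(subsets):
            c[r, s] = np.linalg.det(a[np.ix_(rows, cols)])
    return c


rng = np.random.default_rng(0)

# A random orthogonal matrix obtained from a QR decomposition.
q, _ = np.linalg.qr(rng.normal(size=(4, 4)))

c2 = compound(q, 2)
print(c2.shape)  # (6, 6), since there are C(4, 2) = 6 two-element subsets

# By the Cauchy-Binet formula, the compound of an orthogonal matrix is orthogonal.
print(np.allclose(c2 @ c2.T, np.eye(6)))
```

The example shows why compounds are attractive in this setting: an n x n orthogonal matrix with n(n-1)/2 parameters induces an orthogonal map on a space of dimension C(n, k), which can be much larger than n.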



Cited by

[1] David Peral García, Juan Cruz-Benito, and Francisco José García-Peñalvo, “Systematic Literature Review: Quantum Machine Learning and its applications”, arXiv:2201.04093, (2022).

[2] El Amine Cherrat, Snehal Raj, Iordanis Kerenidis, Abhishek Shekhar, Ben Wood, Jon Dee, Shouvanik Chakrabarti, Richard Chen, Dylan Herman, Shaohan Hu, Pierre Minssen, Ruslan Shaydulin, Yue Sun, Romina Yalovetzky, and Marco Pistoia, “Quantum Deep Hedging”, Quantum 7, 1191 (2023).

[3] Léo Monbroussou, Jonas Landman, Alex B. Grilo, Romain Kukla, and Elham Kashefi, “Trainability and expressivity of Hamming-weight preserving Quantum Circuits for Machine Learning”, arXiv:2309.15547, (2023).

[4] Sohum Thakkar, Skander Kazdaghli, Natansh Mathur, Iordanis Kerenidis, André J. Ferreira-Martins, and Samurai Brito, “Improved Financial Forecasting via Quantum Machine Learning”, arXiv:2306.12965, (2023).

[5] Jason Iaconis and Sonika Johri, “Tensor Network Based Efficient Quantum Data Loading of Images”, arXiv:2310.05897, (2023).

[6] Nishant Jain, Jonas Landman, Natansh Mathur, and Iordanis Kerenidis, “Quantum Fourier Networks for Solving Parametric PDEs”, arXiv:2306.15415, (2023).

[7] Daniel Mastropietro, Georgios Korpas, Vyacheslav Kungurtsev, and Jakub Marecek, “Fleming-Viot helps speed up variational quantum algorithms in the presence of barren plateaus”, arXiv:2311.18090, (2023).

[8] Aliza U. Siddiqui, Kaitlin Gili, and Chris Ballance, “Stressing Out Modern Quantum Hardware: Performance Evaluation and Execution Insights”, arXiv:2401.13793, (2024).

The above citations are from SAO/NASA ADS (last updated successfully 2024-02-22 13:37:43). The list may be incomplete as not all publishers provide suitable and complete citation data.


This paper is published in Quantum under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. Copyright remains with the original copyright holders such as the authors or their institutions.


- Source: https://quantum-journal.org/papers/q-2024-02-22-1265/
