Mixed reality (MR) can open the door to a variety of unique experiences, from productivity and real-time collaboration with colleagues to hanging out with friends and family to new types of games and interactive apps we haven’t even dreamt of yet. While VR fully immerses you in a digital environment, MR gives you the freedom to move around and interact with people and objects in your physical space while enjoying virtual content, unlocking a new class of AR-like experiences in VR that augment your physical space.

“Being able to see the physical world around you and blend it with virtual content opens up new possibilities for VR today—like co-located games you can play with friends in the same physical room or productivity experiences that combine huge virtual monitors with physical tools,” explains Meta Product Manager Sarthak Ray. “And it’s a step toward our longer-term vision for augmented reality.”

There’s a common misconception that mixed reality can be accomplished simply by showing a real-time high-resolution video feed of the physical world inside the headset, but the truth is far more complex. Believable MR experiences require the ability to convincingly blend the virtual and physical worlds—that means the headset must do more than just display your physical surroundings as a 2D video. It must actually digitally reconstruct your room as a 3D space with an understanding of the objects and surfaces around you. That takes a complex system of multiple hardware and software technologies like high-resolution sensors, Passthrough, AI-powered Scene Understanding, and Spatial Anchors, all working together in a seamless and comfortable way.

At Meta, we continually invest in original research and development to create leading VR technologies that we deliver to consumers at scale—all while putting people first in our design. We focus on the details, informed by a deep understanding of how people perceive the world around them and a drive to connect that perception to immersive experiences that excite people about the possibilities of virtual and mixed reality. Today, we’re introducing a new name for our unique mixed reality system—Meta Reality—and giving you an under-the-hood look at what goes into a truly first-class MR experience.

Stereoscopic Color Passthrough for Spatial Awareness

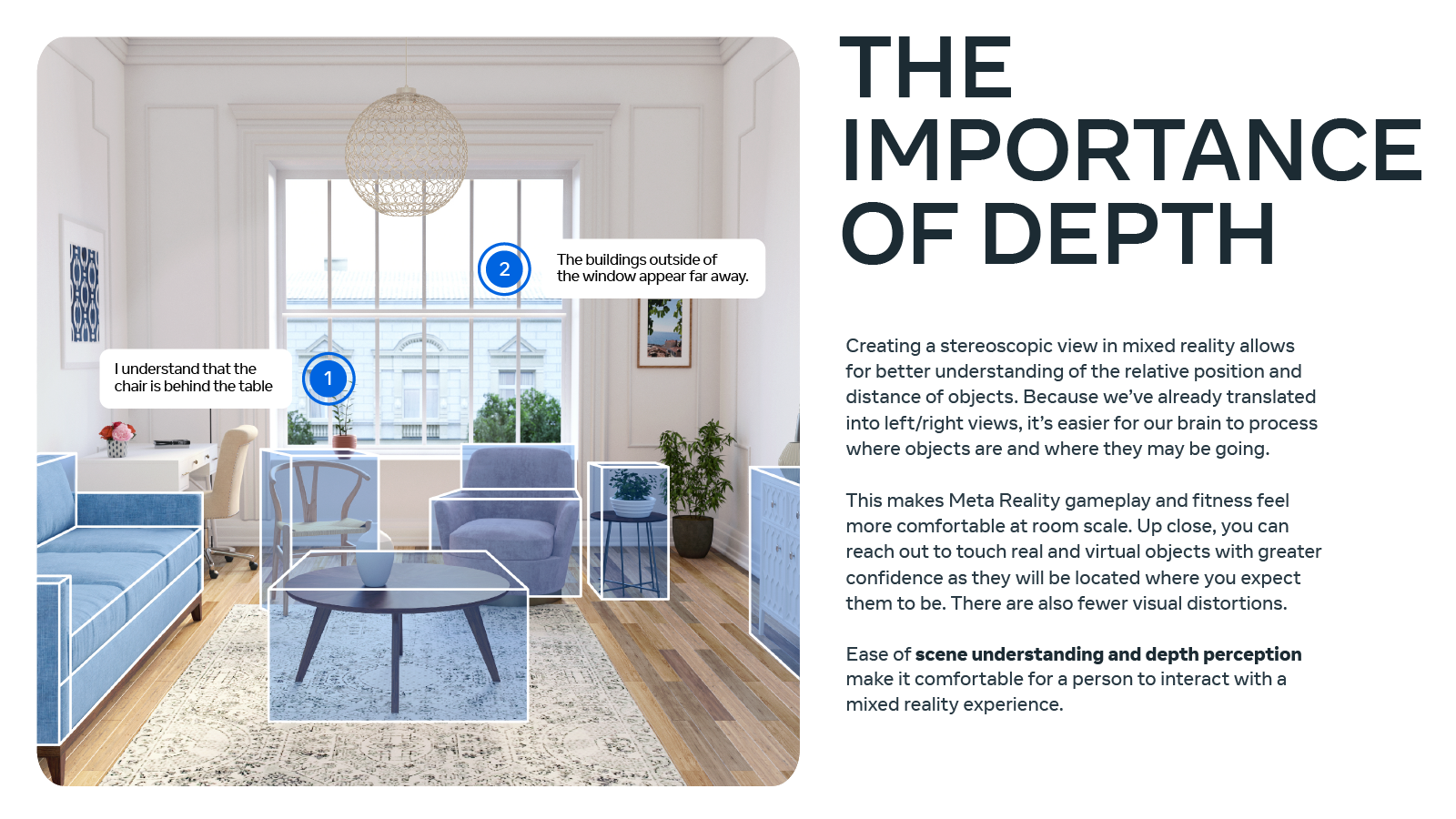

First and foremost, a true MR experience must be comfortable and feel like a natural viewing experience. A direct video feed that fails to convey a sense of depth simply can’t deliver that, even if it’s high-resolution. Additionally, if Passthrough is monoscopic (seen from a single viewing angle) or lacks depth cues, it can be uncomfortable for the viewer. For Passthrough to be comfortable, the images rendered to each eye’s buffer need to be generated from two separate angles, providing stereoscopic cues. These cues, together with depth and color information, are important for reconstructing a comfortable experience.

To meet this challenge, our stereoscopic color Passthrough solution uses a custom hardware architecture, advanced computer vision algorithms, and state-of-the-art machine learning that model how your eyes naturally see the world around you. Better camera and display resolution make for improved image quality, with Meta Quest Pro’s cameras capturing four times the pixels of Meta Quest 2’s cameras. But perhaps more importantly, its image-based rendering, stereo geometry reconstruction, and depth understanding establish the location of objects in relation to the scene within a given room. This novel algorithm stack enables Meta Quest Pro to identify aspects of room geometry and pinpoint the location of objects in 3D space. So when you reach for the coffee cup on your desk while viewing it with stereoscopic color Passthrough, your brain understands that the cup is farther away from your hand than the pen sitting right next to you.

“Meta Quest Pro combines two camera views to reconstruct realistic depth, which ensures mixed reality experiences built using color Passthrough are comfortable for people,” explains Meta Computer Vision Engineering Manager Ricardo Silveira Cabral. “But also, stereo texture cues allow the user’s brain to do the rest and infer depth even when depth reconstruction isn’t perfect or is beyond the range of the system.”
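To make the geometry behind two-view depth concrete, here is a minimal Python sketch of textbook stereo triangulation for two rectified, parallel cameras, where depth equals focal length times baseline divided by disparity. It is not Meta’s algorithm stack: the function name and the camera numbers are illustrative assumptions, not Meta Quest Pro specifications.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in metres of a point seen by two rectified, parallel cameras.

    disparity_px is the horizontal shift of the same point between the
    left and right images. Nearby objects shift more than distant ones.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_length_px * baseline_m / disparity_px


# Illustrative values only (assumptions, not device specifications).
FOCAL_PX = 500.0    # focal length expressed in pixels
BASELINE_M = 0.1    # distance between the two cameras in metres

pen_depth = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity_px=100.0)
cup_depth = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity_px=62.5)

# The cup has the smaller disparity, so it is reconstructed as farther away.
print(f"pen: {pen_depth:.2f} m, cup: {cup_depth:.2f} m")
```

Because depth is inversely proportional to disparity, a fixed one-pixel matching error matters more for distant objects, which is one reason any stereo depth system has a limited useful range.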

Remember Oculus Insight, our technology that enabled the first standalone full-featured “inside-out” tracking system for a consumer VR device? We’ve upgraded its depth sensing capabilities to produce a dense 3D representation of a given space in real time. While Insight uses roughly 100 interest points in a room to pinpoint the headset’s position in space, our depth sensing solution on Meta Quest Pro produces up to 10,000 points per frame up to five meters away under a variety of natural and artificial lighting conditions. We use this information to create a 3D mesh of the physical world, combining the output across a few frames to generate a dense, temporally stable 3D representation of the space. This mesh is then used with predictive rendering to produce images of the physical world: the reconstructions are individually warped to the headset’s left and right eye views using our Asynchronous TimeWarp technology, which predicts the user’s eye positions a few milliseconds in the future to compensate for rendering latency.
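The idea of predicting eye positions a few milliseconds ahead can be illustrated with a deliberately simple Python sketch. It assumes constant-velocity extrapolation from the last two tracked head positions and an unrotated head with a fixed interpupillary distance. The prediction model actually used by Asynchronous TimeWarp is not described in this article, and every name below is hypothetical.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class HeadSample:
    time_s: float      # timestamp of the tracking sample
    position_m: Vec3   # head position in metres


def predict_position(prev: HeadSample, curr: HeadSample, lookahead_s: float) -> Vec3:
    """Extrapolate the head position, assuming velocity stays constant."""
    dt = curr.time_s - prev.time_s
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")
    velocity = tuple((c - p) / dt for p, c in zip(prev.position_m, curr.position_m))
    return tuple(c + v * lookahead_s for c, v in zip(curr.position_m, velocity))


def eye_positions(head: Vec3, ipd_m: float) -> tuple[Vec3, Vec3]:
    """Left and right eye positions for an unrotated head (simplifying assumption)."""
    x, y, z = head
    half = ipd_m / 2.0
    return (x - half, y, z), (x + half, y, z)


# Head moving along +x at 1 m/s, sampled 10 ms apart (illustrative values).
prev = HeadSample(0.000, (0.00, 1.6, 0.0))
curr = HeadSample(0.010, (0.01, 1.6, 0.0))

predicted_head = predict_position(prev, curr, lookahead_s=0.005)
left_eye, right_eye = eye_positions(predicted_head, ipd_m=0.064)
```

Each eye’s image would then be warped to its own predicted viewpoint, which is why the left and right views are handled individually.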

Finally, adding color makes the entire experience look and feel more realistic. “The color camera is used to colorize the stereoscopic view to bring the experience as close to the physical world as possible,” says Silveira Cabral. “With this architecture plus optimizations done across the entire pipeline, we can provide stereoscopic color Passthrough on Meta Quest Pro for all existing Meta Quest 2 titles and upcoming MR apps as the default experience with no additional computational resources. That means that existing apps using Passthrough on Meta Quest 2 will work with Meta Quest Pro’s new stereoscopic color Passthrough technology right out of the box.”

When you add it all up, Meta Quest Pro’s stereoscopic color Passthrough results in a more comfortable experience with depth perception and fewer visual distortions than monoscopic solutions, unlocking quality room-scale mixed reality experiences.

Scene Understanding for Blending Virtual Content Into the Physical World

Introduced as part of Presence Platform during Connect 2021, Scene Understanding lets developers quickly build complex and scene-aware MR experiences that enable rich interactions with the user’s environment.

“To minimize complexity and allow developers to focus on building their experiences and business, we introduced Scene Understanding as a system solution,” notes Meta Product Manager Wei Lyu.

- Scene Model is a single, comprehensive, up-to-date, system-managed representation of the environment consisting of geometric and semantic information. The fundamental elements of a Scene Model are Anchors, and each Anchor can have various components attached to it. For example, a user’s living room is organized around individual Anchors with semantic labels, such as the floor, ceiling, walls, desk, and couch. Each Anchor also carries a simple geometric representation: a 2D boundary or a 3D bounding box.

- Scene Capture is a system-guided flow that lets users walk around and capture their room architecture and furniture to generate a Scene Model. In the future, our goal is to deliver an automated version of Scene Capture that doesn’t require people to manually capture their surroundings.

- Scene API is the interface through which apps query and access the spatial information in the Scene Model for use cases such as content placement, physics, and navigation. With Scene API, developers can use the Scene Model to have a virtual ball bounce off physical surfaces in the actual room or to let a virtual robot scale the physical walls.
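As a rough mental model of the three pieces above, the following Python sketch represents a Scene Model as a list of labeled Anchors, each carrying either a 2D boundary or a 3D bounding box, and shows an app querying it to place content. All class, field, and method names are hypothetical and chosen for illustration. They are not the real Scene API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Boundary2D:
    width_m: float
    depth_m: float


@dataclass(frozen=True)
class Box3D:
    center: tuple[float, float, float]   # metres, y is up
    size: tuple[float, float, float]     # full extents in metres

    def top_y(self) -> float:
        """Height of the box's top face, e.g. a desk surface."""
        return self.center[1] + self.size[1] / 2.0


@dataclass(frozen=True)
class SceneAnchor:
    uuid: str
    label: str                                 # semantic label, e.g. "desk"
    boundary_2d: Optional[Boundary2D] = None   # for planar elements
    bounding_box_3d: Optional[Box3D] = None    # for volumetric elements


@dataclass
class SceneModel:
    anchors: list[SceneAnchor] = field(default_factory=list)

    def with_label(self, label: str) -> list[SceneAnchor]:
        """Query step: return every anchor carrying the given semantic label."""
        return [a for a in self.anchors if a.label == label]


# A tiny hand-written room standing in for the output of Scene Capture.
room = SceneModel([
    SceneAnchor("a1", "floor", boundary_2d=Boundary2D(4.0, 5.0)),
    SceneAnchor("a2", "desk",
                bounding_box_3d=Box3D(center=(1.0, 0.375, 2.0), size=(1.2, 0.75, 0.6))),
    SceneAnchor("a3", "couch",
                bounding_box_3d=Box3D(center=(3.0, 0.4, 1.0), size=(2.0, 0.8, 0.9))),
])

# Content placement: put a virtual object on top of the physical desk.
desk = room.with_label("desk")[0]
placement_height = desk.bounding_box_3d.top_y()
```

Keeping the geometry this simple (a boundary or a box per Anchor) is what lets an app reason about the room without processing raw sensor data itself.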

“Scene Understanding lowers friction for developers, letting them build MR experiences that are as believable and immersive as possible with real-time occlusion and collision effects,” says Ray.
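A collision effect such as the bouncing ball mentioned earlier reduces to reflecting the ball’s velocity about the normal of the physical surface it hits. The Python sketch below shows that standard reflection with a restitution factor. It assumes the surface normal is a unit vector supplied by the app (for example, derived from a floor or wall Anchor), and the function name is hypothetical.

```python
Vec3 = tuple[float, float, float]


def bounce(velocity: Vec3, surface_normal: Vec3, restitution: float = 0.8) -> Vec3:
    """Reflect a velocity off a surface with the given unit normal.

    restitution is 1.0 for a perfectly elastic bounce and 0.0 for no bounce.
    """
    # Component of the velocity along the normal; negative means approaching.
    approach = sum(v * n for v, n in zip(velocity, surface_normal))
    if approach >= 0:
        return velocity  # moving away from or along the surface: no collision
    k = (1.0 + restitution) * approach
    return tuple(v - k * n for v, n in zip(velocity, surface_normal))


floor_normal = (0.0, 1.0, 0.0)   # floor facing up
falling = (1.0, -2.0, 0.0)       # ball moving forward and down, in m/s

after = bounce(falling, floor_normal, restitution=0.5)
```

Only the component along the normal changes, so the ball keeps its forward motion while its downward speed is reversed and damped.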

Spatial Anchors for Virtual Object Placement

“If stereoscopic color Passthrough and Scene Understanding do the heavy lifting to let MR experiences blend the physical and virtual world, our anchoring capabilities provide the connective tissue that holds it all together,” says Meta Product Manager Laura Onu.

Spatial Anchors are a core capability to help developers get started building first-class mixed reality experiences. They enable an app to create a frame of reference or a pin in space that allows a virtual object to persist in space over time. Thanks to Spatial Anchors, a product designer can anchor several 3D schematics in place in Gravity Sketch, or a group of friends might anchor the game world in Demeo to their table surface so they can return to the same setup and continue their game the following day.

Spatial Anchors can be used in combination with Scene Model Anchors (walls, a table, the floor, etc.) to create rich experiences and automatic placement at room-scale. The user’s room becomes a spatial rendering canvas for both gameplay and productivity. A developer might choose to anchor a virtual door to a physical wall. The user can then open it to reveal a fully immersive virtual world, complete with its own characters that can then enter the physical space.
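One way to picture how an anchor lets content persist is that the app stores each object’s position relative to the anchor, not relative to the world origin, which can differ from session to session. The Python sketch below assumes a simplified 2D pose on the floor plane (x, z, and yaw) and a plain JSON record. The record layout, names, and pose values are all hypothetical and do not reflect the real Spatial Anchors API.

```python
import json
import math
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float
    z: float
    yaw_rad: float   # rotation about the vertical axis


def to_world(anchor: Pose2D, local_x: float, local_z: float) -> tuple[float, float]:
    """Convert an anchor-relative offset into world coordinates."""
    c, s = math.cos(anchor.yaw_rad), math.sin(anchor.yaw_rad)
    return (anchor.x + c * local_x - s * local_z,
            anchor.z + s * local_x + c * local_z)


# Session 1: create an anchor on the table and remember where the board sits
# relative to it. Only the anchor id and the offset are saved.
anchor_id = str(uuid.uuid4())
saved = json.dumps({"anchor_id": anchor_id,
                    "content": "game_board",
                    "local_offset": [0.2, 0.0]})

# Session 2: the runtime is assumed to relocalize the anchor and report its
# pose in this session's world frame (values here are made up).
record = json.loads(saved)
relocalized = {record["anchor_id"]: Pose2D(x=1.0, z=2.0, yaw_rad=math.pi / 2)}

anchor_pose = relocalized[record["anchor_id"]]
board_world = to_world(anchor_pose, *record["local_offset"])
```

Because the offset is expressed in the anchor’s frame, the board lands in the same physical spot even though the world coordinates changed between sessions.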

“By combining Scene Understanding with Spatial Anchors, you can blend and adapt your MR experiences to the user’s environment to create a new world full of possibilities,” notes Onu. “You can become a secret agent in your own living room, place virtual furniture in your room or sketch an extension on your home, create physics games, and more.”

Shared Spatial Anchors for Co-Located Experiences

Last but not least, we’ve added Shared Spatial Anchors to Presence Platform. Shared Spatial Anchors allow anchors created by one person to be shared with others in the same physical space. This lets developers build local multiplayer experiences by creating a shared world-locked frame of reference for multiple users. For example, two or more people can sit at the same physical table and play a virtual board game on top of it.
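The “shared frame of reference” can be sketched as follows: each headset tracks in its own coordinate system, but both can locate the same shared anchor, so a point can be converted from one headset’s frame to the other’s by passing through the anchor. This Python sketch assumes simplified 2D poses on the floor plane, and all names and values are hypothetical. It is not the Shared Spatial Anchors API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float
    z: float
    yaw_rad: float   # rotation about the vertical axis


def frame_to_anchor(anchor: Pose2D, px: float, pz: float) -> tuple[float, float]:
    """Express a point from a device frame in the anchor's local frame."""
    dx, dz = px - anchor.x, pz - anchor.z
    c, s = math.cos(anchor.yaw_rad), math.sin(anchor.yaw_rad)
    return (c * dx + s * dz, -s * dx + c * dz)   # inverse rotation


def anchor_to_frame(anchor: Pose2D, lx: float, lz: float) -> tuple[float, float]:
    """Express an anchor-local point in a device frame."""
    c, s = math.cos(anchor.yaw_rad), math.sin(anchor.yaw_rad)
    return (anchor.x + c * lx - s * lz, anchor.z + s * lx + c * lz)


def a_to_b(anchor_in_a: Pose2D, anchor_in_b: Pose2D,
           point_a: tuple[float, float]) -> tuple[float, float]:
    """Convert a point from device A's frame to device B's frame via the anchor."""
    local = frame_to_anchor(anchor_in_a, *point_a)
    return anchor_to_frame(anchor_in_b, *local)


# The same physical anchor as seen by two headsets (made-up poses).
anchor_in_a = Pose2D(x=1.0, z=1.0, yaw_rad=0.0)
anchor_in_b = Pose2D(x=0.0, z=0.0, yaw_rad=math.pi / 2)

# A game piece that player A placed one metre along the anchor's x-axis.
piece_in_a = (2.0, 1.0)
piece_in_b = a_to_b(anchor_in_a, anchor_in_b, piece_in_a)
```

Both players now agree on where the piece sits on the physical table, even though their headsets report different coordinates for it.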

A Winning Combination for Mixed Reality

You need stereoscopic color Passthrough, Scene Understanding, Spatial Anchors, and Shared Spatial Anchors, along with real-time occlusion and collision, object detection, and lighting information, all combined in one package to deliver true, meaningful MR experiences. And it all needs to come together with constraints like performance cost, compute, and thermals in mind. But above all, ensuring a comfortable experience is key.

“As with most things in tech, there are trade-offs involved,” acknowledges Meta Product Manager Avinav Pashine. “While our current solution isn’t perfect, it preserves compute while maximizing user comfort—and we’re able to provide better depth and breadth of developer tools because of it.”

This is just the beginning. Meta Reality will continue to evolve through software updates—and with the hardware advances delivered in future Meta Quest products.

“Meta Quest Pro is the first of many MR devices that explore the exciting potential of this emerging technology,” adds Silveira Cabral. “We want to learn together with developers as they build compelling experiences that redefine what’s possible with a VR headset. This story’s not complete yet—this is just page one.”
